Julian Theis

Process Mining Model to Predict Mortality in Paralytic Ileus Patients

Aug 03, 2021

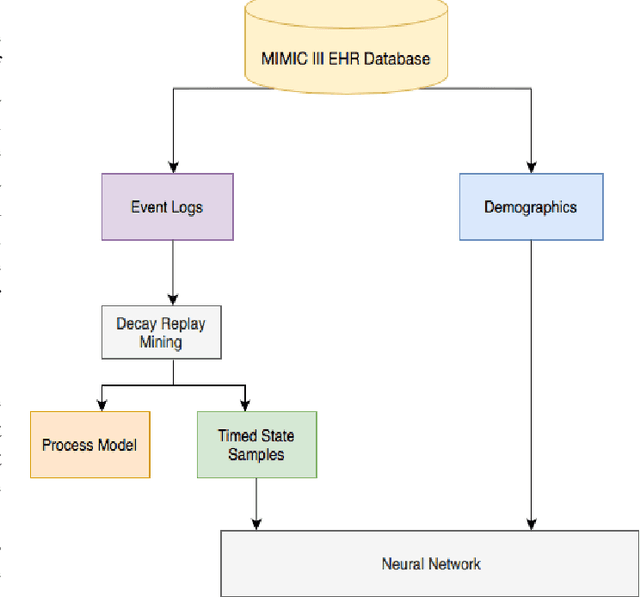

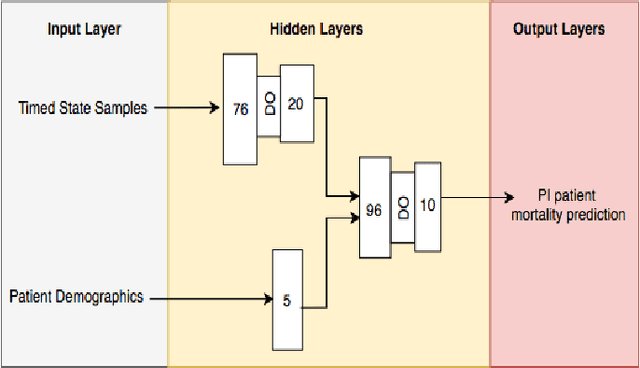

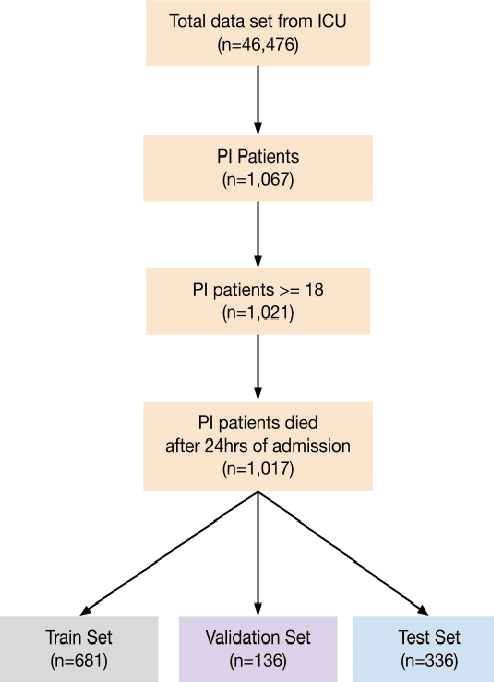

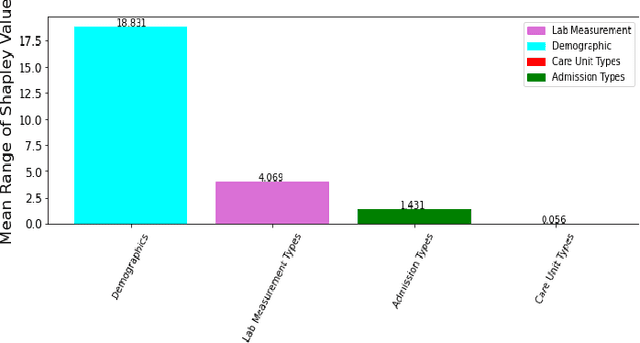

Abstract: Paralytic Ileus (PI) patients are at high risk of death when admitted to the Intensive Care Unit (ICU), with mortality as high as 40%. There is minimal research concerning PI patient mortality prediction, and there is a need for more accurate prediction modeling for ICU patients diagnosed with PI. This paper demonstrates performance improvements in predicting the mortality of ICU patients diagnosed with PI 24 hours after admission. The proposed framework, PMPI (Process Mining Model to predict mortality of PI patients), is a modification of a framework previously used to predict in-hospital mortality of ICU patients with diabetes. PMPI achieves an Area Under the ROC Curve (AUC) of 0.82, which is comparable to or better than the best results in the existing literature. PMPI uses patient medical history, the timing of the related events, and demographic information for prediction. The PMPI prediction framework has the potential to help medical teams make better treatment and care decisions for ICU patients with PI and thereby increase their life expectancy.
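For readers unfamiliar with the AUC metric reported in the abstract above, the following is a minimal sketch of how an AUC can be computed from predicted risk scores. It is not the PMPI evaluation code: the function name `roc_auc` and the toy labels and scores are assumptions made purely for illustration. The AUC equals the probability that a randomly chosen positive case (here, a death) receives a higher score than a randomly chosen negative case, with ties counted as one half.

```python
def roc_auc(labels, scores):
    """Pairwise (Mann-Whitney) estimate of the area under the ROC curve.

    labels: iterable of 0/1 outcomes (1 = positive class).
    scores: iterable of predicted risk scores, same length as labels.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))


# Toy, made-up data for illustration only (not from the paper).
toy_labels = [1, 0, 1, 0, 0]
toy_scores = [0.9, 0.3, 0.4, 0.5, 0.1]
toy_auc = roc_auc(toy_labels, toy_scores)
```

The quadratic pairwise loop is chosen for readability; a rank-based implementation would be preferable for large cohorts.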

Masking Neural Networks Using Reachability Graphs to Predict Process Events

Aug 01, 2021

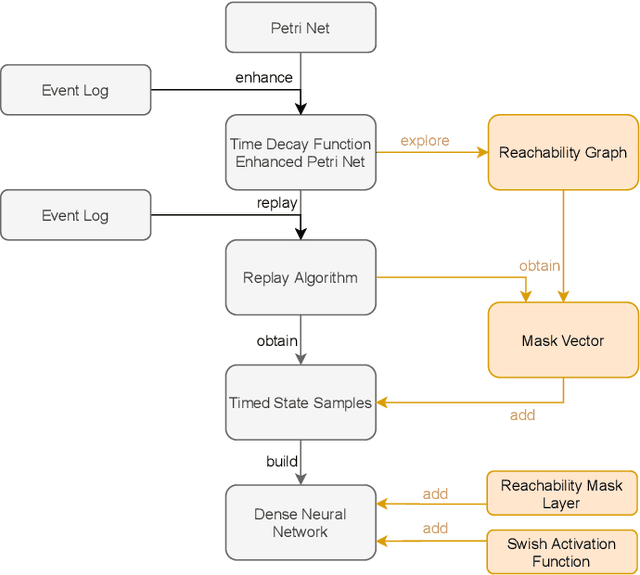

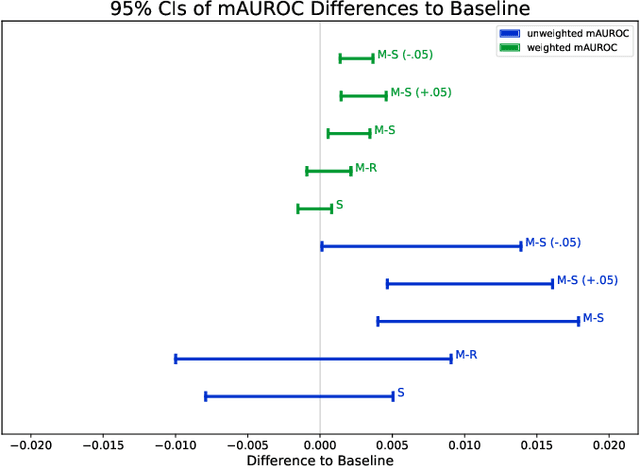

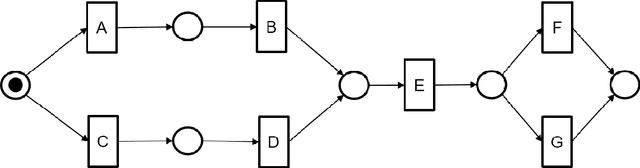

Abstract: Decay Replay Mining is a deep learning method that utilizes process model notations to predict the next event. However, this method does not fully intertwine the neural network with the structure of the process model. This paper proposes an approach to further interlock the process model of Decay Replay Mining with its neural network for next event prediction. The approach uses a masking layer that is initialized based on the reachability graph of the process model. Additionally, modifications to the neural network architecture are proposed to increase the predictive performance. Experimental results demonstrate the value of the approach and underscore the importance of discovering precise and generalized process models.
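The general idea of masking predictions with a reachability graph can be sketched as follows. This is a simplified illustration, not the architecture proposed in the paper: it applies a fixed binary mask to output scores, whereas the abstract describes a masking layer that is only initialized from the reachability graph. The graph `REACHABILITY`, the activity list `ACTIVITIES`, and the helper names are hypothetical.

```python
import math

# Hypothetical activity alphabet and reachability graph:
# state -> {enabled activity: successor state}.
ACTIVITIES = ["a", "b", "c", "d"]
REACHABILITY = {
    "s0": {"a": "s1"},
    "s1": {"b": "s2", "c": "s2"},
    "s2": {"d": "s3"},
    "s3": {},
}


def mask_for(state):
    """Binary mask with 1 for every activity enabled in the given state."""
    enabled = REACHABILITY[state]
    return [1 if activity in enabled else 0 for activity in ACTIVITIES]


def masked_softmax(logits, mask):
    """Softmax restricted to entries where mask is 1; others get probability 0."""
    if not any(mask):
        raise ValueError("mask disables every activity")
    # Subtract the largest enabled logit for numerical stability.
    peak = max(l for l, m in zip(logits, mask) if m)
    exps = [math.exp(l - peak) if m else 0.0 for l, m in zip(logits, mask)]
    total = sum(exps)
    return [e / total for e in exps]


# In state "s1" only "b" and "c" are enabled, so "a" and "d" are ruled out
# even though "d" has the highest raw score.
probs = masked_softmax([2.0, 1.0, 1.0, 3.0], mask_for("s1"))
```

A mask like this only helps if the process model is precise enough to exclude impossible events yet general enough to allow valid unseen ones, which is consistent with the abstract's emphasis on model quality.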

On the Performance Analysis of the Adversarial System Variant Approximation Method to Quantify Process Model Generalization

Jul 13, 2021

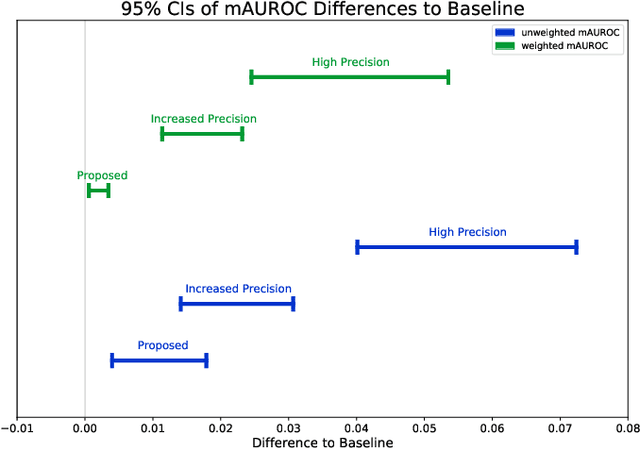

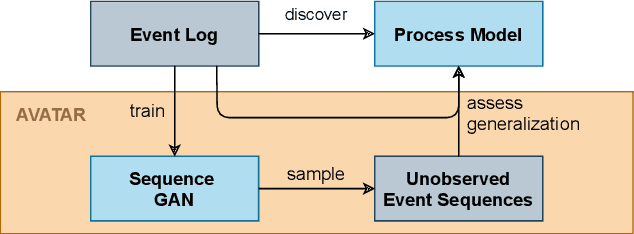

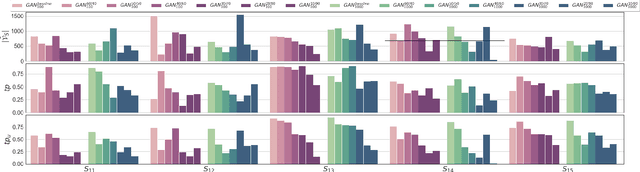

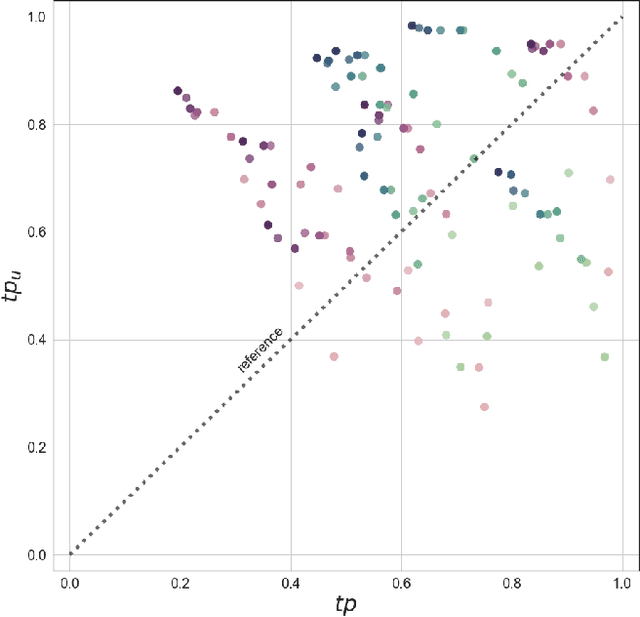

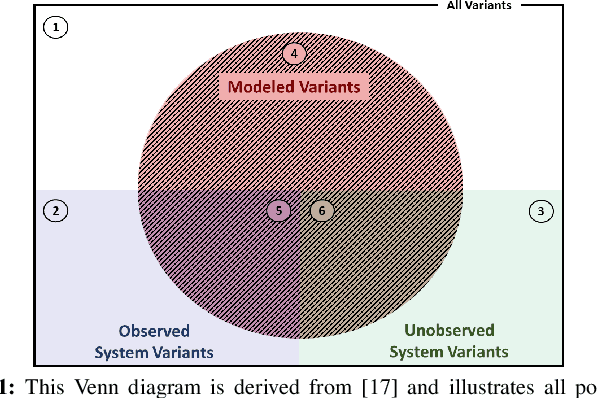

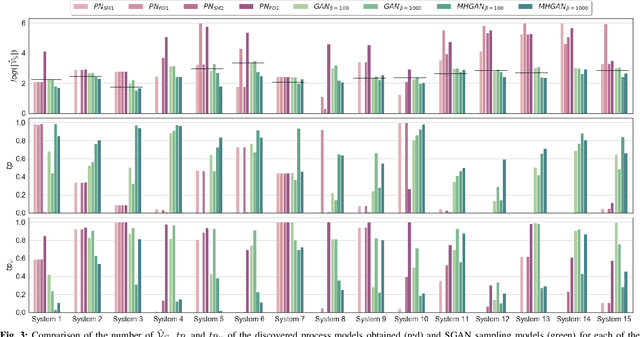

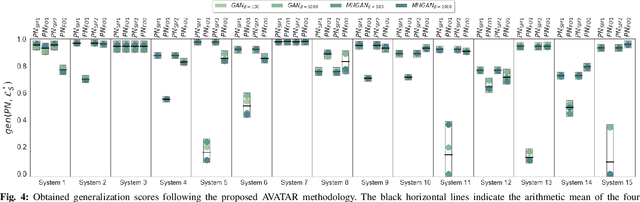

Abstract: Process mining algorithms discover a process model from an event log. The resulting process model is supposed to describe all possible event sequences of the underlying system. Generalization is a process model quality dimension of interest. A generalization metric should quantify the extent to which a process model represents the observed event sequences contained in the event log and the unobserved event sequences of the system. Most of the available metrics in the literature cannot properly quantify the generalization of a process model. A recently published method [1] called Adversarial System Variant Approximation leverages Generative Adversarial Networks to approximate the underlying event sequence distribution of a system from an event log. While this method demonstrated performance gains over existing methods in measuring the generalization of process models, its experimental evaluations have been performed under ideal conditions. This paper experimentally investigates the performance of Adversarial System Variant Approximation under non-ideal conditions such as biased and limited event logs. Moreover, experiments are performed to investigate the effect of the originally proposed sampling hyperparameter value on the method's ability to measure generalization. The results confirm the need to raise awareness about the working conditions of the Adversarial System Variant Approximation method. The outcomes of this paper also serve to initiate future research directions.

[1] Theis, Julian, and Houshang Darabi. "Adversarial System Variant Approximation to Quantify Process Model Generalization." IEEE Access 8 (2020): 194410-194427.

Adversarial System Variant Approximation to Quantify Process Model Generalization

Mar 26, 2020

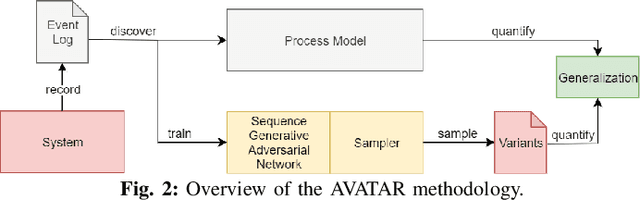

Abstract: In process mining, process models are extracted from event logs using process discovery algorithms and are commonly assessed using multiple quality dimensions. While the metrics that measure the relationship of an extracted process model to its event log are well-studied, quantifying the degree to which a process model can describe the unobserved behavior of its underlying system has received little attention in the literature. In this paper, a novel deep learning-based methodology called Adversarial System Variant Approximation (AVATAR) is proposed to overcome this issue. Sequence Generative Adversarial Networks are trained on the variants contained in an event log with the intention of approximating the underlying variant distribution of the system behavior. Unobserved realistic variants are sampled either directly from the Sequence Generative Adversarial Network or by leveraging the Metropolis-Hastings algorithm. The degree to which a process model relates to its underlying unknown system behavior is then quantified based on the realistic observed and estimated unobserved variants using established process model quality metrics. Significant performance improvements in revealing realistic unobserved variants are demonstrated in a controlled experiment on 15 ground truth systems. Additionally, the proposed methodology is experimentally tested and evaluated to quantify the generalization of 60 discovered process models with respect to their systems.

DREAM-NAP: Decay Replay Mining to Predict Next Process Activities

Mar 22, 2019

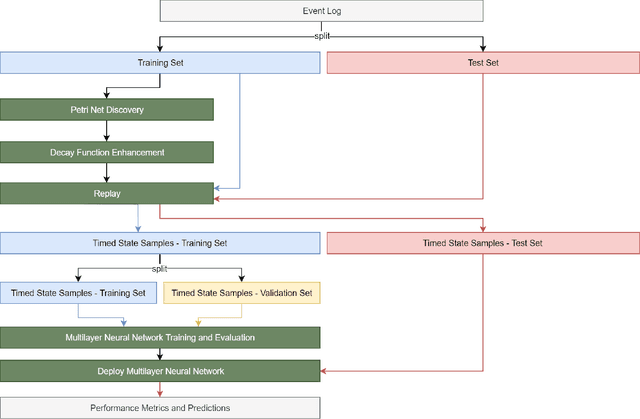

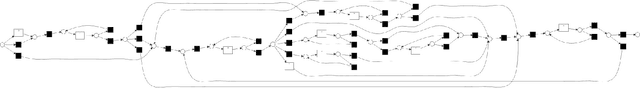

Abstract: In complex processes, various events can happen in different sequences. Predicting the next event activity given an a-priori process state is important in such processes. Recent methods leverage deep learning techniques such as recurrent neural networks to predict event activities from raw process logs. However, deep learning techniques cannot efficiently model the logical behaviors of complex processes. In this paper, we take advantage of Petri nets as a powerful tool for modeling the logical behaviors of complex processes. We propose an approach which first discovers Petri nets from event logs utilizing a recent process mining algorithm. In a second step, we enhance the obtained model with time decay functions to create timed process state samples. Finally, we use these samples in combination with token movement counters and Petri net markings to train a deep learning model that predicts the next event activity. We demonstrate significant performance improvements and outperform the state-of-the-art methods on eight out of nine real-world benchmark event logs in accuracy.
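The notion of a timed process state built from decay functions can be illustrated with a small sketch. The abstract does not specify the form of the decay function, so the linear form below, the parameters `alpha` and `beta`, and the names `linear_decay` and `timed_state` are all assumptions for illustration rather than the paper's definition. Each Petri net place is assigned a value that starts at `beta` when a token last entered it and decreases linearly with elapsed time, floored at zero.

```python
def linear_decay(elapsed, alpha, beta=1.0):
    """Assumed linear decay: beta at elapsed == 0, falling by alpha per time unit, never below 0."""
    return max(beta - alpha * elapsed, 0.0)


def timed_state(last_token_time, now, alpha, beta=1.0):
    """Map each place to its decayed activation at time `now`.

    last_token_time: dict place -> time a token last entered the place,
                     or None if the place has never held a token.
    """
    state = {}
    for place, entered in last_token_time.items():
        if entered is None:
            state[place] = 0.0  # never activated
        else:
            state[place] = linear_decay(now - entered, alpha, beta)
    return state


# Hypothetical places and timestamps, for illustration only.
example = timed_state({"p1": 0.0, "p2": 8.0, "p3": None}, now=10.0, alpha=0.1)
```

In this toy example, `p2` was marked recently and keeps most of its activation, `p1` has fully decayed, and `p3` was never marked. A vector of such values could then be combined with token counters and the current marking as input features for a classifier, along the lines the abstract describes.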

Behavioral Petri Net Mining and Automated Analysis for Human-Computer Interaction Recommendations in Multi-Application Environments

Feb 26, 2019

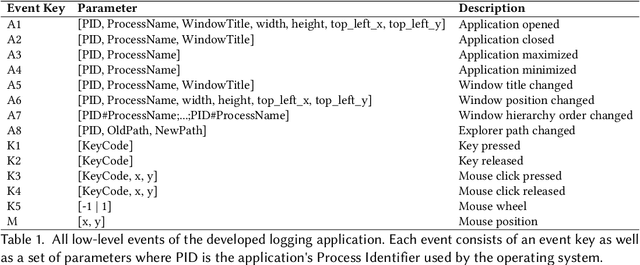

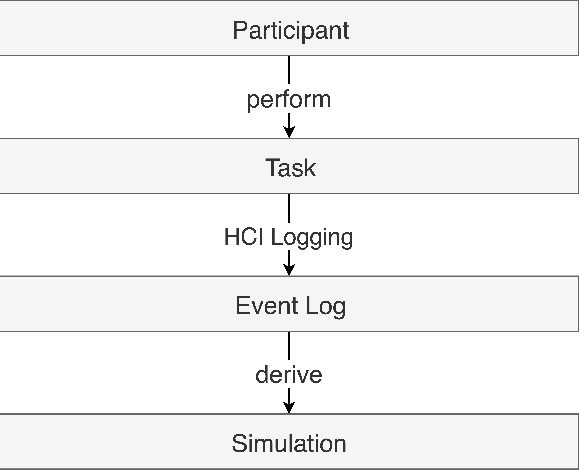

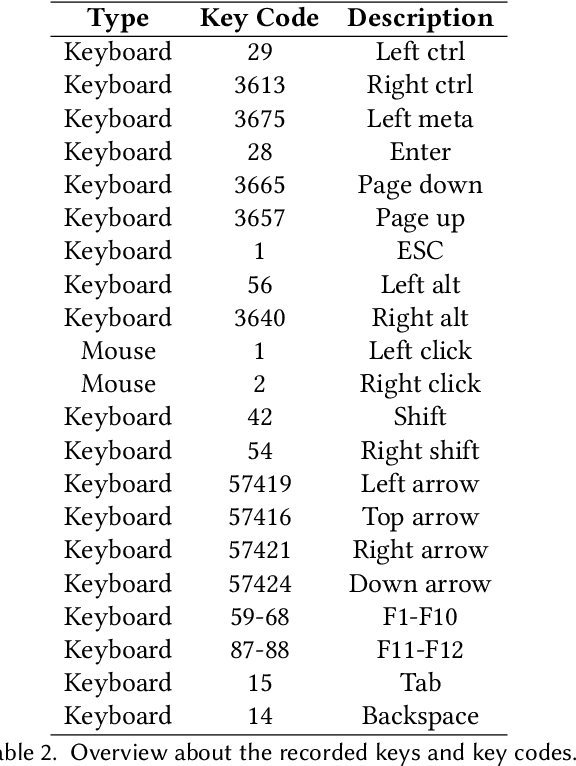

Abstract: Process Mining is a well-known technique that is frequently applied to Software Development Processes but has been neglected in Human-Computer Interaction (HCI) recommendation applications. Organizations usually train employees to interact with the required IT systems. Often, employees, or users in general, develop their own strategies for solving repetitive tasks and processes. However, organizations find it hard to detect whether employees interact efficiently with IT systems. Hence, we have developed a method which detects inefficient behavior, assuming that at least one optimal HCI strategy is known. This method provides recommendations to gradually adapt users' behavior towards the optimal way of interaction while taking user satisfaction into account. Based on users' behavior logs tracked by a Java application suitable for multi-application and multi-instance environments, we demonstrate the applicability of the method for a specific task in a common Windows environment utilizing realistic simulated user behaviors.
