Julian Skirzynski

Automatic discovery and description of human planning strategies

Sep 29, 2021

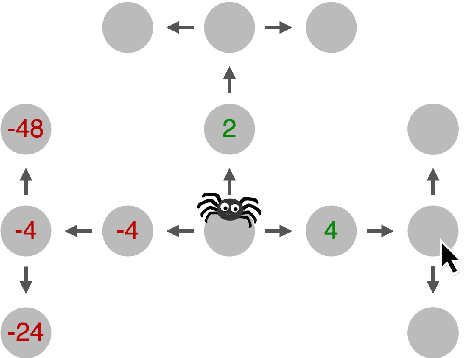

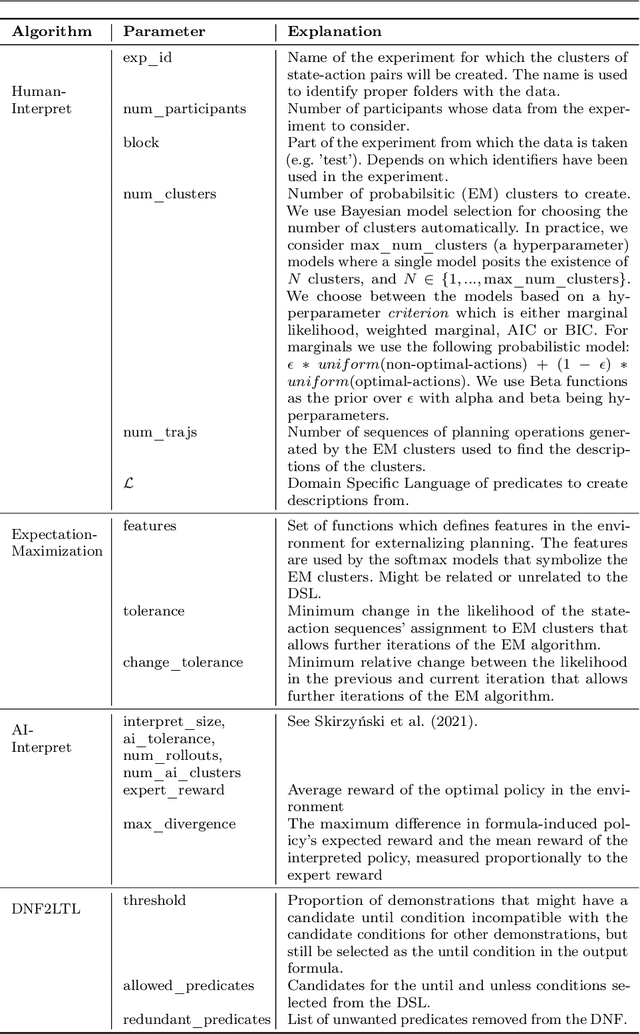

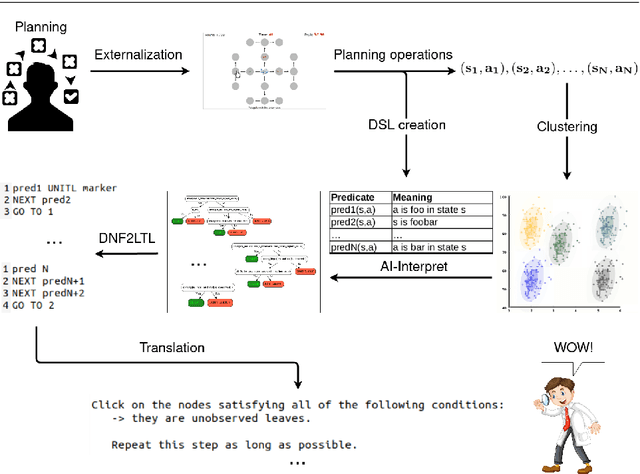

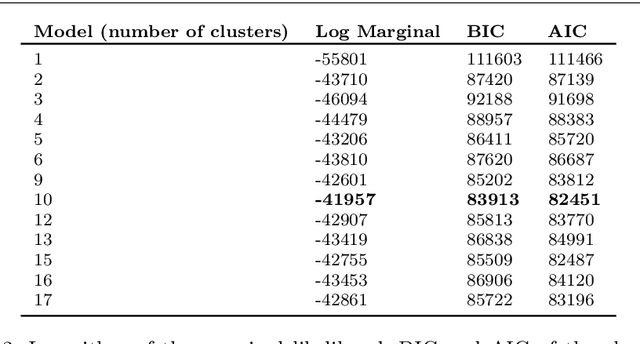

Abstract: Scientific discovery concerns finding patterns in data and creating insightful hypotheses that explain these patterns. Traditionally, this process required human ingenuity, but with the rapid advances in artificial intelligence (AI), it is becoming feasible to automate some parts of scientific discovery. In this work, we leverage AI for strategy discovery to understand human planning. In state-of-the-art methods, data about the process of human planning is often used to group similar behaviors together and to formulate verbal descriptions of the strategies that might underlie those groups. Here, we automate these two steps. Our algorithm, called Human-Interpret, uses imitation learning to describe process-tracing data collected in psychological experiments with the Mouselab-MDP paradigm in terms of a procedural formula. Then, it translates that formula into natural language using a pre-defined predicate dictionary. We test our method on a benchmark data set that researchers have previously scrutinized manually. We find that the descriptions of human planning strategies obtained automatically are about as understandable as human-generated descriptions. They also cover a substantial proportion of all types of human planning strategies that had been discovered manually. Our method saves scientists time and effort, as all the reasoning about human planning is done automatically. This might make it feasible to more rapidly scale up the search for yet undiscovered cognitive strategies to many new decision environments, populations, tasks, and domains. Given these results, we believe that the presented work may accelerate scientific discovery in psychology and, due to its generality, extend to problems from other fields.

Automatic Discovery of Interpretable Planning Strategies

May 24, 2020

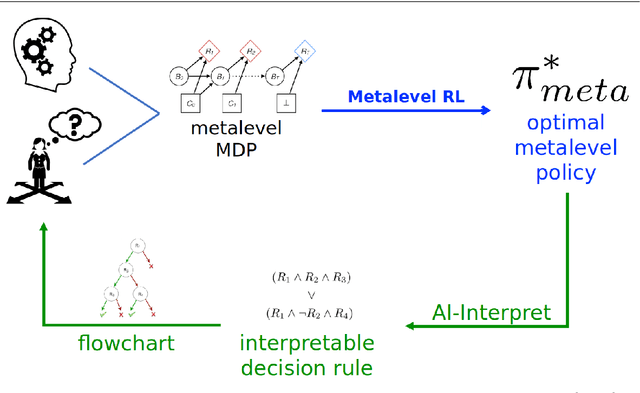

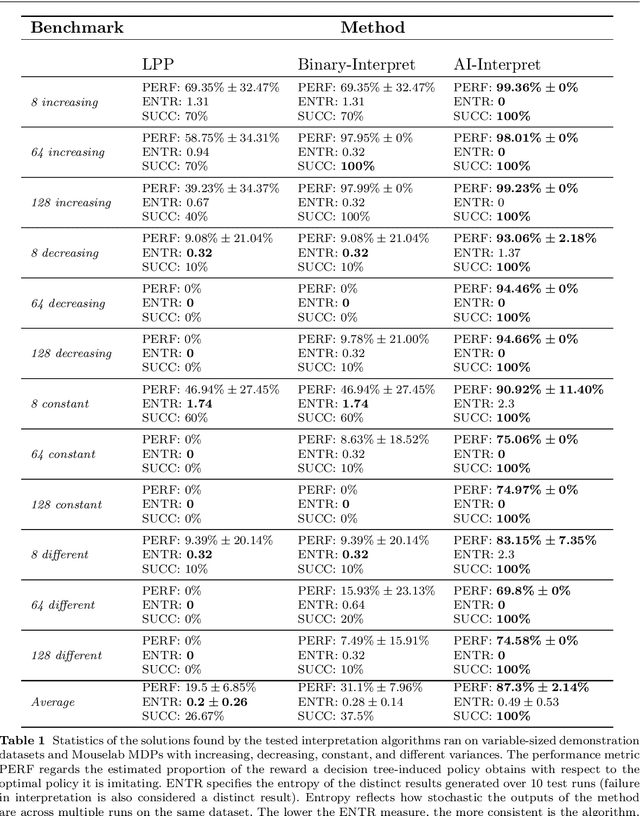

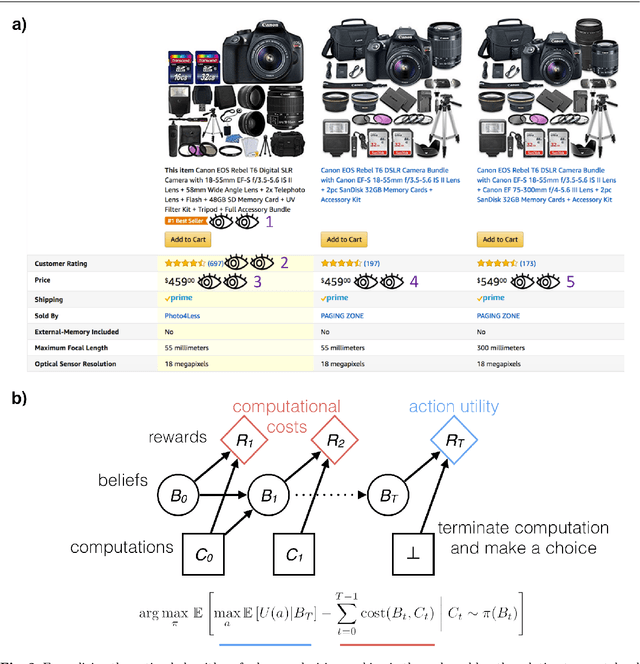

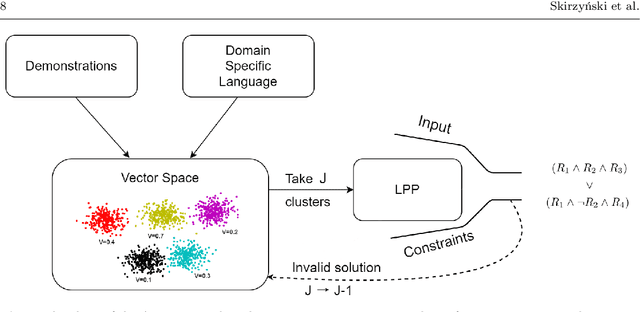

Abstract: When making decisions, people often overlook critical information or are overly swayed by irrelevant information. A common approach to mitigate these biases is to provide decision-makers, especially professionals such as medical doctors, with decision aids, such as decision trees and flowcharts. Designing effective decision aids is a difficult problem. We propose that recently developed reinforcement learning methods for discovering clever heuristics for good decision-making can be partially leveraged to assist human experts in this design process. One of the biggest remaining obstacles to leveraging the aforementioned methods for improving human decision-making is that the policies they learn are opaque to people. To solve this problem, we introduce AI-Interpret: a general method for transforming idiosyncratic policies into simple and interpretable descriptions. Our algorithm combines recent advances in imitation learning and program induction with a new clustering method for identifying a large subset of demonstrations that can be accurately described by a simple, high-performing decision rule. We evaluate our new AI-Interpret algorithm and employ it to translate information-acquisition policies discovered through metalevel reinforcement learning. The results of three large behavioral experiments showed that the provision of decision rules as flowcharts significantly improved people's planning strategies and decisions across three different classes of sequential decision problems. Furthermore, a series of ablation studies confirmed that our AI-Interpret algorithm was critical to the discovery of interpretable decision rules and that it is ready to be applied to other reinforcement learning problems. We conclude that the methods and findings presented in this article are an important step towards leveraging automatic strategy discovery to improve human decision-making.
