Josselin Garnier

CMAP, ASCII

Dimension-Free Multimodal Sampling via Preconditioned Annealed Langevin Dynamics

Feb 01, 2026

Abstract: Designing algorithms that can explore multimodal target distributions accurately across successive refinements of an underlying high-dimensional problem is a central challenge in sampling. Annealed Langevin dynamics (ALD) is a widely used alternative to classical Langevin dynamics since it often yields much faster mixing on multimodal targets, but there is still a gap between this empirical success and existing theory: when, and under which design choices, can ALD be guaranteed to remain stable as dimension increases? In this paper, we help bridge this gap by providing a uniform-in-dimension analysis of continuous-time ALD for multimodal targets that can be well-approximated by Gaussian mixture models. Along an explicit annealing path obtained by progressively removing Gaussian smoothing of the target, we identify sufficient spectral conditions - linking smoothing covariance and the covariances of the Gaussian components of the mixture - under which ALD achieves a prescribed accuracy within a single, dimension-uniform time horizon. We then establish dimension-robustness to imperfect initialization and score approximation: under a misspecified-mixture score model, we derive explicit conditions showing that preconditioning the ALD algorithm with a sufficiently decaying spectrum is necessary to prevent error terms from accumulating across coordinates and destroying dimension-uniform control. Finally, numerical experiments illustrate and validate the theory.
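As a rough illustration of the annealing path described in this abstract (progressively removing Gaussian smoothing from a Gaussian mixture target), the following is a minimal one-dimensional sketch. It is not the paper's algorithm: it uses a plain Euler-Maruyama discretization without preconditioning, and the mixture parameters, the geometric smoothing schedule, the step size, and the function names `smoothed_mixture_score` and `annealed_langevin` are all assumptions chosen for illustration. It relies only on the fact that convolving a Gaussian mixture with N(0, s) adds s to each component variance, so the smoothed score is available in closed form.

```python
import numpy as np

def smoothed_mixture_score(x, weights, means, variances, smoothing_var):
    """Score of a 1D Gaussian mixture convolved with N(0, smoothing_var)."""
    var = variances + smoothing_var            # convolution adds variances
    diff = x[:, None] - means[None, :]         # shape (n_samples, n_components)
    # Log of the unnormalized component responsibilities.
    log_resp = np.log(weights) - 0.5 * np.log(var) - 0.5 * diff**2 / var
    log_resp -= log_resp.max(axis=1, keepdims=True)  # numerical stability
    resp = np.exp(log_resp)
    resp /= resp.sum(axis=1, keepdims=True)
    # Mixture score = responsibility-weighted sum of component scores.
    return np.sum(resp * (-diff / var), axis=1)

def annealed_langevin(weights, means, variances, n_samples=2000, n_levels=20,
                      steps_per_level=50, step=0.05, seed=0):
    """Unpreconditioned annealed Langevin sketch (illustrative schedule)."""
    rng = np.random.default_rng(seed)
    # Assumed schedule: smoothing variance decays geometrically toward zero.
    smoothing = np.geomspace(25.0, 1e-3, n_levels)
    x = rng.normal(0.0, np.sqrt(smoothing[0]), size=n_samples)
    for s in smoothing:
        for _ in range(steps_per_level):
            score = smoothed_mixture_score(x, weights, means, variances, s)
            noise = rng.standard_normal(n_samples)
            x = x + step * score + np.sqrt(2.0 * step) * noise
    return x

# Hypothetical bimodal target with well-separated modes at -4 and +4.
weights = np.array([0.5, 0.5])
means = np.array([-4.0, 4.0])
variances = np.array([0.5, 0.5])
samples = annealed_langevin(weights, means, variances)
print("fraction in right mode:", np.mean(samples > 0))
```

With heavy initial smoothing the target is close to unimodal, so the chains can spread over both modes before the smoothing is removed; plain Langevin dynamics started in one mode would typically stay there for a long time.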

Quantitative synthetic aperture radar inversion

Jan 28, 2026

Abstract: We study an inverse scattering problem for monostatic synthetic aperture radar (SAR): Estimate the wave speed in a heterogeneous, isotropic and nonmagnetic medium probed by waves emitted and measured by a moving antenna. The forward map, from the wave speed to the measurements, is derived from Maxwell's equations. It is a nonlinear map that accounts for multiple scattering and it is very oscillatory at high frequencies. This makes the standard, nonlinear least squares data fitting formulation of the inverse problem difficult to solve. We introduce an alternative, two-step approach: The first step computes the nonlinear map from the measurements to an approximation of the electric field inside the unknown medium, also known as the internal wave. This is done for each antenna location in a non-iterative manner. The internal wave fits the data by construction, but it does not solve Maxwell's equations. The second step uses optimization to minimize the discrepancy between the internal wave and the solution of Maxwell's equations, for all antenna locations. The optimization is iterative. The first step defines an imaging function whose computational cost is comparable to that of standard SAR imaging, but it gives a better estimate of the support of targets. Further iterations improve the quantitative estimation of the wave speed. We assess the performance of the method with numerical simulations and compare the results with those of standard inversion.

A reproducible comparative study of categorical kernels for Gaussian process regression, with new clustering-based nested kernels

Oct 02, 2025

Abstract: Designing categorical kernels is a major challenge for Gaussian process regression with continuous and categorical inputs. Despite previous studies, it is difficult to identify a preferred method, because the evaluation metrics, the optimization procedure, or the datasets change from study to study. In particular, reproducible code is rarely available. The aim of this paper is to provide a reproducible comparative study of all existing categorical kernels on many of the test cases investigated so far. We also propose new evaluation metrics inspired by the optimization community, which provide quantitative rankings of the methods across several tasks. From our results on datasets which exhibit a group structure on the levels of categorical inputs, it appears that nested kernel methods clearly outperform all competitors. When the group structure is unknown or when there is no prior knowledge of such a structure, we propose a new clustering-based strategy using target encodings of categorical variables. We show that on a large panel of datasets, which do not necessarily have a known group structure, this estimation strategy still outperforms other approaches while maintaining low computational cost.

Preconditioned Langevin Dynamics with Score-Based Generative Models for Infinite-Dimensional Linear Bayesian Inverse Problems

May 23, 2025

Abstract: Designing algorithms for solving high-dimensional Bayesian inverse problems directly in infinite-dimensional function spaces - where such problems are naturally formulated - is crucial to ensure stability and convergence as the discretization of the underlying problem is refined. In this paper, we contribute to this line of work by analyzing a widely used sampler for linear inverse problems: Langevin dynamics driven by score-based generative models (SGMs) acting as priors, formulated directly in function space. Building on the theoretical framework for SGMs in Hilbert spaces, we give a rigorous definition of this sampler in the infinite-dimensional setting and derive, for the first time, error estimates that explicitly depend on the approximation error of the score. As a consequence, we obtain sufficient conditions for global convergence in Kullback-Leibler divergence on the underlying function space. Preventing numerical instabilities requires preconditioning of the Langevin algorithm and we prove the existence and the form of an optimal preconditioner. The preconditioner depends on both the score error and the forward operator and guarantees a uniform convergence rate across all posterior modes. Our analysis applies to both Gaussian and a general class of non-Gaussian priors. Finally, we present examples that illustrate and validate our theoretical findings.

General reproducing properties in RKHS with application to derivative and integral operators

Mar 20, 2025

Abstract: In this paper, we generalize the reproducing property in Reproducing Kernel Hilbert Spaces (RKHS). We establish a reproducing property for the closure of the class of combinations of composition operators under minimal conditions. As an application, we improve the existing sufficient conditions for the reproducing property to hold for the derivative operator, as well as for the existence of the mean embedding function. These results extend the scope of applicability of the representer theorem for regularized learning algorithms that involve data for function values, gradients, or any other operator from the considered class.

Learning signals defined on graphs with optimal transport and Gaussian process regression

Oct 21, 2024

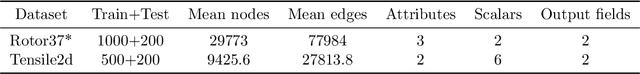

Abstract: In computational physics, machine learning has now emerged as a powerful complementary tool to explore efficiently candidate designs in engineering studies. Outputs in such supervised problems are signals defined on meshes, and a natural question is the extension of general scalar output regression models to such complex outputs. Changes between input geometries in terms of both size and adjacency structure in particular make this transition non-trivial. In this work, we propose an innovative strategy for Gaussian process regression where inputs are large and sparse graphs with continuous node attributes and outputs are signals defined on the nodes of the associated inputs. The methodology relies on the combination of regularized optimal transport, dimension reduction techniques, and the use of Gaussian processes indexed by graphs. In addition to enabling signal prediction, a key feature of our proposal is that it provides confidence intervals on node values, which is crucial for uncertainty quantification and active learning. Numerical experiments highlight the efficiency of the method to solve real problems in fluid dynamics and solid mechanics.

Taming Score-Based Diffusion Priors for Infinite-Dimensional Nonlinear Inverse Problems

May 24, 2024

Abstract: This work introduces a sampling method capable of solving Bayesian inverse problems in function space. It does not assume the log-concavity of the likelihood, meaning that it is compatible with nonlinear inverse problems. The method leverages the recently defined infinite-dimensional score-based diffusion models as a learning-based prior, while enabling provable posterior sampling through a Langevin-type MCMC algorithm defined on function spaces. A novel convergence analysis is conducted, inspired by the fixed-point methods established for traditional regularization-by-denoising algorithms and compatible with weighted annealing. The obtained convergence bound explicitly depends on the approximation error of the score; a well-approximated score is essential to obtain a well-approximated posterior. Stylized and PDE-based examples are provided, demonstrating the validity of our convergence analysis. We conclude by presenting a discussion of the method's challenges related to learning the score and computational complexity.

Gaussian process regression with Sliced Wasserstein Weisfeiler-Lehman graph kernels

Feb 06, 2024

Abstract: Supervised learning has recently garnered significant attention in the field of computational physics due to its ability to effectively extract complex patterns for tasks like solving partial differential equations, or predicting material properties. Traditionally, such datasets consist of inputs given as meshes with a large number of nodes representing the problem geometry (seen as graphs), and corresponding outputs obtained with a numerical solver. This means the supervised learning model must be able to handle large and sparse graphs with continuous node attributes. In this work, we focus on Gaussian process regression, for which we introduce the Sliced Wasserstein Weisfeiler-Lehman (SWWL) graph kernel. In contrast to existing graph kernels, the proposed SWWL kernel enjoys positive definiteness and a drastic complexity reduction, which makes it possible to process datasets that were previously impossible to handle. The new kernel is first validated on graph classification for molecular datasets, where the input graphs have a few tens of nodes. The efficiency of the SWWL kernel is then illustrated on graph regression in computational fluid dynamics and solid mechanics, where the input graphs are made up of tens of thousands of nodes.
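To make the "sliced Wasserstein" ingredient of this abstract concrete, here is a minimal sketch of a Monte Carlo sliced 2-Wasserstein distance between two point clouds. It is only an illustration of the general technique, not the SWWL kernel itself: the Weisfeiler-Lehman embedding step is omitted, the two clouds are assumed to have the same number of points, and the function name `sliced_wasserstein` and its parameters are hypothetical.

```python
import numpy as np

def sliced_wasserstein(x, y, n_projections=200, seed=0):
    """Monte Carlo sliced 2-Wasserstein distance between point clouds.

    x, y: arrays of shape (n, d) with the same n (an assumption of this sketch).
    """
    rng = np.random.default_rng(seed)
    d = x.shape[1]
    # Random unit directions on the sphere in R^d.
    directions = rng.standard_normal((n_projections, d))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    # Project onto each direction; in 1D, optimal transport between equally
    # weighted samples reduces to matching sorted values.
    px = np.sort(x @ directions.T, axis=0)
    py = np.sort(y @ directions.T, axis=0)
    return np.sqrt(np.mean((px - py) ** 2))

# Toy usage: a 1D cloud and a copy shifted by 3.
rng = np.random.default_rng(1)
cloud = rng.standard_normal((100, 1))
d_same = sliced_wasserstein(cloud, cloud)
d_shift = sliced_wasserstein(cloud, cloud + 3.0)
print(d_same, d_shift)
```

Each projection costs only a sort, which is what makes sliced distances attractive for large inputs compared with solving a full optimal transport problem.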

Automated approach for source location in shallow waters

Jul 28, 2023

Abstract: This paper proposes a fully automated method for recovering the location of a source and medium parameters in shallow waters. The scenario involves an unknown source emitting low-frequency sound waves in a shallow water environment, and a single hydrophone recording the signal. Firstly, theoretical tools are introduced to understand the robustness of the warping method and to propose and analyze an automated way to separate the modal components of the recorded signal. Secondly, using the spectrogram of each modal component, the paper investigates the best way to recover the modal travel times and provides stability estimates. Finally, a penalized minimization algorithm is presented to recover estimates of the source location and medium parameters. The proposed method is tested on experimental data of right whale gunshot and combustive sound sources, demonstrating its effectiveness in real-world scenarios.

Conditional score-based diffusion models for Bayesian inference in infinite dimensions

May 28, 2023

Abstract: Since their first introduction, score-based diffusion models (SDMs) have been successfully applied to solve a variety of linear inverse problems in finite-dimensional vector spaces due to their ability to efficiently approximate the posterior distribution. However, using SDMs for inverse problems in infinite-dimensional function spaces has only been addressed recently and by learning the unconditional score. While this approach has some advantages, depending on the specific inverse problem at hand, in order to sample from the conditional distribution it needs to incorporate the information from the observed data with a proximal optimization step, solving an optimization problem numerous times. This may not be feasible in inverse problems with computationally costly forward operators. To address these limitations, in this work we propose a method to learn the posterior distribution in infinite-dimensional Bayesian linear inverse problems using amortized conditional SDMs. In particular, we prove that the conditional denoising estimator is a consistent estimator of the conditional score in infinite dimensions. We show that the extension of SDMs to the conditional setting requires some care because the conditional score typically blows up for small times, unlike the unconditional score. We also discuss the robustness of the learned distribution against perturbations of the observations. We conclude by presenting numerical examples that validate our approach and provide additional insights.
