José Zariffa

Detecting Activities of Daily Living in Egocentric Video to Contextualize Hand Use at Home in Outpatient Neurorehabilitation Settings

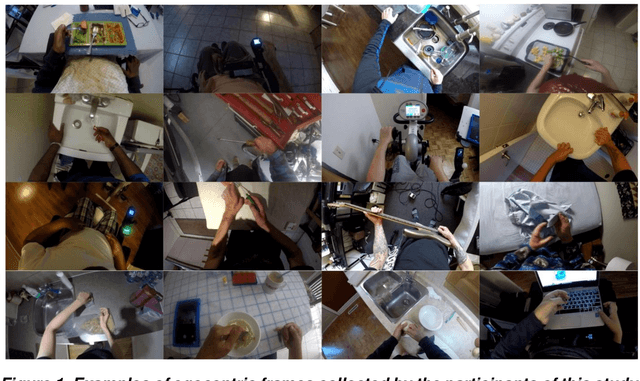

Dec 14, 2024

Abstract: Wearable egocentric cameras and machine learning have the potential to provide clinicians with a more nuanced understanding of patient hand use at home after stroke and spinal cord injury (SCI). However, they require detailed contextual information (i.e., activities and object interactions) to effectively interpret metrics and meaningfully guide therapy planning. We demonstrate that an object-centric approach, focusing on what objects patients interact with rather than how they move, can effectively recognize Activities of Daily Living (ADL) in real-world rehabilitation settings. We evaluated our models on a complex dataset collected in the wild comprising 2261 minutes of egocentric video from 16 participants with impaired hand function. By leveraging pre-trained object detection and hand-object interaction models, our system achieves robust performance across different impairment levels and environments, with our best model achieving a mean weighted F1-score of 0.78 +/- 0.12 and maintaining an F1-score > 0.5 for all participants using leave-one-subject-out cross-validation. Through qualitative analysis, we observe that this approach generates clinically interpretable information about functional object use while being robust to patient-specific movement variations, making it particularly suitable for rehabilitation contexts with prevalent upper limb impairment.
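The evaluation protocol named in this abstract (leave-one-subject-out cross-validation scored by weighted F1) can be illustrated with a minimal sketch. This is not the authors' code: the function names `loso_splits` and `weighted_f1` are hypothetical, and the weighted F1 here is the usual support-weighted average of per-class F1, which is assumed to match what the abstract reports.

```python
from collections import Counter


def loso_splits(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject.

    subject_ids[i] is the participant that sample i belongs to (assumed layout).
    """
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


def weighted_f1(y_true, y_pred):
    """Per-class F1, averaged with weights proportional to class support in y_true."""
    support = Counter(y_true)
    total = 0.0
    for label, n in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = n - tp
        # tp + fn == n > 0 for every label taken from y_true, so the denominator is nonzero.
        total += n * (2 * tp / (2 * tp + fp + fn))
    return total / len(y_true)
```

In use, a model would be trained on the `train` indices of each fold, scored with `weighted_f1` on the held-out participant, and the per-participant scores summarized as a mean and standard deviation.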

A Personalized Video-Based Hand Taxonomy: Application for Individuals with Spinal Cord Injury

Mar 26, 2024

Abstract: Hand function is critical for our interactions and quality of life. Spinal cord injuries (SCI) can impair hand function, reducing independence. A comprehensive evaluation of function in home and community settings requires a hand grasp taxonomy for individuals with impaired hand function. Developing such a taxonomy is challenging due to unrepresented grasp types in standard taxonomies, uneven data distribution across injury levels, and limited data. This study aims to automatically identify the dominant distinct hand grasps in egocentric video using semantic clustering. Egocentric video recordings collected in the homes of 19 individuals with cervical SCI were used to cluster grasping actions with semantic significance. A deep learning model integrating posture and appearance data was employed to create a personalized hand taxonomy. Quantitative analysis reveals a cluster purity of 67.6% +/- 24.2% with 18.0% +/- 21.8% redundancy. Qualitative assessment revealed meaningful clusters in video content. This methodology provides a flexible and effective strategy to analyze hand function in the wild. It offers researchers and clinicians an efficient tool for evaluating hand function, aiding sensitive assessments and tailored intervention plans.
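As a rough illustration of the cluster purity figure, the sketch below uses the common definition of purity: the fraction of a cluster's members that share its majority label. The abstract does not state the exact definition used in the study, nor how redundancy is computed, so this is an assumption and redundancy is left out. The function names are hypothetical.

```python
from collections import Counter, defaultdict
from statistics import mean, pstdev


def per_cluster_purity(cluster_ids, labels):
    """Map each cluster id to the share of its members carrying the majority label."""
    members = defaultdict(list)
    for cluster, label in zip(cluster_ids, labels):
        members[cluster].append(label)
    return {
        cluster: Counter(ls).most_common(1)[0][1] / len(ls)
        for cluster, ls in members.items()
    }


def purity_summary(cluster_ids, labels):
    """Mean and population standard deviation of purity across clusters."""
    values = list(per_cluster_purity(cluster_ids, labels).values())
    return mean(values), pstdev(values)
```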

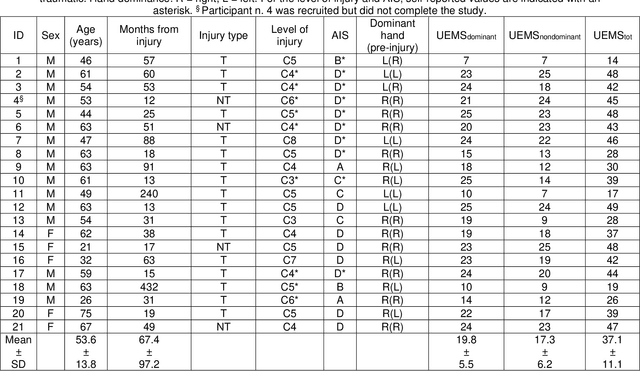

Measuring hand use in the home after cervical spinal cord injury using egocentric video

Mar 31, 2022

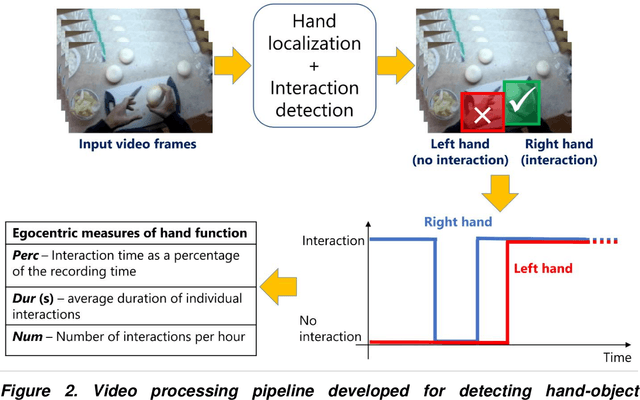

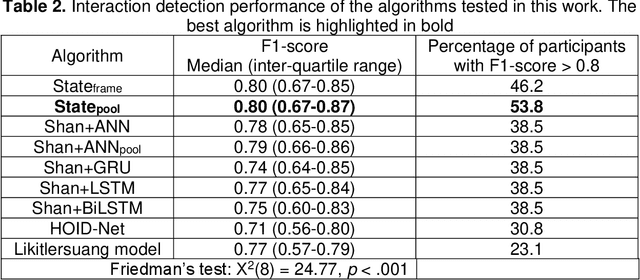

Abstract: Background: Egocentric video has recently emerged as a potential solution for monitoring hand function in individuals living with tetraplegia in the community, especially for its ability to detect functional use in the home environment. Objective: To develop and validate a wearable vision-based system for measuring hand use in the home among individuals living with tetraplegia. Methods: Several deep learning algorithms for detecting functional hand-object interactions were developed and compared. The most accurate algorithm was used to extract measures of hand function from 65 hours of unscripted video recorded at home by 20 participants with tetraplegia. These measures were: the percentage of interaction time over total recording time (Perc); the average duration of individual interactions (Dur); the number of interactions per hour (Num). To demonstrate the clinical validity of the technology, egocentric measures were correlated with validated clinical assessments of hand function and independence (Graded Redefined Assessment of Strength, Sensibility and Prehension - GRASSP, Upper Extremity Motor Score - UEMS, and Spinal Cord Independence Measure - SCIM). Results: Hand-object interactions were automatically detected with a median F1-score of 0.80 (0.67-0.87). Our results demonstrated that higher UEMS and better prehension were related to greater time spent interacting, whereas higher SCIM and better hand sensation resulted in a higher number of interactions performed during the egocentric video recordings. Conclusions: For the first time, measures of hand function automatically estimated in an unconstrained environment in individuals with tetraplegia have been validated against internationally accepted measures of hand function. Future work will necessitate a formal evaluation of the reliability and responsiveness of the egocentric-based performance measures for hand use.
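The three measures defined in this abstract (Perc, Dur, Num) can be computed from per-frame interaction predictions roughly as follows. This is a sketch under stated assumptions, not the study's pipeline: it assumes one binary prediction per frame at a known frame rate, and it treats each maximal run of consecutive positive frames as one interaction. The function name `hand_use_metrics` is hypothetical.

```python
from itertools import groupby


def hand_use_metrics(frames, fps):
    """Return (Perc, Dur, Num) from a per-frame binary interaction sequence.

    Perc: interaction time as a percentage of total recording time.
    Dur:  mean duration of individual interactions, in seconds.
    Num:  number of interactions per hour of recording.
    """
    if not frames:
        raise ValueError("frames must not be empty")
    total_s = len(frames) / fps
    # One entry per maximal run of truthy frames (assumed definition of an interaction).
    runs = [sum(1 for _ in group) / fps for key, group in groupby(frames) if key]
    interaction_s = sum(runs)
    perc = 100.0 * interaction_s / total_s
    dur = interaction_s / len(runs) if runs else 0.0
    num = len(runs) * 3600.0 / total_s
    return perc, dur, num
```

Any smoothing of the per-frame predictions or minimum interaction length would change all three values; the abstract does not say whether such post-processing was applied.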

Analysis of the hands in egocentric vision: A survey

Dec 23, 2019

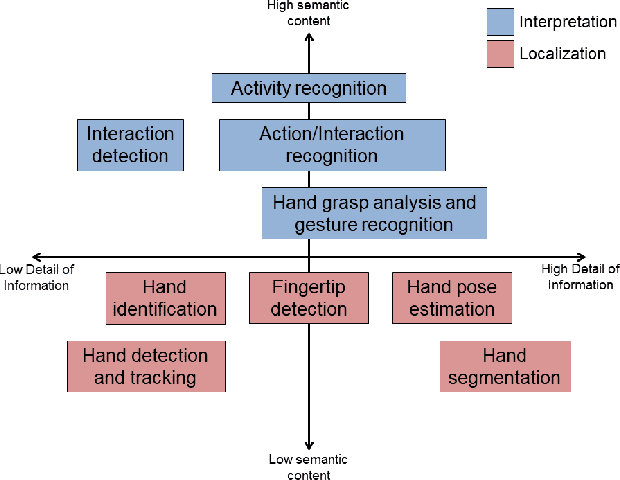

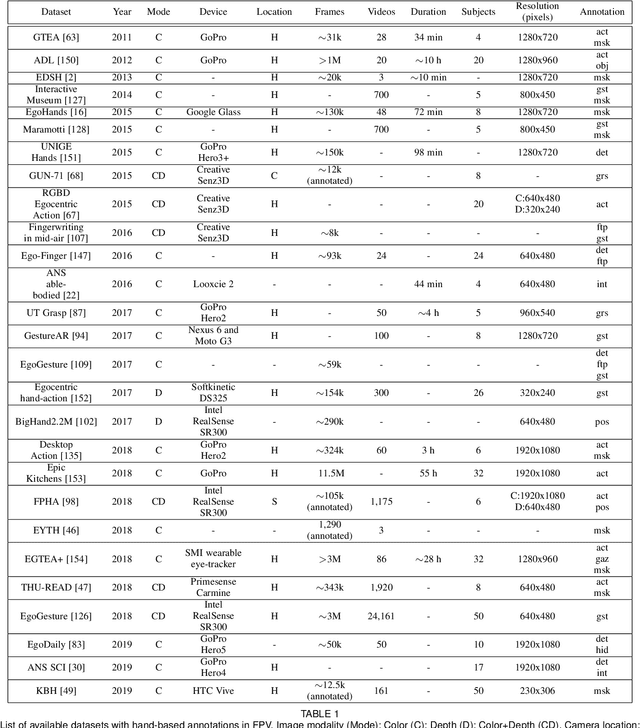

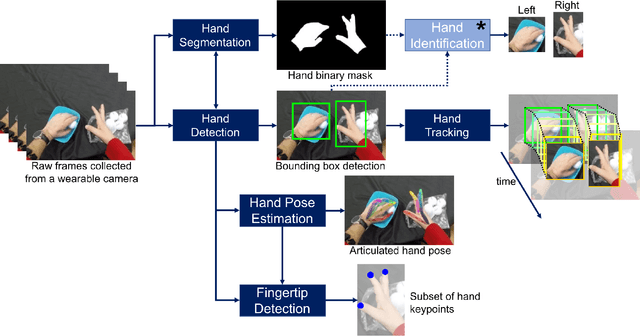

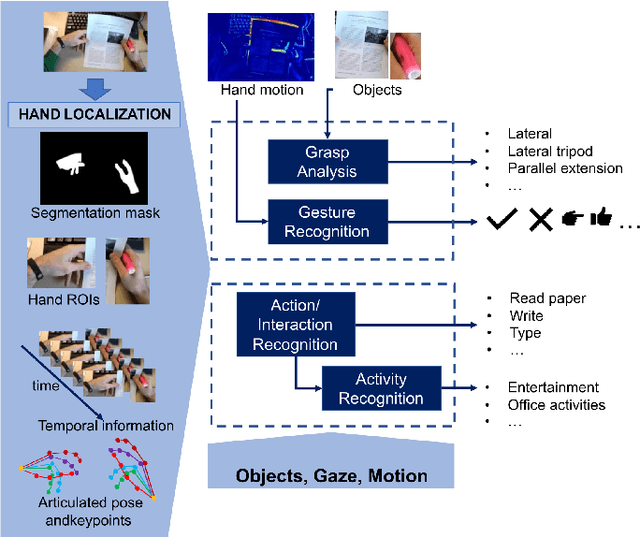

Abstract: Egocentric vision (a.k.a. first-person vision - FPV) applications have thrived over the past few years, thanks to the availability of affordable wearable cameras and large annotated datasets. The position of the wearable camera (usually mounted on the head) allows recording exactly what the camera wearers have in front of them, in particular hands and manipulated objects. This intrinsic advantage enables the study of the hands from multiple perspectives: localizing hands and their parts within the images; understanding what actions and activities the hands are involved in; and developing human-computer interfaces that rely on hand gestures. In this survey, we review the literature that focuses on the hands using egocentric vision, categorizing the existing approaches into: localization (where are the hands or part of them?); interpretation (what are the hands doing?); and application (e.g., systems that used egocentric hand cues for solving a specific problem). Moreover, a list of the most prominent datasets with hand-based annotations is provided.

An Effective and Efficient Method for Detecting Hands in Egocentric Videos for Rehabilitation Applications

Aug 27, 2019

Abstract: Objective: Individuals with spinal cord injury (SCI) report upper limb function as their top recovery priority. To accurately represent the true impact of new interventions on patient function and independence, evaluation should occur in a natural setting. Wearable cameras can be used to monitor hand function at home, using computer vision to automatically analyze the resulting videos (egocentric video). A key step in this process, hand detection, is difficult to do robustly and reliably, hindering deployment of a complete monitoring system in the home and community. We propose an accurate and efficient hand detection method that uses a simple combination of existing detection and tracking algorithms. Methods: Detection, tracking, and combination methods were evaluated on a new hand detection dataset, consisting of 167,622 frames of egocentric videos collected from 17 individuals with SCI performing activities of daily living in a home simulation laboratory. Results: The F1-scores for the best detector and tracker alone (SSD and Median Flow) were 0.90$\pm$0.07 and 0.42$\pm$0.18, respectively. The best combination method, in which a detector was used to initialize and reset a tracker, resulted in an F1-score of 0.87$\pm$0.07 while being two times faster than the fastest detector alone. Conclusion: The combination of the fastest detector and best tracker improved the accuracy over online trackers while improving the speed of detectors. Significance: The method proposed here, in combination with wearable cameras, will help clinicians directly measure hand function in a patient's daily life at home, enabling independence after SCI.
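The combination strategy described in this abstract, in which a detector initializes and resets a tracker, might be sketched as below. The abstract does not specify when the reset happens, so the policy here (re-detect after a fixed number of tracked frames, or as soon as the tracker loses the target) is an assumption. The `detect` and `make_tracker` callables and the `update` method are hypothetical interfaces, not the API of any particular library.

```python
def detect_then_track(frames, detect, make_tracker, reset_every=10):
    """Return one bounding box (or None) per frame.

    detect(frame) -> box or None                 (slow, accurate; hypothetical)
    make_tracker(frame, box) -> tracker object   (hypothetical)
    tracker.update(frame) -> box or None         (fast; None means target lost)
    reset_every: number of tracked frames allowed between detector runs (assumed policy).
    """
    boxes = []
    tracker = None
    tracked_since_reset = 0
    for frame in frames:
        box = None
        if tracker is not None and tracked_since_reset < reset_every:
            box = tracker.update(frame)
            tracked_since_reset += 1
        if box is None:
            # No tracker yet, scheduled reset, or tracker lost the hand:
            # fall back to the detector and re-initialize the tracker.
            box = detect(frame)
            tracker = make_tracker(frame, box) if box is not None else None
            tracked_since_reset = 0
        boxes.append(box)
    return boxes
```

The speed gain comes from running the expensive detector on only a fraction of frames; a larger `reset_every` trades accuracy for speed, since tracker drift goes uncorrected for longer.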
