Measuring hand use in the home after cervical spinal cord injury using egocentric video


Mar 31, 2022

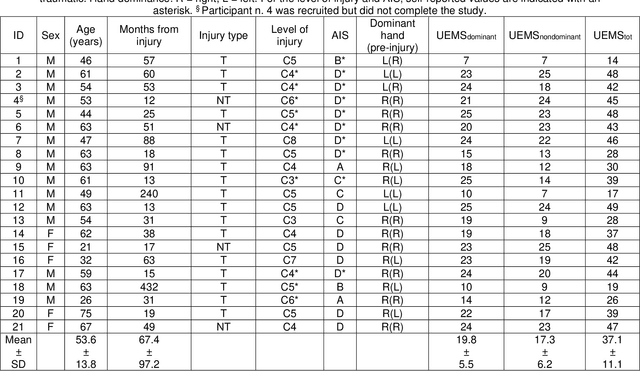

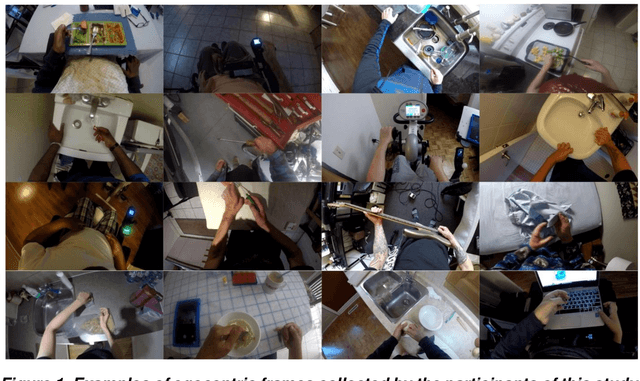

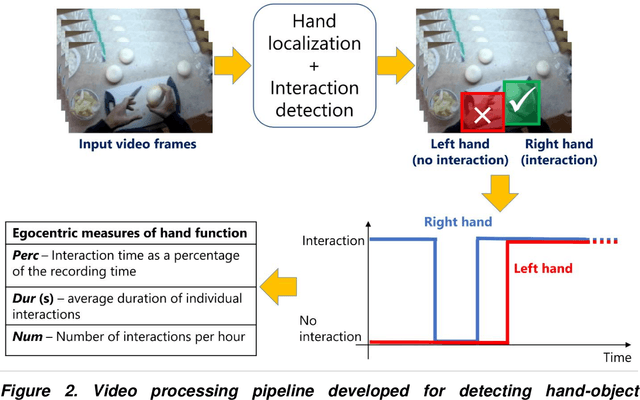

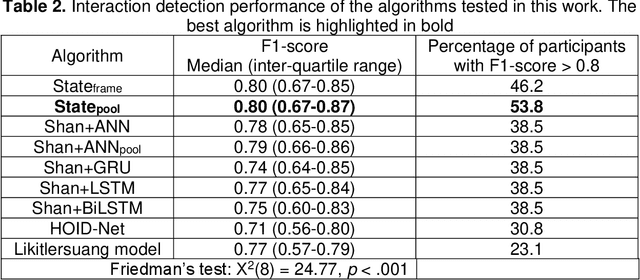

Background: Egocentric video has recently emerged as a potential solution for monitoring hand function in individuals living with tetraplegia in the community, particularly because it can capture functional hand use in the home environment.

Objective: To develop and validate a wearable vision-based system for measuring hand use in the home among individuals living with tetraplegia.

Methods: Several deep learning algorithms for detecting functional hand-object interactions were developed and compared. The most accurate algorithm was used to extract measures of hand function from 65 hours of unscripted video recorded at home by 20 participants with tetraplegia. These measures were the percentage of interaction time over total recording time (Perc), the average duration of individual interactions (Dur), and the number of interactions per hour (Num). To demonstrate the clinical validity of the technology, the egocentric measures were correlated with validated clinical assessments of hand function and independence: the Graded Redefined Assessment of Strength, Sensibility and Prehension (GRASSP), the Upper Extremity Motor Score (UEMS), and the Spinal Cord Independence Measure (SCIM).

Results: Hand-object interactions were automatically detected with a median F1-score of 0.80 (0.67-0.87). Higher UEMS and better prehension were associated with more time spent interacting, whereas higher SCIM and better hand sensation were associated with a greater number of interactions performed during the egocentric video recordings.

Conclusions: For the first time, measures of hand function automatically estimated in an unconstrained environment in individuals with tetraplegia have been validated against internationally accepted measures of hand function. Future work will require a formal evaluation of the reliability and responsiveness of these egocentric performance measures of hand use.
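To make the three egocentric measures concrete, here is a minimal sketch of how Perc, Dur and Num could be derived from a detector's output. It assumes (not stated in the abstract) that the interaction detector emits one binary label per video frame (1 = hand-object interaction, 0 = none) at a known frame rate, and that each contiguous run of 1s counts as one interaction. No smoothing, gap merging or minimum-duration filtering is applied, although the authors' pipeline may include such steps. The names `interaction_segments`, `hand_use_measures` and `HandUseMeasures` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class HandUseMeasures:
    perc: float  # Perc: % of total recording time spent interacting
    dur: float   # Dur: mean duration of one interaction, in seconds
    num: float   # Num: number of interactions per hour of recording


def interaction_segments(labels: Sequence[int]) -> List[int]:
    """Return the length, in frames, of each contiguous run of 1s.

    Assumption: every uninterrupted run of positive frames is treated as
    a single interaction (hypothetical rule, no gap merging).
    """
    lengths: List[int] = []
    run = 0
    for label in labels:
        if label:
            run += 1
        elif run:
            lengths.append(run)
            run = 0
    if run:  # close a run that lasts until the final frame
        lengths.append(run)
    return lengths


def hand_use_measures(labels: Sequence[int], fps: float) -> HandUseMeasures:
    """Compute Perc, Dur and Num from hypothetical per-frame labels."""
    if len(labels) == 0 or fps <= 0:
        raise ValueError("need a non-empty recording and a positive fps")
    total_s = len(labels) / fps
    segments = interaction_segments(labels)
    interaction_s = sum(segments) / fps

    perc = 100.0 * interaction_s / total_s
    dur = interaction_s / len(segments) if segments else 0.0
    num = len(segments) / (total_s / 3600.0)
    return HandUseMeasures(perc=perc, dur=dur, num=num)


if __name__ == "__main__":
    # Ten 1-second frames containing two interactions (2 s and 4 s long).
    m = hand_use_measures([0, 1, 1, 0, 0, 1, 1, 1, 1, 0], fps=1.0)
    print(m.perc, m.dur, round(m.num, 6))
```

In this toy recording, 6 of 10 seconds are spent interacting (Perc = 60%), the two interactions average 3 s (Dur), and two interactions in 10 s extrapolate to 720 per hour (Num). Note that Num is a rate normalised by recording length, so it stays comparable across participants whose recording durations differ.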
