Jonathan Oliver

Carbon Filter: Real-time Alert Triage Using Large Scale Clustering and Fast Search

May 07, 2024

Abstract: "Alert fatigue" is one of the biggest challenges faced by the Security Operations Center (SOC) today, with analysts spending more than half of their time reviewing false alerts. Endpoint detection products raise alerts by pattern matching on event telemetry against behavioral rules that describe potentially malicious behavior, but can suffer from high false positives that distract from actual attacks. While alert triage techniques based on data provenance may show promise, these techniques can take over a minute to inspect a single alert, while EDR customers may face tens of millions of alerts per day; the current reality is that these approaches aren't nearly scalable enough for production environments. We present Carbon Filter, a statistical-learning-based system that dramatically reduces the number of alerts analysts need to manually review. Our approach is based on the observation that false alert triggers can be efficiently identified and separated from suspicious behaviors by examining the process initiation context (e.g., the command line) that launched the responsible process. Through the use of fast-search algorithms for training and inference, our approach scales to millions of alerts per day. Through batching queries to the model, we observe a theoretical maximum throughput of 20 million alerts per hour. Based on the analysis of tens of millions of alerts from customer deployments, our solution resulted in a 6-fold improvement in the signal-to-noise ratio without compromising on alert triage performance.

On the Role of Similarity in Detecting Masquerading Files

Feb 17, 2024

Abstract: Similarity has been applied to a wide range of security applications, typically used in machine learning models. We examine the problem posed by masquerading samples; that is, samples crafted by bad actors to be similar or nearly identical to legitimate samples. We find that these samples potentially create significant problems for machine learning solutions. The primary problem is that bad actors can circumvent machine learning solutions by using masquerading samples. We then examine the interplay between digital signatures and machine learning solutions. In particular, we focus on executable files and code signing. We offer a taxonomy for masquerading files. We use a combination of similarity and clustering to find masquerading files. We use the insights gathered in this process to offer improvements to similarity-based and machine learning security solutions.

Lexical Access for Speech Understanding using Minimum Message Length Encoding

Feb 06, 2013

Abstract: The Lexical Access Problem consists of determining the intended sequence of words corresponding to an input sequence of phonemes (basic speech sounds) that come from a low-level phoneme recognizer. In this paper we present an information-theoretic approach based on the Minimum Message Length Criterion for solving the Lexical Access Problem. We model sentences using phoneme realizations seen in training, and word and part-of-speech information obtained from text corpora. We show results on multiple-speaker, continuous, read speech and discuss a heuristic using equivalence classes of similar-sounding words which speeds up the recognition process without significant deterioration in recognition accuracy.

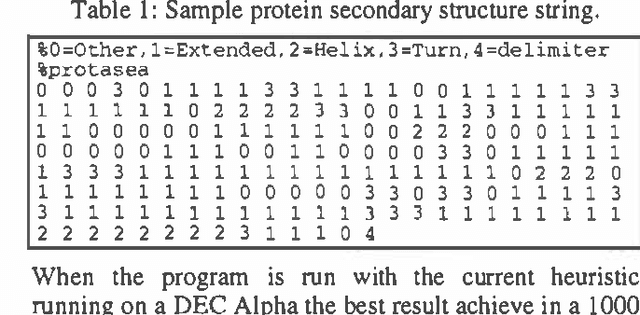

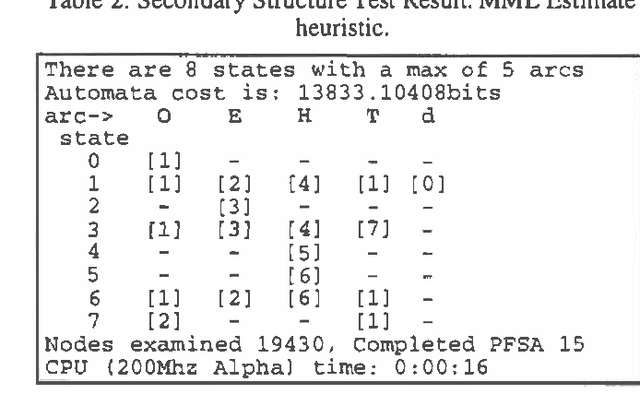

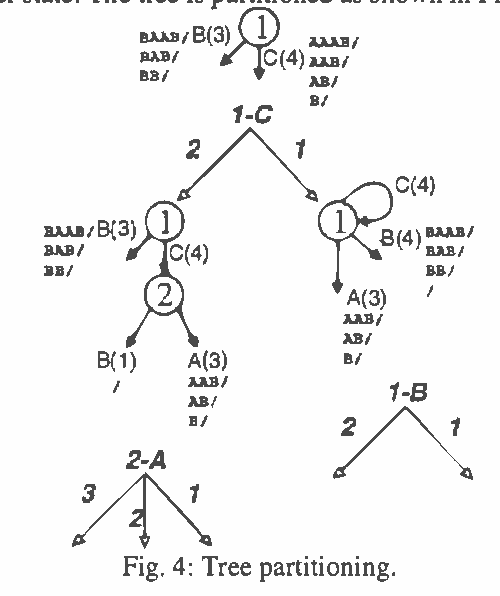

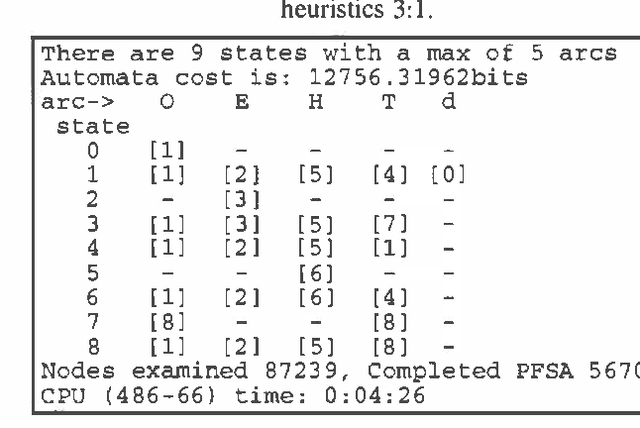

Efficient Induction of Finite State Automata

Feb 06, 2013

Abstract: This paper introduces a new algorithm for the induction of complex finite state automata from samples of behavior. The algorithm is based on information-theoretic principles. The algorithm reduces the search space by many orders of magnitude over what was previously thought possible. We compare the algorithm with some existing induction techniques for finite state automata and show that the algorithm is much superior in both run time and quality of inductions.
