John Yen

Zooming Into the Darknet: Characterizing Internet Background Radiation and its Structural Changes

Aug 05, 2021

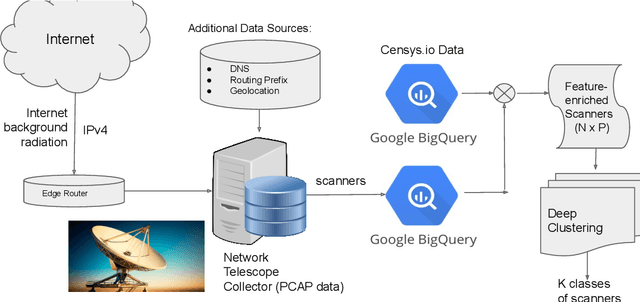

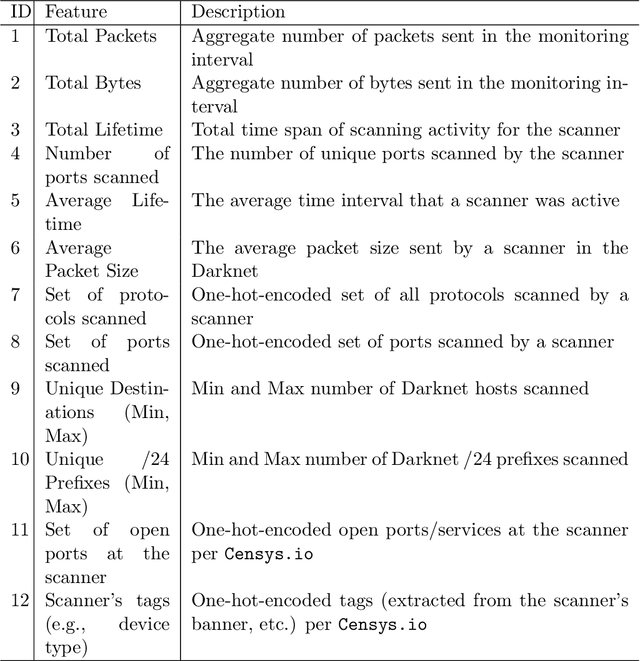

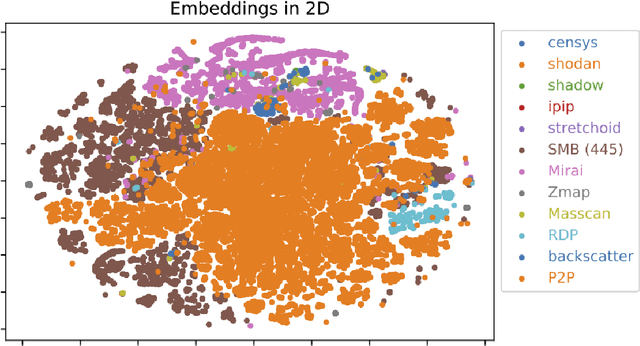

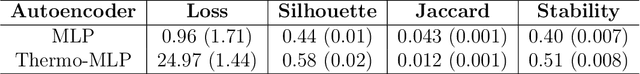

Abstract: Network telescopes or "Darknets" provide a unique window into Internet-wide malicious activities associated with malware propagation, denial of service attacks, scanning performed for network reconnaissance, and others. Analyses of the resulting data can provide actionable insights to security analysts that can be used to prevent or mitigate cyber-threats. Large Darknets, however, observe millions of nefarious events on a daily basis, which makes the transformation of the captured information into meaningful insights challenging. We present a novel framework for characterizing Darknet behavior and its temporal evolution, aiming to address this challenge. The proposed framework: (i) Extracts a high-dimensional representation of Darknet events composed of features distilled from Darknet data and other external sources; (ii) Learns, in an unsupervised fashion, an information-preserving low-dimensional representation of these events (using deep representation learning) that is amenable to clustering; (iii) Performs clustering of the scanner data in the resulting representation space and provides interpretable insights using optimal decision trees; and (iv) Utilizes the clustering outcomes as "signatures" that can be used to detect structural changes in the Darknet activities. We evaluate the proposed system on a large operational Network Telescope and demonstrate its ability to detect real-world, high-impact cybersecurity incidents.

Implementing Evidential Reasoning in Expert Systems

Mar 27, 2013

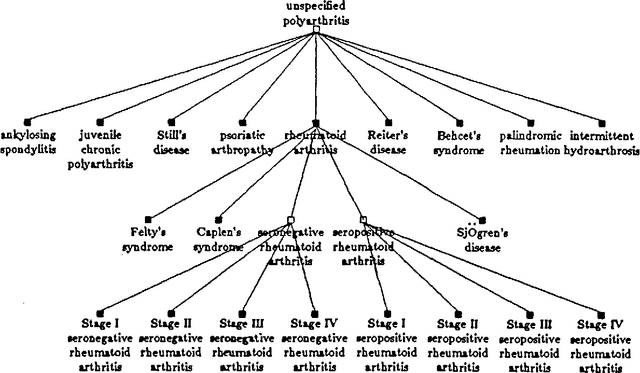

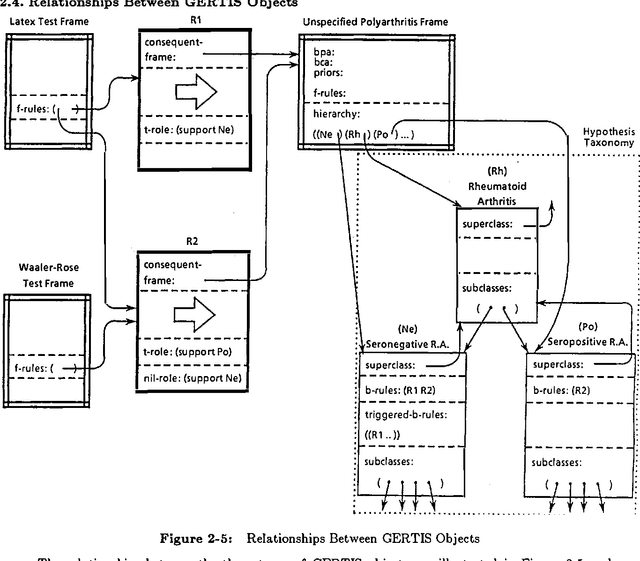

Abstract: The Dempster-Shafer theory has been extended recently for its application to expert systems. However, implementing the extended D-S reasoning model in rule-based systems greatly complicates the task of generating informative explanations. By implementing GERTIS, a prototype system for diagnosing rheumatoid arthritis, we show that two kinds of knowledge are essential for explanation generation: (1) taxonomic class relationships between hypotheses and (2) pointers to the rules that significantly contribute to belief in the hypothesis. As a result, the knowledge represented in GERTIS is richer and more complex than that of conventional rule-based systems. GERTIS not only demonstrates the feasibility of rule-based evidential-reasoning systems, but also suggests ways to generate better explanations, and to explicitly represent various useful relationships among hypotheses and rules.

Can Evidence Be Combined in the Dempster-Shafer Theory

Mar 27, 2013

Abstract: Dempster's rule of combination has been the most controversial part of the Dempster-Shafer (D-S) theory. In particular, Zadeh has reached a conjecture on the noncombinability of evidence from a relational model of the D-S theory. In this paper, we will describe another relational model where D-S masses are represented as conditional granular distributions. By comparing it with Zadeh's relational model, we will show how Zadeh's conjecture on combinability does not affect the applicability of Dempster's rule in our model.
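For readers unfamiliar with the rule under discussion, the following is a minimal sketch of the classical Dempster's rule of combination for two mass functions. It is not the relational model described in the paper; the function name `combine` and the hypotheses `"flu"` and `"cold"` are hypothetical and chosen only for illustration.

```python
from itertools import product


def combine(m1, m2):
    """Combine two mass functions with the classical Dempster's rule.

    Each mass function maps a frozenset (a focal element) to its mass.
    """
    raw = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            # Mass of agreeing evidence goes to the intersection.
            raw[inter] = raw.get(inter, 0.0) + wa * wb
        else:
            # Mass assigned to the empty set is counted as conflict.
            conflict += wa * wb
    if 1.0 - conflict <= 1e-12:
        raise ValueError("total conflict: the rule is undefined")
    # Renormalize so the combined masses sum to 1.
    return {s: w / (1.0 - conflict) for s, w in raw.items()}


# Hypothetical bodies of evidence over the frame {"flu", "cold"}.
m1 = {frozenset({"flu"}): 0.6, frozenset({"flu", "cold"}): 0.4}
m2 = {frozenset({"cold"}): 0.5, frozenset({"flu", "cold"}): 0.5}
combined = combine(m1, m2)
```

In this example the conflicting mass is 0.6 × 0.5 = 0.3, so the surviving products are divided by 0.7. This renormalization step is the part of the rule that is most often criticized.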

Generalizing the Dempster-Shafer Theory to Fuzzy Sets

Mar 27, 2013

Abstract: With the desire to apply the Dempster-Shafer theory to complex real world problems where the evidential strength is often imprecise and vague, several attempts have been made to generalize the theory. However, the important concept in the D-S theory that the belief and plausibility functions are lower and upper probabilities is no longer preserved in these generalizations. In this paper, we describe a generalized theory of evidence where the degree of belief in a fuzzy set is obtained by minimizing the probability of the fuzzy set under the constraints imposed by a basic probability assignment. To formulate the probabilistic constraint of a fuzzy focal element, we decompose it into a set of consonant non-fuzzy focal elements. By generalizing the compatibility relation to a possibility theory, we are able to justify our generalization to Dempster's rule based on possibility distribution. Our generalization not only extends the application of the D-S theory but also illustrates a way that probability theory and fuzzy set theory can be combined to deal with different kinds of uncertain information in AI systems.
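As background for the lower and upper probability property mentioned in the abstract, the classical (non-fuzzy) definitions can be written as follows. This is the standard crisp-set formulation, not the paper's fuzzy generalization; the symbols $m$ (basic probability assignment), $A$, $B$ (subsets of the frame of discernment), and $P$ (any probability measure consistent with $m$) are notation assumed here.

$$
\mathrm{Bel}(A) = \sum_{B \subseteq A} m(B), \qquad
\mathrm{Pl}(A) = \sum_{B \cap A \neq \emptyset} m(B), \qquad
\mathrm{Bel}(A) \le P(A) \le \mathrm{Pl}(A).
$$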

Extending Term Subsumption systems for Uncertainty Management

Mar 27, 2013

Abstract: A major difficulty in developing and maintaining very large knowledge bases originates from the variety of forms in which knowledge is made available to the KB builder. The objective of this research is to bring together two complementary knowledge representation schemes: term subsumption languages, which represent and reason about defining characteristics of concepts, and approximate reasoning models, which deal with uncertain knowledge and data in expert systems. Previous works in this area have primarily focused on probabilistic inheritance. In this paper, we address two other important issues regarding the integration of term subsumption-based systems and approximate reasoning models. First, we outline a general architecture that specifies the interactions between the deductive reasoner of a term subsumption system and an approximate reasoner. Second, we generalize the semantics of terminological language so that terminological knowledge can be used to make plausible inferences. The architecture, combined with the generalized semantics, forms the foundation of a synergistic tight integration of term subsumption systems and approximate reasoning models.
