John Hopfield

Dense Associative Memories with Analog Circuits

Dec 17, 2025

Abstract: The increasing computational demands of modern AI systems have exposed fundamental limitations of digital hardware, driving interest in alternative paradigms for efficient large-scale inference. Dense Associative Memory (DenseAM) is a family of models that offers a flexible framework for representing many contemporary neural architectures, such as transformers and diffusion models, by casting them as dynamical systems evolving on an energy landscape. In this work, we propose a general method for building analog accelerators for DenseAMs and implementing them using electronic RC circuits, crossbar arrays, and amplifiers. We find that our analog DenseAM hardware performs inference in constant time independent of model size. This result highlights an asymptotic advantage of analog DenseAMs over digital numerical solvers that scale at least linearly with the model size. We consider three settings of progressively increasing complexity: XOR, the Hamming (7,4) code, and a simple language model defined on binary variables. We propose analog implementations of these three models and analyze the scaling of inference time, energy consumption, and hardware. Finally, we estimate lower bounds on the achievable time constants imposed by amplifier specifications, suggesting that even conservative existing analog technology can enable inference times on the order of tens to hundreds of nanoseconds. By harnessing the intrinsic parallelism and continuous-time operation of analog circuits, our DenseAM-based accelerator design offers a new avenue for fast and scalable AI hardware.

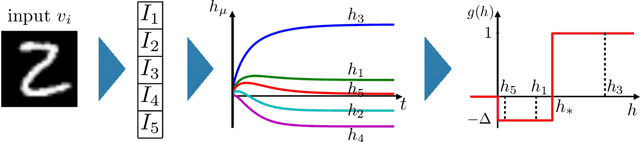

Large Associative Memory Problem in Neurobiology and Machine Learning

Aug 16, 2020

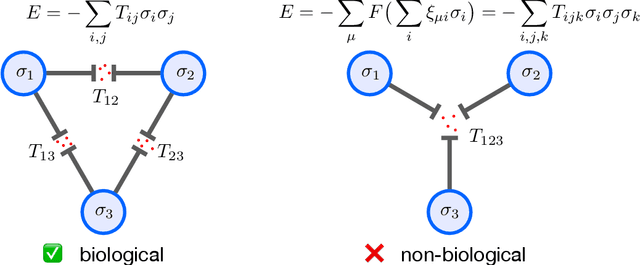

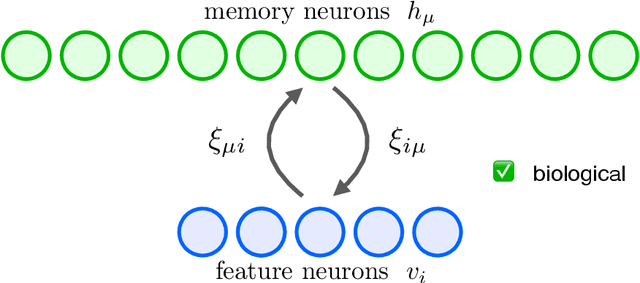

Abstract: Dense Associative Memories or modern Hopfield networks permit storage and reliable retrieval of an exponentially large (in the dimension of feature space) number of memories. At the same time, their naive implementation is non-biological, since it seemingly requires the existence of many-body synaptic junctions between the neurons. We show that these models are effective descriptions of a more microscopic (written in terms of biological degrees of freedom) theory that has additional (hidden) neurons and only requires two-body interactions between them. For this reason our proposed microscopic theory is a valid model of large associative memory with a degree of biological plausibility. The dynamics of our network and its reduced dimensional equivalent both minimize energy (Lyapunov) functions. When certain dynamical variables (hidden neurons) are integrated out from our microscopic theory, one can recover many of the models that were previously discussed in the literature, e.g. the model presented in the "Hopfield Networks is All You Need" paper. We also provide an alternative derivation of the energy function and the update rule proposed in the aforementioned paper and clarify the relationships between various models of this class.
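As a rough illustration of the kind of update rule the abstract refers to, the sketch below iterates the softmax retrieval step commonly associated with "Hopfield Networks is All You Need": the state is replaced by a softmax-weighted average of the stored memories. It is not the paper's microscopic model with hidden neurons. The function names `softmax` and `retrieve`, the parameters `beta` and `steps`, and the three toy memories are all assumptions made for this example.

```python
import math


def softmax(scores):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def retrieve(memories, query, beta=4.0, steps=3):
    """Iterate state <- sum_mu softmax(beta * <memory_mu, state>)_mu * memory_mu.

    `beta` (inverse temperature) and `steps` are illustrative choices.
    """
    state = list(query)
    for _ in range(steps):
        # Similarity of the current state to each stored memory.
        scores = [
            beta * sum(m_i * s_i for m_i, s_i in zip(mem, state))
            for mem in memories
        ]
        weights = softmax(scores)
        # New state: weighted average of the memories.
        state = [
            sum(w * mem[i] for w, mem in zip(weights, memories))
            for i in range(len(state))
        ]
    return state


# Hypothetical toy memories with entries in {-1, +1}.
memories = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
    [-1, -1, 1, 1, 1, 1, -1, -1],
]
noisy = [1, 1, 1, -1, -1, -1, -1, -1]  # first memory with one entry flipped
recovered = [1 if x > 0 else -1 for x in retrieve(memories, noisy)]
```

With these toy values the noisy query overlaps most strongly with the first memory, so the softmax weights concentrate on it and thresholding the final state returns that pattern. Larger `beta` sharpens the weighting toward the single best-matching memory.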

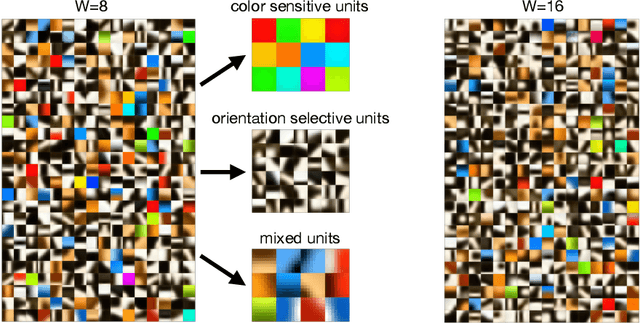

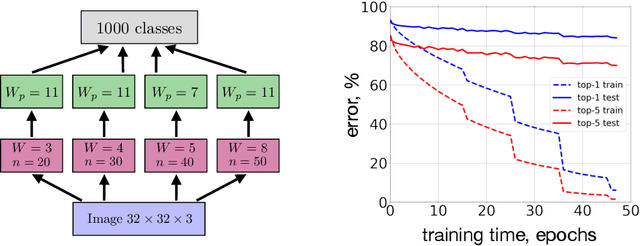

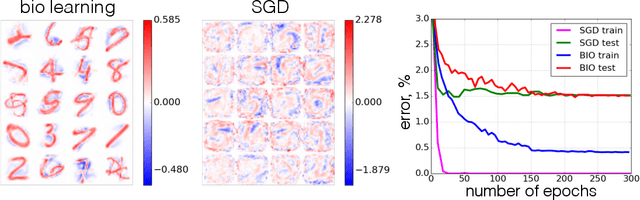

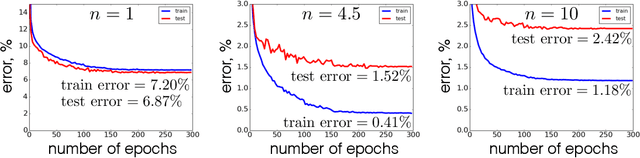

Local Unsupervised Learning for Image Analysis

Aug 14, 2019

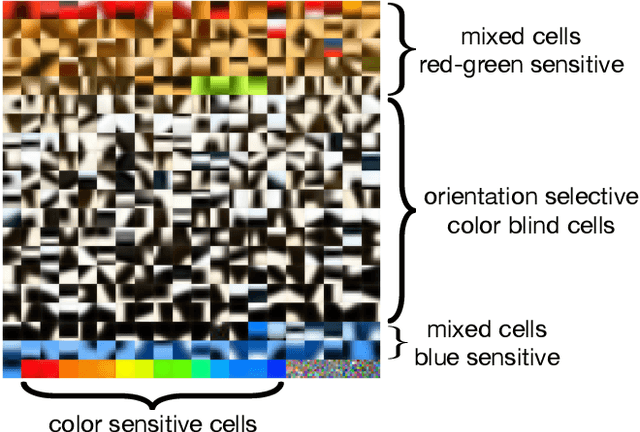

Abstract: Local Hebbian learning is believed to be inferior in performance to end-to-end training using a backpropagation algorithm. We question this popular belief by designing a local algorithm that can learn convolutional filters at scale on large image datasets. These filters combined with patch normalization and very steep non-linearities result in a good classification accuracy for shallow networks trained locally, as opposed to end-to-end. The filters learned by our algorithm contain both orientation selective units and unoriented color units, resembling the responses of pyramidal neurons located in the cytochrome oxidase 'interblob' and 'blob' regions in the primary visual cortex of primates. It is shown that convolutional networks with patch normalization significantly outperform standard convolutional networks on the task of recovering the original classes when shadows are superimposed on top of standard CIFAR-10 images. Patch normalization approximates the retinal adaptation to the mean light intensity, important for human vision. We also demonstrate a successful transfer of learned representations between CIFAR-10 and ImageNet 32x32 datasets. All these results taken together hint at the possibility that local unsupervised training might be a powerful tool for learning general representations (without specifying the task) directly from unlabeled data.

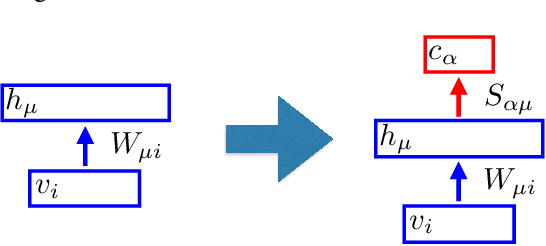

Unsupervised Learning by Competing Hidden Units

Jun 26, 2018

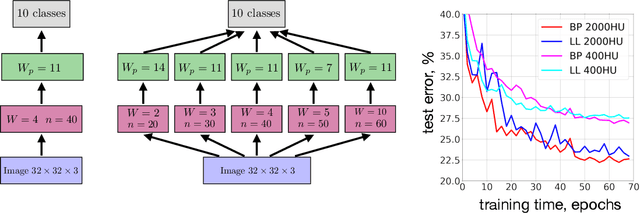

Abstract: It is widely believed that the backpropagation algorithm is essential for learning good feature detectors in early layers of artificial neural networks, so that these detectors are useful for the task performed by the higher layers of that neural network. At the same time, the traditional form of backpropagation is biologically implausible. In the present paper we propose an unusual learning rule, which has a degree of biological plausibility, and which is motivated by Hebb's idea that change of the synapse strength should be local, i.e. should depend only on the activities of the pre- and postsynaptic neurons. We design a learning algorithm that utilizes global inhibition in the hidden layer, and is capable of learning early feature detectors in a completely unsupervised way. These learned lower layer feature detectors can be used to train higher layer weights in a usual supervised way so that the performance of the full network is comparable to the performance of standard feedforward networks trained end-to-end with a backpropagation algorithm.
