Johannes Meuer

Field-Space Autoencoder for Scalable Climate Emulators

Jan 21, 2026

Abstract: Kilometer-scale Earth system models are essential for capturing local climate change. However, these models are computationally expensive and produce petabyte-scale outputs, which limits their utility for applications such as probabilistic risk assessment. Here, we present the Field-Space Autoencoder, a scalable climate emulation framework based on a spherical compression model that overcomes these challenges. By utilizing Field-Space Attention, the model efficiently operates on native climate model output and therefore avoids geometric distortions caused by forcing spherical data onto Euclidean grids. This approach preserves physical structures significantly better than convolutional baselines. By producing a structured compressed field, it serves as a good baseline for downstream generative emulation. In addition, the model can perform zero-shot super-resolution that maps low-resolution large ensembles and scarce high-resolution data into a shared representation. We train a generative diffusion model on these compressed fields. The model can simultaneously learn internal variability from abundant low-resolution data and fine-scale physics from sparse high-resolution data. Our work bridges the gap between the high volume of low-resolution ensemble statistics and the scarcity of high-resolution physical detail.

Field-Space Attention for Structure-Preserving Earth System Transformers

Dec 23, 2025

Abstract: Accurate and physically consistent modeling of Earth system dynamics requires machine-learning architectures that operate directly on continuous geophysical fields and preserve their underlying geometric structure. Here we introduce Field-Space attention, a mechanism for Earth system Transformers that computes attention in the physical domain rather than in a learned latent space. By maintaining all intermediate representations as continuous fields on the sphere, the architecture enables interpretable internal states and facilitates the enforcement of scientific constraints. The model employs a fixed, non-learned multiscale decomposition and learns structure-preserving deformations of the input field, allowing coherent integration of coarse and fine-scale information while avoiding the optimization instabilities characteristic of standard single-scale Vision Transformers. Applied to global temperature super-resolution on a HEALPix grid, Field-Space Transformers converge more rapidly and stably than conventional Vision Transformers and U-Net baselines, while requiring substantially fewer parameters. The explicit preservation of field structure throughout the network allows physical and statistical priors to be embedded directly into the architecture, yielding improved fidelity and reliability in data-driven Earth system modeling. These results position Field-Space Attention as a compact, interpretable, and physically grounded building block for next-generation Earth system prediction and generative modeling frameworks.

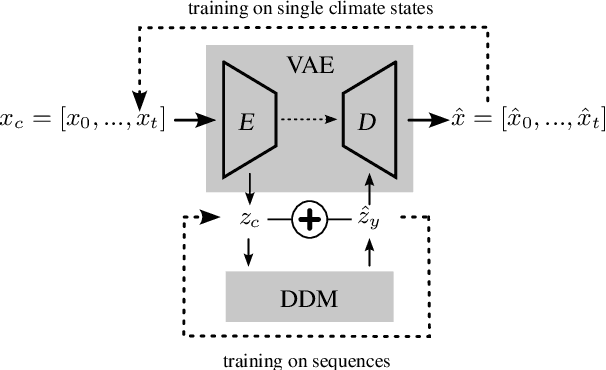

Latent Diffusion Model for Generating Ensembles of Climate Simulations

Jul 02, 2024

Abstract: Obtaining accurate estimates of uncertainty in climate scenarios often requires generating large ensembles of high-resolution climate simulations, a computationally expensive and memory-intensive process. To address this challenge, we train a novel generative deep learning approach on extensive sets of climate simulations. The model consists of two components: a variational autoencoder for dimensionality reduction and a denoising diffusion probabilistic model that generates multiple ensemble members. We validate our model on the Max Planck Institute Grand Ensemble and show that it achieves good agreement with the original ensemble in terms of variability. By leveraging the latent space representation, our model can rapidly generate large ensembles on-the-fly with minimal memory requirements, which can significantly improve the efficiency of uncertainty quantification in climate simulations.
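
As a rough illustration of the second component, the sketch below applies the standard forward (noising) step of a denoising diffusion probabilistic model to a latent vector. It is not the authors' implementation: the linear noise schedule, the number of steps, the four-dimensional latent `z0`, and all function names are assumptions chosen for illustration, and the encoder that would produce `z0` is omitted.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (an assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """Running product alpha_bar_t = prod_{s<=t} (1 - beta_s)."""
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def noise_latent(z0, t, alpha_bars, rng):
    """Draw z_t from q(z_t | z_0) = N(sqrt(alpha_bar_t) z_0, (1 - alpha_bar_t) I).

    Returns the noised latent and the Gaussian noise that was added; a
    denoising network would be trained to predict that noise from z_t and t.
    """
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in z0]
    zt = [math.sqrt(a) * z + math.sqrt(1.0 - a) * e for z, e in zip(z0, eps)]
    return zt, eps


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))

# Hypothetical latent code; in practice this would come from the encoder.
z0 = [0.5, -1.2, 0.3, 2.0]
zt, eps = noise_latent(z0, 500, alpha_bars, rng)
```

Because the diffusion process runs on the compressed latent rather than on full-resolution fields, each sampled ensemble member only needs a single decoder pass at the end, which is consistent with the low memory footprint the abstract describes.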
