Johannes Kopp

Label-Efficient Semantic Segmentation of LiDAR Point Clouds in Adverse Weather Conditions

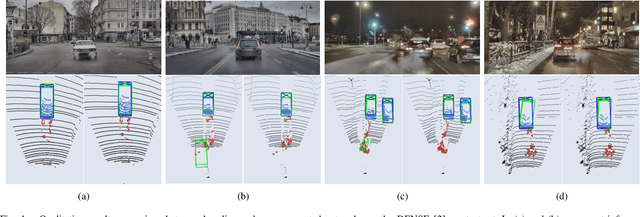

Jun 14, 2024

Abstract:Adverse weather conditions can severely affect the performance of LiDAR sensors by introducing unwanted noise in the measurements. Therefore, differentiating between noise and valid points is crucial for the reliable use of these sensors. Current approaches for detecting adverse weather points require large amounts of labeled data, which can be difficult and expensive to obtain. This paper proposes a label-efficient approach to segment LiDAR point clouds in adverse weather. We develop a framework that uses few-shot semantic segmentation to learn to segment adverse weather points from only a few labeled examples. Then, we use a semi-supervised learning approach to generate pseudo-labels for unlabelled point clouds, significantly increasing the amount of training data without requiring any additional labeling. We also integrate good weather data in our training pipeline, allowing for high performance in both good and adverse weather conditions. Results on real and synthetic datasets show that our method performs well in detecting snow, fog, and spray. Furthermore, we achieve competitive performance against fully supervised methods while using only a fraction of labeled data.
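The abstract does not say how pseudo-labels are selected, so the following is only a minimal sketch of one common semi-supervised recipe: keep a model's per-point prediction as a pseudo-label when its confidence exceeds a threshold, and mark the remaining points as ignored. The function name `make_pseudo_labels`, the threshold `min_confidence`, and the `ignore_index` value are hypothetical and not taken from the paper.

```python
import numpy as np

def make_pseudo_labels(probs: np.ndarray,
                       min_confidence: float = 0.9,
                       ignore_index: int = -1) -> np.ndarray:
    """Turn per-point class probabilities (N, C) into pseudo-labels (N,).

    Assumption: points whose top class probability is below
    `min_confidence` are excluded from training via `ignore_index`.
    """
    labels = probs.argmax(axis=1)                  # most likely class per point
    confident = probs.max(axis=1) >= min_confidence
    return np.where(confident, labels, ignore_index)

# Three points, two classes (e.g. 0 = valid point, 1 = weather noise).
probs = np.array([[0.95, 0.05],
                  [0.60, 0.40],
                  [0.08, 0.92]])
pseudo = make_pseudo_labels(probs)
```

In this toy case the second point is left unlabeled because neither class reaches the threshold, which is how such a scheme would avoid training on uncertain predictions.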

SemanticSpray++: A Multimodal Dataset for Autonomous Driving in Wet Surface Conditions

Jun 14, 2024

Abstract:Autonomous vehicles rely on camera, LiDAR, and radar sensors to navigate the environment. Adverse weather conditions like snow, rain, and fog are known to be problematic for both camera and LiDAR-based perception systems. Currently, it is difficult to evaluate the performance of these methods due to the lack of publicly available datasets containing multimodal labeled data. To address this limitation, we propose the SemanticSpray++ dataset, which provides labels for camera, LiDAR, and radar data of highway-like scenarios in wet surface conditions. In particular, we provide 2D bounding boxes for the camera image, 3D bounding boxes for the LiDAR point cloud, and semantic labels for the radar targets. By labeling all three sensor modalities, the SemanticSpray++ dataset offers a comprehensive test bed for analyzing the performance of different perception methods when vehicles travel on wet surface conditions. Together with comprehensive label statistics, we also evaluate multiple baseline methods across different tasks and analyze their performances. The dataset will be available at https://semantic-spray-dataset.github.io .

Simultaneous Clutter Detection and Semantic Segmentation of Moving Objects for Automotive Radar Data

Nov 14, 2023

Abstract:The unique properties of radar sensors, such as their robustness to adverse weather conditions, make them an important part of the environment perception system of autonomous vehicles. One of the first steps during the processing of radar point clouds is often the detection of clutter, i.e. erroneous points that do not correspond to real objects. Another common objective is the semantic segmentation of moving road users. These two problems are handled strictly separately from each other in the literature. The employed neural networks are always focused entirely on only one of the tasks. In contrast to this, we examine ways to solve both tasks at the same time with a single jointly used model. In addition to a new augmented multi-head architecture, we also devise a method to represent a network's predictions for the two tasks with only one output value. This novel approach allows us to solve the tasks simultaneously with the same inference time as a conventional task-specific model. In an extensive evaluation, we show that our setup is highly effective and outperforms every existing network for semantic segmentation on the RadarScenes dataset.

Towards Robust 3D Object Detection In Rainy Conditions

Oct 05, 2023

Abstract:LiDAR sensors are used in autonomous driving applications to accurately perceive the environment. However, they are affected by adverse weather conditions such as snow, fog, and rain. These everyday phenomena introduce unwanted noise into the measurements, severely degrading the performance of LiDAR-based perception systems. In this work, we propose a framework for improving the robustness of LiDAR-based 3D object detectors against road spray. Our approach uses a state-of-the-art adverse weather detection network to filter out spray from the LiDAR point cloud, which is then used as input for the object detector. In this way, the detected objects are less affected by the adverse weather in the scene, resulting in a more accurate perception of the environment. In addition to adverse weather filtering, we explore the use of radar targets to further filter false positive detections. Tests on real-world data show that our approach improves the robustness to road spray of several popular 3D object detectors.

LS-VOS: Identifying Outliers in 3D Object Detections Using Latent Space Virtual Outlier Synthesis

Oct 02, 2023

Abstract:LiDAR-based 3D object detectors have achieved unprecedented speed and accuracy in autonomous driving applications. However, similar to other neural networks, they are often biased toward high-confidence predictions or return detections where no real object is present. These types of detections can lead to a less reliable environment perception, severely affecting the functionality and safety of autonomous vehicles. We address this problem by proposing LS-VOS, a framework for identifying outliers in 3D object detections. Our approach builds on the idea of Virtual Outlier Synthesis (VOS), which incorporates outlier knowledge during training, enabling the model to learn more compact decision boundaries. In particular, we propose a new synthesis approach that relies on the latent space of an auto-encoder network to generate outlier features with a parametrizable degree of similarity to in-distribution features. In extensive experiments, we show that our approach improves the outlier detection capabilities of a state-of-the-art object detector while maintaining high 3D object detection performance.

Energy-based Detection of Adverse Weather Effects in LiDAR Data

May 26, 2023

Abstract:Autonomous vehicles rely on LiDAR sensors to perceive the environment. Adverse weather conditions like rain, snow, and fog negatively affect these sensors, reducing their reliability by introducing unwanted noise in the measurements. In this work, we tackle this problem by proposing a novel approach for detecting adverse weather effects in LiDAR data. We reformulate this problem as an outlier detection task and use an energy-based framework to detect outliers in point clouds. More specifically, our method learns to associate low energy scores with inlier points and high energy scores with outliers allowing for robust detection of adverse weather effects. In extensive experiments, we show that our method performs better in adverse weather detection and has higher robustness to unseen weather effects than previous state-of-the-art methods. Furthermore, we show how our method can be used to perform simultaneous outlier detection and semantic segmentation. Finally, to help expand the research field of LiDAR perception in adverse weather, we release the SemanticSpray dataset, which contains labeled vehicle spray data in highway-like scenarios. The dataset is available at http://dx.doi.org/10.18725/OPARU-48815 .
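The abstract does not give the energy function itself. As a rough illustration of the general idea, the sketch below uses the standard free-energy score from the energy-based outlier detection literature, the negative log-sum-exp of per-point class logits, and flags points whose energy exceeds a threshold. The function names and the threshold value are hypothetical, and the paper's actual formulation and training objective may differ.

```python
import numpy as np

def energy_score(logits: np.ndarray) -> np.ndarray:
    """Per-point free energy E = -log(sum_k exp(logit_k)) for logits (N, C).

    Computed with the max-subtraction trick for numerical stability.
    """
    m = logits.max(axis=1, keepdims=True)
    return -(m[:, 0] + np.log(np.exp(logits - m).sum(axis=1)))

def flag_outliers(logits: np.ndarray, threshold: float) -> np.ndarray:
    """Mark points as outliers when their energy is above `threshold`.

    Assumption: low energy means inlier, high energy means outlier,
    matching the convention stated in the abstract.
    """
    return energy_score(logits) > threshold

# One confidently classified point and one with flat logits.
logits = np.array([[10.0, 0.0, 0.0],
                   [0.0, 0.0, 0.0]])
energies = energy_score(logits)
outliers = flag_outliers(logits, threshold=-5.0)
```

Here the confidently classified point gets a much lower energy than the point with flat logits, so only the latter is flagged as an outlier.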

Tackling Clutter in Radar Data -- Label Generation and Detection Using PointNet++

Mar 16, 2023

Abstract:Radar sensors employed for environment perception, e.g. in autonomous vehicles, output a lot of unwanted clutter. These points, for which no corresponding real objects exist, are a major source of errors in following processing steps like object detection or tracking. We therefore present two novel neural network setups for identifying clutter. The input data, network architectures and training configuration are adjusted specifically for this task. Special attention is paid to the downsampling of point clouds composed of multiple sensor scans. In an extensive evaluation, the new setups display substantially better performance than existing approaches. Because there is no suitable public data set in which clutter is annotated, we design a method to automatically generate the respective labels. By applying it to existing data with object annotations and releasing its code, we effectively create the first freely available radar clutter data set representing real-world driving scenarios. Code and instructions are accessible at www.github.com/kopp-j/clutter-ds.

Detection of Condensed Vehicle Gas Exhaust in LiDAR Point Clouds

Jul 11, 2022

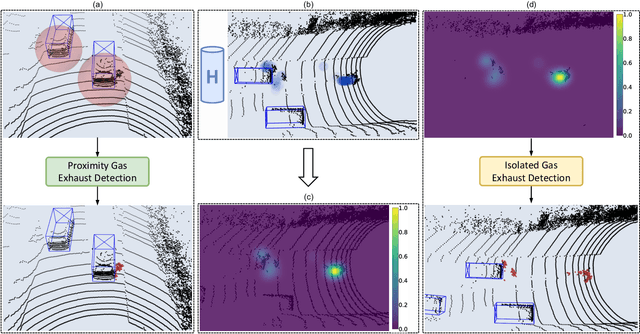

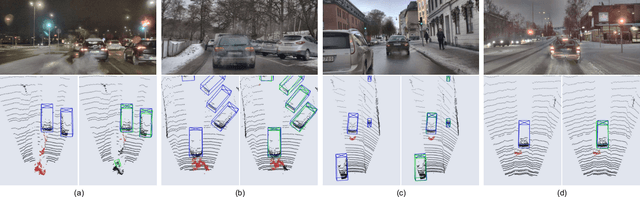

Abstract:LiDAR sensors used in autonomous driving applications are negatively affected by adverse weather conditions. One common but understudied effect is the condensation of vehicle gas exhaust in cold weather. This everyday phenomenon can severely impact the quality of LiDAR measurements, resulting in a less accurate environment perception by creating artifacts like ghost object detections. In the literature, the semantic segmentation of adverse weather effects like rain and fog is achieved using learning-based approaches. However, such methods require large sets of labeled data, which can be extremely expensive and laborious to get. We address this problem by presenting a two-step approach for the detection of condensed vehicle gas exhaust. First, we identify for each vehicle in a scene its emission area and detect gas exhaust if present. Then, isolated clouds are detected by modeling through time the regions of space where gas exhaust is likely to be present. We test our method on real urban data, showing that our approach can reliably detect gas exhaust in different scenarios, making it appealing for offline pre-labeling and online applications such as ghost object detection.

Robust 3D Object Detection in Cold Weather Conditions

May 24, 2022

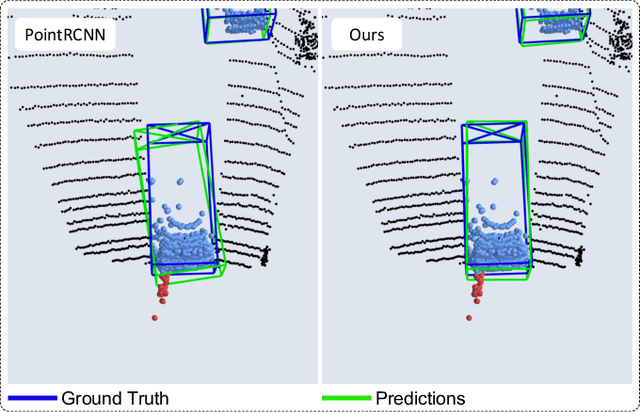

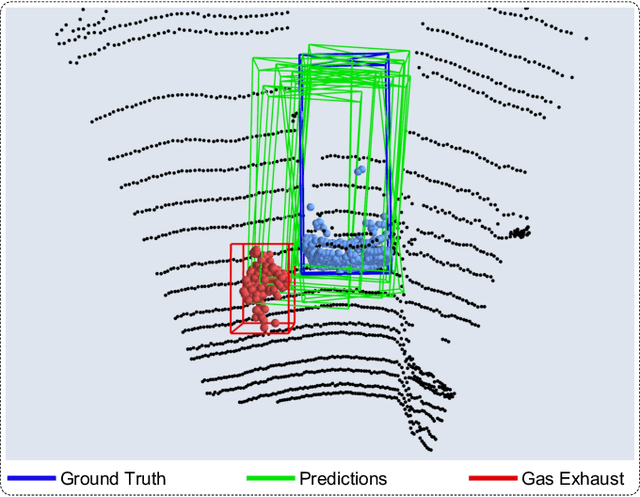

Abstract:Adverse weather conditions can negatively affect LiDAR-based object detectors. In this work, we focus on the phenomenon of vehicle gas exhaust condensation in cold weather conditions. This everyday effect can influence the estimation of object sizes and orientations and introduce ghost object detections, compromising the reliability of state-of-the-art object detectors. We propose to solve this problem by using data augmentation and a novel training loss term. To effectively train deep neural networks, a large set of labeled data is needed. In the case of adverse weather conditions, this process can be extremely laborious and expensive. We address this issue in two steps: First, we present a gas exhaust data generation method based on 3D surface reconstruction and sampling which allows us to generate large sets of gas exhaust clouds from a small pool of labeled data. Second, we introduce a point cloud augmentation process that can be used to add gas exhaust to datasets recorded in good weather conditions. Finally, we formulate a new training loss term that leverages the augmented point cloud to increase object detection robustness by penalizing predictions that include noise. In contrast to other works, our method can be used with both grid-based and point-based detectors. Moreover, since our approach does not require any network architecture changes, inference times remain unchanged. Experimental results on real data show that our proposed method greatly increases robustness to gas exhaust and noisy data.

Fast Rule-Based Clutter Detection in Automotive Radar Data

Aug 27, 2021

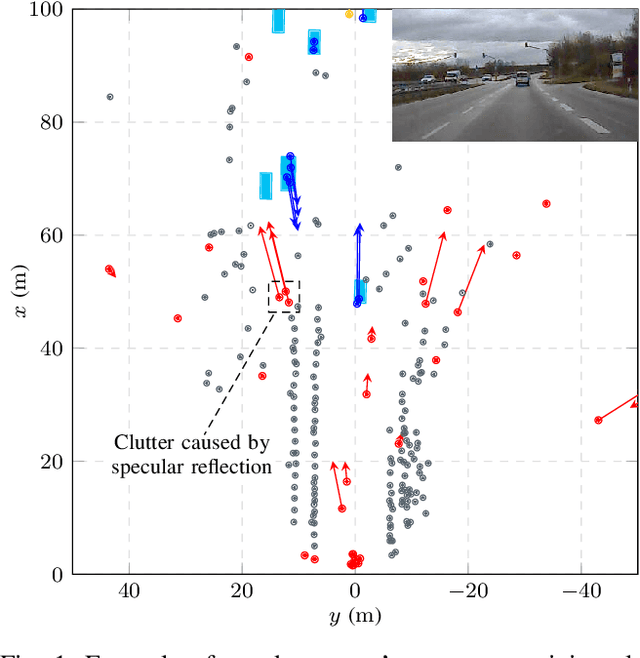

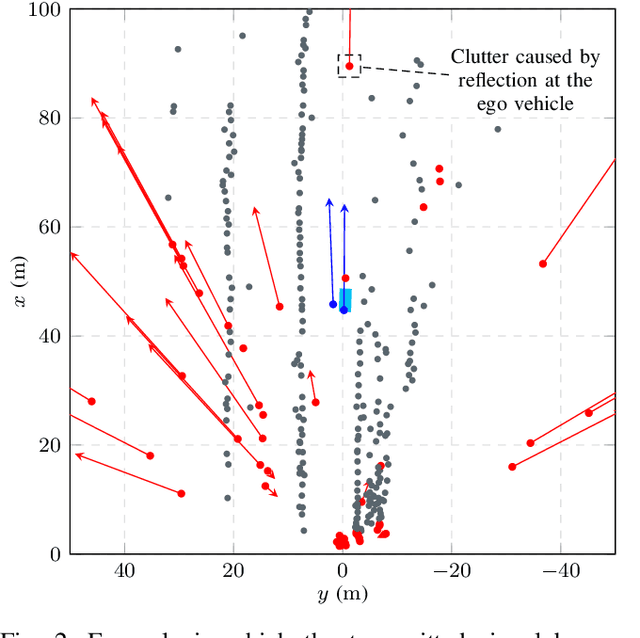

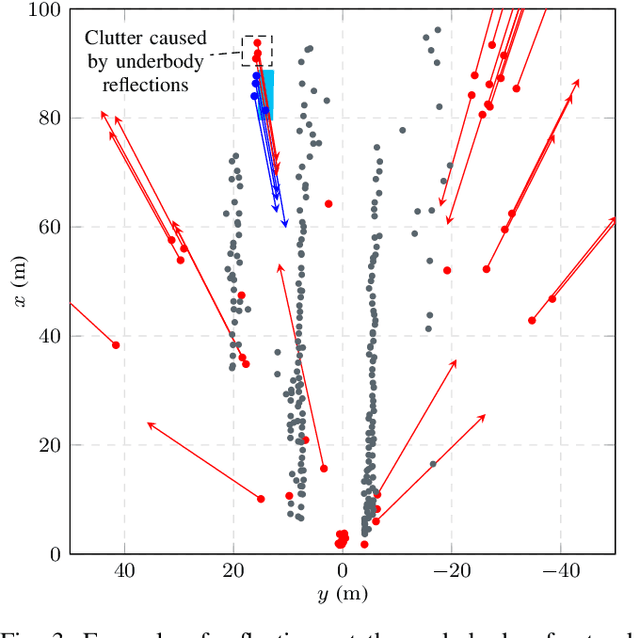

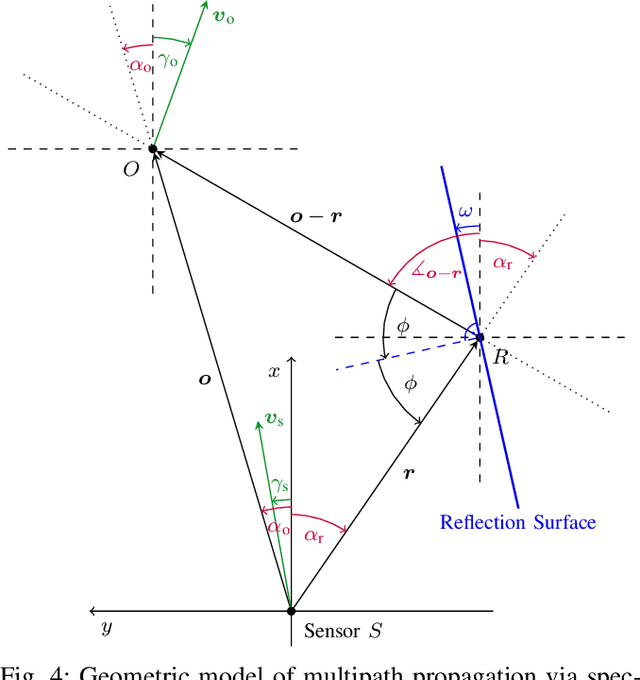

Abstract:Automotive radar sensors output a lot of unwanted clutter or ghost detections, whose position and velocity do not correspond to any real object in the sensor's field of view. This poses a substantial challenge for environment perception methods like object detection or tracking. Especially problematic are clutter detections that occur in groups or at similar locations in multiple consecutive measurements. In this paper, a new algorithm for identifying such erroneous detections is presented. It is mainly based on the modeling of specific commonly occurring wave propagation paths that lead to clutter. In particular, the three effects explicitly covered are reflections at the underbody of a car or truck, signals traveling back and forth between the vehicle on which the sensor is mounted and another object, and multipath propagation via specular reflection. The latter often occurs near guardrails, concrete walls or similar reflective surfaces. Each of these effects is described both theoretically and regarding a method for identifying the corresponding clutter detections. Identification is done by analyzing detections generated from a single sensor measurement only. The final algorithm is evaluated on recordings of real extra-urban traffic. For labeling, a semi-automatic process is employed. The results are promising, both in terms of performance and regarding the very low execution time. Typically, a large part of clutter is found, while only a small ratio of detections corresponding to real objects are falsely classified by the algorithm.
