Joel Ikels

Robotic Control Using Model Based Meta Adaption

Oct 07, 2022

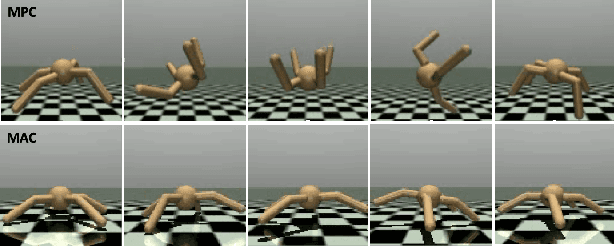

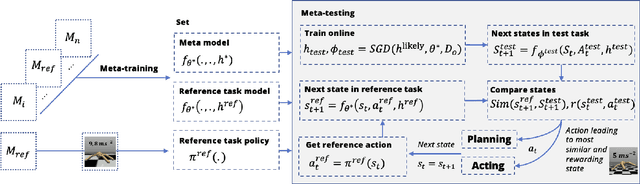

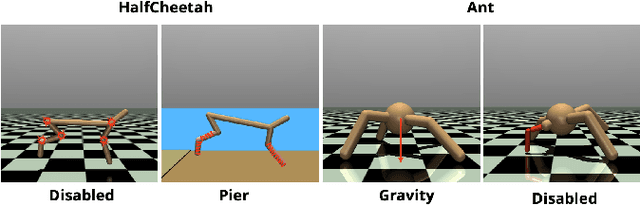

Abstract: In machine learning, meta-learning methods aim to adapt quickly to unknown tasks by using prior knowledge. Model-based meta-reinforcement learning combines reinforcement learning via world models with Meta Reinforcement Learning (MRL) for increased sample efficiency. However, adaptation to unknown tasks does not always result in preferable agent behavior. This paper introduces a new Meta Adaptation Controller (MAC) that employs MRL to transfer a preferred robot behavior from one task to many similar tasks. To do this, MAC aims to find the actions an agent has to take in a new task to reach an outcome similar to the one it reached in a learned task. As a result, the agent adapts quickly to changes in the dynamics and behaves appropriately, without the need to construct a reward function that enforces the preferred behavior.
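The abstract does not spell out how MAC searches for actions, so the following is only a minimal sketch of the general idea of outcome matching, not the paper's method. It assumes two hypothetical dynamics models (`reference_model` for the learned task, `new_task_model` for the changed task) and a simple grid search over candidate actions; all names, the toy dynamics, and the search strategy are illustrative assumptions.

```python
import numpy as np

def reference_model(state, action):
    # Hypothetical dynamics of the learned task: actions act at full strength.
    return state + 1.0 * action

def new_task_model(state, action):
    # Hypothetical dynamics of the new task: the actuator is half as strong.
    return state + 0.5 * action

def match_outcome(state, reference_action, candidates):
    """Pick the candidate action whose predicted outcome in the new task
    is closest to the outcome the reference action had in the learned task."""
    target = reference_model(state, reference_action)
    predicted = np.array([new_task_model(state, a) for a in candidates])
    errors = np.linalg.norm(predicted - target, axis=1)
    return candidates[int(np.argmin(errors))]

state = np.array([0.0, 0.0])
reference_action = np.array([0.2, -0.1])  # preferred behavior in the learned task

# Assumed search space: a coarse grid over a 2-D action space.
grid = np.linspace(-1.0, 1.0, 21)
candidates = np.array([[a, b] for a in grid for b in grid])

adapted_action = match_outcome(state, reference_action, candidates)
print(adapted_action)
```

In this toy setting the selected action is roughly twice the reference action, which compensates for the weaker actuator. No reward function is involved: the only objective is the distance between predicted outcomes.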
