Joanna Komorniczak

Synthetic Non-stationary Data Streams for Recognition of the Unknown

May 19, 2025

Abstract: The problem of data non-stationarity is commonly addressed in data stream processing. In a dynamic environment, methods should continuously be ready to analyze time-varying data -- hence, they should enable incremental training and respond to concept drifts. An equally important variability typical for non-stationary data stream environments is the emergence of new, previously unknown classes. Often, methods focus on one of these two phenomena -- detection of concept drifts or detection of novel classes -- while both difficulties can be observed in data streams. Additionally, concerning previously unknown observations, the topic of an open set of classes has become particularly important in recent years, where the goal of methods is to efficiently classify within known classes and recognize objects outside the model's competence. This article presents a strategy for synthetic data stream generation in which both concept drifts and the emergence of new classes representing unknown objects occur. The presented research shows how unsupervised drift detectors address the task of detecting novelty and concept drifts and demonstrates how the generated data streams can be utilized in the open set recognition task.
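The idea of a stream that contains both a concept drift and an emerging class can be illustrated with a minimal sketch. This is not the generator proposed in the article: the function name `generate_stream`, the Gaussian class model, and all parameters are assumptions made only for illustration.

```python
import numpy as np

def generate_stream(n_chunks=30, chunk_size=100, n_features=2,
                    drift_chunk=10, novelty_chunk=20, seed=0):
    """Yield (X, y) chunks; hypothetical toy generator, not the article's method."""
    rng = np.random.default_rng(seed)
    # Two known classes, each modeled as a unit-variance Gaussian cluster
    means = {0: np.zeros(n_features), 1: np.full(n_features, 3.0)}
    for i in range(n_chunks):
        if i == drift_chunk:
            # Sudden concept drift: the centers of the known classes move
            means = {c: m + rng.normal(scale=2.0, size=n_features)
                     for c, m in means.items()}
        if i == novelty_chunk:
            # Novelty: a previously unseen class starts to appear
            means[2] = np.full(n_features, -4.0)
        classes = np.array(sorted(means))
        y = rng.choice(classes, size=chunk_size)
        X = np.stack([rng.normal(means[int(c)], 1.0) for c in y])
        yield X, y

chunks = list(generate_stream())
```

Each element of `chunks` is one batch of the stream, so a drift detector or an open set classifier can be evaluated chunk by chunk, with the known positions `drift_chunk` and `novelty_chunk` serving as ground truth.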

Describing Nonstationary Data Streams in Frequency Domain

Feb 07, 2025

Abstract: Concept drift is among the primary challenges faced by data stream processing methods. Drift detection strategies, designed to counteract the negative consequences of such changes, often rely on analyzing the problem metafeatures. This work presents the Frequency Filtering Metadescriptor -- a tool for characterizing the data stream that searches for the informative frequency components visible in the sample's feature vector. The frequencies are filtered according to their variance across all available data batches. The presented solution is capable of generating a metadescription of the data stream, separating chunks into groups describing specific concepts on its basis, and visualizing the frequencies in the original spatial domain. The experimental analysis compared the proposed solution with two state-of-the-art strategies and with the PCA baseline in the post-hoc concept identification task. The research is followed by the identification of concepts in real-world data streams. The generalization in the frequency domain adopted in the proposed solution makes it possible to capture complex feature dependencies as a reduced number of frequency components, while maintaining the semantic meaning of the data.
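The filtering step described in the abstract (keeping the frequency components whose values vary most across batches) can be sketched roughly as follows. This is an illustrative approximation, not the published implementation: the function name `frequency_metadescriptor`, the use of a one-dimensional FFT along the feature axis, and the averaging over samples are all assumptions.

```python
import numpy as np

def frequency_metadescriptor(batches, n_components=4):
    """Hypothetical sketch: batches has shape (n_batches, n_samples, n_features)."""
    batches = np.asarray(batches, dtype=float)
    # Magnitude spectrum of every sample's feature vector
    spectra = np.abs(np.fft.rfft(batches, axis=2))
    # One averaged spectrum per batch: (n_batches, n_frequencies)
    per_batch = spectra.mean(axis=1)
    # Keep the frequencies with the highest variance across batches
    variance = per_batch.var(axis=0)
    selected = np.sort(np.argsort(variance)[-n_components:])
    return per_batch[:, selected], selected

# Toy stream: the second half of the batches gains a sinusoidal pattern
rng = np.random.default_rng(0)
n_batches, n_samples, n_features = 20, 50, 16
t = np.arange(n_features)
batches = rng.normal(scale=0.1, size=(n_batches, n_samples, n_features))
batches[10:] += np.sin(2 * np.pi * 3 * t / n_features)

descriptor, selected = frequency_metadescriptor(batches, n_components=2)
```

In this toy setup the component at frequency bin 3 changes sharply between the two halves of the stream, so it is among the selected components, and the rows of `descriptor` could then be clustered to group batches by concept.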

Structuring the Processing Frameworks for Data Stream Evaluation and Application

Nov 11, 2024

Abstract: The following work addresses the problem of frameworks for data stream processing that can be used to evaluate solutions in an environment that resembles real-world applications. The definition of structured frameworks stems from a need to reliably evaluate data stream classification methods, considering the constraints of delayed and limited label access. Current experimental evaluation often relies without restraint on the assumption of complete and immediate access to labels, both to monitor the recognition quality and to adapt the methods to the changing concepts. The problem is approached by reviewing currently described methods and techniques for data stream processing and verifying their outcomes in a simulated environment. The result of the work is a proposed taxonomy of data stream processing frameworks, showing the linkage between drift detection and classification methods while considering the natural phenomenon of label delay.

Unsupervised Concept Drift Detection based on Parallel Activations of Neural Network

Apr 11, 2024

Abstract: Practical applications of artificial intelligence increasingly often have to deal with the streaming properties of real data, which, considering the time factor, are subject to phenomena such as periodicity and more or less chaotic degeneration, resulting directly in concept drifts. Modern concept drift detectors almost always assume immediate access to labels, which, due to their cost, limited availability, and possible delay, has been shown to be unrealistic. This work proposes an unsupervised Parallel Activations Drift Detector, utilizing the outputs of an untrained neural network, and presents its key design elements, intuitions about processing properties, and a pool of computer experiments demonstrating its competitiveness with state-of-the-art methods.

Taking Class Imbalance Into Account in Open Set Recognition Evaluation

Feb 09, 2024

Abstract: In recent years, Deep Neural Network-based systems have not only been increasing in popularity but have also been receiving growing user trust. However, due to the closed-world assumption of such systems, they cannot recognize samples from unknown classes and often assign an incorrect label with high confidence. The presented work looks at the evaluation of methods for Open Set Recognition, focusing on the impact of class imbalance, especially in the dichotomy between known and unknown samples. As an outcome of the problem analysis, we present a set of guidelines for the evaluation of methods in this field.

torchosr -- a PyTorch extension package for Open Set Recognition models evaluation in Python

May 16, 2023

Abstract: The article presents torchosr, a Python package compatible with the PyTorch library, offering tools and methods dedicated to Open Set Recognition in Deep Neural Networks. The package offers two state-of-the-art methods in the field, a set of functions for handling base sets and generating derived sets for the Open Set Recognition task (where some classes are considered unknown and used only in the testing process), and additional tools to handle datasets and methods. The main goal of the package is to simplify and promote correct experimental evaluation, where experiments are carried out on a large number of derived sets with various Openness values and class-to-category assignments. The authors hope that the state-of-the-art methods available in the package will become a source of correct and open-source implementations of the relevant solutions in the domain.

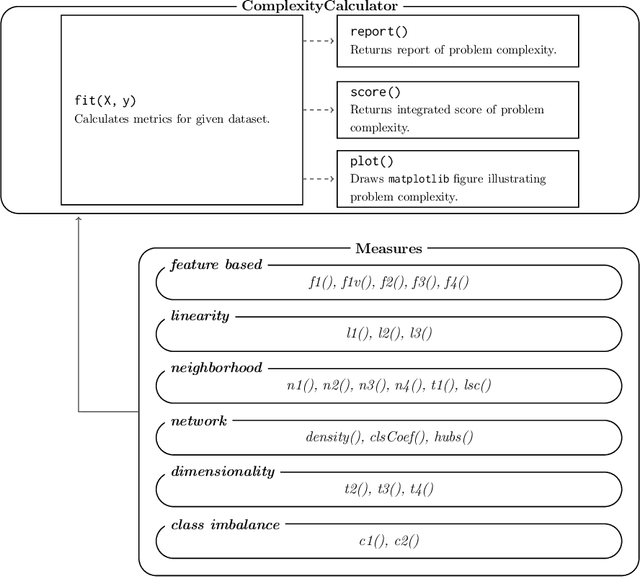

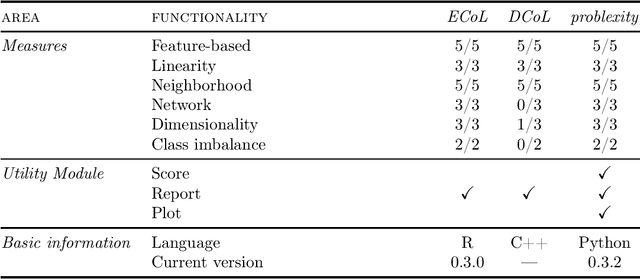

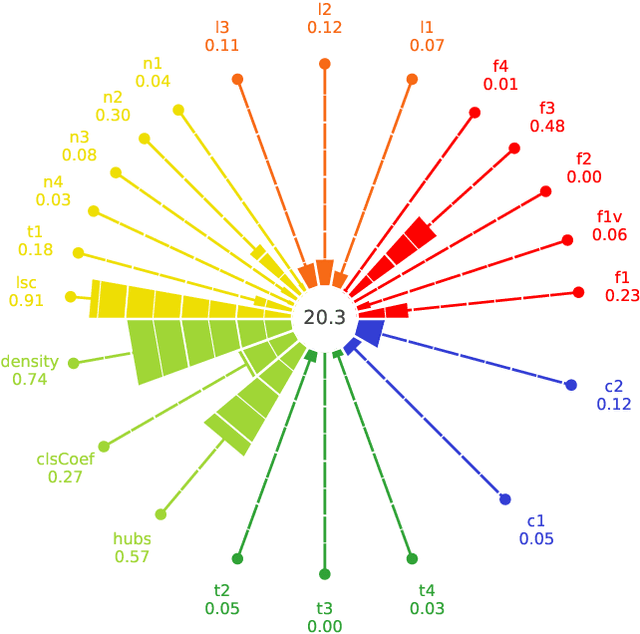

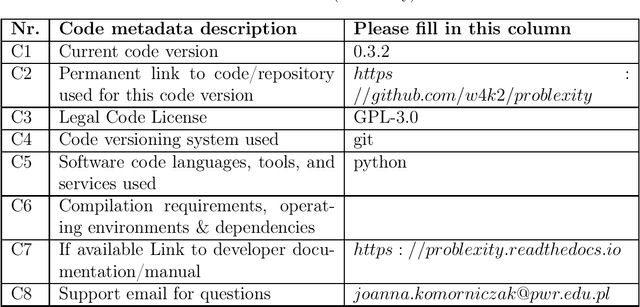

problexity -- an open-source Python library for binary classification problem complexity assessment

Jul 14, 2022

Abstract: The classification problem's complexity assessment is an essential element of many topics in the supervised learning domain. It plays a significant role in meta-learning -- becoming the basis for determining meta-attributes or multi-criteria optimization -- allowing the evaluation of the training set resampling without needing to rebuild the recognition model. The tools currently available to the academic community for calculating problem complexity measures exist only as libraries for the C++ and R languages. This paper describes a software module that allows for the estimation of 22 complexity measures in the Python language -- compatible with the scikit-learn programming interface -- allowing for the implementation of research using them in the most popular programming environment of the machine learning community.
