João Ascenso

Fine-Grained HDR Image Quality Assessment From Noticeably Distorted to Very High Fidelity

Jun 14, 2025

Abstract: High dynamic range (HDR) and wide color gamut (WCG) technologies significantly improve color reproduction compared to standard dynamic range (SDR) and standard color gamuts, resulting in more accurate, richer, and more immersive images. However, HDR increases data demands, posing challenges for bandwidth efficiency and compression techniques. Advances in compression and display technologies require more precise image quality assessment, particularly in the high-fidelity range where perceptual differences are subtle. To address this gap, we introduce AIC-HDR2025, the first such HDR dataset, comprising 100 test images generated from five HDR sources, each compressed using four codecs at five compression levels. It covers the high-fidelity range, from visible distortions to compression levels below the visually lossless threshold. A subjective study was conducted using the JPEG AIC-3 test methodology, combining plain and boosted triplet comparisons. In total, 34,560 ratings were collected from 151 participants across four fully controlled labs. The results confirm that AIC-3 enables precise HDR quality estimation, with 95% confidence intervals averaging a width of 0.27 at 1 JND. In addition, several recently proposed objective metrics were evaluated based on their correlation with subjective ratings. The dataset is publicly available.

GS-QA: Comprehensive Quality Assessment Benchmark for Gaussian Splatting View Synthesis

Feb 18, 2025

Abstract: Gaussian Splatting (GS) offers a promising alternative to Neural Radiance Fields (NeRF) for real-time 3D scene rendering. Using a set of 3D Gaussians to represent complex geometry and appearance, GS achieves faster rendering times and reduced memory consumption compared to the neural network approach used in NeRF. However, quality assessment of GS-generated static content has not yet been explored in depth. This paper describes a subjective quality assessment study that aims to evaluate synthesized videos obtained with several state-of-the-art static GS methods. The methods were applied to diverse visual scenes, covering both 360-degree and forward-facing (FF) camera trajectories. Moreover, the performance of 18 objective quality metrics was analyzed using the scores resulting from the subjective study, providing insights into their strengths, limitations, and alignment with human perception. All videos and scores are made available, providing a comprehensive database that can be used as a benchmark for GS view synthesis and objective quality metrics.
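As a rough illustration of how an objective metric can be compared against subjective scores, the sketch below computes Pearson (linear) and Spearman (rank-order) correlation. The abstract does not say which correlation measures the study uses, so the choice of these two is an assumption, and the score lists in the usage note are made-up placeholder values, not data from the paper.

```python
import math


def pearson(x, y):
    """Pearson linear correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))
```

For example, with hypothetical values `subjective = [1.2, 2.5, 3.1, 4.0, 4.6]` and `metric = [22.0, 27.5, 30.1, 35.2, 41.0]`, calling `pearson(metric, subjective)` and `spearman(metric, subjective)` gives one linear and one monotonic agreement figure per metric.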

Evaluation of strategies for efficient rate-distortion NeRF streaming

Oct 25, 2024

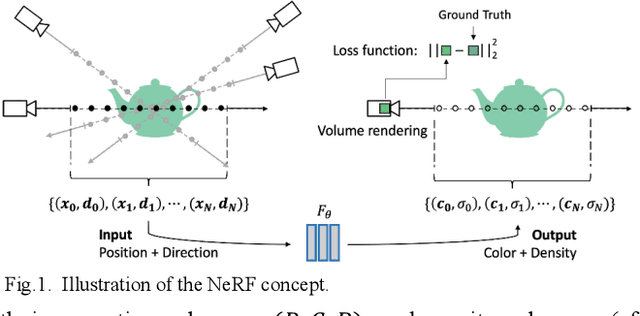

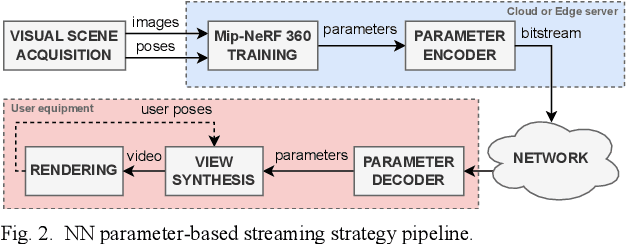

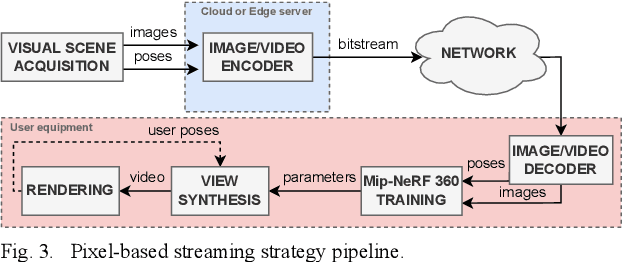

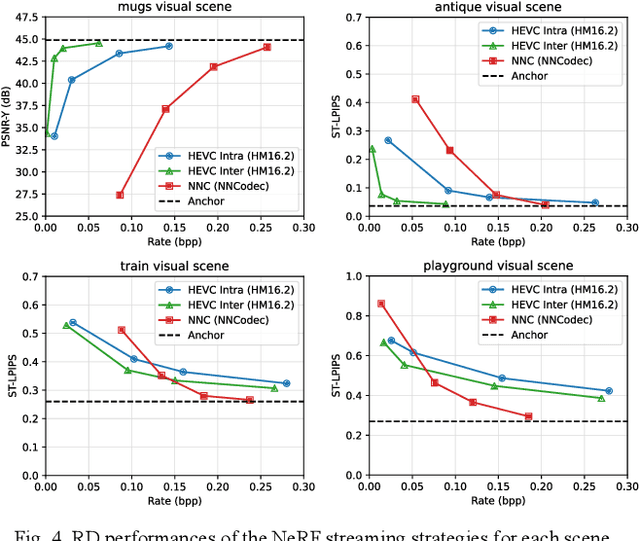

Abstract: Neural Radiance Fields (NeRF) have revolutionized the field of 3D visual representation by enabling highly realistic and detailed scene reconstructions from a sparse set of images. NeRF uses a volumetric functional representation that maps 3D points to their corresponding colors and opacities, allowing for photorealistic view synthesis from arbitrary viewpoints. Despite its advancements, the efficient streaming of NeRF content remains a significant challenge due to the large amount of data involved. This paper investigates the rate-distortion performance of two NeRF streaming strategies: pixel-based and neural network (NN) parameter-based streaming. In the former, images are coded and then transmitted through the network; in the latter, the respective NeRF model parameters are coded and transmitted instead. This work also highlights the trade-offs in complexity and performance, demonstrating that the NN parameter-based strategy generally offers superior efficiency, making it suitable for one-to-many streaming scenarios.

Neuromorphic Vision Data Coding: Classifying and Reviewing

May 11, 2024

Abstract: In recent years, visual sensors have been quickly improving towards mimicking the visual information acquisition process of the human brain, by responding to illumination changes as they occur in time rather than at fixed time intervals. In this context, the so-called neuromorphic vision sensors depart from the conventional frame-based image sensors by adopting a paradigm shift in the way visual information is acquired. This new way of visual information acquisition enables faster and asynchronous per-pixel responses/recordings driven by the scene dynamics with a very high dynamic range and low power consumption. However, the huge amount of data output by the emerging neuromorphic vision sensors critically demands highly efficient coding solutions so that applications can take full advantage of the capabilities of these new, attractive sensors. For this reason, considerable research efforts have been invested in recent years towards developing increasingly efficient neuromorphic vision data coding (NVDC) solutions. In this context, the main objective of this paper is to provide a comprehensive overview of NVDC solutions in the literature, guided by a novel classification taxonomy, which allows this emerging field to be better organized. In this way, more solid conclusions can be drawn about the current NVDC status quo, helping to better drive future research and standardization developments in this emerging technical area.

Joint Geometry and Color Projection-based Point Cloud Quality Metric

Aug 05, 2021

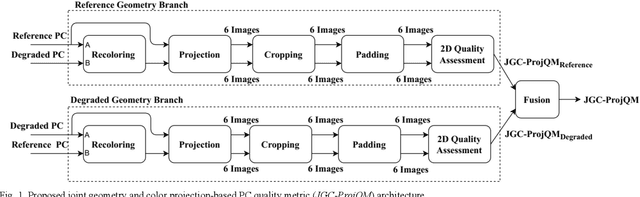

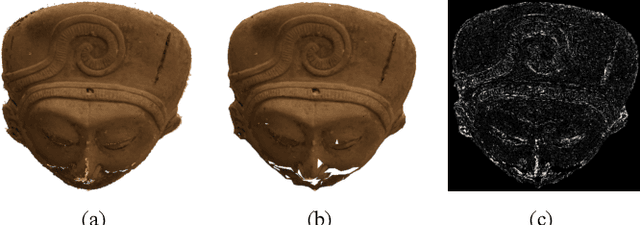

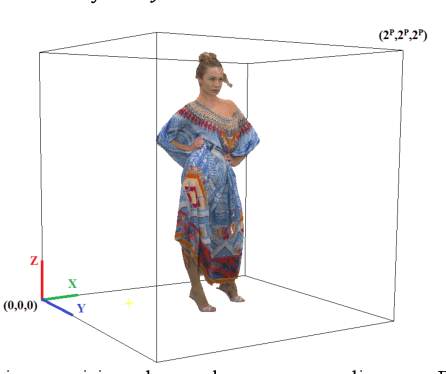

Abstract: Point cloud coding solutions have been recently standardized to address the needs of multiple application scenarios. The design and assessment of point cloud coding methods require reliable objective quality metrics to evaluate the level of degradation introduced by compression or any other type of processing. Several point cloud objective quality metrics have recently been proposed to reliably estimate human perceived quality, including the so-called projection-based metrics. In this context, this paper proposes a joint geometry and color projection-based point cloud objective quality metric which solves the critical weakness of this type of quality metric, i.e., the misalignment between the reference and degraded projected images. Moreover, the proposed point cloud quality metric exploits the best performing 2D quality metrics in the literature to assess the quality of the projected images. The experimental results show that the proposed projection-based quality metric offers the best subjective-objective correlation performance in comparison with other metrics in the literature. The Pearson correlation gains regarding the D1-PSNR and D2-PSNR metrics are 17% and 14.2%, respectively, when data with all coding degradations is considered.

A Point-to-Distribution Joint Geometry and Color Metric for Point Cloud Quality Assessment

Jul 30, 2021

Abstract: Point clouds (PCs) are a powerful 3D visual representation paradigm for many emerging application domains, especially virtual and augmented reality, and autonomous vehicles. However, the large amount of PC data required for highly immersive and realistic experiences makes efficient, lossy PC coding solutions critical. Recently, two MPEG PC coding standards have been developed to address the relevant application requirements and further developments are expected in the future. In this context, the assessment of PC quality, notably for decoded PCs, is critical and calls for the design of efficient objective PC quality metrics. In this paper, a novel point-to-distribution metric is proposed for PC quality assessment considering both the geometry and texture. This new quality metric exploits the scale-invariance property of the Mahalanobis distance to first assess the geometry and color point-to-distribution distortions, which are then fused to obtain a joint geometry and color quality metric. The proposed quality metric significantly outperforms the best PC quality assessment metrics in the literature.
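To make the point-to-distribution idea more concrete, here is a minimal sketch of the Mahalanobis distance between one point and the distribution (mean and covariance) of a set of neighboring points. It is not the paper's metric: the neighborhood selection, the color component, and the fusion step are not described in the abstract and are left out. The function names and the sample neighborhood in the usage note are hypothetical.

```python
import math


def mean_vector(points):
    """Component-wise mean of a list of equal-length tuples."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]


def covariance_matrix(points, mu):
    """Sample covariance matrix (divides by n - 1)."""
    n, dim = len(points), len(mu)
    cov = [[0.0] * dim for _ in range(dim)]
    for p in points:
        d = [p[i] - mu[i] for i in range(dim)]
        for i in range(dim):
            for j in range(dim):
                cov[i][j] += d[i] * d[j]
    return [[c / (n - 1) for c in row] for row in cov]


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def mahalanobis(point, neighborhood):
    """sqrt((p - mu)^T S^-1 (p - mu)) for the neighborhood's mean mu and covariance S."""
    mu = mean_vector(neighborhood)
    cov = covariance_matrix(neighborhood, mu)
    d = [point[i] - mu[i] for i in range(len(mu))]
    y = solve(cov, d)  # y = S^-1 d, without forming the inverse explicitly
    return math.sqrt(max(0.0, sum(di * yi for di, yi in zip(d, y))))
```

With a hypothetical neighborhood such as `[(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]`, multiplying both the query point and every neighbor by the same factor leaves `mahalanobis(...)` unchanged, which is the scale-invariance property the abstract refers to.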
