Evaluation of strategies for efficient rate-distortion NeRF streaming


Oct 25, 2024

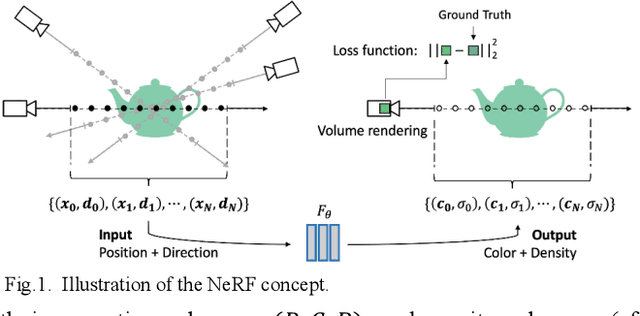

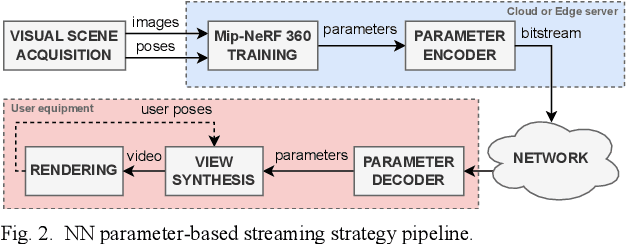

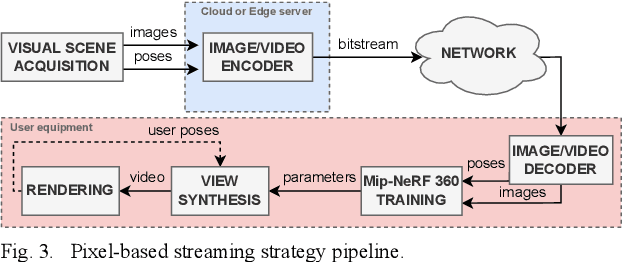

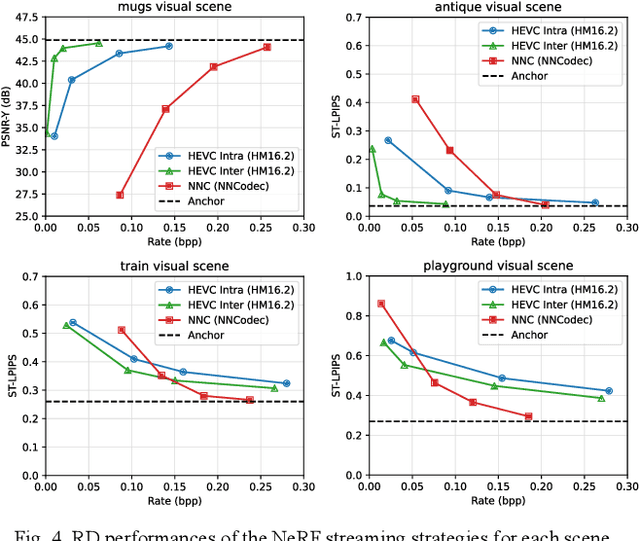

Neural Radiance Fields (NeRF) have revolutionized 3D visual representation by enabling highly realistic and detailed scene reconstructions from a sparse set of images. NeRF uses a volumetric functional representation that maps 3D points to their corresponding colors and opacities, allowing photorealistic view synthesis from arbitrary viewpoints. Despite these advances, streaming NeRF content efficiently remains a significant challenge because of the large amount of data involved. This paper investigates the rate-distortion performance of two NeRF streaming strategies: pixel-based and neural network (NN) parameter-based streaming. In the former, images are coded and transmitted over the network; in the latter, the parameters of the NeRF model are coded and transmitted instead. This work also highlights the trade-offs in complexity and performance, demonstrating that the NN parameter-based strategy generally offers superior efficiency, making it suitable for one-to-many streaming scenarios.
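To make the "maps 3D points to their corresponding colors and opacities" step concrete, here is a minimal sketch of how sampled colors and densities along one camera ray are commonly composited into a single pixel. It follows the standard NeRF quadrature rule rather than anything specific to this paper, and the function name `composite_ray` and its arguments are illustrative assumptions.

```python
import math

def composite_ray(densities, colors, deltas):
    """Alpha-composite samples along one ray (standard NeRF quadrature).

    densities: volume density (sigma) at each sample, front to back
    colors:    (r, g, b) tuple at each sample
    deltas:    distance between consecutive samples
    All names here are hypothetical; the paper does not specify an API.
    """
    transmittance = 1.0          # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this segment
        weight = transmittance * alpha           # contribution to the pixel
        for k in range(3):
            pixel[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha             # light left for later samples
    return pixel, transmittance

# Usage: an empty sample followed by an effectively opaque red one.
pixel, remaining = composite_ray(
    densities=[0.0, 1e9],
    colors=[(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)],
    deltas=[1.0, 1.0],
)
```

In a pixel-based strategy, images produced this way are what gets coded and sent; in a parameter-based strategy, the receiver would run this kind of rendering itself after decoding the model parameters.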
