Jinho Yi

Data-Driven Subsampling in the Presence of an Adversarial Actor

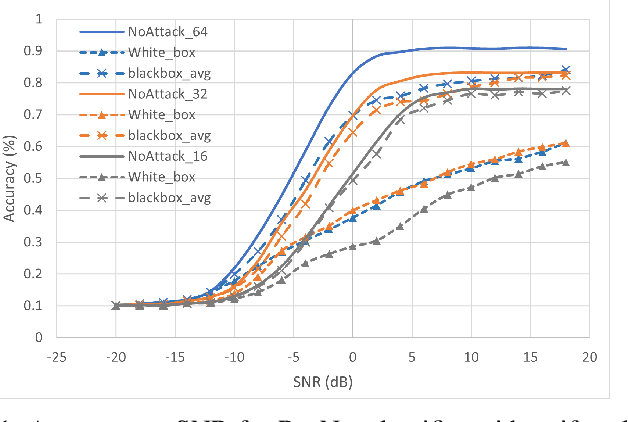

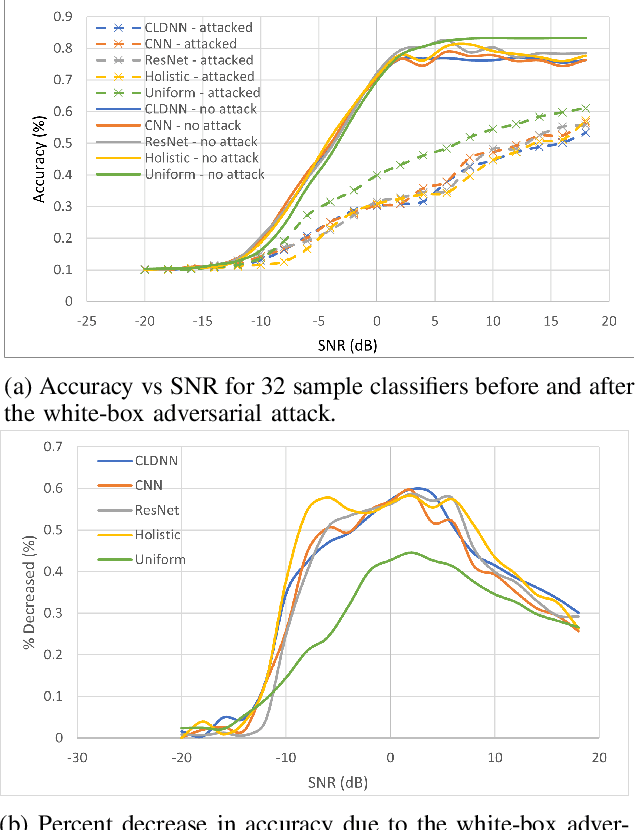

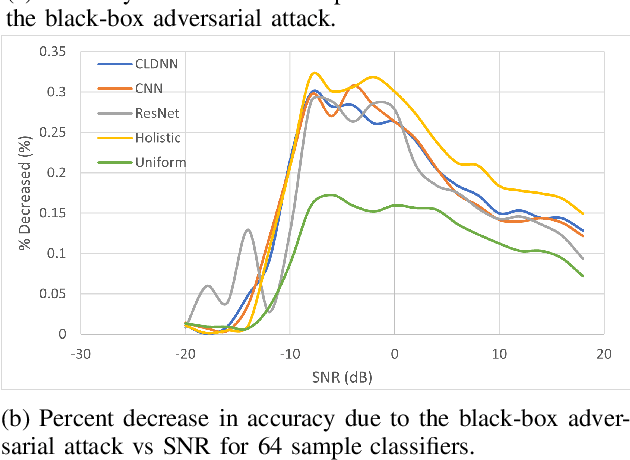

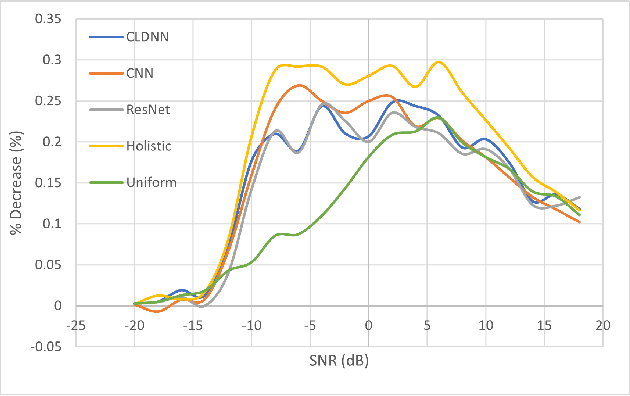

Jan 07, 2024

Abstract: Deep learning based automatic modulation classification (AMC) has received significant attention owing to its potential applications in both military and civilian use cases. Recently, data-driven subsampling techniques have been utilized to overcome the challenges associated with computational complexity and training time for AMC. Beyond these direct advantages of data-driven subsampling, these methods also have regularizing properties that may improve the adversarial robustness of the modulation classifier. In this paper, we investigate the effects of an adversarial attack on an AMC system that employs deep learning models both for AMC and for subsampling. Our analysis shows that subsampling itself is an effective deterrent to adversarial attacks. We also uncover the most efficient subsampling strategy when an adversarial attack on both the classifier and the subsampler is anticipated.

Gradient-based Adversarial Deep Modulation Classification with Data-driven Subsampling

Apr 03, 2021

Abstract: Automatic modulation classification can be a core component of intelligent, spectrally efficient wireless communication networks, and deep learning techniques have recently been shown to outperform conventional model-based strategies, particularly when distinguishing between a large number of modulation types. However, such deep learning techniques have also recently been shown to be vulnerable to gradient-based adversarial attacks that rely on subtle input perturbations, which would be particularly feasible in a wireless setting via jamming. One such potent attack is the Carlini-Wagner attack, which we consider in this work. We further consider a data-driven subsampling setting, where several recently introduced deep-learning-based algorithms are employed to select a subset of samples, reducing the final classifier's training time with minimal loss in accuracy. In this setting, the attacker has to make an assumption about the employed subsampling strategy in order to calculate the loss gradient. Based on state-of-the-art techniques available to both the attacker and the defender, we evaluate the best strategies under various assumptions about each party's knowledge of the other's strategy. Interestingly, in the presence of knowledgeable attackers, we identify computational cost reduction opportunities for the defender with no or minimal loss in performance.
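
The point that the attacker must assume a subsampling strategy before computing the loss gradient can be illustrated with a toy sketch. This is not the paper's method: it swaps the Carlini-Wagner attack for a single sign-gradient step, uses a hypothetical linear softmax classifier on a real-valued signal, and all names (`input_gradient`, `sign_step_attack`, `true_idx`) are invented for illustration. It only shows that the gradient with respect to the full signal is nonzero solely on the sample indices the attacker assumes the classifier sees.

```python
import numpy as np

def softmax(z):
    z = z - z.max()                 # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def cross_entropy(x, label, W, idx):
    """Loss of a linear softmax classifier that only sees x[idx]."""
    return -np.log(softmax(W @ x[idx])[label])

def input_gradient(x, label, W, idx):
    """Gradient of the loss w.r.t. the FULL input x, given the index set idx."""
    p = softmax(W @ x[idx])
    p[label] -= 1.0                 # d(loss)/d(logits) for cross-entropy
    grad = np.zeros_like(x)
    grad[idx] = W.T @ p             # only the selected samples receive gradient
    return grad

def sign_step_attack(x, label, W, assumed_idx, eps):
    """One sign-gradient step (an assumption; NOT the Carlini-Wagner attack)."""
    return x + eps * np.sign(input_gradient(x, label, W, assumed_idx))

# Toy setup: 16-sample signal, classifier trained on 4 selected samples.
rng = np.random.default_rng(0)
n, k, classes = 16, 4, 3
x = rng.normal(size=n)
true_idx = np.array([1, 5, 9, 13])  # hypothetical subsampler output
W = rng.normal(size=(classes, k))   # hypothetical classifier weights
label = 0

x_adv = sign_step_attack(x, label, W, true_idx, eps=0.1)
```

If the attacker passed an index set that differs from the one the defender actually uses, part of the perturbation budget would land on samples the classifier never reads, which is one intuition for why the assumed subsampling strategy matters.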
