Jiaying Guo

AVARS -- Alleviating Unexpected Urban Road Traffic Congestion using UAVs

Sep 10, 2023

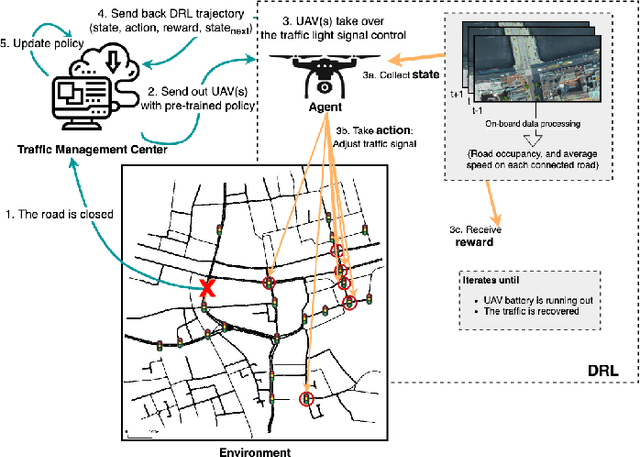

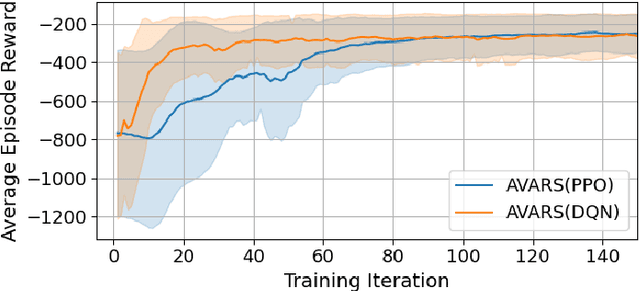

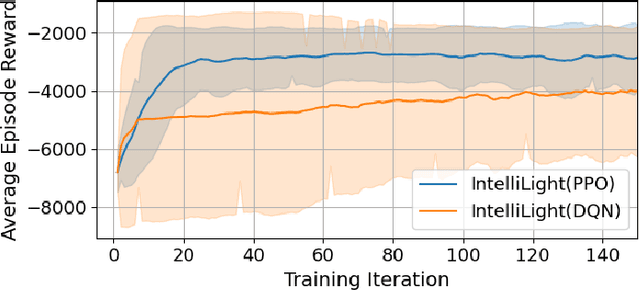

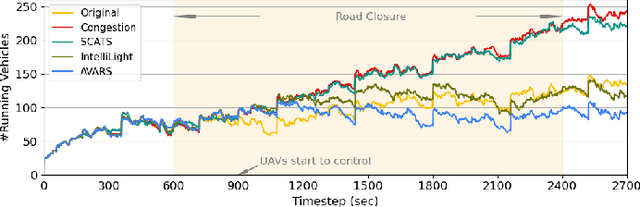

Abstract: Reducing unexpected urban traffic congestion caused by en-route events (e.g., road closures or car crashes) often requires fast and accurate reactions to choose the best-fit traffic signals. Traditional traffic light control systems, such as SCATS and SCOOT, are inefficient because the traffic data they receive from induction loops is updated infrequently (i.e., at intervals longer than 1 minute). Moreover, the traffic light signal plans used by these systems are selected from a limited set of candidate plans that are pre-programmed before the unexpected events occur. Recent research demonstrates that camera-based traffic light systems controlled by deep reinforcement learning (DRL) algorithms are more effective in reducing traffic congestion, because cameras can provide high-frequency, high-resolution traffic data. However, these systems are costly to deploy in big cities because of the extensive upgrades to road infrastructure they may require. In this paper, we argue that Unmanned Aerial Vehicles (UAVs) can play a crucial role in dealing with unexpected traffic congestion, because UAVs with onboard cameras can be economically deployed when and where unexpected congestion occurs. We then propose a system called "AVARS" that explores the potential of using UAVs to reduce unexpected urban traffic congestion through DRL-based traffic light signal control. This approach is validated on a widely used open-source traffic simulator with practical UAV settings, including traffic monitoring range and battery lifetime. Our simulation results show that AVARS can effectively restore traffic in Dublin, Ireland, from unexpected congestion to its original uncongested level within the typical battery life of a UAV.

CoTV: Cooperative Control for Traffic Light Signals and Connected Autonomous Vehicles using Deep Reinforcement Learning

Jan 31, 2022

Abstract: Reducing travel time alone is insufficient to support the development of future smart transportation systems. To align with the United Nations Sustainable Development Goals (UN-SDG), further reductions in fuel consumption and emissions, improvements in traffic safety, and ease of infrastructure deployment and maintenance should also be considered. Unlike existing work, which optimizes the control of either traffic light signals (to improve intersection throughput) or vehicle speed (to stabilize traffic), this paper presents a multi-agent deep reinforcement learning (DRL) system called CoTV, which Cooperatively controls both Traffic light signals and connected autonomous Vehicles (CAVs). CoTV can therefore balance reductions in travel time, fuel consumption, and emissions. CoTV is also easy to deploy, because each traffic light controller cooperates with only one CAV on each incoming road: the one nearest to the controller. This enables more efficient coordination between traffic light controllers and CAVs, which allows CoTV training to converge in large-scale multi-agent scenarios where convergence is traditionally difficult. We give the detailed system design of CoTV and demonstrate its effectiveness in a simulation study using SUMO under various grid maps and realistic urban scenarios with mixed-autonomy traffic.
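The selection rule mentioned in the CoTV abstract (one CAV per incoming road, the one nearest to the traffic light controller) can be illustrated with a minimal sketch. This is not the authors' implementation: the `Vehicle` record, its field names, and the `nearest_cav_per_road` helper are hypothetical, and the sketch assumes that each vehicle's distance to the traffic light is already known.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Vehicle:
    """Hypothetical vehicle record; field names are assumptions for illustration."""
    vehicle_id: str
    road_id: str                # incoming road the vehicle is travelling on
    distance_to_light_m: float  # distance to the traffic light, in metres
    is_cav: bool                # True for a connected autonomous vehicle


def nearest_cav_per_road(vehicles: Iterable[Vehicle]) -> Dict[str, Vehicle]:
    """Return, for each incoming road, the CAV closest to the traffic light.

    Human-driven vehicles are ignored, and roads with no CAV are omitted.
    """
    nearest: Dict[str, Vehicle] = {}
    for vehicle in vehicles:
        if not vehicle.is_cav:
            continue
        best = nearest.get(vehicle.road_id)
        if best is None or vehicle.distance_to_light_m < best.distance_to_light_m:
            nearest[vehicle.road_id] = vehicle
    return nearest


if __name__ == "__main__":
    traffic = [
        Vehicle("human1", "north", 5.0, False),
        Vehicle("cav1", "north", 40.0, True),
        Vehicle("cav2", "north", 12.5, True),
        Vehicle("cav3", "east", 30.0, True),
        Vehicle("human2", "south", 8.0, False),
    ]
    for road, cav in sorted(nearest_cav_per_road(traffic).items()):
        print(road, cav.vehicle_id, cav.distance_to_light_m)
```

In this sketch, mixed-autonomy traffic is handled simply by skipping human-driven vehicles, so a road with no CAV contributes no cooperating vehicle agent.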
