Jianheng Li

Weakly Supervised Tracklet Person Re-Identification by Deep Feature-wise Mutual Learning

Oct 31, 2019

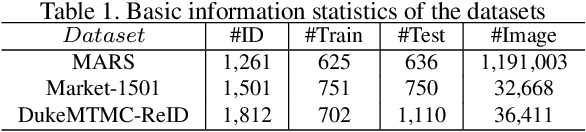

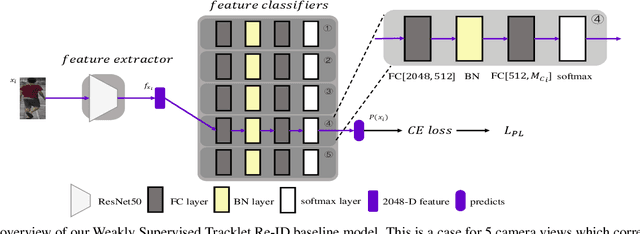

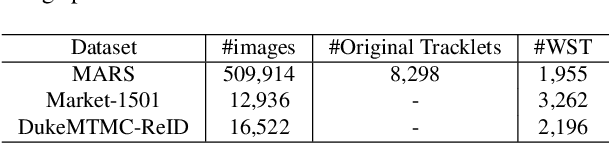

Abstract: The difficulty of annotating person re-identification (Re-ID) datasets limits their scalability and has become a crucial bottleneck in the development of Re-ID. To address this problem, many unsupervised Re-ID methods have recently been proposed. Nevertheless, most of these models require transfer from an auxiliary fully supervised dataset, which is still expensive to obtain. In this work, we propose a Re-ID model based on Weakly Supervised Tracklets (WST) data from various camera views, which can be inexpensively acquired by combining the fragmented tracklets of the same person in the same camera view over a period of time. We formulate our weakly supervised tracklet Re-ID model with a novel method, named deep feature-wise mutual learning (DFML), which consists of Mutual Learning on Feature Extractors (MLFE) and Mutual Learning on Feature Classifiers (MLFC). In MLFE, two feature extractors learn from each other to extract more robust and discriminative features. In MLFC, discriminative features from various camera views are adapted to each classifier. Extensive experiments on three Re-ID benchmark datasets demonstrate the superiority of our proposed DFML over state-of-the-art unsupervised models and even some supervised models.
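The abstract does not give the exact MLFE objective, so the following is only a minimal sketch of what a generic feature-level mutual learning term between two extractors could look like. The function names (`l2_normalize`, `mutual_feature_loss`) and the choice of squared distance between L2-normalized features are assumptions made for illustration, not the formulation used in the paper.

```python
import math


def l2_normalize(v):
    """Scale a feature vector to unit L2 norm (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def mutual_feature_loss(feats_a, feats_b):
    """Hypothetical mutual learning term: mean squared distance between the
    L2-normalized features that two extractors produce for the same batch.

    feats_a, feats_b: lists of equal-length feature vectors, one per image.
    """
    total = 0.0
    for fa, fb in zip(feats_a, feats_b):
        na, nb = l2_normalize(fa), l2_normalize(fb)
        total += sum((x - y) ** 2 for x, y in zip(na, nb))
    return total / len(feats_a)


# Toy batch: the extractors agree on the first image and disagree on the second.
feats_a = [[1.0, 0.0], [0.0, 2.0]]
feats_b = [[1.0, 0.0], [3.0, 0.0]]
loss = mutual_feature_loss(feats_a, feats_b)
```

In a training loop of this kind, each extractor would typically add such a term to its own identification loss while treating the other extractor's features as a fixed target, so that the two networks pull toward a shared representation.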
