Jessica Cooper

Hoechst Is All You Need: Lymphocyte Classification with Deep Learning

Jul 16, 2021

Abstract:Multiplex immunofluorescence and immunohistochemistry benefit patients by allowing cancer pathologists to identify several proteins expressed on the surface of cells, enabling cell classification, better understanding of the tumour micro-environment, more accurate diagnoses, prognoses, and tailored immunotherapy based on the immune status of individual patients. However, they are expensive and time consuming processes which require complex staining and imaging techniques by expert technicians. Hoechst staining is much cheaper and easier to perform, but is not typically used in this case as it binds to DNA rather than to the proteins targeted by immunofluorescent techniques, and it was not previously thought possible to differentiate cells expressing these proteins based only on DNA morphology. In this work we show otherwise, training a deep convolutional neural network to identify cells expressing three proteins (T lymphocyte markers CD3 and CD8, and the B lymphocyte marker CD20) with greater than 90% precision and recall, from Hoechst 33342 stained tissue only. Our model learns previously unknown morphological features associated with expression of these proteins which can be used to accurately differentiate lymphocyte subtypes for use in key prognostic metrics such as assessment of immune cell infiltration,and thereby predict and improve patient outcomes without the need for costly multiplex immunofluorescence.

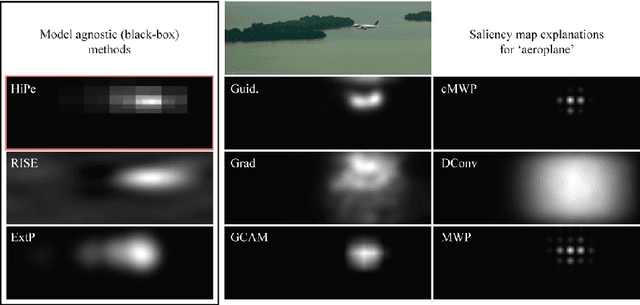

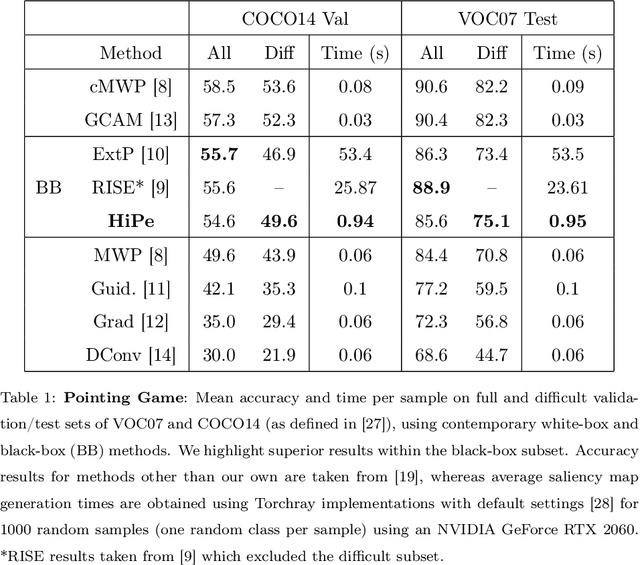

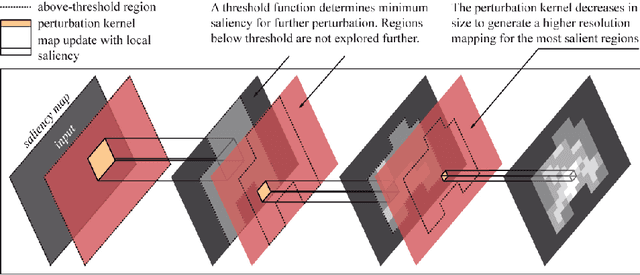

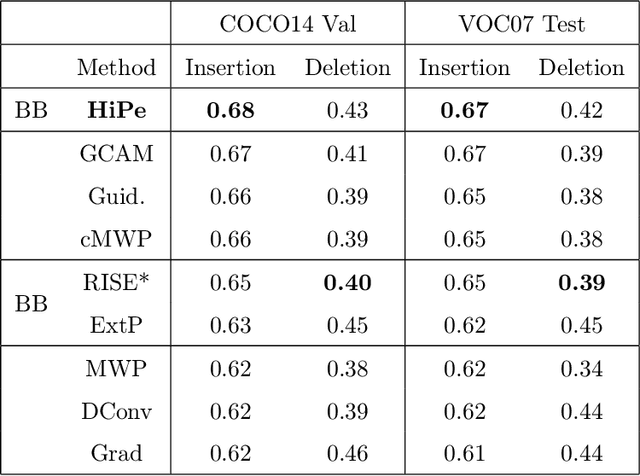

Believe The HiPe: Hierarchical Perturbation for Fast and Robust Explanation of Black Box Models

Feb 22, 2021

Abstract:Understanding the predictions made by Artificial Intelligence (AI) systems is becoming more and more important as deep learning models are used for increasingly complex and high-stakes tasks. Saliency mapping - an easily interpretable visual attribution method - is one important tool for this, but existing formulations are limited by either computational cost or architectural constraints. We therefore propose Hierarchical Perturbation, a very fast and completely model-agnostic method for explaining model predictions with robust saliency maps. Using standard benchmarks and datasets, we show that our saliency maps are of competitive or superior quality to those generated by existing black-box methods - and are over 20x faster to compute.

Understanding Ancient Coin Images

Mar 13, 2019

Abstract:In recent years, a range of problems within the broad umbrella of automatic, computer vision based analysis of ancient coins has been attracting an increasing amount of attention. Notwithstanding this research effort, the results achieved by the state of the art in the published literature remain poor and far from sufficiently well performing for any practical purpose. In the present paper we present a series of contributions which we believe will benefit the interested community. Firstly, we explain that the approach of visual matching of coins, universally adopted in all existing published papers on the topic, is not of practical interest because the number of ancient coin types exceeds by far the number of those types which have been imaged, be it in digital form (e.g. online) or otherwise (traditional film, in print, etc.). Rather, we argue that the focus should be on the understanding of the semantic content of coins. Hence, we describe a novel method which uses real-world multimodal input to extract and associate semantic concepts with the correct coin images and then using a novel convolutional neural network learn the appearance of these concepts. Empirical evidence on a real-world and by far the largest data set of ancient coins, we demonstrate highly promising results.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge