Jeremy J. Dahl

Ultrasound Autofocusing: Common Midpoint Phase Error Optimization via Differentiable Beamforming

Oct 03, 2024

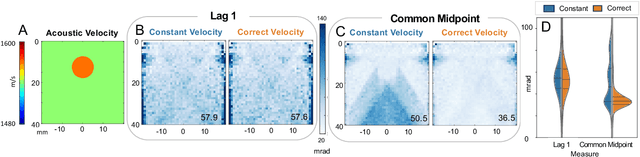

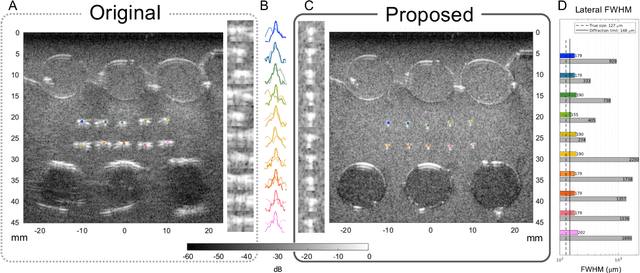

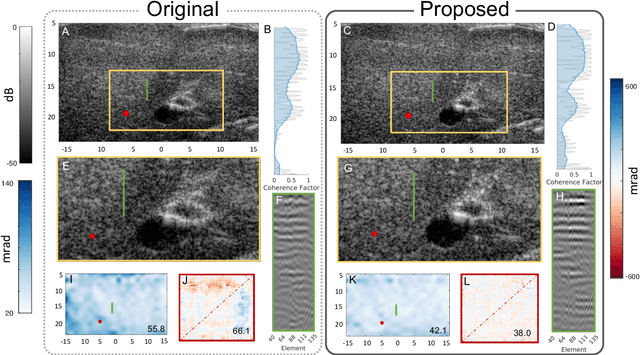

Abstract: Wavefield imaging reconstructs physical properties from wavefield measurements across an aperture, using modalities such as radar, optics, sonar, seismic, and ultrasound imaging. Propagation of a wavefront from unknown sources through heterogeneous media causes phase aberrations that degrade the coherence of the wavefront, reducing image resolution and contrast. Adaptive imaging techniques attempt to correct phase aberration and restore coherence, thereby improving focus. We propose an autofocusing paradigm for aberration correction in ultrasound imaging that fits an acoustic velocity field to pressure measurements by optimizing the common midpoint phase error (CMPE), using a straight-ray wave propagation model for beamforming in diffusely scattering media. We show that CMPE induced by heterogeneous acoustic velocity is a robust measure of phase aberration that can be used for acoustic autofocusing. CMPE is optimized iteratively with a differentiable beamforming approach that simultaneously improves image focus and estimates the acoustic velocity field of the interrogated medium. The approach relies solely on wavefield measurements, using a straight-ray integral solution for the two-way time of flight without explicit numerical time-stepping models of wave propagation. We demonstrate the method's performance through in silico simulations, in vitro phantom measurements, and in vivo mammalian models, showing practical applications in distributed aberration quantification, correction, and velocity estimation for medical ultrasound autofocusing.
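The straight-ray model mentioned above computes the two-way time of flight as a line integral of slowness (the reciprocal of sound speed) from a transmit element to an image pixel and back to a receive element. The sketch below illustrates that idea only; it is not the authors' implementation. The function names, the uniform square grid, the midpoint-rule sampling, and the nearest-cell lookup are all assumptions made for illustration, and CMPE itself is not computed here.

```python
import math


def straight_ray_tof(slowness, dx, p0, p1, n_samples=200):
    """Approximate the line integral of slowness (s/m) along a straight ray p0 -> p1.

    Hypothetical helper (illustrative assumption, not from the paper):
    - slowness is a 2-D list indexed [iz][ix] on a uniform grid with spacing dx (m);
      cell (iz, ix) covers x in [ix*dx, (ix+1)*dx) and z in [iz*dx, (iz+1)*dx).
    - p0, p1 are (x, z) points in metres, with z the depth below the array.
    - The integral is estimated with a midpoint rule and nearest-cell lookup.
    """
    (x0, z0), (x1, z1) = p0, p1
    length = math.hypot(x1 - x0, z1 - z0)
    if length == 0.0:
        return 0.0
    nz, nx = len(slowness), len(slowness[0])
    total = 0.0
    for k in range(n_samples):
        t = (k + 0.5) / n_samples  # midpoint of the k-th sub-segment
        x = x0 + t * (x1 - x0)
        z = z0 + t * (z1 - z0)
        # Clamp indices so points on the grid boundary stay inside the grid.
        ix = min(max(int(x // dx), 0), nx - 1)
        iz = min(max(int(z // dx), 0), nz - 1)
        total += slowness[iz][ix]
    # Mean sampled slowness times path length gives the travel time in seconds.
    return total * length / n_samples


def two_way_tof(slowness, dx, tx, pixel, rx, n_samples=200):
    """Transmit-to-pixel plus pixel-to-receive travel time under the straight-ray assumption."""
    return (straight_ray_tof(slowness, dx, tx, pixel, n_samples)
            + straight_ray_tof(slowness, dx, pixel, rx, n_samples))


if __name__ == "__main__":
    # 40 mm x 40 mm grid at 1 mm spacing, homogeneous 1540 m/s.
    grid = [[1.0 / 1540.0] * 40 for _ in range(40)]
    print(two_way_tof(grid, 1e-3, (0.005, 0.0), (0.02, 0.03), (0.035, 0.0)))
```

In a homogeneous medium this reduces to total path length divided by sound speed. Because the modeled delay is linear in the slowness values, its derivative with respect to each cell is the ray length sampled in that cell, which is one reason a straight-ray model pairs naturally with gradient-based updates of a velocity field.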

Investigating Pulse-Echo Sound Speed Estimation in Breast Ultrasound with Deep Learning

Feb 06, 2023

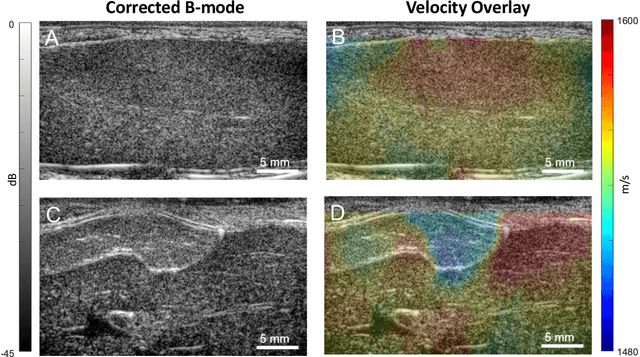

Abstract: Ultrasound is an adjunct tool to mammography that can quickly and safely aid physicians in diagnosing breast abnormalities. Clinical ultrasound often assumes a constant sound speed to form B-mode images for diagnosis. However, the various types of breast tissue, such as glandular tissue, fat, and lesions, differ in sound speed. These differences can degrade the image reconstruction process. At the same time, sound speed can be a powerful tool for identifying disease. To this end, we propose a deep-learning approach for sound speed estimation from in-phase and quadrature (IQ) ultrasound signals. First, we develop a large-scale simulated ultrasound dataset that generates quasi-realistic breast tissue by modeling breast gland, skin, and lesions with varying echogenicity and sound speed. We then develop a fully convolutional neural network, trained on this simulated dataset, that produces an estimated sound speed map from three complex-valued IQ ultrasound images formed from plane-wave transmissions at different angles. Furthermore, thermal noise augmentation is used during model optimization to enhance generalizability to real ultrasound data. We evaluate the model on simulated, phantom, and in vivo breast ultrasound data, demonstrating its ability to accurately estimate sound speeds consistent with previously reported values in the literature. Our simulated dataset and model will be publicly available to provide a step towards accurate and generalizable sound speed estimation for pulse-echo ultrasound imaging.
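The abstract mentions thermal noise augmentation of the complex IQ inputs but does not specify its form. The sketch below shows one common way such augmentation could be done, assuming additive circular complex white Gaussian noise scaled to a randomly drawn signal-to-noise ratio. The function names, the SNR range, and the choice to share one SNR across the three plane-wave angles are hypothetical, not details taken from the paper.

```python
import math
import random


def add_thermal_noise(iq, snr_db, rng=None):
    """Add circular complex white Gaussian noise to a 2-D IQ image at a target SNR (dB).

    Hypothetical helper (assumption): signal power is taken as the mean |x|^2 over
    the whole image, and the noise power is split equally between the real and
    imaginary components.
    """
    if rng is None:
        rng = random.Random()
    samples = [v for row in iq for v in row]
    signal_power = sum(abs(v) ** 2 for v in samples) / len(samples)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    sigma = math.sqrt(noise_power / 2.0)  # standard deviation per real/imag part
    return [[v + complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)) for v in row]
            for row in iq]


def augment_three_angles(iq_stack, snr_range_db=(10.0, 40.0), rng=None):
    """Apply the same randomly drawn SNR to each angle's IQ image (assumed design choice)."""
    if rng is None:
        rng = random.Random()
    snr_db = rng.uniform(*snr_range_db)
    return [add_thermal_noise(img, snr_db, rng) for img in iq_stack]


if __name__ == "__main__":
    rng = random.Random(0)
    img = [[1 + 0j] * 8 for _ in range(8)]
    noisy_stack = augment_three_angles([img, img, img], rng=rng)
    print(len(noisy_stack), len(noisy_stack[0]), len(noisy_stack[0][0]))
```

Drawing a fresh SNR for every training example exposes the network to a range of noise levels, which is the general motivation for noise augmentation when a model trained on clean simulations is applied to measured data.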