Jean-Marc Fellous

NeuroAI and Beyond

Jan 27, 2026

Abstract: Neuroscience and Artificial Intelligence (AI) have made significant progress in the past few years but have only been loosely interconnected. Based on a workshop held in August 2025, we identify current and future areas of synergism between these two fields. We focus on the subareas of embodiment, language and communication, robotics, learning in humans and machines, and neuromorphic engineering to take stock of the progress made so far and to identify promising future avenues. Overall, we advocate for the development of NeuroAI, a type of Neuroscience-informed Artificial Intelligence that, we argue, has the potential to significantly improve the scope and efficiency of AI algorithms while simultaneously changing the way we understand biological neural computations. We include personal statements from several leading researchers on their diverse views of NeuroAI. Two Strengths-Weaknesses-Opportunities-Threats (SWOT) analyses by researchers and trainees are appended that describe the benefits and risks offered by NeuroAI.

Sparsity-Aware Hardware-Software Co-Design of Spiking Neural Networks: An Overview

Aug 26, 2024

Abstract: Spiking Neural Networks (SNNs) are inspired by the sparse and event-driven nature of biological neural processing, and offer the potential for ultra-low-power artificial intelligence. However, realizing their efficiency benefits requires specialized hardware and a co-design approach that effectively leverages sparsity. We explore the hardware-software co-design of sparse SNNs, examining how sparsity representation, hardware architectures, and training techniques influence hardware efficiency. We analyze the impact of static and dynamic sparsity, discuss the implications of different neuron models and encoding schemes, and investigate the need for adaptability in hardware designs. Our work aims to illuminate the path towards embedded neuromorphic systems that fully exploit the computational advantages of sparse SNNs.

Dopamine modulation of prefrontal delay activity-reverberatory activity and sharpness of tuning curves

Aug 16, 2016

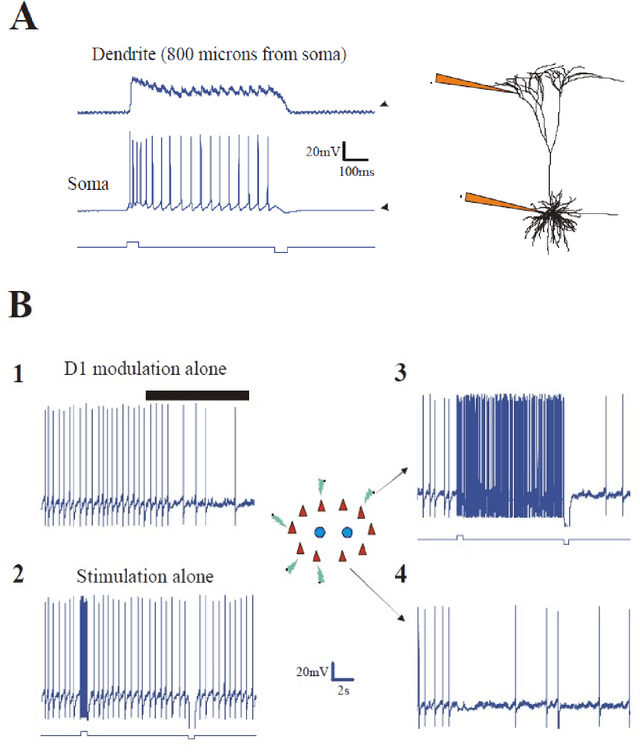

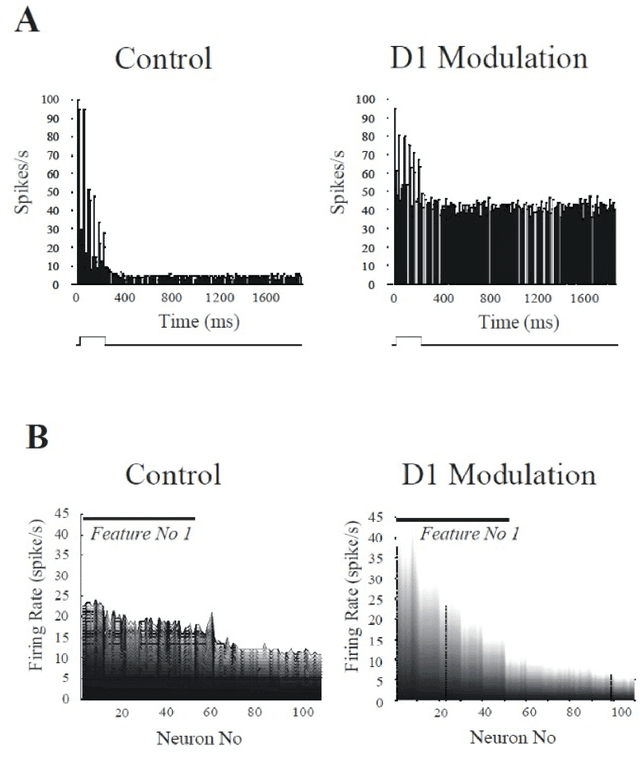

Abstract: Recent electrophysiological experiments have shown that dopamine (D1) modulation of pyramidal cells in prefrontal cortex reduces spike frequency adaptation and enhances NMDA transmission. Using four models, from multicompartmental to integrate-and-fire, we examine the effects of these modulations on sustained (delay) activity in a reverberatory network. We find that D1 modulation may enable robust network bistability, yielding selective reverberation among cells that code for a particular item or location. We further show that the tuning curve of such cells is sharpened, and that the signal-to-noise ratio is increased. We postulate that D1 modulation affects the tuning of "memory fields" and yields efficient distributed dynamic representations.

* CNS Conference 2001; 2 figures
