Gabriele Scheler

Institut für Informatik, TU München

Sketch of a novel approach to a neural model

Sep 14, 2022

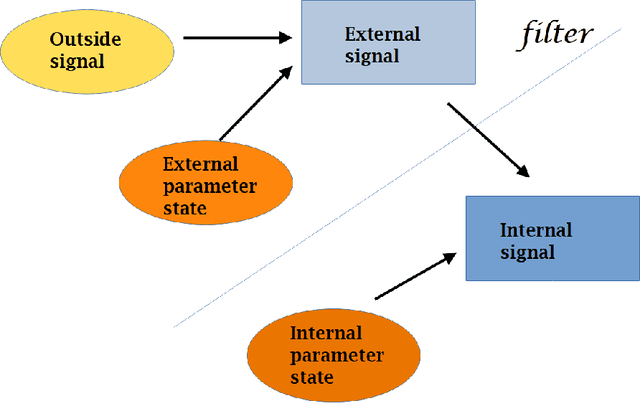

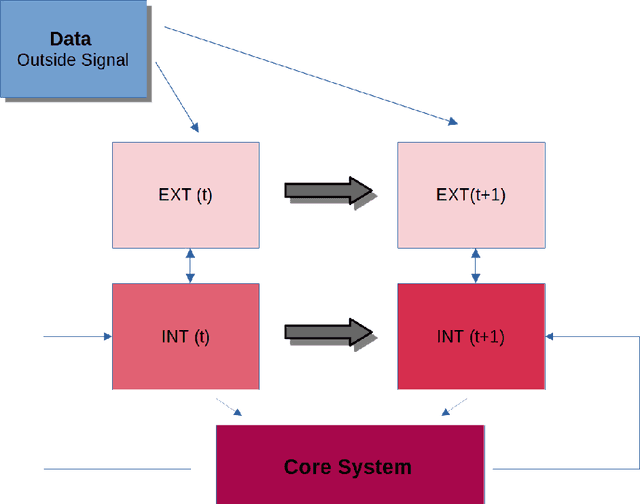

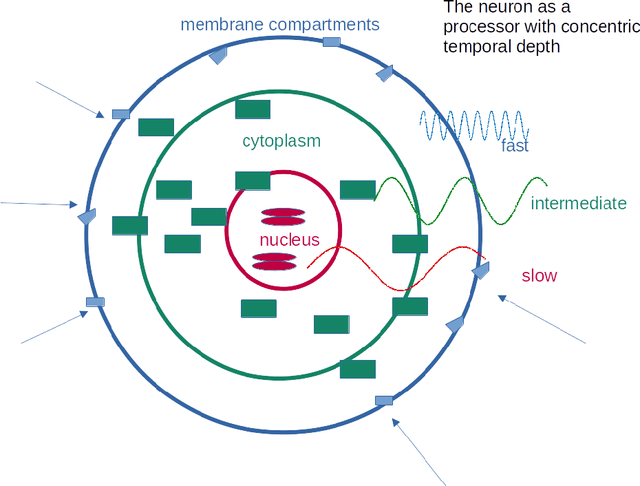

Abstract: In this paper, we lay out a novel model of neuroplasticity in the form of a horizontal-vertical integration model of neural processing. We believe that a new approach to neural modeling will benefit the third wave of AI. The horizontal plane consists of an adaptive network of neurons connected by transmission links, which generates spatio-temporal spike patterns. This fits with standard computational neuroscience approaches. Additionally, each individual neuron has a vertical part consisting of internal adaptive parameters that steer the external, membrane-expressed parameters involved in neural transmission. This vertical modular system of parameters comprises (a) external parameters at the membrane layer, divided into compartments (spines, boutons), (b) internal parameters in the submembrane zone and the cytoplasm with its protein signaling network, and (c) core parameters in the nucleus for genetic and epigenetic information. In such models, each node (= neuron) in the horizontal network has its own internal memory. Neural transmission and information storage are systematically separated, an important conceptual advance over synaptic weight models. We discuss the membrane-based (external) filtering and selection of outside signals for processing, as opposed to signal loss through fast fluctuations, and the neuron-internal computing strategies, from intracellular protein signaling to the nucleus as the core system. We aim to show that the individual neuron has an important role in the computation of signals and that many assumptions derived from the synaptic weight adjustment hypothesis of memory may not hold in a real brain. Not every transmission event leaves a trace, and the neuron is a self-programming device rather than being passively determined by current input. Ultimately, we strive to build a flexible memory system that processes facts and events automatically.
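
As a rough illustration of the separation between transmission and storage, the following Python sketch gives each neuron three parameter layers. All field names, the threshold for leaving an internal trace, and the update constants are hypothetical placeholders chosen for illustration; this is a toy data structure under those assumptions, not the model from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class Neuron:
    """Hypothetical horizontal-vertical neuron: transmission reads parameters, storage lives inside."""

    # (a) external, membrane-expressed parameters (hypothetical placeholder compartments)
    membrane: dict = field(default_factory=lambda: {"spine_gain": 1.0, "bouton_release": 0.5})
    # (b) internal state, a stand-in for the cytoplasmic protein signaling network
    internal: dict = field(default_factory=lambda: {"signal": 0.0})
    # (c) core state, a stand-in for slow genetic/epigenetic settings
    core: dict = field(default_factory=lambda: {"set_point": 1.0})

    def transmit(self, x: float) -> float:
        # Horizontal plane: transmission only reads membrane parameters and stores nothing.
        return x * self.membrane["spine_gain"] * self.membrane["bouton_release"]

    def integrate(self, x: float, threshold: float = 1.0, rate: float = 0.1) -> None:
        # Vertical path: only inputs above the (hypothetical) threshold leave an internal trace.
        if abs(x) > threshold:
            self.internal["signal"] += rate * x
        # The internal state, scaled by the core set point, reprograms the membrane layer.
        self.membrane["spine_gain"] = self.core["set_point"] * (1.0 + self.internal["signal"])


n = Neuron()
before = n.transmit(2.0)
n.integrate(0.5)   # sub-threshold event: no internal trace
n.integrate(2.0)   # supra-threshold event: internal signal changes, membrane gain follows
after = n.transmit(2.0)
```

Here `before` is 1.0 and `after` is 1.2: the sub-threshold event leaves no trace, while the supra-threshold event changes the neuron's internal state and, through it, all future transmission.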

Neuromodulation Influences Synchronization and Intrinsic Read-out

Jan 23, 2018

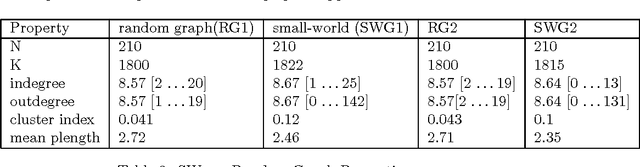

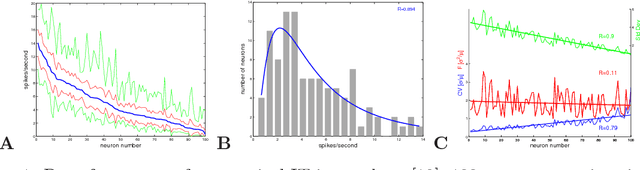

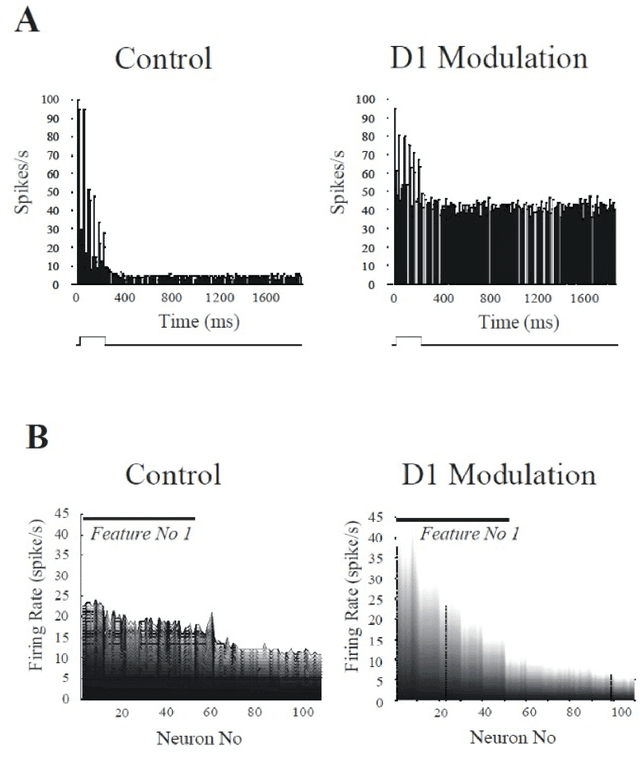

Abstract: The roles of neuromodulation in a neural network, such as a cortical microcolumn, are still incompletely understood. Neuromodulation influences neural processing through presynaptic and postsynaptic regulation of synaptic efficacy. Modulating synaptic efficacy can be an effective way to rapidly alter network density and topology. We show that altering network topology, together with density, affects the network's synchronization. Fast synaptic efficacy modulation may therefore influence the amount of correlated spiking in a network. Neuromodulation also regulates the ion channels that set intrinsic excitability, which alters the neuron's activation function. We show that synchronization in a network influences the read-out of these intrinsic properties. Highly synchronous input drives neurons so strongly that differences in intrinsic properties disappear, while asynchronous input lets intrinsic properties determine output behavior. Thus, altering network topology can shift the balance between intrinsically and synaptically driven network activity. We conclude that neuromodulation may allow a network to switch between a more synchronized transmission mode and a more asynchronous intrinsic read-out mode.
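
The read-out argument can be illustrated with a toy calculation. The sketch below assumes a sigmoidal activation function whose threshold stands in for intrinsic excitability, and it models synchrony crudely as the same total input arriving in one time bin (synchronous) or spread evenly over many bins (asynchronous). The function names, thresholds, and input sizes are illustrative assumptions, not the network simulations reported in the paper.

```python
import math


def response(drive: float, threshold: float) -> float:
    # Sigmoidal activation; 'threshold' is an assumed stand-in for intrinsic excitability.
    return 1.0 / (1.0 + math.exp(-(drive - threshold)))


def intrinsic_spread(total_input: float, n_bins: int, thresholds: list[float]) -> float:
    # Same total input delivered in n_bins equal parts: n_bins == 1 is fully synchronous,
    # larger n_bins is asynchronous. Returns how far apart the neurons' per-bin outputs are.
    per_bin = total_input / n_bins
    outputs = [response(per_bin, th) for th in thresholds]
    return max(outputs) - min(outputs)


thresholds = [2.0, 4.0]  # two hypothetical neurons differing only in intrinsic excitability
synchronous = intrinsic_spread(20.0, 1, thresholds)
asynchronous = intrinsic_spread(20.0, 10, thresholds)
print(f"synchronous: {synchronous:.6f}  asynchronous: {asynchronous:.3f}")
```

With these numbers the synchronous spread is on the order of 1e-7, because both neurons saturate, while the asynchronous spread is about 0.38, so the intrinsic difference becomes visible in the output.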

Logarithmic distributions prove that intrinsic learning is Hebbian

Dec 13, 2017

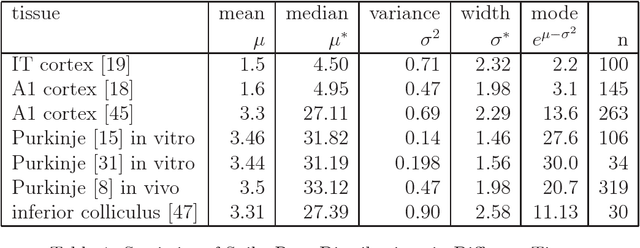

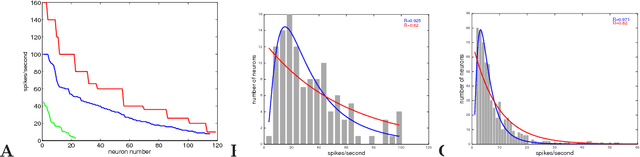

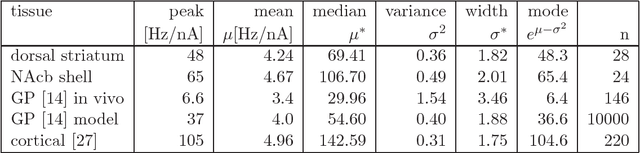

Abstract: In this paper, we present data on the lognormal distributions of spike rates, synaptic weights and intrinsic excitability (gain) for neurons in various brain areas, such as auditory or visual cortex, hippocampus, cerebellum, striatum and midbrain nuclei. We find a remarkable consistency of heavy-tailed, specifically lognormal, distributions for rates, weights and gains in all brain areas examined. Differences in connectivity (strongly recurrent in cortex vs. feed-forward in striatum and cerebellum), in neurotransmitter (GABA in striatum, glutamate in cortex) or in the level of activation (low in cortex, high in Purkinje cells and midbrain nuclei) turn out to be irrelevant for this feature. A logarithmic-scale distribution of weights and gains appears to be a general, functional property in all cases analyzed. We then created a generic neural model to investigate adaptive learning rules that create and maintain lognormal distributions. We conclusively demonstrate that not only weights but also intrinsic gains need strong Hebbian learning in order to produce and maintain the experimentally attested distributions. This provides a solution to the long-standing question about the type of plasticity exhibited by intrinsic excitability.
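
Why weight-proportional ("rich get richer", Hebbian-style) updates tend to produce lognormal distributions can be seen in a small simulation: multiplying many small random factors makes the logarithm a sum of random terms, which is approximately normal. The sketch below contrasts multiplicative with additive updates driven by a zero-mean random correlation term `xi`; this noise model and all constants are assumptions for illustration and do not reproduce the generic model from the paper.

```python
import math
import random


def skewness(xs: list[float]) -> float:
    # Sample skewness: third central moment divided by variance ** 1.5.
    n = len(xs)
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs) / n
    return sum((x - mean) ** 3 for x in xs) / n / var ** 1.5


def simulate(multiplicative: bool, n_synapses: int = 2000, steps: int = 200,
             eta: float = 0.1, seed: int = 0) -> list[float]:
    rng = random.Random(seed)
    weights = []
    for _ in range(n_synapses):
        w = 1.0
        for _ in range(steps):
            # xi: hypothetical zero-mean pre/post correlation signal for this step
            xi = rng.uniform(-1.0, 1.0)
            if multiplicative:
                w *= 1.0 + eta * xi  # change proportional to the current weight
            else:
                w += eta * xi        # change independent of the current weight
        weights.append(w)
    return weights


mult = simulate(multiplicative=True)
add = simulate(multiplicative=False)
print("multiplicative: skew(w) =", round(skewness(mult), 2),
      "skew(log w) =", round(skewness([math.log(w) for w in mult]), 2))
print("additive:       skew(w) =", round(skewness(add), 2))
```

The multiplicative weights come out strongly right-skewed while their logarithms, like the additive weights, are close to symmetric, which is the signature of a lognormal versus a normal distribution.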

Dopamine modulation of prefrontal delay activity-reverberatory activity and sharpness of tuning curves

Aug 16, 2016

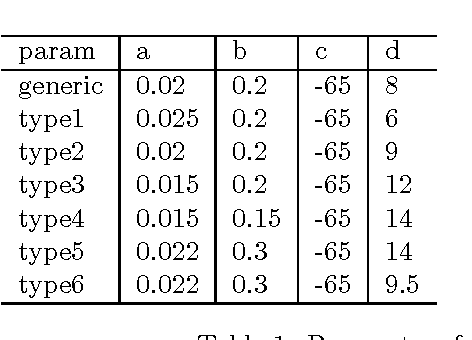

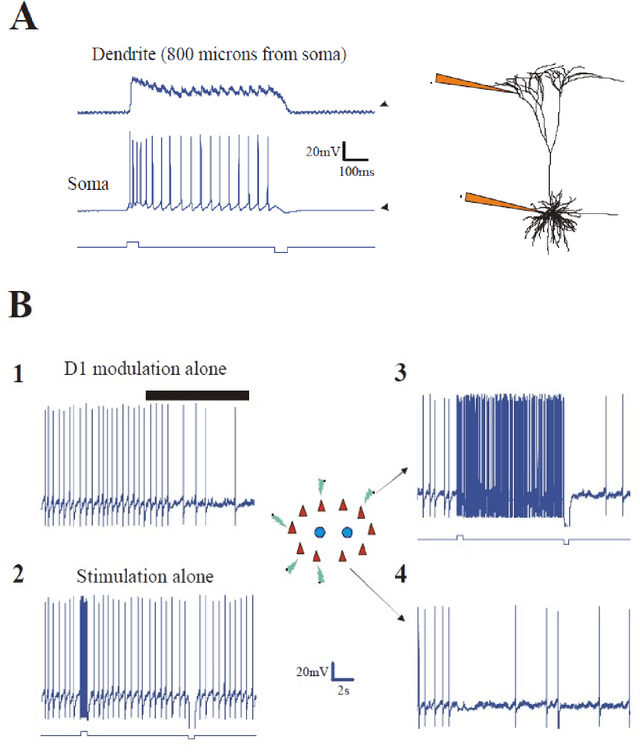

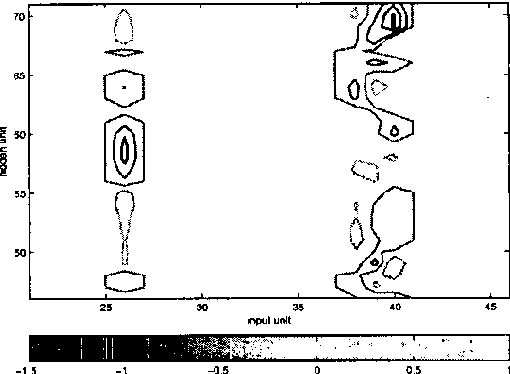

Abstract: Recent electrophysiological experiments have shown that dopamine (D1) modulation of pyramidal cells in prefrontal cortex reduces spike frequency adaptation and enhances NMDA transmission. Using four models, ranging from multicompartmental to integrate-and-fire, we examine the effects of these modulations on sustained (delay) activity in a reverberatory network. We find that D1 modulation may enable robust network bistability, yielding selective reverberation among cells that code for a particular item or location. We further show that the tuning curves of such cells are sharpened and that the signal-to-noise ratio is increased. We postulate that D1 modulation affects the tuning of "memory fields" and yields efficient distributed dynamic representations.

* CNS Conference 2001; 2 figures
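
The notion of bistable reverberation can be illustrated with a one-population firing-rate model, dr/dt = -r + f(w*r + I). In the sketch below, D1 modulation is represented only as a stronger recurrent weight `w`, a loose stand-in for enhanced NMDA transmission; reduced spike frequency adaptation is not modeled, and the sigmoid parameters, cue, and time constants are illustrative assumptions rather than any of the four models used in the paper.

```python
import math


def f(x: float, theta: float = 5.0, k: float = 1.0) -> float:
    # Sigmoidal population transfer function with (assumed) threshold theta and width k.
    return 1.0 / (1.0 + math.exp(-(x - theta) / k))


def delay_activity(w: float, cue: float = 10.0, cue_time: float = 5.0,
                   delay_time: float = 50.0, dt: float = 0.1) -> float:
    # Euler integration of dr/dt = -r + f(w*r + I): a cue is shown, then removed.
    r = 0.0
    cue_steps = round(cue_time / dt)
    total_steps = cue_steps + round(delay_time / dt)
    for step in range(total_steps):
        inp = cue if step < cue_steps else 0.0
        r += dt * (-r + f(w * r + inp))
    return r  # rate at the end of the delay period


weak = delay_activity(w=4.0)     # baseline recurrence: activity decays after the cue
strong = delay_activity(w=10.0)  # "modulated" recurrence: activity persists through the delay
```

Only the stronger recurrence sustains activity through the delay: with w = 10 the model has two stable states (one near zero, one near 1), and the transient cue switches it from the low state to the high one.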

Learning intrinsic excitability in medium spiny neurons

Dec 13, 2012

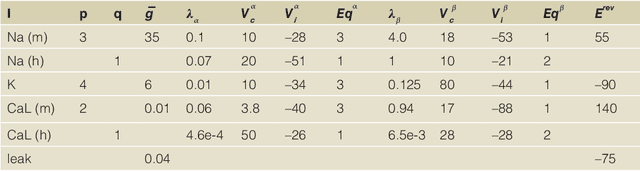

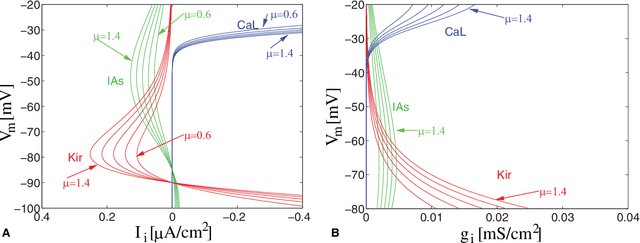

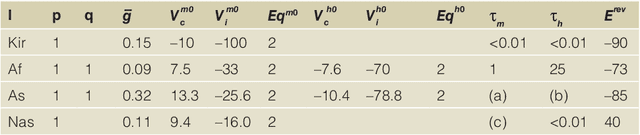

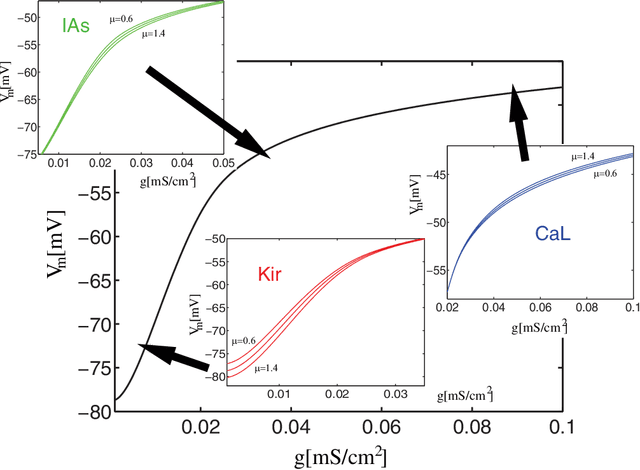

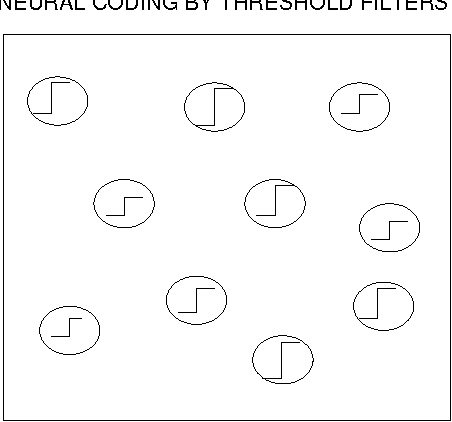

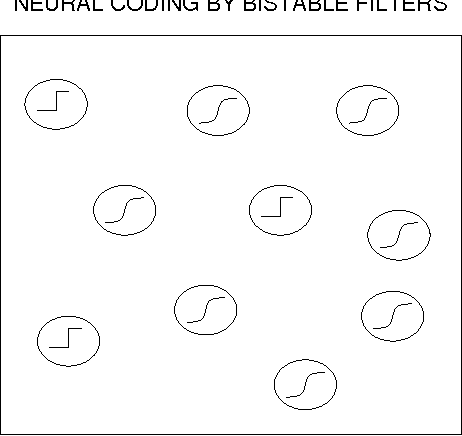

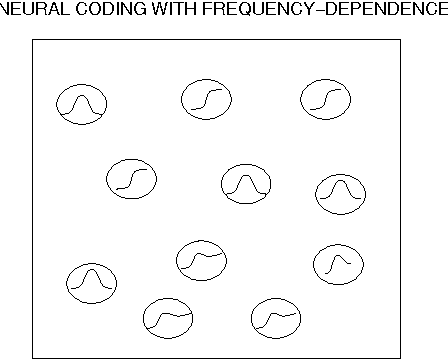

Abstract: We present an unsupervised, local, activation-dependent learning rule for intrinsic plasticity (IP), which affects the composition of ion channel conductances of single neurons in a use-dependent way. We use a single-compartment conductance-based model of medium spiny striatal neurons to show the effects of parametrizing individual ion channels on the neuronal activation function. We show that parameter changes within physiological ranges are sufficient to create an ensemble of neurons with significantly different activation functions. We emphasize that the effects of intrinsic neuronal variability on spiking behavior require a distributed mode of synaptic input and can be eliminated by strongly correlated input. We show how variability and adaptivity in ion channel conductances can be used to store patterns without an additional contribution from synaptic plasticity (SP). The adaptation of the spike response may result in either "positive" or "negative" pattern learning. However, read-out of stored information depends on a distributed pattern of synaptic activity that lets intrinsic variability determine the spike response. We briefly discuss the implications of this conditional memory for learning and addiction.

* 20 pages, 8 figures
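
The storage-and-read-out idea can be sketched with a toy ensemble in which each neuron's ion channel composition is collapsed into a single `gain` parameter. The local rule below raises a neuron's gain when its own output exceeds a target and lowers it otherwise, a simple form of "positive" pattern learning; distributed vs. strongly correlated input is represented only by weak vs. strong uniform drive. The rule, the target, and every constant are hypothetical simplifications, not the conductance-based learning rule from the paper.

```python
import math


def response(drive: float, gain: float, offset: float = 3.0) -> float:
    # Sigmoidal activation; 'gain' is a hypothetical one-number stand-in for ion channel composition.
    return 1.0 / (1.0 + math.exp(-(gain * drive - offset)))


def train_gains(pattern: list[int], drive: float = 4.0, eta: float = 0.1,
                target: float = 0.5, epochs: int = 10) -> list[float]:
    # Local, activation-dependent rule: each neuron uses only its own output.
    gains = [1.0] * len(pattern)
    for _ in range(epochs):
        for i, p in enumerate(pattern):
            y = response(drive * p, gains[i])
            gains[i] += eta * (y - target)  # above-target activity raises the gain
    return gains


def readout(gains: list[float], drive: float) -> list[float]:
    # Uniform test input: output differences come only from the learned intrinsic gains.
    return [response(drive, g) for g in gains]


gains = train_gains([1, 0, 1, 0])
weak = readout(gains, 3.0)     # weak, distributed input: stored pattern visible
strong = readout(gains, 20.0)  # strong, correlated input: all neurons saturate
```

Under weak uniform input the trained neurons (indices 0 and 2) respond clearly more than the others, so the stored pattern can be read out; under strong input all four saturate and the pattern is hidden, which mirrors the conditional read-out described in the abstract.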

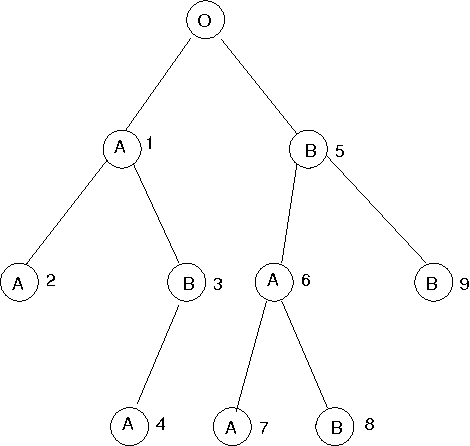

Memorization in a neural network with adjustable transfer function and conditional gating

Mar 07, 2004

Abstract: The main problem in replacing LTP as a memory mechanism has been to find other highly abstract, easily understandable principles for induced plasticity. In this paper, we attempt to lay out such a basic mechanism, namely intrinsic plasticity. Empirical observations of theoretical significance include the time-layering of neural plasticity, in which additional constraints govern entry into later stages; various manifestations of intrinsic neural properties; and conditional gating of synaptic connections. An important consequence of the proposed mechanism is that it can explain the usually latent nature of memories.

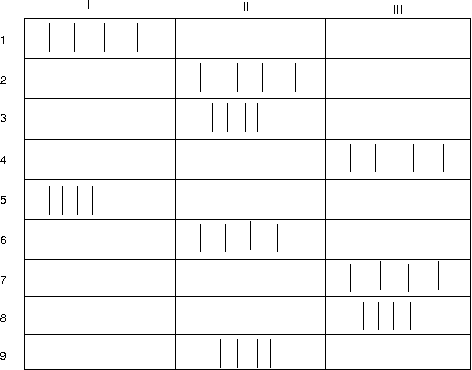

Presynaptic modulation as fast synaptic switching: state-dependent modulation of task performance

Jan 25, 2004

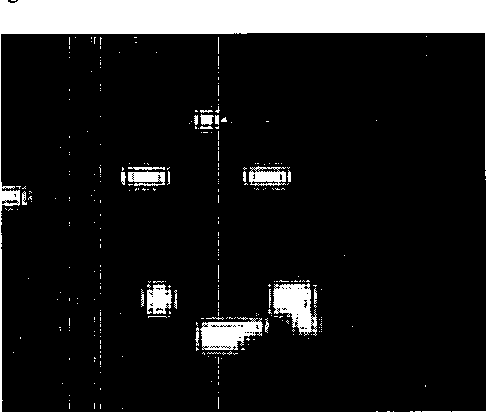

Abstract: Neuromodulatory receptors in presynaptic position can suppress synaptic transmission for seconds to minutes when fully engaged. This effectively alters the synaptic strength of a connection. Much work on neuromodulation has rested on the assumption that these effects are uniform at every neuron. However, there is considerable evidence that presynaptic regulation may in effect be synapse-specific. This would define a second "weight modulation" matrix, which reflects presynaptic receptor efficacy at a given site. Here we explore the functional consequences of this hypothesis. By analyzing and comparing the weight matrices of networks trained on different aspects of a task, we identify the potential for a low-complexity "modulation matrix", which allows switching between differently trained subtasks while retaining general performance characteristics for the task. This means that a given network can adapt itself to different task demands by regulating its release of neuromodulators. Specifically, we suggest that (a) a network can provide optimized responses for related classification tasks without the need to train entirely separate networks, and (b) a network can blend a "memory mode", which aims at reproducing memorized patterns, and a "novelty mode", which aims to facilitate classification of new patterns. We relate this work to the known effects of neuromodulators on brain-state-dependent processing.

* 6 pages, 13 figures
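
A synapse-specific modulation matrix can be expressed as an elementwise (Hadamard) product between the trained weights W and a matrix M whose entries give the fraction of transmission that survives presynaptic suppression. In the sketch below, the matrices, the two subtask settings, and the linear `blend` between them are hypothetical values chosen for illustration; the analysis of trained networks described in the abstract is not reproduced here.

```python
Matrix = list[list[float]]


def modulate(W: Matrix, M: Matrix) -> Matrix:
    # Effective weights: each synapse scaled by its own presynaptic modulation factor in [0, 1].
    return [[w * m for w, m in zip(w_row, m_row)] for w_row, m_row in zip(W, M)]


def blend(M_a: Matrix, M_b: Matrix, alpha: float) -> Matrix:
    # Hypothetical graded mixing of two modulation states (alpha = 1 gives M_a, 0 gives M_b).
    return [[alpha * a + (1 - alpha) * b for a, b in zip(ra, rb)] for ra, rb in zip(M_a, M_b)]


def forward(W: Matrix, x: list[float]) -> list[float]:
    # One linear layer: output_i = sum_j W[i][j] * x[j].
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


W = [[1.0, 0.5, 0.0],
     [0.2, 1.0, 0.8]]   # one set of trained weights shared by both subtasks (illustrative)
M_a = [[1.0, 1.0, 1.0],
       [1.0, 0.0, 1.0]]  # subtask A: suppress synapse (1, 1)
M_b = [[1.0, 0.0, 1.0],
       [1.0, 1.0, 1.0]]  # subtask B: suppress synapse (0, 1)
x = [1.0, 1.0, 1.0]

out_a = forward(modulate(W, M_a), x)
out_b = forward(modulate(W, M_b), x)
out_mix = forward(modulate(W, blend(M_a, M_b, 0.5)), x)
```

Switching between subtasks changes only M, never W, and each M differs from the all-ones matrix in a single entry, which is one way to read "low complexity". The blended matrix gives an intermediate response, loosely analogous to mixing a memory mode and a novelty mode.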

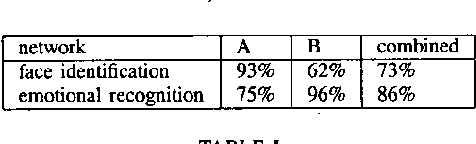

With raised eyebrows or the eyebrows raised? A Neural Network Approach to Grammar Checking for Definiteness

Jun 14, 1996

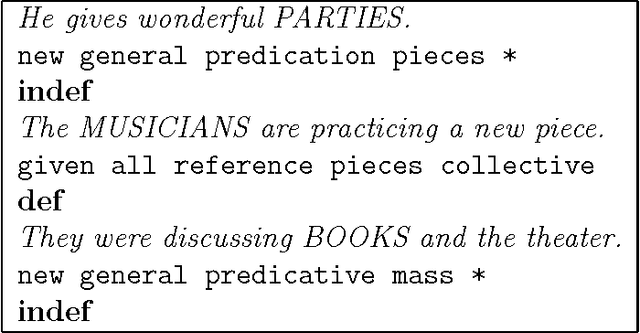

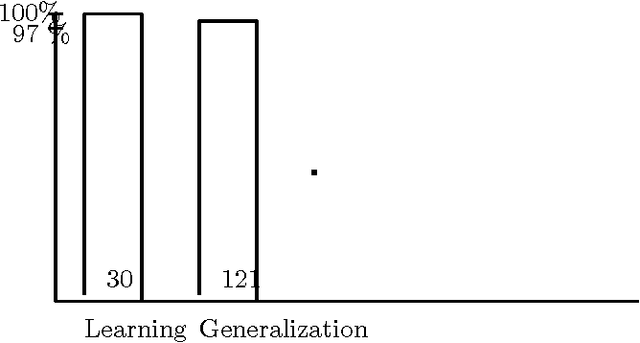

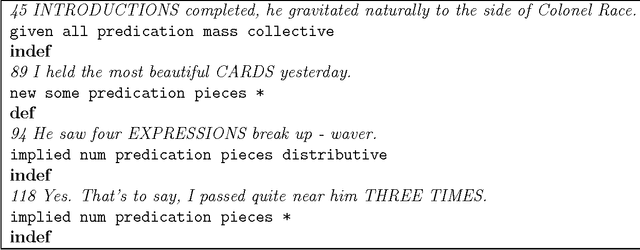

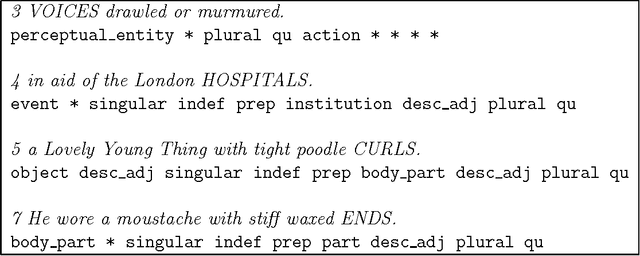

Abstract: In this paper, we use a feature model of the semantics of plural determiners to present an approach to grammar checking for definiteness. Using neural network techniques, we learned a mapping from semantic features to morphological categories. We then applied a textual encoding technique to the 125 occurrences of the relevant category in a 10,000-word narrative text and learned a mapping from surface forms to semantics. By applying the learned generation function to the newly generated representations, we achieved a correct category assignment in 87% of cases. This is considerably better than a direct surface categorization approach (54%) and than the baseline of always guessing the dominant category (60%). We discuss how these results could be used in multilingual NLP applications.
