Jason Zutty

LLM-Guided Evolution: An Autonomous Model Optimization for Object Detection

Apr 03, 2025

Abstract: In machine learning, Neural Architecture Search (NAS) requires domain knowledge of model design and a large amount of trial-and-error to achieve promising performance. Meanwhile, evolutionary algorithms have traditionally relied on fixed rules and pre-defined building blocks. The Large Language Model (LLM)-Guided Evolution (GE) framework transformed this approach by incorporating LLMs to directly modify model source code for image classification algorithms on CIFAR data and intelligently guide mutations and crossovers. A key element of LLM-GE is the "Evolution of Thought" (EoT) technique, which establishes feedback loops, allowing LLMs to refine their decisions iteratively based on how previous operations performed. In this study, we perform NAS for object detection by improving LLM-GE to modify the architecture of You Only Look Once (YOLO) models to enhance performance on the KITTI dataset. Our approach intelligently adjusts the design and settings of YOLO to find optimal algorithms against objectives such as detection accuracy and speed. We show that LLM-GE produced variants with significant performance improvements, such as an increase in Mean Average Precision from 92.5% to 94.5%. This result highlights the flexibility and effectiveness of LLM-GE on real-world challenges, offering a novel paradigm for automated machine learning that combines LLM-driven reasoning with evolutionary strategies.

LLM Guided Evolution -- The Automation of Models Advancing Models

Mar 18, 2024

Abstract: In the realm of machine learning, traditional model development and automated approaches like AutoML typically rely on layers of abstraction, such as tree-based or Cartesian genetic programming. Our study introduces "Guided Evolution" (GE), a novel framework that diverges from these methods by utilizing Large Language Models (LLMs) to directly modify code. GE leverages LLMs for a more intelligent, supervised evolutionary process, guiding mutations and crossovers. Our unique "Evolution of Thought" (EoT) technique further enhances GE by enabling LLMs to reflect on and learn from the outcomes of previous mutations. This results in a self-sustaining feedback loop that augments decision-making in model evolution. GE maintains genetic diversity, crucial for evolutionary algorithms, by leveraging LLMs' capability to generate diverse responses from expertly crafted prompts and to modulate model temperature. This not only accelerates the evolution process but also injects expert-like creativity and insight into it. Our application of GE in evolving the ExquisiteNetV2 model demonstrates its efficacy: the LLM-driven GE autonomously produced variants with improved accuracy, increasing from 92.52% to 93.34%, without compromising model compactness. This underscores the potential of LLMs to accelerate the traditional model design pipeline, enabling models to autonomously evolve and enhance their own designs.

Concurrent Neural Tree and Data Preprocessing AutoML for Image Classification

May 25, 2022

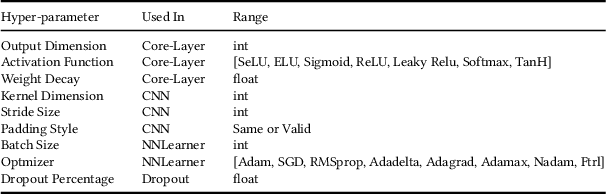

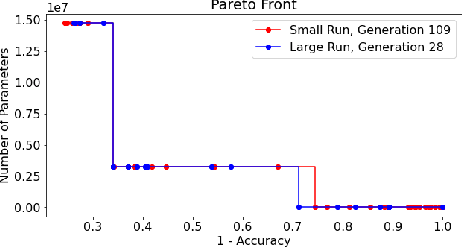

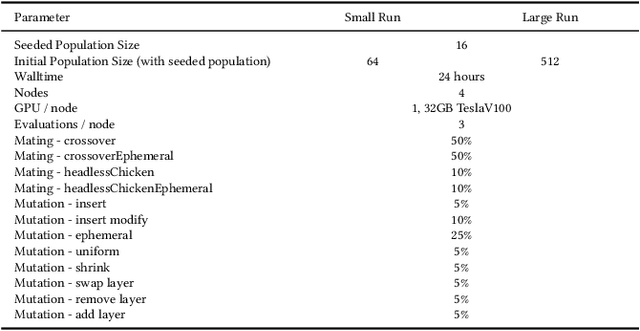

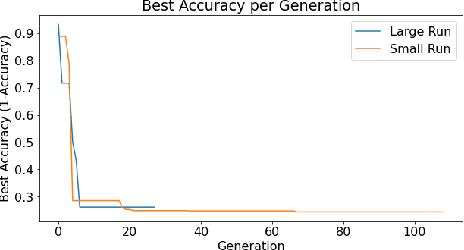

Abstract: Deep Neural Networks (DNNs) are a widely used solution for a variety of machine learning problems. However, it is often necessary to invest a significant amount of a data scientist's time to pre-process input data, test different neural network architectures, and tune hyper-parameters for optimal performance. Automated machine learning (autoML) methods automatically search the architecture and hyper-parameter space for optimal neural networks. However, current state-of-the-art (SOTA) methods do not include traditional methods for manipulating input data as part of the algorithmic search space. We adapt the Evolutionary Multi-objective Algorithm Design Engine (EMADE), a multi-objective evolutionary search framework for traditional machine learning methods, to perform neural architecture search. We also integrate EMADE's signal processing and image processing primitives. These primitives allow EMADE to manipulate input data before ingestion into the simultaneously evolved DNN. We show that including these methods as part of the search space has the potential to improve performance on the CIFAR-10 image classification benchmark dataset.

Evolving SimGANs to Improve Abnormal Electrocardiogram Classification

May 12, 2022

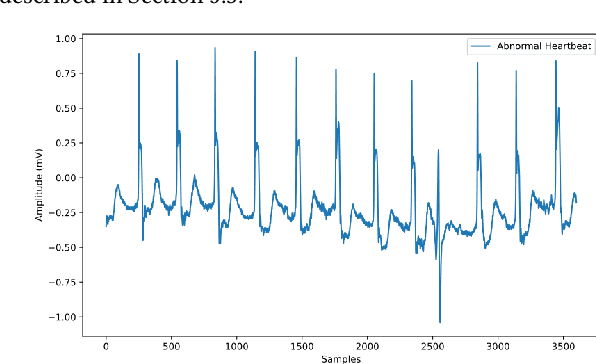

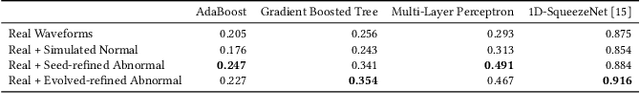

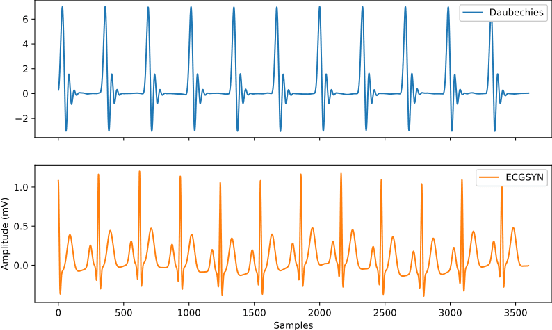

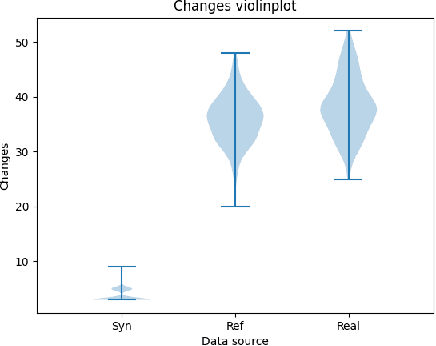

Abstract: Machine learning models are used in a wide variety of domains. However, machine learning methods often require a large amount of data in order to be successful. This is especially troublesome in domains where collecting real-world data is difficult and/or expensive. Data simulators do exist for many of these domains, but they do not sufficiently reflect real-world data due to factors such as a lack of real-world noise. Recently, generative adversarial networks (GANs) have been modified to refine simulated image data into data that better fits the real-world distribution, using the SimGAN method. While evolutionary computing has been used for GAN evolution, there are currently no frameworks that can evolve a SimGAN. In this paper we (1) extend the SimGAN method to refine one-dimensional data, (2) modify Easy Cartesian Genetic Programming (ezCGP), an evolutionary computing framework, to create SimGANs that more accurately refine simulated data, and (3) create new feature-based quantitative metrics to evaluate refined data. We also use our framework to augment an electrocardiogram (ECG) dataset, a domain that suffers from the issues previously mentioned. In particular, while healthy ECGs can be simulated, there are no current simulators of abnormal ECGs. We show that by using an evolved SimGAN to refine simulated healthy ECG data to mimic real-world abnormal ECGs, we can improve the accuracy of abnormal ECG classifiers.
