Jason Rife

A Probabilistic Formulation of LiDAR Mapping with Neural Radiance Fields

Nov 04, 2024

Abstract: In this paper we reexamine the process through which a Neural Radiance Field (NeRF) can be trained to produce novel LiDAR views of a scene. Unlike image applications where camera pixels integrate light over time, LiDAR pulses arrive at specific times. As such, multiple LiDAR returns are possible for any given detector and the classification of these returns is inherently probabilistic. Applying a traditional NeRF training routine can result in the network learning phantom surfaces in free space between conflicting range measurements, similar to how floater aberrations may be produced by an image model. We show that by formulating loss as an integral of probability (rather than as an integral of optical density) the network can learn multiple peaks for a given ray, allowing the sampling of first, nth, or strongest returns from a single output channel. Code is available at https://github.com/mcdermatt/PLINK
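As an illustration of the last point, suppose the network's output along one ray has been sampled as a per-depth return probability. This is a hypothetical array here; the paper's actual output parameterization and the PLINK code may differ. Once a probability profile can hold several peaks, choosing the first, nth, or strongest return reduces to simple peak picking. The function names and the `min_prob` threshold below are assumptions for illustration:

```python
import numpy as np


def extract_returns(depths, probs, min_prob=0.05):
    """Return (depths, probabilities) of local maxima along one ray.

    depths:   (N,) sample depths along the ray, in increasing order.
    probs:    (N,) per-sample return probability (hypothetical network output).
    min_prob: peaks below this value are ignored as noise (assumed threshold).
    """
    depths = np.asarray(depths, dtype=float)
    probs = np.asarray(probs, dtype=float)
    is_peak = np.zeros(probs.shape, dtype=bool)
    # A sample is a peak if it rises above its left neighbor and is not
    # exceeded by its right neighbor (so a flat top is counted once).
    is_peak[1:-1] = (
        (probs[1:-1] > probs[:-2])
        & (probs[1:-1] >= probs[2:])
        & (probs[1:-1] >= min_prob)
    )
    idx = np.flatnonzero(is_peak)
    return depths[idx], probs[idx]


def select_return(depths, probs, mode="first", n=1):
    """Pick the first, nth (1-based), or strongest return from a profile."""
    peak_depths, peak_probs = extract_returns(depths, probs)
    if peak_depths.size == 0:
        return None
    if mode == "first":
        return peak_depths[0]
    if mode == "nth":
        return peak_depths[n - 1] if n <= peak_depths.size else None
    if mode == "strongest":
        return peak_depths[np.argmax(peak_probs)]
    raise ValueError(f"unknown mode: {mode}")


# Synthetic ray: a weak return at 5 m (e.g. foliage) and a strong one at 12 m.
z = np.linspace(0.0, 20.0, 401)
p = 0.3 * np.exp(-0.5 * ((z - 5.0) / 0.2) ** 2) + 0.6 * np.exp(-0.5 * ((z - 12.0) / 0.2) ** 2)
print(select_return(z, p, "first"), select_return(z, p, "nth", 2), select_return(z, p, "strongest"))
```

With a single-peaked (first-surface) profile, all three modes would collapse to the same depth; it is the ability to represent several peaks that makes the distinction meaningful.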

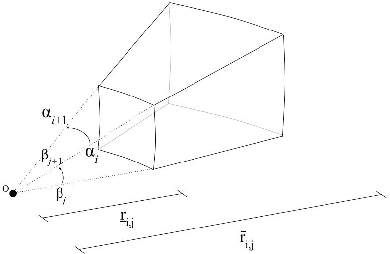

Characterizing Perspective Error in Voxel-Based Lidar Scan Matching

Jan 24, 2024

Abstract: This paper quantifies an error source that limits the accuracy of lidar scan matching, particularly for voxel-based methods. Lidar scan matching, which is used in dead reckoning (also known as lidar odometry) and mapping, computes the rotation and translation that best align a pair of point clouds. Perspective errors occur when a scene is viewed from different angles, with different surfaces becoming visible or occluded from each viewpoint. To explain perspective anomalies observed in data, this paper models perspective errors for two objects representative of urban landscapes: a cylindrical column and a dual-wall corner. For each object, we provide an analytical model of the perspective error for voxel-based lidar scan matching. We then analyze how perspective errors accumulate as a lidar-equipped vehicle moves past these objects.
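To make the cylindrical-column case concrete, here is a simplified 2D sketch. It is our own illustration, not the paper's analytical model. It assumes the lidar sees only the arc of the column that faces it and that returns are spread uniformly along that arc. Under those assumptions, the centroid of the visible points, which is roughly what a voxel-based method summarizes, depends on where the sensor stands even though the column never moves. The visible arc is bounded by the tangent lines from the viewer, so its half-angle at the column center is $\alpha = \arccos(R/d)$. The arc's centroid lies $R\sin\alpha/\alpha$ from the center, toward the viewer.

```python
import numpy as np


def visible_arc_centroid(center, radius, viewer):
    """Centroid of the arc of a circle visible from a 2D viewpoint.

    Assumes points are uniformly distributed along the visible arc (an
    idealization; real lidar sampling is uniform in beam angle instead).
    """
    center = np.asarray(center, dtype=float)
    viewer = np.asarray(viewer, dtype=float)
    offset = viewer - center
    d = np.linalg.norm(offset)
    if d <= radius:
        raise ValueError("viewer must be outside the column")
    # Tangent lines from the viewer bound the visible arc; its half-angle,
    # measured at the column center, is arccos(R / d).
    alpha = np.arccos(radius / d)
    # The centroid of a circular arc with half-angle alpha lies R*sin(alpha)/alpha
    # from the center along the arc's axis of symmetry (toward the viewer).
    return center + radius * np.sin(alpha) / alpha * offset / d


# A 1 m radius column at the origin, seen from two points on a 10 m circle.
c_east = visible_arc_centroid([0.0, 0.0], 1.0, [10.0, 0.0])
c_north = visible_arc_centroid([0.0, 0.0], 1.0, [0.0, 10.0])
# A matcher aligning these centroids would see a spurious shift (meters).
print(np.linalg.norm(c_east - c_north))
```

For these two viewpoints the apparent shift is about 0.96 m for a 1 m radius column. As the viewer recedes, $\alpha \to \pi/2$ and the centroid offset approaches $2R/\pi$.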

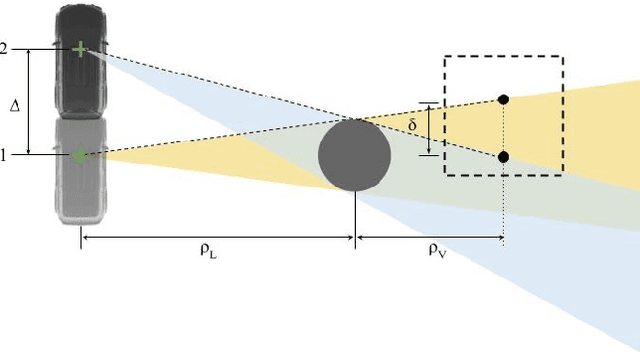

Correcting Motion Distortion for LIDAR HD-Map Localization

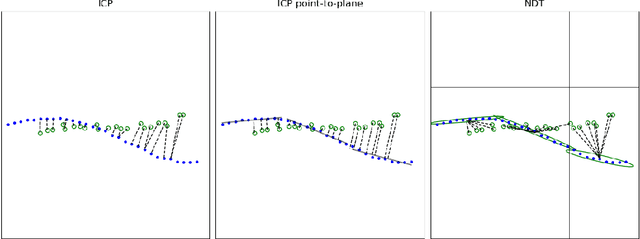

Aug 25, 2023

Abstract: Because scanning-LIDAR sensors require finite time to create a point cloud, sensor motion during a scan warps the resulting image, a phenomenon known as motion distortion or rolling shutter. Motion-distortion correction methods exist, but they rely on external measurements or Bayesian filtering over multiple LIDAR scans. In this paper we propose a novel algorithm that performs snapshot processing to obtain a motion-distortion correction. Snapshot processing, which registers a current LIDAR scan to a reference image without using external sensors or Bayesian filtering, is particularly relevant for localization to a high-definition (HD) map. Our approach, which we call Velocity-corrected Iterative Compact Ellipsoidal Transformation (VICET), extends the well-known Normal Distributions Transform (NDT) algorithm to solve jointly for both a 6 Degree-of-Freedom (DOF) rigid transform between two LIDAR scans and a set of 6DOF motion states that describe distortion within the current LIDAR scan. Using experiments, we show that VICET achieves significantly higher accuracy than NDT or Iterative Closest Point (ICP) algorithms when localizing a distorted raw LIDAR scan against an undistorted HD Map. We recommend the reader explore our open-source code and visualizations at https://github.com/mcdermatt/VICET, which supplements this manuscript.
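For readers new to motion distortion, the sketch below shows the simplest possible correction. It assumes the sensor's velocity is known and constant over one sweep, and moves each point into the sensor frame at the start of the scan using its own timestamp. This is a generic planar de-skew, not VICET itself, which instead estimates the motion states jointly with the scan-to-map transform. The function names, the planar motion, and the convention that velocity is fixed in the start-of-scan frame are all assumptions for illustration:

```python
import numpy as np


def rot2d(theta):
    """2x2 rotation matrix for a planar heading angle (radians)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])


def deskew_constant_velocity(points, times, velocity, yaw_rate):
    """Map each point into the sensor frame at scan start (t = 0).

    points:   (N, 2) coordinates, each in the sensor frame at its own capture time.
    times:    (N,) capture times in seconds since the scan started.
    velocity: (2,) linear velocity, expressed in the start-of-scan frame (m/s).
    yaw_rate: constant angular rate (rad/s).
    """
    points = np.asarray(points, dtype=float)
    velocity = np.asarray(velocity, dtype=float)
    out = np.empty_like(points)
    for i, (p, t) in enumerate(zip(points, times)):
        # Under the constant-velocity model, the sensor pose at time t is a
        # rotation by yaw_rate * t and a translation by velocity * t.
        out[i] = rot2d(yaw_rate * t) @ p + velocity * t
    return out


# Simulate a sweep over static wall points seen by a moving sensor, then undo it.
world = np.stack([np.full(100, 5.0), np.linspace(-2.0, 2.0, 100)], axis=1)
t = np.linspace(0.0, 0.1, 100)          # 100 ms sweep
v, w = np.array([10.0, 0.0]), 0.5       # 10 m/s forward, 0.5 rad/s yaw
distorted = np.array([rot2d(w * ti).T @ (pw - v * ti) for pw, ti in zip(world, t)])
restored = deskew_constant_velocity(distorted, t, v, w)
print(np.max(np.abs(restored - world)))  # near zero (floating-point round-off only)
```

The round trip is exact here only because the simulated motion matches the assumed model; when velocity is unknown, it has to be estimated, which is the problem the abstract addresses.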

ICET Online Accuracy Characterization for Geometry-Based Laser Scan Matching

Jun 14, 2023

Abstract: Distribution-to-Distribution (D2D) point cloud registration algorithms are fast, interpretable, and perform well in unstructured environments. Unfortunately, existing strategies for predicting solution error for these methods are overly optimistic, particularly in regions containing large or extended physical objects. In this paper we introduce the Iterative Closest Ellipsoidal Transform (ICET), a novel 3D LIDAR scan-matching algorithm that re-envisions NDT in order to provide robust accuracy prediction from first principles. Like NDT, ICET subdivides a LIDAR scan into voxels in order to analyze complex scenes by considering many smaller local point distributions; however, ICET assesses the voxel distribution to distinguish random noise from deterministic structure. ICET then uses a weighted least-squares formulation to incorporate this noise/structure distinction into computing a localization solution and predicting the solution-error covariance. In order to demonstrate the reasonableness of our accuracy predictions, we verify 3D ICET in three LIDAR tests involving real-world automotive data, high-fidelity simulated trajectories, and simulated corner-case scenes. For each test, ICET consistently performs scan matching with sub-centimeter accuracy. This level of accuracy, combined with the fact that the algorithm is fully interpretable, makes it well suited for safety-critical transportation applications. Code is available at https://github.com/mcdermatt/ICET

Multipath Effects on Frequency-Locked Loops (FLLs) and FLL-derived Doppler Measurements

Mar 05, 2023

Abstract: This paper investigates the impact of non-line-of-sight (NLOS) and multipath signals on frequency-locked loops (FLLs), which are commonly used to obtain Doppler shift measurements for velocity estimation in radio navigation. A salient result is that, in the absence of a direct signal, a single NLOS signal does not corrupt Doppler observables if the angle-of-arrival (AOA) is known. Another striking result is that, in a multipath environment involving two signals, the arctangent discriminator (averaged over the beat frequency) tracks only the higher amplitude signal. Our investigation has particular significance for radio navigation in urban environments, either using global navigation satellite system (GNSS) signals or ground-based cellular signals.
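The two-signal result can be checked numerically. The sketch below evaluates the standard arctangent FLL discriminator, atan2(cross, dot) divided by the sample interval, on consecutive samples of a direct tone plus one reflected tone, and averages it over many beat periods. It is an open-loop check on noise-free complex samples, an assumption made for clarity. A real FLL closes the loop through a filter and a numerically controlled oscillator. The function name and parameter values are illustrative:

```python
import numpy as np


def averaged_atan_fll(f1, a1, f2, a2, dt=1e-3, n=10_000):
    """Average the arctangent FLL discriminator over n noise-free samples.

    Two complex tones (amplitudes a1, a2; frequencies f1, f2 in Hz) are summed,
    as for a direct signal plus one multipath reflection.
    """
    k = np.arange(n)
    s = a1 * np.exp(2j * np.pi * f1 * k * dt) + a2 * np.exp(2j * np.pi * f2 * k * dt)
    i_prev, q_prev = s.real[:-1], s.imag[:-1]
    i_curr, q_curr = s.real[1:], s.imag[1:]
    dot = i_prev * i_curr + q_prev * q_curr
    cross = i_prev * q_curr - i_curr * q_prev
    # Per-sample frequency estimate from the phase change between samples.
    f_est = np.arctan2(cross, dot) / (2 * np.pi * dt)
    return f_est.mean()


# Direct tone at 50 Hz, reflection at 53 Hz (3 Hz beat, about 30 beat periods).
print(averaged_atan_fll(50.0, 1.0, 53.0, 0.6))  # close to 50: the direct tone is stronger
print(averaged_atan_fll(50.0, 1.0, 53.0, 1.2))  # close to 53: the reflection is stronger
```

The averaged estimate follows the stronger tone because the phase of the summed phasor equals the stronger tone's phase plus a bounded wobble when the amplitude ratio is below one. That wobble does not wind around the origin, so its contribution cancels over each beat period.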

DNN Filter for Bias Reduction in Distribution-to-Distribution Scan Matching

Nov 18, 2022

Abstract: Distribution-to-distribution (D2D) point cloud registration techniques such as the Normal Distributions Transform (NDT) can align point clouds sampled from unstructured scenes and provide accurate bounds of their own solution error covariance, an important feature for safety-of-life navigation tasks. D2D methods rely on the assumption of a static scene and are therefore susceptible to bias from range-shadowing, self-occlusion, moving objects, and distortion artifacts as the recording device moves between frames. Deep learning-based approaches can achieve higher accuracy in dynamic scenes by relaxing these constraints; however, DNNs produce uninterpretable solutions, which can be problematic from a safety perspective. In this paper, we propose a method of down-sampling LIDAR point clouds to exclude voxels that violate the assumption of a static scene and introduce error into the D2D scan matching process. Our approach uses a solution consistency filter, identifying and suppressing voxels where D2D contributions disagree with local estimates from a PointNet-based registration network. Our results show that this technique provides significant benefits in registration accuracy, and is particularly useful in scenes containing dense foliage.
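The solution consistency filter can be pictured as a per-voxel gate. In the hypothetical sketch below, each voxel contributes a local D2D translation estimate (for example, the shift between its two voxel means). An array of learned local estimates stands in for the PointNet-based network. Voxels whose two estimates disagree by more than a threshold are suppressed before the D2D solution is formed. The array names, the translation-only model, and the threshold value are assumptions, not details taken from the paper:

```python
import numpy as np


def consistency_filter(d2d_offsets, learned_offsets, threshold):
    """Boolean mask of voxels whose D2D estimate agrees with the learned one."""
    d2d_offsets = np.asarray(d2d_offsets, dtype=float)
    learned_offsets = np.asarray(learned_offsets, dtype=float)
    # Euclidean disagreement between the two per-voxel estimates.
    disagreement = np.linalg.norm(d2d_offsets - learned_offsets, axis=1)
    return disagreement <= threshold


# Five voxels: four see the static scene shift by about (1.0, 0.0) m; the fifth
# contains a moving object and reports a very different offset.
d2d = np.array([[1.0, 0.0], [1.02, -0.01], [0.98, 0.01], [1.0, 0.02], [3.0, 2.0]])
learned = np.tile([1.0, 0.05], (5, 1))  # stand-in for the network's local estimates
keep = consistency_filter(d2d, learned, threshold=0.5)
print(keep)                    # the moving-object voxel is rejected
print(d2d[keep].mean(axis=0))  # translation from the surviving voxels
```

The learned estimate only decides which voxels participate; the final solution still comes from the interpretable D2D contributions, which is the property the abstract emphasizes.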

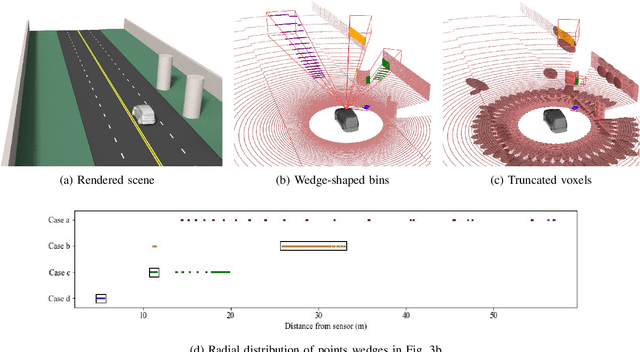

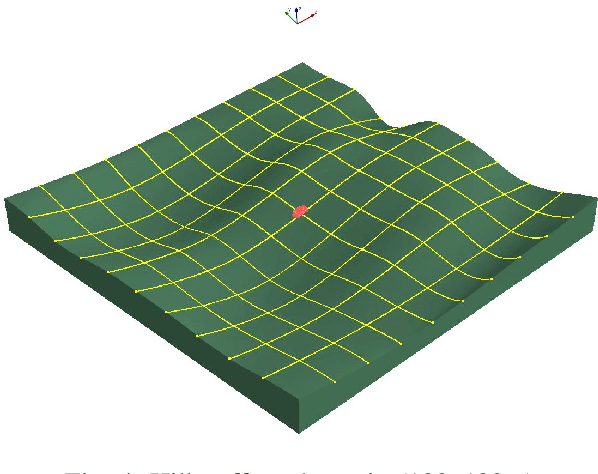

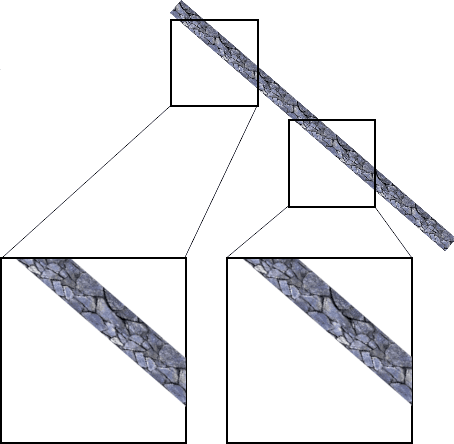

Mitigating Shadows in Lidar Scan Matching using Spherical Voxels

Aug 01, 2022

Abstract: In this paper we propose an approach to mitigate shadowing errors in Lidar scan matching, by introducing a preprocessing step based on spherical gridding. Because the grid aligns with the Lidar beam, it is relatively easy to eliminate shadow edges which cause systematic errors in Lidar scan matching. As we show through simulation, our proposed algorithm provides better results than ground-plane removal, the most common existing strategy for shadow mitigation. Unlike ground plane removal, our method applies to arbitrary terrains (e.g. shadows on urban walls, shadows in hilly terrain) while retaining key Lidar points on the ground that are critical for estimating changes in height, pitch, and roll. Our preprocessing algorithm can be used with a range of scan-matching methods; however, for voxel-based scan matching methods, it provides additional benefits by reducing computation costs and more evenly distributing Lidar points among voxels.
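The sketch below illustrates why a beam-aligned grid makes shadow edges easy to find. Points are binned by azimuth and elevation. A cell is flagged as a shadow edge when an azimuthal neighbor holds a much closer return, meaning the cell lies just past an occluder's silhouette. The range-jump rule, bin counts, and threshold are our assumptions for illustration; the paper's preprocessing may use a different criterion:

```python
import numpy as np


def remove_shadow_edges(points, n_az=360, n_el=16, el_limits=(-0.3, 0.3), jump=1.0):
    """Drop points in spherical cells that sit next to a much closer occluder.

    points:    (N, 3) Cartesian coordinates in the sensor frame (meters).
    el_limits: elevation range (radians) spanned by the n_el elevation bins.
    jump:      range discontinuity (meters) treated as an occlusion boundary.
    """
    points = np.asarray(points, dtype=float)
    x, y, z = points.T
    rng = np.sqrt(x**2 + y**2 + z**2)
    az = np.arctan2(y, x)
    el = np.arcsin(z / rng)
    # Cell indices on a grid aligned with the lidar beams.
    a = np.floor((az + np.pi) / (2 * np.pi) * n_az).astype(int) % n_az
    e_lo, e_hi = el_limits
    e = np.clip(np.floor((el - e_lo) / (e_hi - e_lo) * n_el).astype(int), 0, n_el - 1)
    # Nearest return per cell; empty cells stay at +inf.
    near = np.full((n_el, n_az), np.inf)
    np.minimum.at(near, (e, a), rng)
    left = np.roll(near, 1, axis=1)    # neighbor at the previous azimuth bin
    right = np.roll(near, -1, axis=1)  # neighbor at the next azimuth bin
    shadow_edge = (left < near - jump) | (right < near - jump)
    return points[~shadow_edge[e, a]]


# A wall 10 m away spanning -30..30 deg of azimuth, with a post 3 m away
# covering -5..5 deg; only the wall cells touching the post are removed.
az_deg = np.arange(-30, 30) + 0.5
r = np.where(np.abs(az_deg) < 5, 3.0, 10.0)
scan = np.stack([r * np.cos(np.radians(az_deg)),
                 r * np.sin(np.radians(az_deg)),
                 np.zeros_like(r)], axis=1)
kept = remove_shadow_edges(scan)
print(len(scan), len(kept))
```

Because each cell corresponds to a beam direction, the comparison is a simple neighbor lookup; the same test on a Cartesian voxel grid would require ray tracing to decide which voxels lie behind an occluder.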

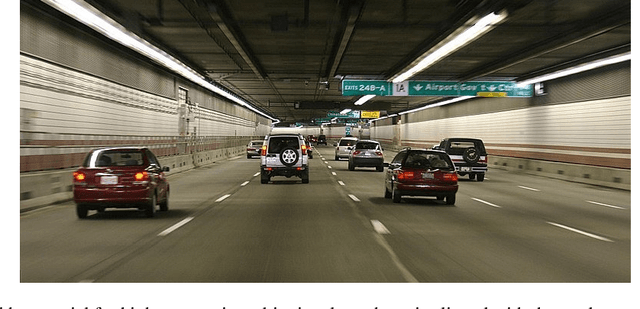

Enhanced Laser-Scan Matching with Online Error Estimation for Highway and Tunnel Driving

Jul 29, 2022

Abstract: Lidar data can be used to generate point clouds for the navigation of autonomous vehicles or mobile robotics platforms. Scan matching, the process of estimating the rigid transformation that best aligns two point clouds, is the basis for lidar odometry, a form of dead reckoning. Lidar odometry is particularly useful when absolute sensors, like GPS, are not available. Here we propose the Iterative Closest Ellipsoidal Transform (ICET), a scan matching algorithm which provides two novel improvements over the current state-of-the-art Normal Distributions Transform (NDT). Like NDT, ICET decomposes lidar data into voxels and fits a Gaussian distribution to the points within each voxel. The first innovation of ICET reduces geometric ambiguity along large flat surfaces by suppressing the solution along those directions. The second innovation of ICET is to infer the output error covariance associated with the position and orientation transformation between successive point clouds; the error covariance is particularly useful when ICET is incorporated into a state-estimation routine such as an extended Kalman filter. We constructed a simulation to compare the performance of ICET and NDT in 2D space both with and without geometric ambiguity and found that ICET produces superior estimates while accurately predicting solution accuracy.
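The two innovations can be sketched together for a translation-only 2D case. For each voxel, the principal axes of the reference points are found. Axes whose spread exceeds what sensor noise would explain (e.g. along a wall that fills the voxel) are treated as ambiguous and dropped. The remaining axes enter a weighted least-squares solve, and the inverse of the accumulated information matrix serves as the predicted error covariance. This is a simplified illustration under our own assumptions (paired voxels, translation only, a fixed `max_std` threshold). It is not the ICET implementation, which also estimates rotation:

```python
import numpy as np


def icet_like_translation(voxels_a, voxels_b, max_std=0.05):
    """Weighted least-squares 2D translation with ambiguous axes suppressed.

    voxels_a, voxels_b: lists of (N_i, 2) point arrays, paired voxel by voxel
    (reference scan and new scan). max_std: assumed noise-level threshold (m);
    principal axes spread wider than this are treated as structure, not noise,
    and excluded from the solution.
    Returns the translation estimate and its predicted 2x2 error covariance.
    """
    info = np.zeros((2, 2))  # accumulated H^T W H
    rhs = np.zeros(2)        # accumulated H^T W y
    for pa, pb in zip(voxels_a, voxels_b):
        mu_a, mu_b = pa.mean(axis=0), pb.mean(axis=0)
        cov_a = np.cov(pa, rowvar=False)
        cov_b = np.cov(pb, rowvar=False)
        evals, evecs = np.linalg.eigh(cov_a)
        keep = np.sqrt(np.maximum(evals, 0.0)) <= max_std
        if not np.any(keep):
            continue  # every axis is ambiguous; the voxel contributes nothing
        L = evecs[:, keep].T                                 # rows: retained axes
        y = L @ (mu_b - mu_a)                                # shift along those axes
        R = L @ (cov_a / len(pa) + cov_b / len(pb)) @ L.T   # covariance of y
        W = np.linalg.inv(R)
        info += L.T @ W @ L
        rhs += L.T @ W @ y
    cov = np.linalg.inv(info)  # raises LinAlgError if a direction is unobservable
    return cov @ rhs, cov


rng = np.random.default_rng(0)
t_true = np.array([0.3, -0.2])
s = np.linspace(0.0, 1.0, 200)


def noise():
    """1 cm Gaussian range noise (assumed sensor model)."""
    return rng.normal(0.0, 0.01, s.size)


# Voxel 1: a wall along x that fills the voxel, so only its y-shift is observable.
wall_x_a = np.stack([s, 2.0 + noise()], axis=1)
wall_x_b = np.stack([s, 2.0 + t_true[1] + noise()], axis=1)
# Voxel 2: a wall along y, observable only in x.
wall_y_a = np.stack([5.0 + noise(), s], axis=1)
wall_y_b = np.stack([5.0 + t_true[0] + noise(), s], axis=1)
t_hat, cov = icet_like_translation([wall_x_a, wall_y_a], [wall_x_b, wall_y_b])
print(t_hat, np.sqrt(np.diag(cov)))
```

Each wall alone constrains only one direction; together they make the translation fully observable, and the predicted one-sigma errors come out on the order of a millimeter, consistent with 1 cm noise averaged over 200 points per voxel.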
