Jannik Franzen

Arctique: An artificial histopathological dataset unifying realism and controllability for uncertainty quantification

Nov 11, 2024

Abstract: Uncertainty Quantification (UQ) is crucial for reliable image segmentation. Yet, while the field sees continual development of novel methods, a lack of agreed-upon benchmarks limits their systematic comparison and evaluation: current UQ methods are typically tested either on overly simplistic toy datasets or on complex real-world datasets in which the true uncertainty cannot be discerned. To unify both controllability and complexity, we introduce Arctique, a procedurally generated dataset modeled after histopathological colon images. We chose histopathological images for two reasons: 1) their complexity in terms of intricate object structures and highly variable appearance, which yields challenging segmentation problems, and 2) their broad prevalence in medical diagnosis and the resulting relevance of high-quality UQ. To generate Arctique, we established a Blender-based framework for 3D scene creation with intrinsic noise manipulation. Arctique contains 50,000 rendered images with precise masks as well as noisy label simulations. We show that by independently controlling the uncertainty in both images and labels, we can effectively study the performance of several commonly used UQ methods. Hence, Arctique serves as a critical resource for benchmarking and advancing UQ techniques and other methodologies in complex, multi-object environments, bridging the gap between realism and controllability. All code is publicly available, allowing re-creation and controlled manipulation of our shipped images as well as creation and rendering of new scenes.

Model Guidance via Explanations Turns Image Classifiers into Segmentation Models

Jul 03, 2024

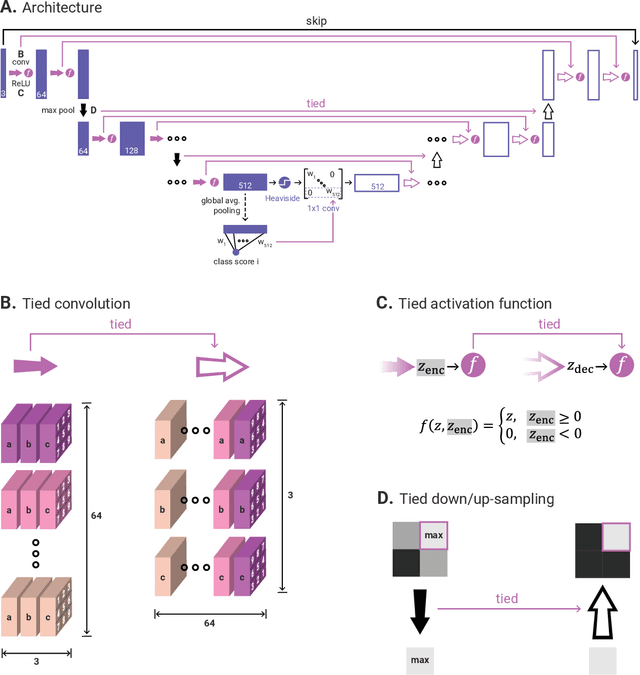

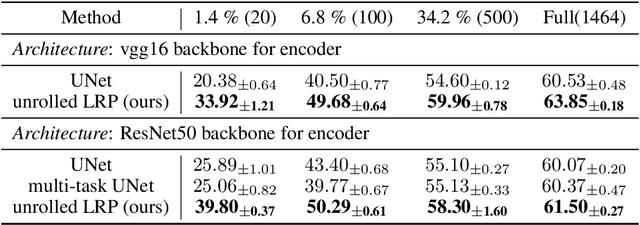

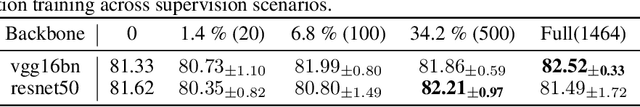

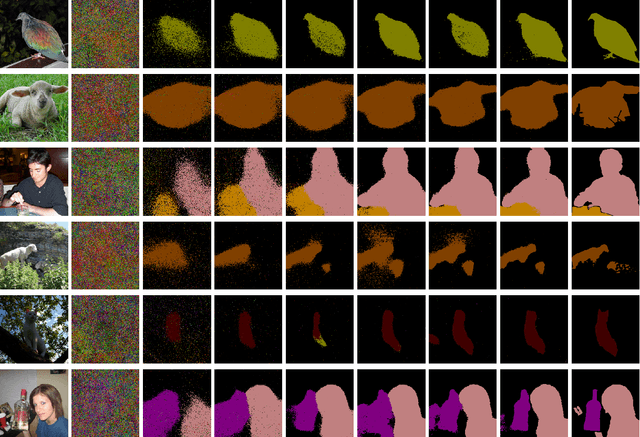

Abstract: Heatmaps generated on inputs of image classification networks via explainable AI methods like Grad-CAM and LRP have been observed to resemble segmentations of input images in many cases. Consequently, heatmaps have also been leveraged for achieving weakly supervised segmentation with image-level supervision. On the other hand, losses can be imposed on differentiable heatmaps, which has been shown to serve for (1) improving heatmaps to be more human-interpretable, (2) regularization of networks towards better generalization, (3) training diverse ensembles of networks, and (4) explicitly ignoring confounding input features. Due to the latter use case, the paradigm of imposing losses on heatmaps is often referred to as "Right for the Right Reasons". We unify these two lines of research by investigating semi-supervised segmentation as a novel use case for the Right for the Right Reasons paradigm. First, we show formal parallels between differentiable heatmap architectures and standard encoder-decoder architectures for image segmentation. Second, we show that such differentiable heatmap architectures yield competitive results when trained with standard segmentation losses. Third, we show that such architectures allow for training with weak supervision in the form of image-level labels and small numbers of pixel-level labels, outperforming comparable encoder-decoder models. Code is available at https://github.com/Kainmueller-Lab/TW-autoencoder.
