Janis Stolzenwald

Reach Out and Help: Assisted Remote Collaboration through a Handheld Robot

Oct 04, 2019

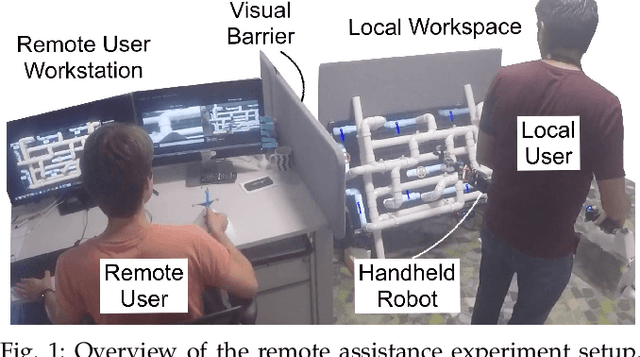

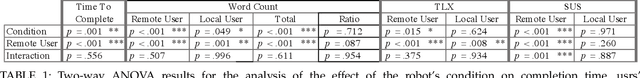

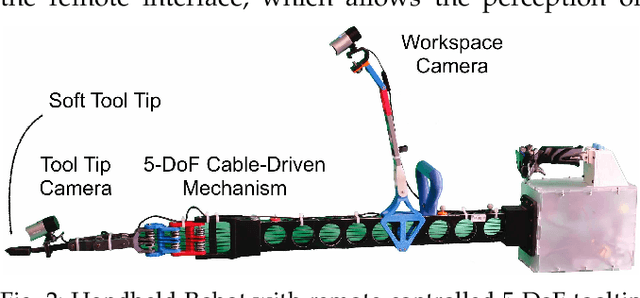

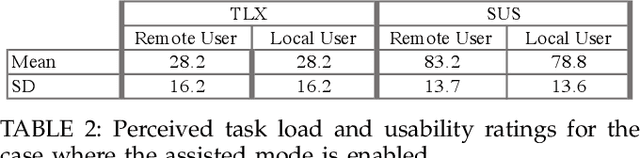

Abstract: We explore a remote collaboration setup that involves three parties: a local worker, a remote helper, and a handheld robot carried by the local worker. We propose a system that allows the remote user to assist the local user through diagnosis, guidance and, as a novel aspect, physical interaction, with the handheld robot providing task knowledge and enhanced motion and accuracy capabilities. Through experimental studies, we assess the proposed system in two configurations: with and without the robot's assistance in terms of object interactions and task knowledge. We show that the handheld robot can mediate the helper's instructions and remote object interactions, while the robot's semi-autonomous features improve task performance by 24%, reduce the workload for the remote user and decrease the required communication bandwidth between both users. This study is a first attempt to evaluate how this new type of collaborative robot works in a remote assistance scenario, a setup that we believe is important to leverage current robot constraints and existing communication technologies.

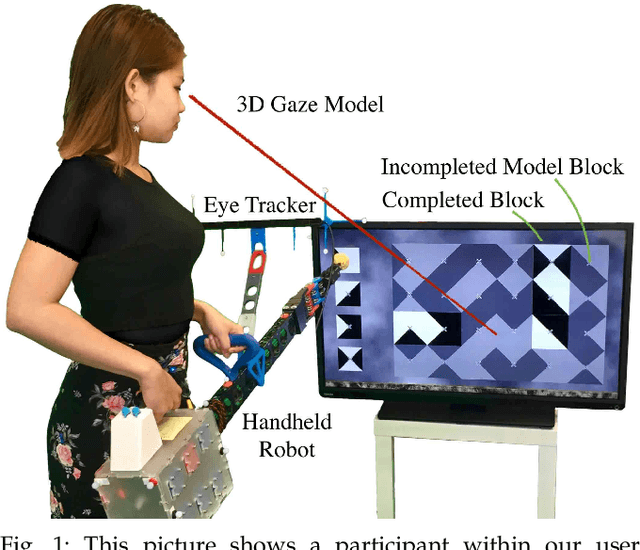

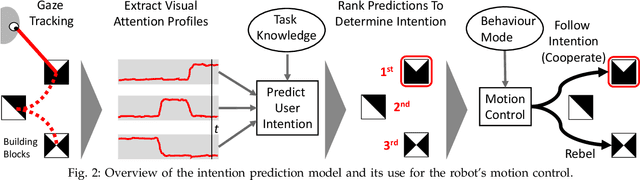

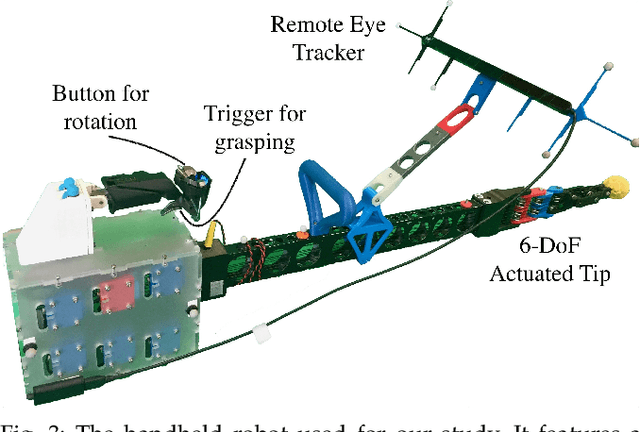

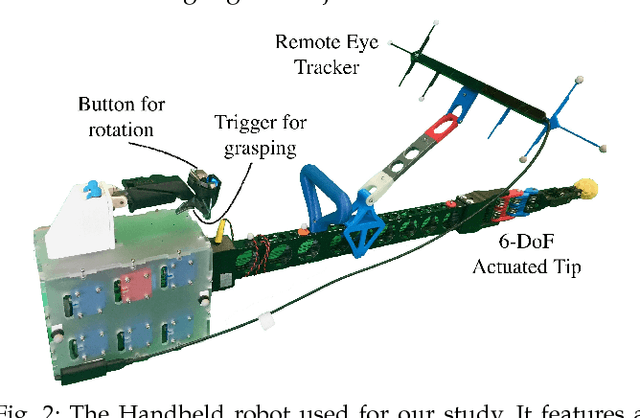

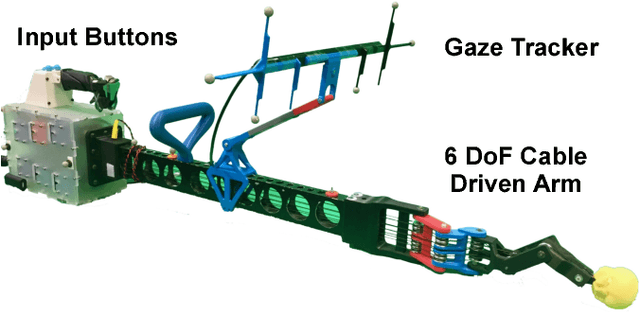

Rebellion and Obedience: The Effects of Intention Prediction in Cooperative Handheld Robots

Mar 19, 2019

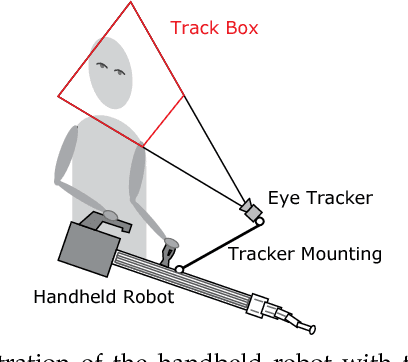

Abstract: Within this work, we explore intention inference for user actions in the context of a handheld robot setup. Handheld robots share the shape and properties of handheld tools while being able to process task information and aid manipulation. Here, we propose an intention prediction model to enhance cooperative task solving. The model derives intention from the user's gaze pattern, which is captured using a robot-mounted remote eye tracker. The proposed model yields real-time capabilities and reliable accuracy up to 1.5 s prior to predicted actions being executed. We assess the model in an assisted pick-and-place task and show how the robot's intention obedience or rebellion affects the cooperation with the robot.

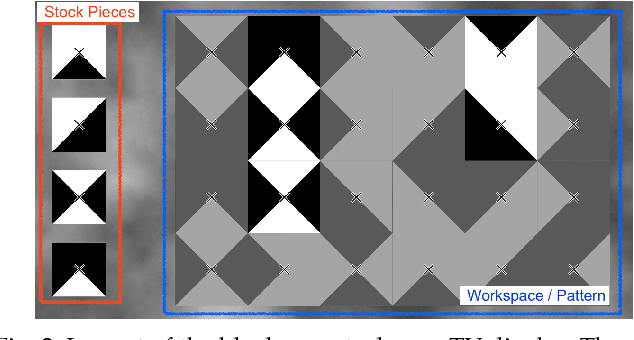

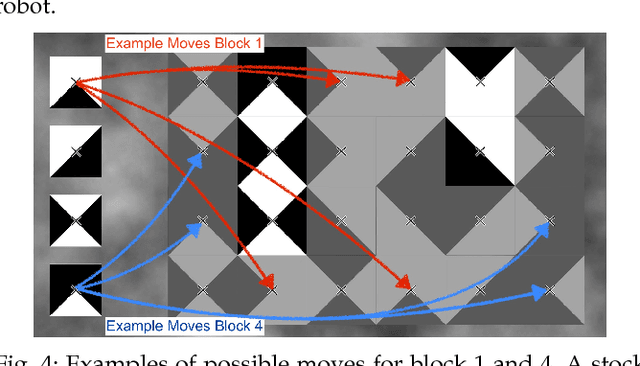

Towards Intention Prediction for Handheld Robots: a Case of Simulated Block Copying

Oct 15, 2018

Abstract: Within this work, we explore intention inference for user actions in the context of a handheld robot setup. Handheld robots share the shape and properties of handheld tools while being able to process task information and aid manipulation. Here, we propose an intention prediction model to enhance cooperative task solving. Within a block copy task, we collect eye gaze data using a robot-mounted remote eye tracker and use it to create profiles of visual attention for task-relevant objects in the workspace scene. These profiles are used to make predictions about user actions, i.e., which block will be picked up next and where it will be placed. Our results show that our proposed model can predict user actions well in advance, with an accuracy of 87.94% (500 ms prior) for picking and 93.25% (1500 ms prior) for placing actions.

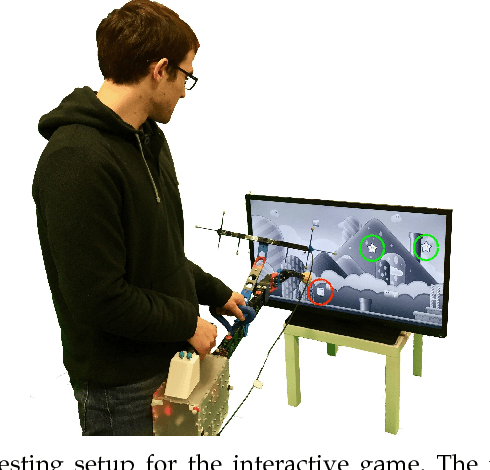

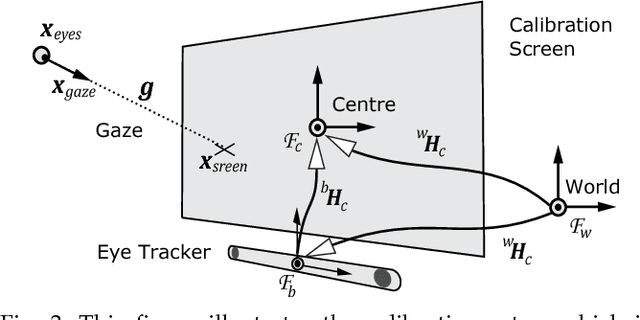

I Can See Your Aim: Estimating User Attention From Gaze For Handheld Robot Collaboration

Oct 15, 2018

Abstract: This paper explores the estimation of user attention in the setting of a cooperative handheld robot: a robot designed to behave as a handheld tool but that has levels of task knowledge. We use a tool-mounted gaze tracking system which, after modelling via a pilot study, serves as a proxy for estimating the attention of the user. This information is then used for cooperation with users in a task of selecting and engaging with objects on a dynamic screen. Via a video game setup, we test various degrees of robot autonomy, from fully autonomous, where the robot knows what it has to do and acts, to no autonomy, where the user is in full control of the task. Our results measure performance and subjective metrics and show how the attention model benefits the interaction and preference of users.
