Jan Wietrzykowski

On the descriptive power of LiDAR intensity images for segment-based loop closing in 3-D SLAM

Aug 03, 2021

Abstract: We propose an extension to the segment-based global localization method for LiDAR SLAM using descriptors learned considering the visual context of the segments. A new architecture of the deep neural network is presented that learns the visual context acquired from synthetic LiDAR intensity images. This approach allows a single multi-beam LiDAR to produce rich and highly descriptive location signatures. The method is tested on two public datasets, demonstrating an improved descriptiveness of the new descriptors, and more reliable loop closure detection in SLAM. Attention analysis of the network is used to show the importance of focusing on the broader context rather than only on the 3-D segment.

Low-effort place recognition with WiFi fingerprints using deep learning

Apr 28, 2017

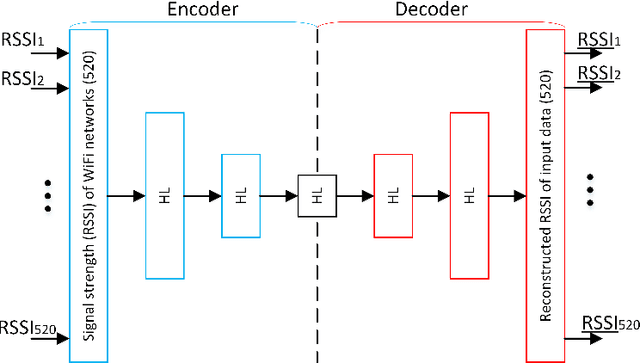

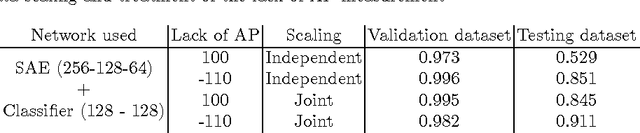

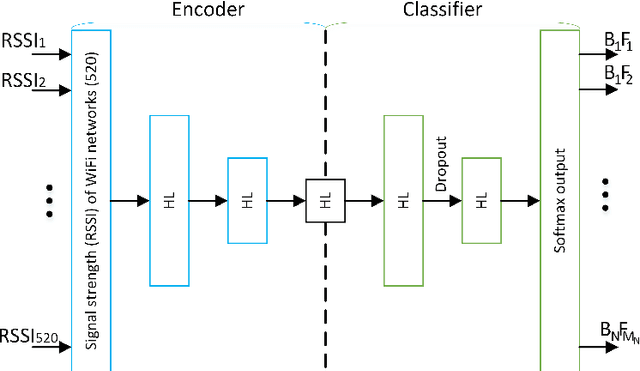

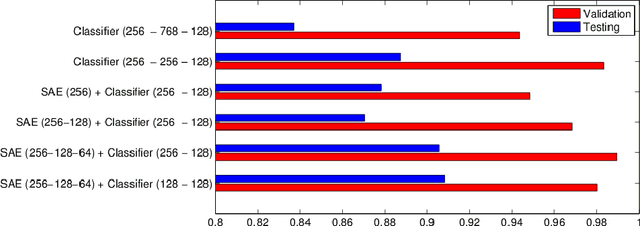

Abstract: Using WiFi signals for indoor localization is the main localization modality of the existing personal indoor localization systems operating on mobile devices. WiFi fingerprinting is also used for mobile robots, as WiFi signals are usually available indoors and can provide a rough initial position estimate or can be used together with other positioning systems. Currently, the best solutions rely on filtering, manual data analysis, and time-consuming parameter tuning to achieve reliable and accurate localization. In this work, we propose to use deep neural networks to significantly lower the work-force burden of the localization system design, while still achieving satisfactory results. Assuming the state-of-the-art hierarchical approach, we employ the DNN system for building/floor classification. We show that stacked autoencoders make it possible to efficiently reduce the feature space in order to achieve robust and precise classification. The proposed architecture is verified on the publicly available UJIIndoorLoc dataset and the results are compared with other solutions.

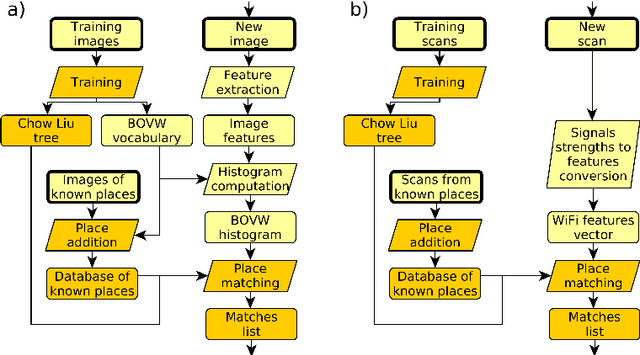

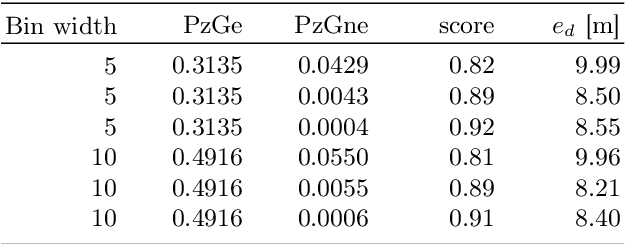

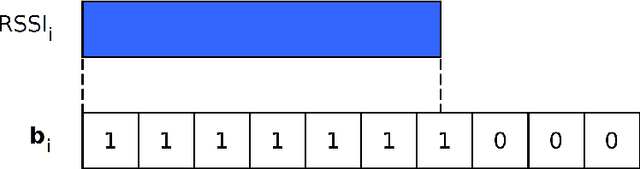

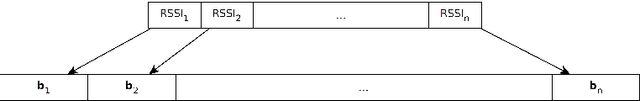

Adopting the FAB-MAP algorithm for indoor localization with WiFi fingerprints

Apr 28, 2017

Abstract: Personal indoor localization is usually accomplished by fusing information from various sensors. A common choice is to use the WiFi adapter that provides information about Access Points that can be found in the vicinity. Unfortunately, state-of-the-art approaches to WiFi-based localization often employ very dense maps of the WiFi signal distribution, and require a time-consuming process of parameter selection. On the other hand, camera images are commonly used for visual place recognition, detecting whenever the user observes a scene similar to the one already recorded in a database. Visual place recognition algorithms can work with sparse databases of recorded scenes and are in general simple to parametrize. Therefore, we propose a WiFi-based global localization method employing the structure of the well-known FAB-MAP visual place recognition algorithm. Similarly to FAB-MAP, our method uses Chow-Liu trees to estimate a joint probability distribution of re-observation of a place given a set of features extracted at places visited so far. However, we are the first to apply this idea to recorded WiFi scans instead of visual words. The new method is evaluated on the UJIIndoorLoc dataset used in the EvAAL competition, allowing fair comparison with other solutions.
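
As an illustration of the Chow-Liu tree idea mentioned in the abstract above, the following is a minimal sketch and not the authors' implementation. It assumes each WiFi scan is encoded as a binary vector (1 if a given Access Point was detected, 0 otherwise); that encoding, the toy data, and the function names `mutual_information` and `chow_liu_tree` are hypothetical choices made for this example. The tree is built as a maximum spanning tree over pairwise mutual information, which is the standard Chow-Liu construction.

```python
import numpy as np


def mutual_information(x, y):
    """Empirical mutual information (in nats) between two binary vectors."""
    mi = 0.0
    for a in (0, 1):
        for b in (0, 1):
            p_ab = np.mean((x == a) & (y == b))
            p_a = np.mean(x == a)
            p_b = np.mean(y == b)
            if p_ab > 0:  # empty cells contribute nothing to the sum
                mi += p_ab * np.log(p_ab / (p_a * p_b))
    return mi


def chow_liu_tree(scans):
    """Return (parent, child) edges of a maximum-MI spanning tree rooted at feature 0.

    scans: array of shape (n_scans, n_features) with 0/1 entries
           (assumed encoding: 1 = Access Point detected in that scan).
    """
    n_features = scans.shape[1]
    mi = np.zeros((n_features, n_features))
    for i in range(n_features):
        for j in range(i + 1, n_features):
            mi[i, j] = mi[j, i] = mutual_information(scans[:, i], scans[:, j])

    # Prim's algorithm, always picking the highest-MI edge that leaves the tree.
    in_tree = [0]
    edges = []
    while len(in_tree) < n_features:
        best = None
        for u in in_tree:
            for v in range(n_features):
                if v not in in_tree and (best is None or mi[u, v] > best[0]):
                    best = (mi[u, v], u, v)
        _, u, v = best
        edges.append((u, v))
        in_tree.append(v)
    return edges


# Toy data (hypothetical): APs 0 and 1 always co-occur, as do APs 2 and 3.
scans = np.array([
    [1, 1, 1, 1],
    [1, 1, 0, 0],
    [1, 1, 1, 1],
    [1, 1, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
])
edges = chow_liu_tree(scans)
```

In this toy example the strongly dependent Access Point pairs end up connected by tree edges. The resulting tree lets the joint distribution over all features be approximated as a product of pairwise conditionals along its edges, which is how FAB-MAP uses it for visual words.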

Real-Time Visual Place Recognition for Personal Localization on a Mobile Device

Apr 27, 2017

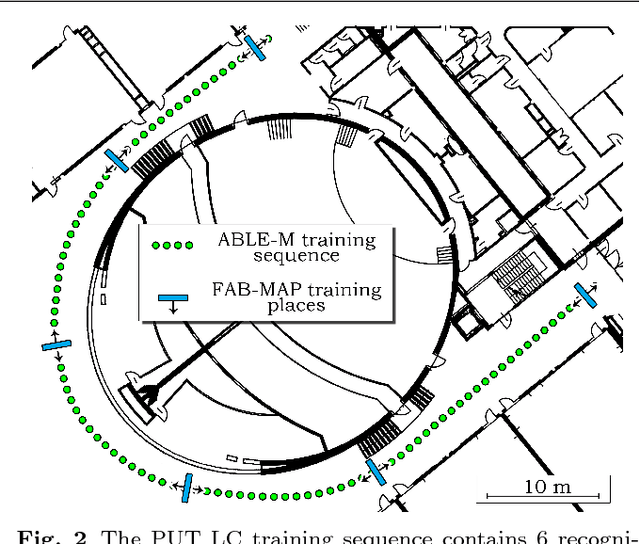

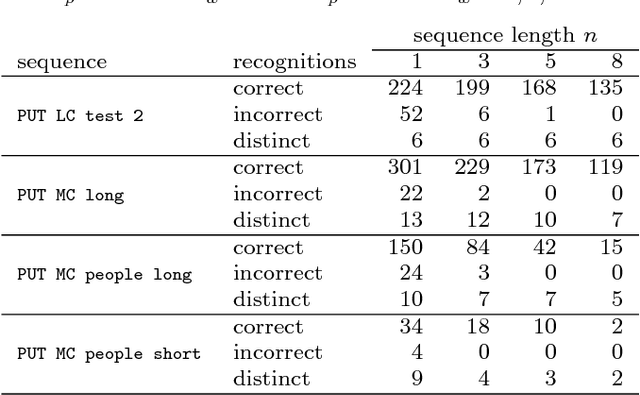

Abstract: The paper presents an approach to indoor personal localization on a mobile device based on visual place recognition. We implemented two state-of-the-art algorithms on a smartphone that are representative of two different approaches to visual place recognition: FAB-MAP, which recognizes places using individual images, and ABLE-M, which utilizes sequences of images. These algorithms are evaluated in environments of different structure, focusing on problems commonly encountered when a mobile device camera is used. The conclusions drawn from this evaluation serve as guidelines for designing the FastABLE system, which is based on the ABLE-M algorithm, but introduces major modifications to the concept of image matching. The improvements radically cut down the processing time and improve scalability, making it possible to localize the user in long image sequences with the limited computing power of a mobile device. The resulting place recognition system compares favorably to both the ABLE-M and the FAB-MAP solutions in the context of real-time personal localization.
