James Marien

Audio-guided Album Cover Art Generation with Genetic Algorithms

Jul 14, 2022

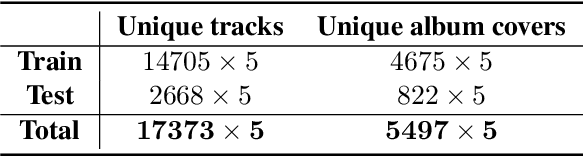

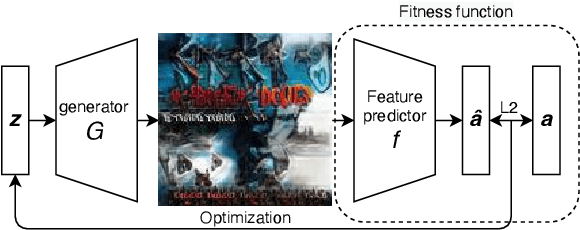

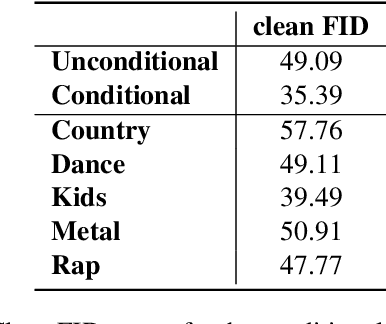

Abstract: Over 60,000 songs are released on Spotify every day, and the competition for the listener's attention is immense. The importance of captivating and inviting cover art therefore cannot be overstated: it is deeply entangled with a song's character and the artist's identity, and it remains one of the main gateways through which people discover music. However, designing cover art is a highly creative, lengthy and sometimes expensive process that can be daunting, especially for non-professional artists. For this reason, we propose a novel deep-learning framework that generates cover art guided by audio features. Inspired by VQGAN-CLIP, our approach is highly flexible because individual components can be replaced without any retraining. This paper outlines the architectural details of our models and discusses the optimization challenges that emerge from them. More specifically, we exploit genetic algorithms to overcome bad local minima and adversarial examples. We find that our framework can generate suitable cover art for most genres, and that the visual features adapt to changes in the audio features. Given these results, we believe that our framework paves the way for extensions and more advanced applications in audio-guided visual generation tasks.
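The abstract does not spell out how the genetic algorithm is set up. The following is only a minimal sketch of the general idea, assuming the generator's latent vector can be treated as a flat list of floats and that some score is available to maximise (in the paper's setting, presumably a measure of agreement between the generated image and the audio features). `genetic_search` and `toy_fitness` are hypothetical names. The toy fitness is a stand-in for the real model, and elitism, uniform crossover and Gaussian mutation are generic choices, not confirmed details of the authors' method.

```python
import random
from typing import Callable, List

Vector = List[float]


def genetic_search(
    fitness: Callable[[Vector], float],
    dim: int,
    pop_size: int = 32,
    generations: int = 100,
    elite: int = 4,
    mutation_std: float = 0.1,
    seed: int = 0,
) -> Vector:
    """Maximise `fitness` over real-valued latent vectors with a simple GA (hypothetical sketch)."""
    if elite < 2 or elite > pop_size:
        raise ValueError("elite must be between 2 and pop_size")
    rng = random.Random(seed)
    # Random initial population of latent vectors drawn from N(0, 1).
    population = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(generations):
        # Rank candidates by fitness, best first.
        population.sort(key=fitness, reverse=True)
        parents = population[:elite]
        # Elitism: the best candidates survive unchanged, so the best score never decreases.
        children = [p[:] for p in parents]
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Uniform crossover, then Gaussian mutation. Random jumps like these let the
            # search escape regions where a purely gradient-based optimiser could get stuck.
            child = [
                (x if rng.random() < 0.5 else y) + rng.gauss(0.0, mutation_std)
                for x, y in zip(a, b)
            ]
            children.append(child)
        population = children
    return max(population, key=fitness)


# Toy usage: a hypothetical fitness (negative squared distance to a fixed target)
# standing in for an image/audio similarity score.
target = [0.5, -1.0, 2.0]


def toy_fitness(z: Vector) -> float:
    return -sum((zi - ti) ** 2 for zi, ti in zip(z, target))


best = genetic_search(toy_fitness, dim=3)
print(best, toy_fitness(best))
```

Because the search only needs fitness values and no gradients, a scheme like this can wrap a frozen generator and scorer. That is in line with the abstract's point that components can be swapped without retraining.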
