James K. Howes IV

Covert Message Passing over Public Internet Platforms Using Model-Based Format-Transforming Encryption

Oct 13, 2021

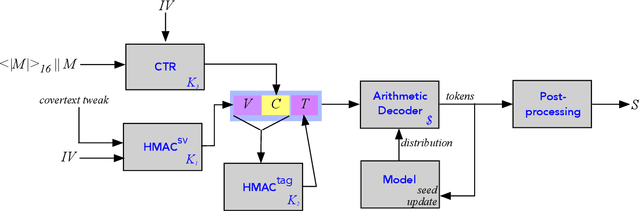

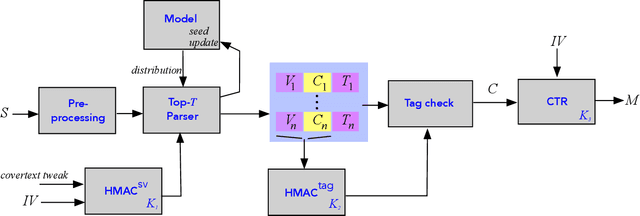

Abstract: We introduce a new type of format-transforming encryption in which the format of ciphertexts is implicitly encoded within a machine-learned generative model. Around this primitive, we build a system for covert messaging over large, public internet platforms (e.g., Twitter). Loosely, our system composes an authenticated encryption scheme with a method for encoding random ciphertext bits into samples from the generative model's family of seed-indexed token-distributions. By fixing a deployment scenario, we are forced to consider system-level and algorithmic solutions to real challenges (such as receiver-side parsing ambiguities and the low information-carrying capacity of actual token-distributions) that were elided in prior work. We use GPT-2 as our generative model so that our system cryptographically transforms plaintext bitstrings into natural-language covertexts suitable for posting to public platforms. We consider adversaries with a full view of the internet platform's content, whose goal is to surface posts that use our system for covert messaging. We carry out a suite of experiments to provide heuristic evidence of security and to explore tradeoffs between operational efficiency and detectability.
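To make the idea of encoding ciphertext bits into model samples concrete, here is a minimal sketch. It is not the paper's encoder: it swaps in a much simpler fixed-width "bucket" technique, and it replaces GPT-2 with a hypothetical toy model (`ranked_tokens`) that deterministically ranks a tiny vocabulary from a shared seed and the tokens emitted so far. The names `VOCAB`, `BITS_PER_TOKEN`, `ranked_tokens`, `encode`, and `decode` are all assumptions made for illustration, and the input bitstring stands in for the output of an authenticated encryption scheme.

```python
import hashlib

# Hypothetical toy vocabulary; a real system would use the model's tokenizer.
VOCAB = ["the", "a", "cat", "dog", "sat", "ran", "on", "under",
         "mat", "tree", "and", "then", "quietly", "fast", "red", "old"]
BITS_PER_TOKEN = 2  # assumed fixed capacity: each token carries 2 bits


def ranked_tokens(seed, history):
    """Toy stand-in for a seed-indexed token distribution.

    Returns VOCAB in a deterministic order that depends on the shared
    seed and on the tokens emitted so far. A real model would instead
    rank tokens by predicted probability.
    """
    context = seed + "|" + " ".join(history)
    return sorted(
        VOCAB,
        key=lambda tok: hashlib.sha256((context + "|" + tok).encode()).digest(),
    )


def encode(bits, seed):
    """Map a bitstring (standing in for ciphertext bits) to a token list."""
    if len(bits) % BITS_PER_TOKEN != 0:
        raise ValueError("bit length must be a multiple of BITS_PER_TOKEN")
    tokens = []
    for i in range(0, len(bits), BITS_PER_TOKEN):
        index = int(bits[i:i + BITS_PER_TOKEN], 2)
        # Only the top 2**BITS_PER_TOKEN candidates are eligible.
        candidates = ranked_tokens(seed, tokens)[: 2 ** BITS_PER_TOKEN]
        tokens.append(candidates[index])
    return tokens


def decode(tokens, seed):
    """Recover the bitstring by replaying the same rankings."""
    bits = []
    for pos, tok in enumerate(tokens):
        candidates = ranked_tokens(seed, tokens[:pos])[: 2 ** BITS_PER_TOKEN]
        bits.append(format(candidates.index(tok), "0{}b".format(BITS_PER_TOKEN)))
    return "".join(bits)


if __name__ == "__main__":
    ciphertext_bits = "0110110001"
    covertext = encode(ciphertext_bits, seed="shared-seed")
    print(" ".join(covertext))
    print(decode(covertext, seed="shared-seed") == ciphertext_bits)
```

In this sketch the receiver recovers the bits only because the token boundaries are handed over as a list and every step carries exactly `BITS_PER_TOKEN` bits. The receiver-side parsing ambiguities and low-capacity token distributions that the abstract mentions are exactly the cases this simplification ignores.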
