James G. Lopez

Adaptive Stress Testing of Trajectory Predictions in Flight Management Systems

Nov 04, 2020

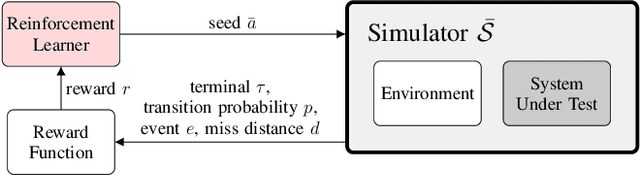

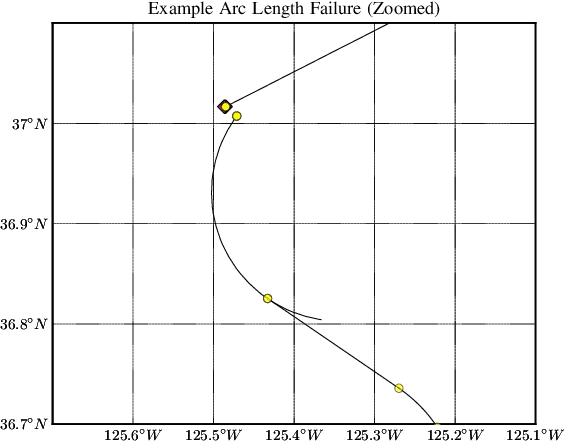

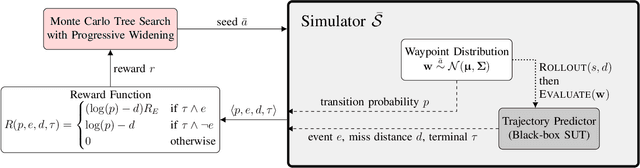

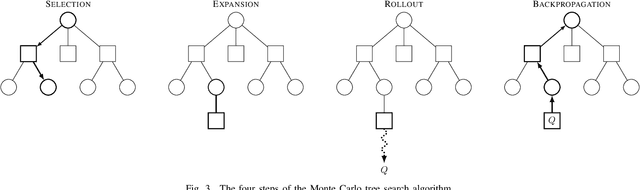

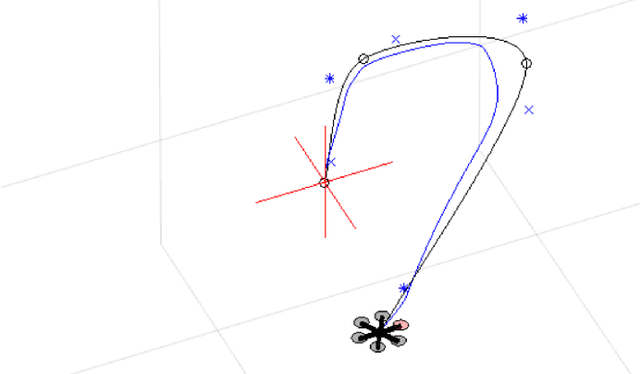

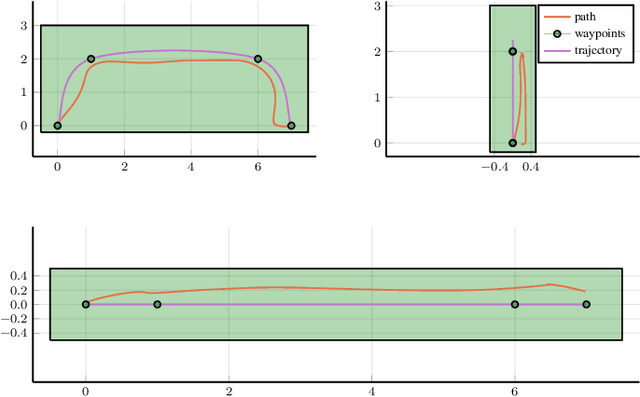

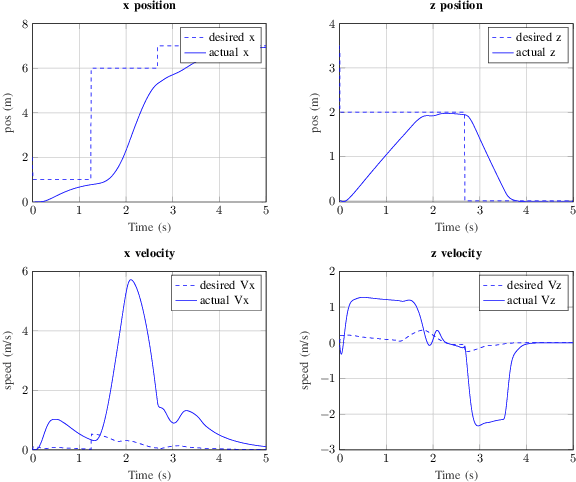

Abstract:To find failure events and their likelihoods in flight-critical systems, we investigate the use of an advanced black-box stress testing approach called adaptive stress testing. We analyze a trajectory predictor from a developmental commercial flight management system which takes as input a collection of lateral waypoints and en-route environmental conditions. Our aim is to search for failure events relating to inconsistencies in the predicted lateral trajectories. The intention of this work is to find likely failures and report them back to the developers so they can address and potentially resolve shortcomings of the system before deployment. To improve search performance, this work extends the adaptive stress testing formulation to be applied more generally to sequential decision-making problems with episodic reward by collecting the state transitions during the search and evaluating at the end of the simulated rollout. We use a modified Monte Carlo tree search algorithm with progressive widening as our adversarial reinforcement learner. The performance is compared to direct Monte Carlo simulations and to the cross-entropy method as an alternative importance sampling baseline. The goal is to find potential problems otherwise not found by traditional requirements-based testing. Results indicate that our adaptive stress testing approach finds more failures and finds failures with higher likelihood relative to the baseline approaches.

Runtime Safety Assurance Using Reinforcement Learning

Oct 20, 2020

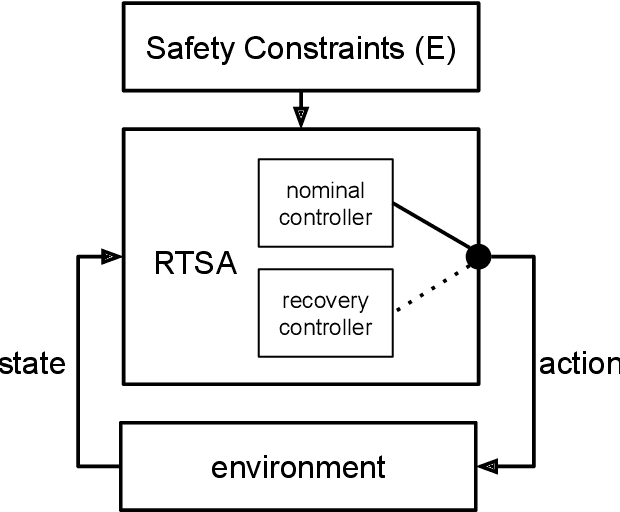

Abstract:The airworthiness and safety of a non-pedigreed autopilot must be verified, but the cost to formally do so can be prohibitive. We can bypass formal verification of non-pedigreed components by incorporating Runtime Safety Assurance (RTSA) as mechanism to ensure safety. RTSA consists of a meta-controller that observes the inputs and outputs of a non-pedigreed component and verifies formally specified behavior as the system operates. When the system is triggered, a verified recovery controller is deployed. Recovery controllers are designed to be safe but very likely disruptive to the operational objective of the system, and thus RTSA systems must balance safety and efficiency. The objective of this paper is to design a meta-controller capable of identifying unsafe situations with high accuracy. High dimensional and non-linear dynamics in which modern controllers are deployed along with the black-box nature of the nominal controllers make this a difficult problem. Current approaches rely heavily on domain expertise and human engineering. We frame the design of RTSA with the Markov decision process (MDP) framework and use reinforcement learning (RL) to solve it. Our learned meta-controller consistently exhibits superior performance in our experiments compared to our baseline, human engineered approach.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge