James Brotchie

RIOT: Recursive Inertial Odometry Transformer for Localisation from Low-Cost IMU Measurements

Mar 03, 2023

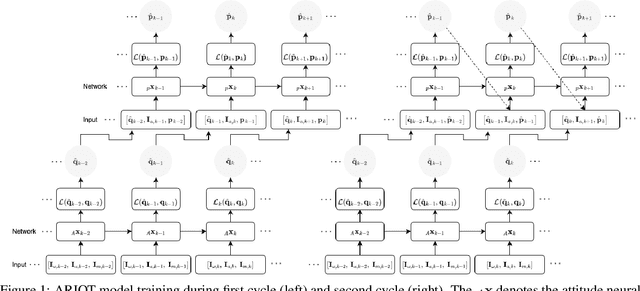

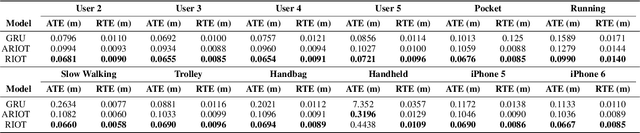

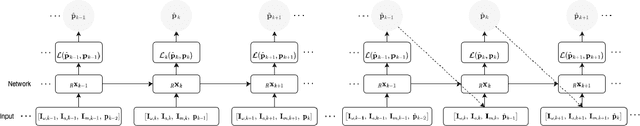

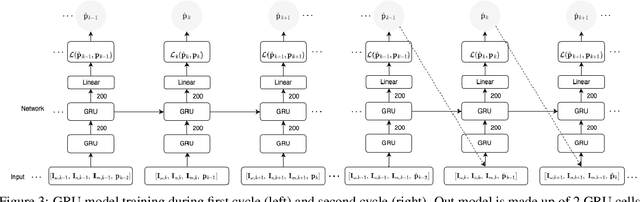

Abstract: Inertial localisation is an important technique as it enables ego-motion estimation in conditions where external observers are unavailable. However, low-cost inertial sensors are inherently corrupted by bias and noise, which lead to unbounded errors and make direct integration for position intractable. Traditional mathematical approaches rely on prior system knowledge and geometric theories, and are constrained by predefined dynamics. Recent advances in deep learning, which benefit from ever-increasing volumes of data and computational power, allow for data-driven solutions that offer a more comprehensive understanding. Existing deep inertial odometry solutions rely on estimating latent states, such as velocity, or are dependent on fixed sensor positions and periodic motion patterns. In this work we propose taking the traditional recursive state estimation methodology and applying it in the deep learning domain. Our approach, which incorporates true position priors in the training process, is trained on inertial measurements and ground-truth displacement data, allowing it to operate recursively and to learn both motion characteristics and systemic error bias and drift. We present two end-to-end frameworks for pose-invariant deep inertial odometry that utilise self-attention to capture both spatial features and long-range dependencies in inertial data. We evaluate our approaches against a custom 2-layer Gated Recurrent Unit, trained in the same manner on the same data, and test each approach on a number of different users, devices and activities. Each network had a sequence-length-weighted relative trajectory error mean of $\leq 0.4594$ m, highlighting the effectiveness of the learning process used in the development of the models.
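To illustrate why direct integration of low-cost IMU data drifts without bound, the following is a minimal sketch rather than anything taken from the paper. It double-integrates a simulated one-dimensional accelerometer signal from a stationary sensor. The sample rate, bias and noise values are hypothetical, chosen only for illustration, and `integrate_position` is an illustrative helper name.

```python
import numpy as np

def integrate_position(accel, dt):
    """Double-integrate 1-D acceleration (m/s^2) into position (m) using cumulative sums."""
    velocity = np.cumsum(accel) * dt
    return np.cumsum(velocity) * dt

dt = 0.01       # assumed 100 Hz sample rate
n = 6000        # 60 s of samples
bias = 0.05     # hypothetical constant accelerometer bias, m/s^2
noise_std = 0.1 # hypothetical white-noise standard deviation, m/s^2

rng = np.random.default_rng(0)
# The sensor is stationary, so the true acceleration is zero everywhere.
measured = bias + rng.normal(0.0, noise_std, size=n)

drift = integrate_position(measured, dt)                 # bias + noise
bias_only = integrate_position(np.full(n, bias), dt)     # bias alone
analytic = 0.5 * bias * (n * dt) ** 2                    # closed form: b * t^2 / 2

print(f"position error after {n * dt:.0f} s: {drift[-1]:.1f} m")
print(f"bias-only error: {bias_only[-1]:.1f} m (analytic {analytic:.1f} m)")
```

Even though the sensor never moves, the position estimate grows quadratically with time because a constant bias $b$ integrates to $\tfrac{1}{2} b t^2$. This is the unbounded error that motivates learning displacement directly from the inertial measurements.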
