Jalal Al-afandi

Saliency Map Based Data Augmentation

May 29, 2022

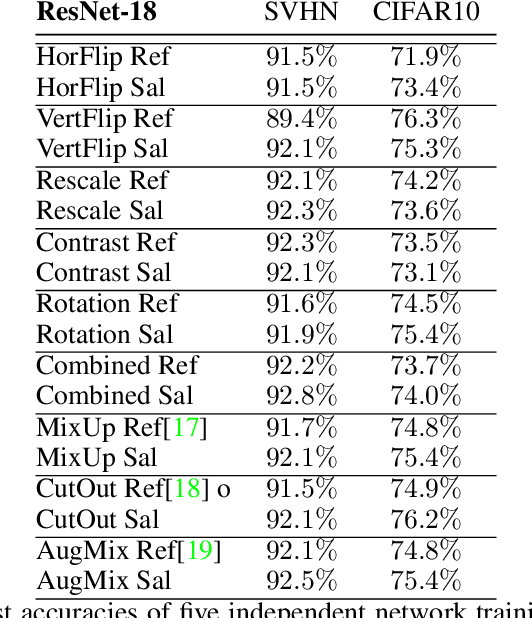

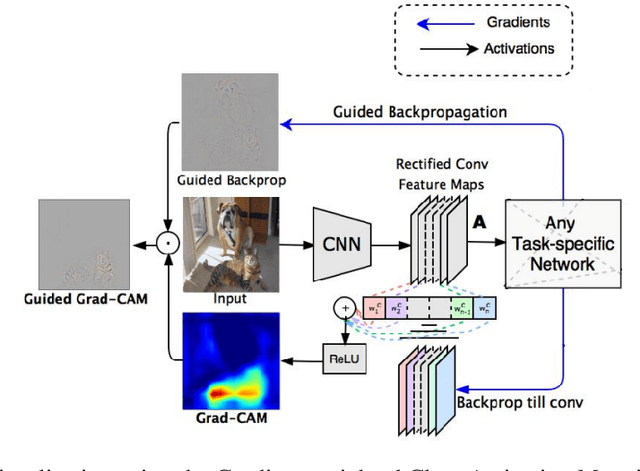

Abstract: Data augmentation is a commonly applied technique with two seemingly related advantages. It enlarges the training set by generating new samples, and it increases the invariance of the network to the applied transformations. Unfortunately, all images contain both relevant and irrelevant features for classification, so this invariance has to be class specific. In this paper we present a new method that uses saliency maps to restrict the invariance of neural networks to certain regions, providing higher test accuracy in classification tasks.
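
The abstract does not spell out the algorithm, so the following is only a minimal sketch of one plausible reading: a transformation is applied to the low-saliency pixels of an image while the most salient pixels are left untouched. The function name `saliency_masked_augment`, the `quantile` cutoff, and the assumption that a saliency map is already available as an array are all hypothetical and are not taken from the paper.

```python
import numpy as np


def saliency_masked_augment(image, saliency, augment, quantile=0.8):
    """Apply `augment` only outside the most salient region (illustrative sketch).

    image:    array of shape (H, W) or (H, W, C)
    saliency: array of shape (H, W), higher values mean more relevant pixels
    augment:  callable mapping an image to a transformed image of the same shape
    quantile: hypothetical cutoff; pixels with saliency above it are preserved
    """
    if image.shape[:2] != saliency.shape:
        raise ValueError("saliency map must match the image's spatial size")

    # Pixels strictly above the cutoff are treated as class-relevant.
    cutoff = np.quantile(saliency, quantile)
    salient = saliency > cutoff
    if image.ndim == 3:
        salient = salient[..., None]  # broadcast the mask over channels

    # Keep salient pixels from the original, take the rest from the augmented copy.
    return np.where(salient, image, augment(image))


if __name__ == "__main__":
    img = np.arange(16, dtype=float).reshape(4, 4)
    sal = np.zeros((4, 4))
    sal[0, 0] = 1.0  # pretend only the top-left pixel matters
    out = saliency_masked_augment(img, sal, lambda im: im + 100.0)
    print(out)
```

Whether the transformation should target the salient or the non-salient region, and how the saliency map is computed, depends on the paper's actual method; the mask can be inverted by swapping the two image arguments of `np.where`.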

Filtered Batch Normalization

Oct 16, 2020

Abstract: It is a common assumption that the activations of different layers in neural networks follow a Gaussian distribution. This distribution can be transformed using normalization techniques, such as batch normalization, increasing convergence speed and improving accuracy. In this paper we demonstrate that activations do not necessarily follow a Gaussian distribution in all layers. Neurons in deeper layers are more selective and specific, which can result in extremely large, out-of-distribution activations. We demonstrate that filtering out these activations yields more consistent mean and variance values for batch normalization during training, which can further improve convergence speed and yield higher validation accuracy.
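
As a rough illustration of the idea of filtering outlier activations before computing batch statistics, here is a minimal NumPy sketch. The filtering rule used (dropping activations more than `threshold` standard deviations from the per-feature batch mean) and the names `filtered_batch_norm` and `threshold` are assumptions made for this example; the abstract does not state the paper's actual criterion.

```python
import numpy as np


def filtered_batch_norm(x, threshold=3.0, eps=1e-5):
    """Normalize x (batch, features) with statistics computed on inliers only.

    threshold: hypothetical cutoff in standard deviations; activations farther
               than this from the plain batch mean are excluded from the
               mean/variance estimate, but are still normalized.
    """
    mean = x.mean(axis=0)
    std = x.std(axis=0)

    # Boolean mask of activations that count toward the statistics.
    keep = np.abs(x - mean) <= threshold * (std + eps)
    count = np.maximum(keep.sum(axis=0), 1)  # avoid division by zero

    # Mean and variance over the kept activations only.
    f_mean = (x * keep).sum(axis=0) / count
    f_var = (((x - f_mean) ** 2) * keep).sum(axis=0) / count

    return (x - f_mean) / np.sqrt(f_var + eps), f_mean, f_var


if __name__ == "__main__":
    x = np.zeros((64, 1))
    x[:63, 0] = np.linspace(-1.0, 1.0, 63)
    x[63, 0] = 1000.0  # one extreme, out-of-distribution activation
    _, f_mean, f_var = filtered_batch_norm(x)
    print("plain mean:", x.mean(axis=0), "filtered mean:", f_mean)
    print("plain var:", x.var(axis=0), "filtered var:", f_var)
```

In this toy batch the single extreme value dominates the plain mean and variance, while the filtered statistics stay close to those of the remaining 63 activations. Learnable scale and shift parameters and running statistics for inference are omitted for brevity.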
