Jakub Wiśniewski

Prevention is better than cure: a case study of the abnormalities detection in the chest

May 18, 2023

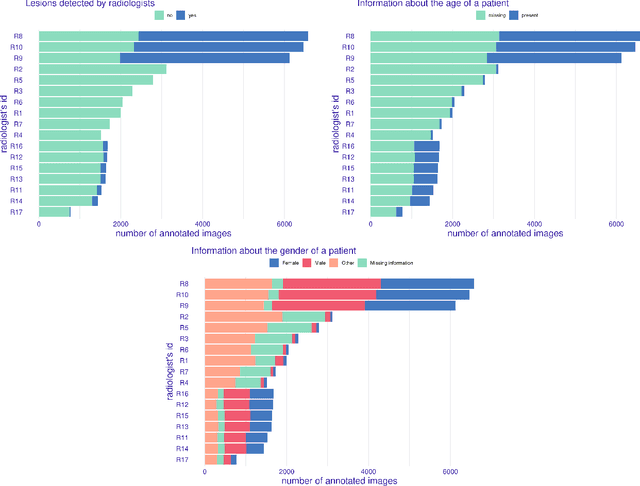

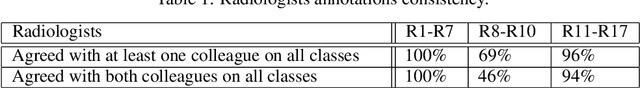

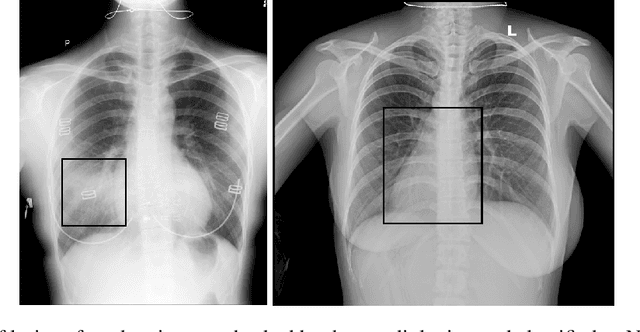

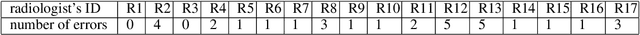

Abstract: Prevention is better than cure. This old truth applies not only to the prevention of diseases but also to the prevention of issues with AI models used in medicine. The source of malfunctioning predictive models often lies not in the training process but reaches back to the data acquisition or experiment design phase. In this paper, we analyze in detail a single use case: a Kaggle competition on the detection of abnormalities in X-ray lung images. We demonstrate how a series of simple tests for data imbalance exposes faults in the data acquisition and annotation process. Complex models are able to learn such artifacts, and it is difficult to remove this bias during or after training. Errors made at the data collection stage also make it difficult to validate the model correctly. Based on this use case, we show how to monitor data and model balance (fairness) throughout the life cycle of a predictive model, from data acquisition to parity analysis of model scores.

fairmodels: A Flexible Tool For Bias Detection, Visualization, And Mitigation

Apr 01, 2021

Abstract: Machine learning decision systems are becoming omnipresent in our lives. From dating apps to rating loan seekers, algorithms affect both our well-being and our future. These systems, however, are typically not infallible. Moreover, complex predictive models readily learn social biases present in historical data, which can lead to increased discrimination. If we want to create models responsibly, we need tools for in-depth validation of models, including from the perspective of potential discrimination. This article introduces the R package fairmodels, which helps to validate fairness and eliminate bias in classification models in an easy and flexible fashion. The fairmodels package offers a model-agnostic approach to bias detection, visualization, and mitigation. The implemented set of functions and fairness metrics enables model fairness validation from different perspectives. The package includes a series of methods for bias mitigation that aim to diminish discrimination in the model. The package is designed not only to examine a single model but also to facilitate comparisons between multiple models.
