Jakob Wilm

Technical University of Denmark, Visual Computing, Denmark, University of Southern Denmark, Maersk Mc-Kinney Moller Institute, Denmark

Image-Based Alignment of 3D Scans

Sep 14, 2021

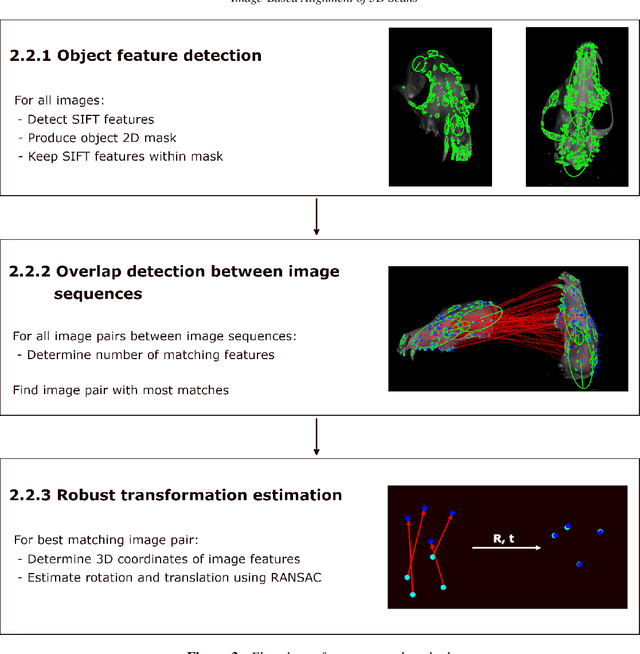

Abstract: Full 3D scanning can efficiently be obtained using structured light scanning combined with a rotation stage. In this setting it is, however, necessary to reposition the object and scan it in different poses in order to cover the entire object. In this case, correspondence between the scans is lost, since the object was moved. In this paper, we propose a fully automatic method for aligning the scans of an object in two different poses. This is done by matching 2D features between images from two poses and utilizing correspondence between the images and the scanned point clouds. To demonstrate the approach, we present the results of scanning three dissimilar objects.
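The abstract does not say how the rigid transform between the two poses is estimated once matches are available. As a minimal sketch, assume each matched 2D feature has already been lifted to a 3D point through the image-to-point-cloud correspondence, giving two arrays of paired 3D points. A common choice for this step (an assumption here, not necessarily the paper's method) is the SVD-based least-squares fit often called the Kabsch algorithm. The function name `rigid_transform_from_correspondences` is hypothetical.

```python
import numpy as np


def rigid_transform_from_correspondences(src, dst):
    """Least-squares rotation R and translation t such that dst ≈ src @ R.T + t.

    src, dst: (N, 3) arrays of paired 3D points (N >= 3, not collinear).
    Illustrative sketch only; assumes the correspondences are outlier-free.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)

    # Center both point sets on their centroids.
    src_centroid = src.mean(axis=0)
    dst_centroid = dst.mean(axis=0)

    # 3x3 cross-covariance between the centered sets.
    H = (src - src_centroid).T @ (dst - dst_centroid)

    # The SVD of H yields the optimal rotation; the sign correction
    # prevents the solution from being a reflection.
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T

    t = dst_centroid - R @ src_centroid
    return R, t
```

In practice, 2D feature matches usually contain outliers, so a fit like this would typically be wrapped in a robust scheme such as RANSAC before being applied to the full scans.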

Superaccurate Camera Calibration via Inverse Rendering

Mar 20, 2020

Abstract: The most prevalent routine for camera calibration is based on the detection of well-defined feature points on a purpose-made calibration artifact. These could be checkerboard saddle points, circles, rings or triangles, often printed on a planar structure. The feature points are first detected and then used in a nonlinear optimization to estimate the internal camera parameters. We propose a new method for camera calibration using the principle of inverse rendering. Instead of relying solely on detected feature points, we use an estimate of the internal parameters and the pose of the calibration object to implicitly render a non-photorealistic equivalent of the optical features. This enables us to compute pixel-wise differences in the image domain without interpolation artifacts. We can then improve our estimate of the internal parameters by minimizing pixel-wise least-squares differences. In this way, our model optimizes a meaningful metric in the image space assuming normally distributed noise characteristic for camera sensors. We demonstrate using synthetic and real camera images that our method improves the accuracy of estimated camera parameters as compared with current state-of-the-art calibration routines. Our method also estimates these parameters more robustly in the presence of noise and in situations where the number of calibration images is limited.
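The pixel-wise least-squares objective described above can be written out as a sketch. The notation is an assumption for illustration and does not come from the abstract: $\theta$ denotes the internal camera parameters, $P_i$ the pose of the calibration object in image $i$, $I_i(u)$ the observed intensity at pixel $u$, and $\hat{I}(u; \theta, P_i)$ the rendered intensity at that pixel.

$$
(\theta^{*}, \{P_i^{*}\}) = \arg\min_{\theta,\, \{P_i\}} \sum_{i} \sum_{u} \bigl( I_i(u) - \hat{I}(u; \theta, P_i) \bigr)^{2}
$$

If the pixel noise is independent and normally distributed with equal variance, minimizing this sum of squares is equivalent to maximum-likelihood estimation of $\theta$ and the poses, which is the sense in which the metric is meaningful in image space.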
