Jakob Andert

Hybrid Reinforcement Learning and Model Predictive Control for Adaptive Control of Hydrogen-Diesel Dual-Fuel Combustion

Apr 23, 2025

Abstract: Reinforcement Learning (RL) and Machine Learning Integrated Model Predictive Control (ML-MPC) are promising approaches for optimizing hydrogen-diesel dual-fuel engine control, as they can effectively control multiple-input multiple-output systems and nonlinear processes. ML-MPC is advantageous for providing safe and optimal controls, ensuring the engine operates within predefined safety limits. In contrast, RL is distinguished by its adaptability to changing conditions through its learning-based approach. However, the practical implementation of either method alone poses challenges. RL requires high variance in control inputs during early learning phases, which can pose risks to the system by potentially executing unsafe actions, leading to mechanical damage. Conversely, ML-MPC relies on an accurate system model to generate optimal control inputs and has limited adaptability to system drifts, such as injector aging, which naturally occur in engine applications. To address these limitations, this study proposes a hybrid RL and ML-MPC approach that uses an ML-MPC framework while incorporating an RL agent to dynamically adjust the ML-MPC load tracking reference in response to changes in the environment. At the same time, the ML-MPC ensures that actions stay safe throughout the RL agent's exploration. To evaluate the effectiveness of this approach, fuel pressure is deliberately varied to introduce a model-plant mismatch between the ML-MPC and the engine test bench. The result of this mismatch is a root mean square error (RMSE) in indicated mean effective pressure of 0.57 bar when running the ML-MPC. The experimental results demonstrate that RL successfully adapts to changing boundary conditions by altering the tracking reference while ML-MPC ensures safe control inputs. Implementing RL improves load tracking to an RMSE of 0.44 bar.
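To put the two reported tracking errors in proportion, the short Python sketch below computes an RMSE and the relative reduction between two RMSE values. It assumes that 0.57 bar is the RMSE of the ML-MPC alone under model-plant mismatch and that 0.44 bar is the RMSE once the RL agent adjusts the tracking reference. The function names are illustrative and do not come from the paper.

```python
import math


def rmse(reference, measured):
    """Root mean square error between two equally long sequences."""
    if len(reference) != len(measured) or len(reference) == 0:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [(r - m) ** 2 for r, m in zip(reference, measured)]
    return math.sqrt(sum(squared) / len(squared))


def relative_reduction(baseline, improved):
    """Fraction by which `improved` is lower than `baseline`."""
    return (baseline - improved) / baseline


# Values quoted in the abstract (bar, indicated mean effective pressure).
rmse_mpc_only = 0.57  # assumed: ML-MPC alone, with model-plant mismatch
rmse_hybrid = 0.44    # assumed: ML-MPC with RL-adjusted tracking reference

reduction = relative_reduction(rmse_mpc_only, rmse_hybrid)
print(f"Relative RMSE reduction: {reduction:.1%}")
```

Under these assumptions the reduction is a little under a quarter of the baseline error.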

Safe Reinforcement Learning for Real-World Engine Control

Jan 28, 2025

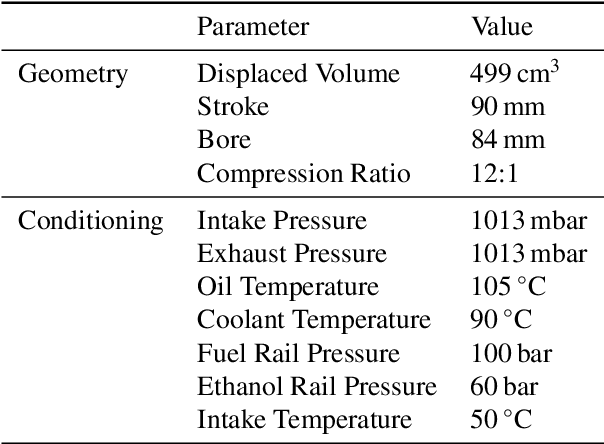

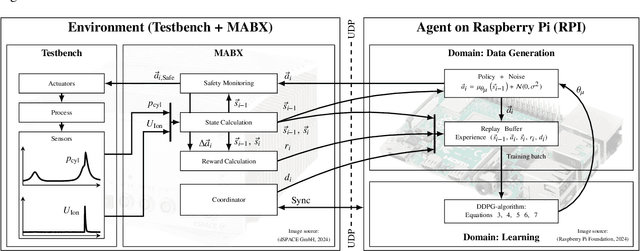

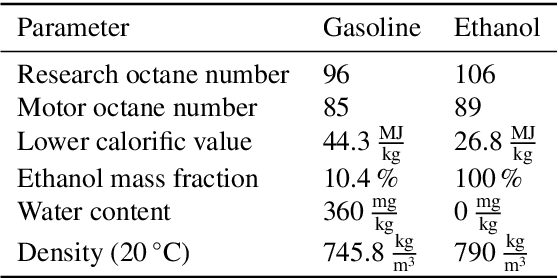

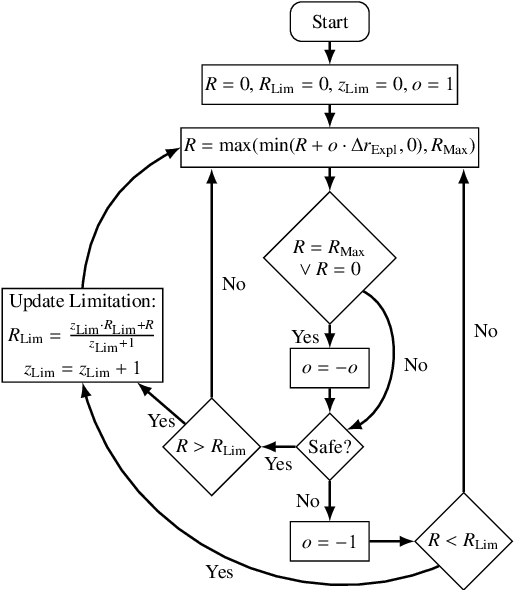

Abstract: This work introduces a toolchain for applying Reinforcement Learning (RL), specifically the Deep Deterministic Policy Gradient (DDPG) algorithm, in safety-critical real-world environments. As an exemplary application, transient load control is demonstrated on a single-cylinder internal combustion engine testbench in Homogeneous Charge Compression Ignition (HCCI) mode, which offers high thermal efficiency and low emissions. However, HCCI poses challenges for traditional control methods due to its nonlinear, autoregressive, and stochastic nature. RL provides a viable solution; however, safety concerns, such as excessive pressure rise rates, must be addressed when applying it to HCCI. A single unsuitable control input can severely damage the engine or cause misfiring and shutdown. Additionally, operating limits are not known a priori and must be determined experimentally. To mitigate these risks, real-time safety monitoring based on the k-nearest neighbor algorithm is implemented, enabling safe interaction with the testbench. The feasibility of this approach is demonstrated as the RL agent learns a control policy through interaction with the testbench. A root mean square error of 0.1374 bar is achieved for the indicated mean effective pressure, comparable to neural network-based controllers from the literature. The toolchain's flexibility is further demonstrated by adapting the agent's policy to increase ethanol energy shares, promoting renewable fuel use while maintaining safety. This RL approach addresses the longstanding challenge of applying RL to safety-critical real-world environments. The developed toolchain, with its adaptability and safety mechanisms, paves the way for future applicability of RL in engine testbenches and other safety-critical settings.
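The abstract does not say how the k-nearest neighbor safety monitor is built, so the following Python sketch shows only one plausible reading: a proposed control input is accepted if it lies close to operating points already known to be safe, and is otherwise replaced by a fallback input. The distance criterion, the threshold, the fallback strategy, and all names (`is_safe`, `filter_action`, `max_mean_dist`) are assumptions for illustration, not the authors' implementation.

```python
import math


def k_nearest_distances(query, points, k):
    """Return the k smallest Euclidean distances from query to points."""
    return sorted(math.dist(query, p) for p in points)[:k]


def is_safe(query, safe_points, k=3, max_mean_dist=0.2):
    """Assumed criterion: mean distance to the k nearest known-safe
    points must not exceed max_mean_dist."""
    if len(safe_points) < k:
        return False  # too little experience: treat as unsafe
    nearest = k_nearest_distances(query, safe_points, k)
    return sum(nearest) / k <= max_mean_dist


def filter_action(proposed, fallback, safe_points, k=3, max_mean_dist=0.2):
    """Pass the proposed input through if it looks safe, else use fallback."""
    if is_safe(proposed, safe_points, k, max_mean_dist):
        return proposed
    return fallback


# Hypothetical normalized control inputs previously observed to be safe.
safe_points = [(0.1, 0.1), (0.2, 0.1), (0.1, 0.2), (0.2, 0.2)]
fallback = (0.1, 0.1)

print(filter_action((0.15, 0.15), fallback, safe_points))  # near known data
print(filter_action((0.90, 0.90), fallback, safe_points))  # far from known data
```

A monitor of this kind is conservative by construction: inputs far from experimentally verified points are rejected until nearby safe data has been collected.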

LExCI: A Framework for Reinforcement Learning with Embedded Systems

Dec 05, 2023

Abstract: Advances in artificial intelligence (AI) have led to its application in many areas of everyday life. In the context of control engineering, reinforcement learning (RL) represents a particularly promising approach as it is centred around the idea of allowing an agent to freely interact with its environment to find an optimal strategy. One of the challenges professionals face when training and deploying RL agents is that the latter often have to run on dedicated embedded devices. This could be to integrate them into an existing toolchain or to satisfy certain performance criteria like real-time constraints. Conventional RL libraries, however, cannot be easily utilised in conjunction with that kind of hardware. In this paper, we present a framework named LExCI, the Learning and Experiencing Cycle Interface, which bridges this gap and provides end-users with a free and open-source tool for training agents on embedded systems using the open-source library RLlib. Its operability is demonstrated with two state-of-the-art RL algorithms and a rapid control prototyping system.

Transfer of Reinforcement Learning-Based Controllers from Model- to Hardware-in-the-Loop

Oct 25, 2023

Abstract: The process of developing control functions for embedded systems is resource-, time-, and data-intensive, often resulting in sub-optimal cost and solution approaches. Reinforcement Learning (RL) has great potential for autonomously training agents to perform complex control tasks with minimal human intervention. Due to costly data generation and safety constraints, however, its application is mostly limited to purely simulated domains. To use RL effectively in embedded system function development, the generated agents must be able to handle real-world applications. In this context, this work focuses on accelerating the training process of RL agents by combining Transfer Learning (TL) and X-in-the-Loop (XiL) simulation. For the use case of transient exhaust gas re-circulation control for an internal combustion engine, a computationally cheap Model-in-the-Loop (MiL) simulation is used to select a suitable algorithm, fine-tune hyperparameters, and finally train candidate agents for the transfer. These pre-trained RL agents are then fine-tuned in a Hardware-in-the-Loop (HiL) system via TL. The transfer revealed the need for adjusting the reward parameters when advancing to real hardware. Further, the comparison between a purely HiL-trained and a transferred agent showed a reduction of training time by a factor of 5.9. The results emphasize the necessity to train RL agents with real hardware, and demonstrate that the maturity of the transferred policies affects both training time and performance, highlighting the strong synergies between TL and XiL simulation.

Introducing a Deep Neural Network-based Model Predictive Control Framework for Rapid Controller Implementation

Oct 12, 2023

Abstract: Model Predictive Control (MPC) provides an optimal control solution based on a cost function while allowing for the implementation of process constraints. As a model-based optimal control technique, the performance of MPC strongly depends on the model used, where a trade-off between model computation time and prediction performance exists. One solution is the integration of MPC with machine learning (ML) based process models, which are quick to evaluate online. This work presents the experimental implementation of a deep neural network (DNN) based nonlinear MPC (NMPC) for Homogeneous Charge Compression Ignition (HCCI) combustion control. The DNN model consists of a Long Short-Term Memory (LSTM) network surrounded by fully connected layers. It was trained using experimental engine data and showed acceptable prediction performance with under 5% error for all outputs. Using this model, the MPC is designed to track the Indicated Mean Effective Pressure (IMEP) and combustion phasing trajectories, while minimizing several parameters. Using the acados software package to enable the real-time implementation of the MPC on an ARM Cortex A72, the optimization calculations are completed within 1.4 ms. The external A72 processor is integrated with the prototyping engine controller using a UDP connection, allowing for rapid experimental deployment of the NMPC. The IMEP trajectory following of the developed controller was excellent, with a root-mean-square error of 0.133 bar, in addition to observing process constraints.
