Jake Ketchum

Force and Speed in a Soft Stewart Platform

Apr 18, 2025

Abstract: Many soft robots struggle to produce dynamic motions with fast, large displacements. We develop a parallel 6 degree-of-freedom (DoF) Stewart-Gough mechanism using Handed Shearing Auxetic (HSA) actuators. By using soft actuators, we are able to use one third as many mechatronic components as a rigid Stewart platform, while retaining a working payload of 2 kg and an open-loop bandwidth greater than 16 Hz. We show that the platform is capable of both precise tracing and dynamic disturbance rejection when controlling a ball and sliding puck using a Proportional Integral Derivative (PID) controller. We develop a machine-learning-based kinematics model and demonstrate a functional workspace of roughly 10 cm in each translation direction and 28 degrees in each orientation. This 6-DoF device has many of the characteristics associated with rigid components - power, speed, and total workspace - while capturing the advantages of soft mechanisms.

Active Exploration for Real-Time Haptic Training

May 20, 2024

Abstract: Tactile perception is important for robotic systems that interact with the world through touch. Touch is an active sense in which tactile measurements depend on the contact properties of an interaction--e.g., velocity, force, acceleration--as well as properties of the sensor and object under test. These dependencies make training tactile perceptual models challenging. Additionally, the effects of limited sensor life and the near-field nature of tactile sensors preclude the practical collection of exhaustive data sets even for fairly simple objects. Active learning provides a mechanism for focusing on only the most informative aspects of an object during data collection. Here we employ an active learning approach that uses a data-driven model's entropy as an uncertainty measure and explore relative to that entropy conditioned on the sensor state variables. Using a coverage-based ergodic controller, we train perceptual models in near-real time. We demonstrate our approach using a biomimetic sensor, exploring "tactile scenes" composed of shapes, textures, and objects. Each learned representation provides a perceptual sensor model for a particular tactile scene. Models trained on actively collected data outperform their randomly collected counterparts in real-time training tests. Additionally, we find that the resulting network entropy maps can be used to identify high-salience portions of a tactile scene.
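The idea of using model entropy as an uncertainty measure over sensor states can be illustrated with a small sketch. This is not the authors' implementation: the function names, the state labels, and the example probabilities are all hypothetical, and the ergodic controller itself is omitted. The sketch only shows how per-state predictive entropy could be normalized into a target distribution that a coverage-based controller might then try to match.

```python
import math


def shannon_entropy(probs):
    """Shannon entropy (in nats) of a discrete probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def entropy_target_distribution(predictions):
    """Map each sensor state to a weight proportional to predictive entropy.

    `predictions` is a dict from a (hypothetical) sensor-state label to the
    model's class probabilities at that state. The weights sum to 1, so they
    can serve as a target distribution for coverage-style exploration.
    """
    entropies = {state: shannon_entropy(p) for state, p in predictions.items()}
    total = sum(entropies.values())
    if total == 0.0:
        # The model is certain everywhere: fall back to uniform coverage.
        uniform = 1.0 / len(entropies)
        return {state: uniform for state in entropies}
    return {state: h / total for state, h in entropies.items()}


# Hypothetical class probabilities at three contact conditions.
example_predictions = {
    "slow_light_touch": [0.98, 0.01, 0.01],
    "fast_light_touch": [0.40, 0.30, 0.30],
    "fast_firm_touch": [1 / 3, 1 / 3, 1 / 3],
}
target = entropy_target_distribution(example_predictions)
most_uncertain = max(target, key=target.get)
```

Under these made-up numbers, the uniform prediction carries the most entropy, so the corresponding state would receive the largest share of exploration time.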

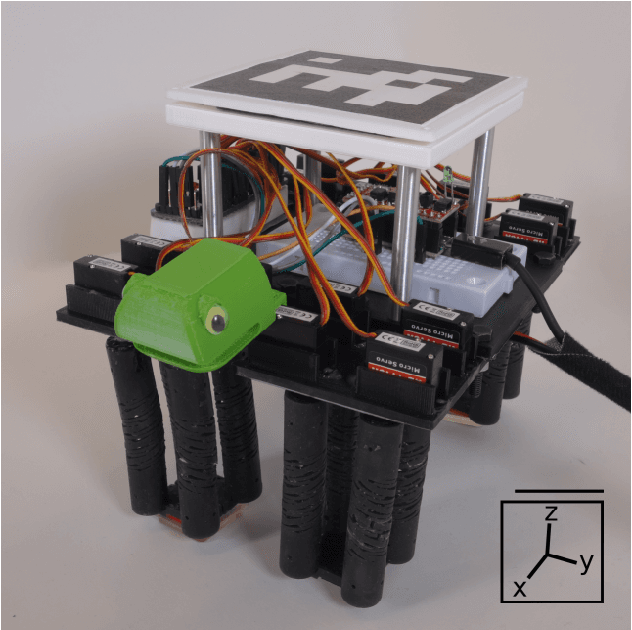

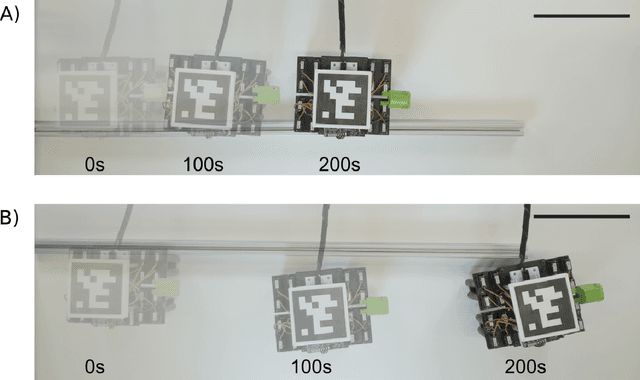

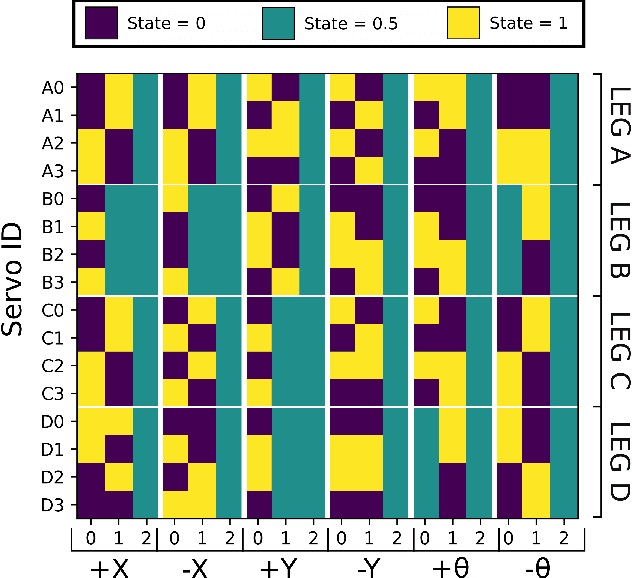

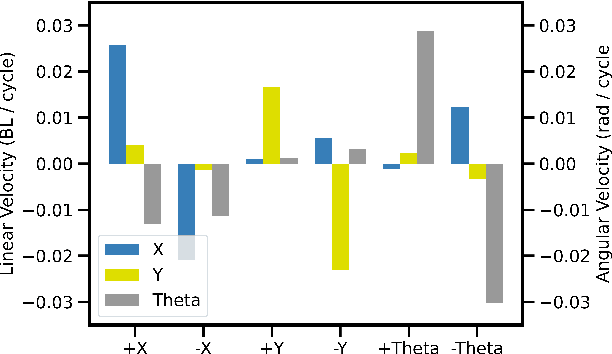

Automated Gait Generation For Walking, Soft Robotic Quadrupeds

Oct 07, 2023

Abstract: Gait generation for soft robots is challenging due to the nonlinear dynamics and high-dimensional input spaces of soft actuators. Limitations in soft robotic control and perception force researchers to hand-craft open-loop controllers for gait sequences, which is a non-trivial process. Moreover, short soft actuator lifespans and natural variations in actuator behavior limit machine learning techniques to settings that can be learned on the same time scales as robot deployment. Lastly, simulation is not always possible, because soft robotic materials are heterogeneous and nonlinear and their dynamics change with wear. We present a sample-efficient, simulation-free method for self-generating soft robot gaits, using minimal computation. This technique is demonstrated on a motorized soft robotic quadruped that walks using four legs constructed from 16 "handed shearing auxetic" (HSA) actuators. To manage the dimension of the search space, gaits are composed of two sequential sets of leg motions selected from 7 possible primitives. Pairs of primitives are executed on one leg at a time; we then select the best-performing pair to execute while moving on to subsequent legs. This method -- which uses no simulation, sophisticated computation, or user input -- consistently generates good translation and rotation gaits in as little as 4 minutes of hardware experimentation, outperforming hand-crafted gaits. This is the first demonstration of completely autonomous gait generation in a soft robot.
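The leg-by-leg selection described in the abstract resembles a greedy coordinate search, which can be sketched as follows. This is a minimal illustration and not the authors' code. It assumes that a pair is an ordered choice of two primitives with repetition allowed (49 candidates per leg), that untested legs hold a default pair, and that `evaluate` stands in for a hardware trial returning a score; the abstract does not specify any of these details. The toy scoring function is purely hypothetical.

```python
import itertools

NUM_PRIMITIVES = 7  # from the abstract
NUM_LEGS = 4        # from the abstract


def greedy_gait_search(evaluate, num_legs=NUM_LEGS,
                       num_primitives=NUM_PRIMITIVES, default_pair=(0, 0)):
    """Pick the best primitive pair for one leg at a time.

    `evaluate(gait)` is a stand-in for a hardware trial: it takes a list of
    per-leg primitive pairs and returns a score (higher is better).
    Returns the selected gait and the number of trials performed.
    """
    gait = [default_pair] * num_legs
    trials = 0
    for leg in range(num_legs):
        best_pair, best_score = gait[leg], float("-inf")
        # Assumption: ordered pairs with repetition, so 7 * 7 candidates.
        for pair in itertools.product(range(num_primitives), repeat=2):
            candidate = list(gait)
            candidate[leg] = pair
            score = evaluate(candidate)
            trials += 1
            if score > best_score:
                best_pair, best_score = pair, score
        # Lock in this leg's best pair before moving to the next leg.
        gait[leg] = best_pair
    return gait, trials


# Hypothetical stand-in for a hardware experiment: the score counts how
# many legs match a hidden "good" gait.
hidden_gait = [(3, 5), (1, 6), (2, 2), (6, 0)]


def toy_evaluate(gait):
    return sum(1 for chosen, good in zip(gait, hidden_gait) if chosen == good)


found_gait, num_trials = greedy_gait_search(toy_evaluate)
```

Under these assumptions the greedy search needs 4 × 49 = 196 trials, whereas exhaustively testing every combination of pairs across four legs would need 49^4 = 5,764,801. The trade-off is that a greedy search can miss gaits that depend on coordination between legs.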
