Jacqueline Ankenbauer

Global Localization in Unstructured Environments using Semantic Object Maps Built from Various Viewpoints

Mar 08, 2023

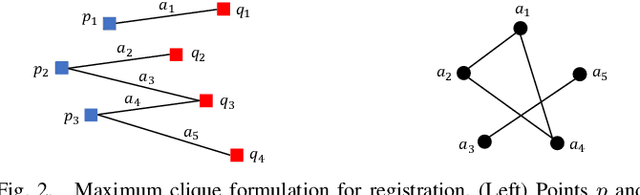

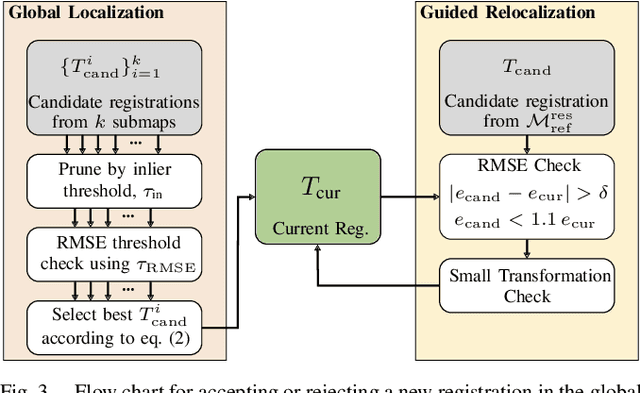

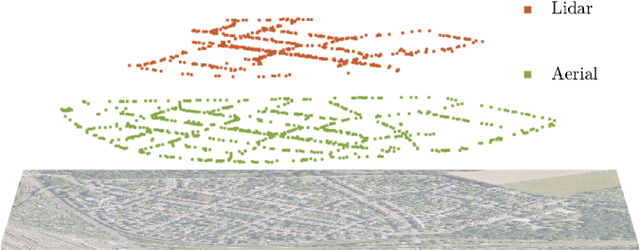

Abstract: We present a novel framework for global localization and guided relocalization of a vehicle in an unstructured environment. Compared to existing methods, our pipeline does not rely on cues from urban fixtures (e.g., lane markings, buildings), nor does it make assumptions that require the vehicle to be navigating on a road network. Instead, we achieve localization in both urban and non-urban environments by robustly associating and registering the vehicle's local semantic object map with a compact semantic reference map, potentially built from other viewpoints, time periods, and/or modalities. Robustness to noise, outliers, and missing objects is achieved through our graph-based data association algorithm. Further, the guided relocalization capability of our pipeline mitigates drift inherent in odometry-based localization after the initial global localization. We evaluate our pipeline on two publicly available, real-world datasets to demonstrate its effectiveness at global localization in both non-urban and urban environments. The Katwijk Beach Planetary Rover dataset is used to show our pipeline's ability to perform accurate global localization in unstructured environments. Demonstrations on the KITTI dataset achieve an average pose error of 3.8 m across all 35 localization events on Sequence 00 when localizing in a reference map created from aerial images. Compared to existing works, our pipeline is more general because it can perform global localization in unstructured environments using maps built from different viewpoints.
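The abstract does not spell out the graph-based data association algorithm, so the following is only a generic sketch of the idea, not the authors' method. It assumes that two candidate object pairings are consistent when they preserve the distance between the objects, and it uses a simple greedy heuristic (an assumption made here for brevity) to pick a mutually consistent set. The function names `consistency_graph` and `greedy_clique`, the tolerance `eps`, and the toy coordinates are all hypothetical.

```python
import itertools
import numpy as np

def consistency_graph(local_pts, ref_pts, eps=0.1):
    """Build a graph whose nodes are candidate pairings (local i, reference j).

    Two pairings are connected when they use different objects on both sides
    and the local inter-object distance matches the reference one within eps.
    """
    pairs = list(itertools.product(range(len(local_pts)), range(len(ref_pts))))
    n = len(pairs)
    adj = np.zeros((n, n), dtype=bool)
    for a in range(n):
        i, j = pairs[a]
        for b in range(a + 1, n):
            k, l = pairs[b]
            if i == k or j == l:
                continue  # one object cannot be matched twice
            d_local = np.linalg.norm(local_pts[i] - local_pts[k])
            d_ref = np.linalg.norm(ref_pts[j] - ref_pts[l])
            if abs(d_local - d_ref) < eps:
                adj[a, b] = adj[b, a] = True
    return pairs, adj

def greedy_clique(adj):
    """Greedy heuristic: visit nodes by decreasing degree and keep a node
    only if it is consistent with every node already kept."""
    order = np.argsort(-adj.sum(axis=1))
    clique = []
    for v in order:
        if all(adj[v, u] for u in clique):
            clique.append(int(v))
    return clique

# Toy data (hypothetical): three shared objects, one outlier on each side.
ref_pts = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 3.0], [20.0, 20.0]])
local_src = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 3.0], [-15.0, 9.0]])
rot = np.array([[0.0, -1.0], [1.0, 0.0]])  # 90 degree rotation
local_pts = local_src @ rot.T + np.array([5.0, -2.0])

pairs, adj = consistency_graph(local_pts, ref_pts)
matches = sorted(pairs[v] for v in greedy_clique(adj))
print(matches)
```

Because inter-object distances are unchanged by rotation and translation, the consistency test needs no initial pose guess, which is what makes this kind of formulation suitable for global localization. A greedy pass is not guaranteed to find the largest consistent set in general; it is used here only to keep the sketch short.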

View-Invariant Localization using Semantic Objects in Changing Environments

Sep 28, 2022

Abstract: This paper proposes a novel framework for real-time localization and egomotion tracking of a vehicle in a reference map. The core idea is to map the semantic objects observed by the vehicle and register them to their corresponding objects in the reference map. While several recent works have leveraged semantic information for cross-view localization, the main contribution of this work is a view-invariant formulation that makes the approach directly applicable to any viewpoint configuration for which objects are detectable. Another distinctive feature is robustness to changes in the environment/objects due to a data association scheme suited for extreme outlier regimes (e.g., 90% association outliers). To demonstrate our framework, we consider an example of localizing a ground vehicle in a reference object map using only cars as objects. While only a stereo camera is used for the ground vehicle, we consider reference maps constructed a priori from ground viewpoints using stereo cameras and Lidar scans, as well as from georeferenced aerial images captured at a different date, to demonstrate the framework's robustness to different modalities, viewpoints, and environment changes. Evaluations on the KITTI dataset show that, over a 3.7 km trajectory, localization occurs in 36 sec and is followed by real-time egomotion tracking with an average position error of 8.5 m in a Lidar reference map. On an aerial object map where 77% of objects are outliers, localization is achieved in 71 sec with an average position error of 7.9 m.
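Once objects have been matched, registering them to the reference map amounts to estimating a rigid transform. The abstract does not say which solver the authors use, so the sketch below substitutes the standard SVD-based least-squares alignment (the Kabsch method) in 2D. The function name `register_2d` and the sample coordinates are hypothetical, and the correspondences are assumed to be already known and free of outliers.

```python
import numpy as np

def register_2d(local_pts, ref_pts):
    """Least-squares rigid transform (R, t) such that ref ~= R @ local + t.

    Both inputs are (N, 2) arrays with row i of local_pts matched to row i
    of ref_pts. The points must not all be collinear.
    """
    mu_l = local_pts.mean(axis=0)
    mu_r = ref_pts.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (local_pts - mu_l).T @ (ref_pts - mu_r)
    U, _, Vt = np.linalg.svd(H)
    # Force det(R) = +1 so the result is a rotation, not a reflection.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, d]) @ U.T
    t = mu_r - R @ mu_l
    return R, t

# Hypothetical matched object positions in the vehicle's local map.
local_pts = np.array([[1.0, 2.0], [4.0, -1.0], [-2.0, 0.5], [3.0, 3.0]])

# Synthetic reference positions generated from a known pose for checking.
theta = np.deg2rad(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta)],
                   [np.sin(theta),  np.cos(theta)]])
t_true = np.array([10.0, -5.0])
ref_pts = local_pts @ R_true.T + t_true

R_est, t_est = register_2d(local_pts, ref_pts)
heading_deg = np.rad2deg(np.arctan2(R_est[1, 0], R_est[0, 0]))
print(heading_deg, t_est)
```

The recovered `(R, t)` maps the local object map into the reference frame, so it also gives the vehicle's pose in that frame. With noisy detections the same closed-form solution returns the least-squares fit rather than an exact match.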
