Jacek P. Dmochowski

Learning latent causal relationships in multiple time series

Mar 21, 2022

Abstract: Identifying the causal structure of systems with multiple dynamic elements is critical to several scientific disciplines. The conventional approach is to conduct statistical tests of causality, for example with Granger Causality, between observed signals that are selected a priori. Here it is posited that, in many systems, the causal relations are embedded in a latent space that is expressed in the observed data as a linear mixture. A technique for blindly identifying the latent sources is presented: the observations are projected onto pairs of components, one driving and one driven, so as to maximize the strength of causality between the members of each pair. This leads to an optimization problem with closed-form expressions for the objective function and gradient that can be solved with off-the-shelf techniques. After a proof-of-concept demonstration on synthetic data with known latent structure, the technique is applied to recordings from the human brain and to historical cryptocurrency prices. In both cases, the approach recovers multiple strong causal relationships that are not evident in the observed data. The proposed technique is unsupervised and can be readily applied to any multivariate time series to shed light on the causal relationships underlying the data.
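The abstract does not spell out the objective, so the following is only a rough sketch of the idea under stated assumptions. It measures Granger causality in one common way: the log ratio of residual variances of a restricted autoregressive model (the driven signal's own past) and a full model (adding the driving signal's past), both fit by least squares. It then searches for a driving projection `w` and a driven projection `v` of the observations with SciPy's BFGS, using finite-difference gradients rather than the closed-form gradient the paper derives. The function names, the lag order `L`, the start from the strongest observed channel pair, and the synthetic mixing matrix are all hypothetical.

```python
import itertools

import numpy as np
from scipy.optimize import minimize


def lagged(z, L):
    """Columns are lags 1..L of the 1-D series z, aligned with z[L:]."""
    T = len(z)
    return np.column_stack([z[L - k:T - k] for k in range(1, L + 1)])


def granger_strength(x, y, L=3):
    """Assumed measure of Granger causality x -> y:
    log(restricted residual variance / full residual variance)."""
    target = y[L:]
    ones = np.ones((len(target), 1))
    restricted = np.hstack([ones, lagged(y, L)])   # y's own past only
    full = np.hstack([restricted, lagged(x, L)])   # plus x's past
    res_r = target - restricted @ np.linalg.lstsq(restricted, target, rcond=None)[0]
    res_f = target - full @ np.linalg.lstsq(full, target, rcond=None)[0]
    return float(np.log(res_r.var() / res_f.var()))


def best_observed_pair(X, L=3):
    """Strongest pairwise Granger causality among the observed channels."""
    pairs = itertools.permutations(range(X.shape[1]), 2)
    return max((granger_strength(X[:, i], X[:, j], L), i, j) for i, j in pairs)


def latent_pair(X, L=3):
    """Hypothetical search for projections w (driving) and v (driven) that
    maximize granger_strength(X @ w, X @ v), using finite-difference BFGS
    started from the strongest observed channel pair."""
    D = X.shape[1]
    _, i, j = best_observed_pair(X, L)
    theta0 = np.zeros(2 * D)
    theta0[i] = 1.0        # w starts as channel i
    theta0[D + j] = 1.0    # v starts as channel j

    def neg_strength(theta):
        return -granger_strength(X @ theta[:D], X @ theta[D:], L)

    result = minimize(neg_strength, theta0, method="BFGS")
    w, v = result.x[:D], result.x[D:]
    # The measure ignores the scale of w and v, so report unit-norm projections.
    return w / np.linalg.norm(w), v / np.linalg.norm(v), float(-result.fun)


# Synthetic example: one latent source drives another, then all are mixed.
rng = np.random.default_rng(0)
T = 2000
s1 = rng.standard_normal(T)                                  # latent driving source
s2 = np.zeros(T)
s2[1:] = 0.8 * s1[:-1] + 0.3 * rng.standard_normal(T - 1)    # driven by s1's past
s3 = rng.standard_normal(T)                                  # unrelated latent source
S = np.column_stack([s1, s2, s3])
A = np.array([[1.0, 0.8, 0.6],                               # hypothetical mixing matrix
              [0.7, 1.0, 0.5],
              [0.4, 0.9, 1.0]])
X = S @ A.T                                                  # observed linear mixtures
w, v, strength = latent_pair(X)
observed, i, j = best_observed_pair(X)
print(f"strongest observed pair {i}->{j}: {observed:.2f}; latent pair: {strength:.2f}")
```

Because BFGS only accepts steps that lower the objective, starting from the best raw channel pair means this sketch should never report a weaker relationship than the strongest one visible in the observed channels.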

Correlated Components Analysis - Extracting Reliable Dimensions in Multivariate Data

Sep 10, 2018

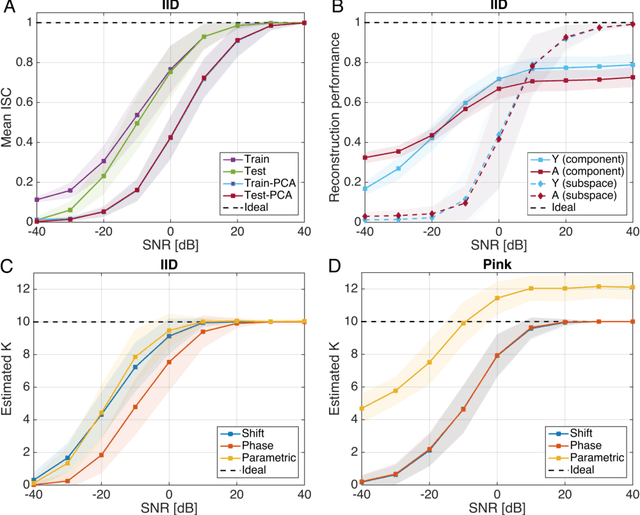

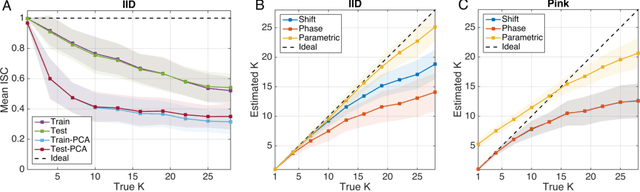

Abstract: How does one find dimensions in multivariate data that are reliably expressed across repetitions? For example, in a brain imaging study one may want to identify combinations of neural signals that are reliably expressed across multiple trials or subjects. For a behavioral assessment with multiple ratings, one may want to identify an aggregate score that is reliably reproduced across raters. Correlated Components Analysis (CorrCA) addresses this problem by identifying components that are maximally correlated between repetitions (e.g., trials, subjects, raters). Here we formalize this as the maximization of the ratio of between-repetition to within-repetition covariance. We show that this criterion maximizes repeat-reliability, defined as the mean over the variance across repeats, and that it leads to CorrCA or to multi-set Canonical Correlation Analysis, depending on the constraints. Surprisingly, we also find that CorrCA is equivalent to Linear Discriminant Analysis for equal-mean signals, which provides an unexpected link between classic concepts of multivariate analysis. We provide an exact parametric test for statistical significance based on the F-statistic for normally distributed independent samples, and present and validate shuffle statistics for the case of dependent samples. Regularization and extension to non-linear mappings using kernels are also presented. The algorithms are demonstrated on a series of data analysis applications, and we provide all code and data required to reproduce the results.
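A minimal sketch of the criterion stated in the abstract, assuming data arranged as `X[repetition, time, dimension]`. The between-repetition covariance is built from the cross-covariances of distinct repetitions, and maximizing its ratio to the within-repetition covariance becomes a generalized symmetric eigenvalue problem, solved here with `scipy.linalg.eigh`. The function name `corrca`, the shrinkage parameter `gamma` (one common way to regularize the within-repetition covariance), and the synthetic loading `a` are illustrative assumptions; the parametric F-test, shuffle statistics, and kernel extension are not shown.

```python
import numpy as np
from scipy.linalg import eigh


def corrca(X, gamma=0.0):
    """X has shape (N repetitions, T samples, D dimensions).
    Returns the eigenvalues (descending) and projection vectors (columns of W)."""
    N, T, D = X.shape
    # Within-repetition covariance: sum of each repetition's own covariance.
    Rw = sum(np.cov(X[i], rowvar=False) for i in range(N))
    # Covariance of the summed signal = sum over all (i, j) of cross-covariances.
    Rt = N ** 2 * np.cov(X.mean(axis=0), rowvar=False)
    # Between-repetition covariance: keep only the i != j terms, scaled so that,
    # for repetitions of equal variance, w' Rb w / w' Rw w is the average
    # correlation between repetitions.
    Rb = (Rt - Rw) / (N - 1)
    # Optional (assumed) shrinkage of Rw toward a scaled identity.
    Rw_reg = (1 - gamma) * Rw + gamma * np.trace(Rw) / D * np.eye(D)
    evals, W = eigh(Rb, Rw_reg)          # solves Rb w = lambda * Rw_reg w
    order = np.argsort(evals)[::-1]
    return evals[order], W[:, order]


# Synthetic example: one signal shared by all repetitions, plus independent noise.
rng = np.random.default_rng(0)
N, T, D = 5, 2000, 4
s = rng.standard_normal(T)                     # shared signal
a = np.array([2.0, 1.0, 0.0, 0.0])             # hypothetical loading of the shared signal
X = s[None, :, None] * a[None, None, :] + rng.standard_normal((N, T, D))
isc, W = corrca(X)
print(np.round(isc, 3))
```

In this example the projection onto `a` carries signal variance 5 against noise variance 1, so the leading eigenvalue should be close to 5/6 and the others close to zero.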