Jaakko Suutala

Better Classifier Calibration for Small Data Sets

Feb 24, 2020

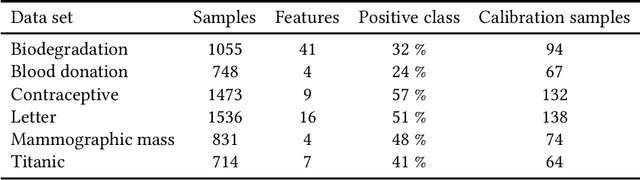

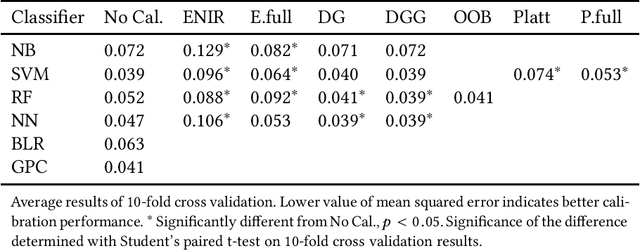

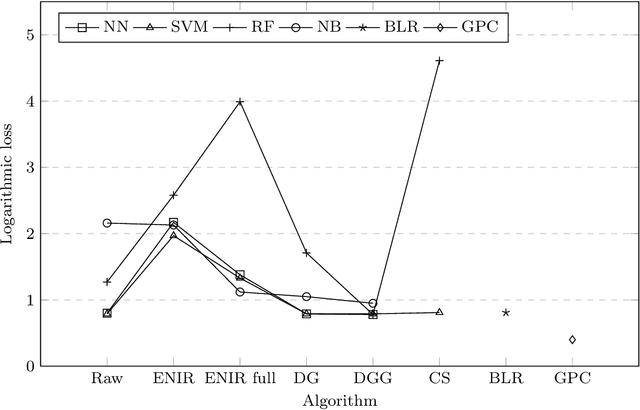

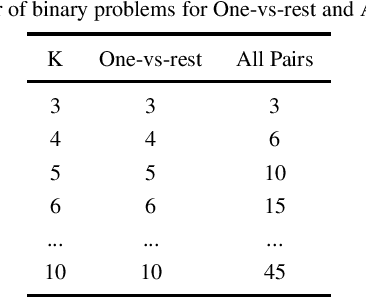

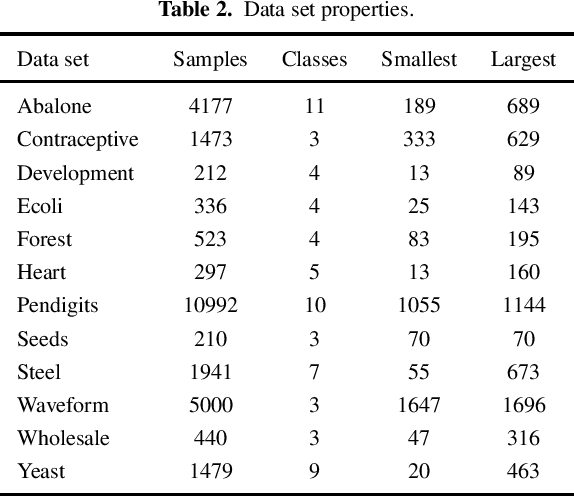

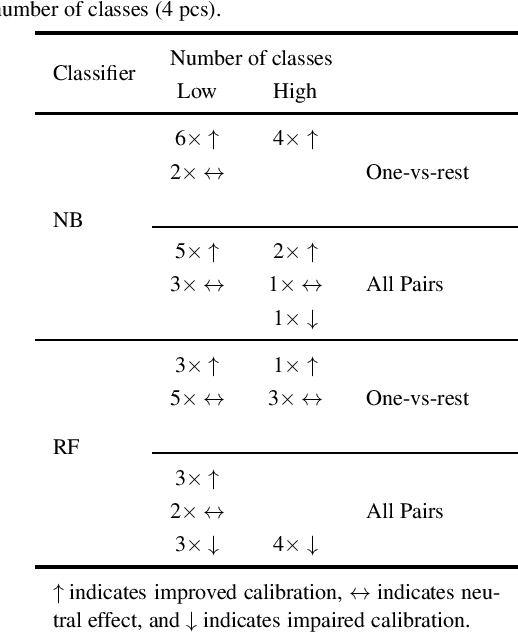

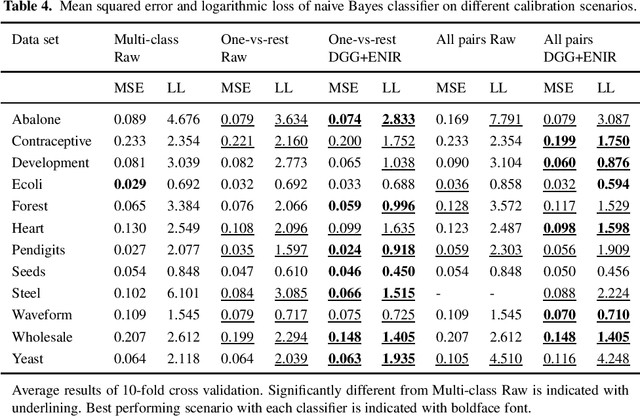

Abstract: Classifier calibration does not always go hand in hand with the classifier's ability to separate the classes. There are applications where good calibration, i.e. the ability to produce accurate probability estimates, is more important than class separation. When the amount of training data is limited, the traditional approach to improving calibration starts to break down. In this article we show that generating more data for calibration improves the performance of calibration algorithms in many cases where a classifier does not naturally produce well-calibrated outputs and the traditional approach fails. The proposed approach adds computational cost, but because its main use case is small data sets, this extra cost remains insignificant, and prediction time is comparable to that of other methods. Among the tested classifiers, the largest improvement was observed for random forest and naive Bayes. The proposed approach can therefore be recommended at least for those classifiers when training data is limited and good calibration is essential.
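To make "accurate probability estimates" concrete, the following is a minimal sketch of one common way to quantify calibration for a binary classifier: a binned expected calibration error. The function name `expected_calibration_error`, the equal-width binning, and the default of 10 bins are illustrative assumptions; the abstract does not state which error measure the article uses.

```python
import numpy as np

def expected_calibration_error(y_true, y_prob, n_bins=10):
    """Binned gap between predicted probability and observed positive rate.

    Illustrative sketch only; not necessarily the metric used in the article.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_prob = np.asarray(y_prob, dtype=float)
    # Assign each prediction to one of n_bins equal-width bins on [0, 1].
    bin_ids = np.minimum((y_prob * n_bins).astype(int), n_bins - 1)
    ece = 0.0
    for b in range(n_bins):
        mask = bin_ids == b
        if not mask.any():
            continue  # empty bins contribute nothing
        # Gap between mean predicted probability and observed positive rate.
        gap = abs(y_prob[mask].mean() - y_true[mask].mean())
        # Weight the gap by the fraction of samples that fall in this bin.
        ece += mask.mean() * gap
    return ece

# Three of four samples are positive, so a constant prediction of 0.75
# matches the observed rate, while a constant 0.95 is overconfident.
labels = [0, 1, 1, 1]
well_calibrated = expected_calibration_error(labels, [0.75] * 4)
overconfident = expected_calibration_error(labels, [0.95] * 4)
```

With few samples, each bin holds only a handful of points, so the per-bin positive rate is itself noisy; this is one way to see why calibration is hard on small data sets.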

Better Multi-class Probability Estimates for Small Data Sets

Jan 30, 2020

Abstract: Many classification applications require accurate probability estimates in addition to good class separation, but classifiers are often designed with only the latter in mind. Calibration is the process of improving probability estimates by post-processing, but commonly used calibration algorithms work poorly on small data sets and assume the classification task to be binary. Both restrictions limit their real-world applicability. The previously introduced Data Generation and Grouping algorithm alleviates the problem posed by small data sets, and in this article we demonstrate that it can also be applied to multi-class problems, which addresses the other limitation. Our experiments show that calibration error can be decreased using the proposed approach and that the additional computational cost is acceptable.
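For context on the binary restriction, a generic way to reuse a binary calibrator on a multi-class problem is a one-vs-rest reduction: calibrate each class's score separately, then renormalise each row so the probabilities sum to one. The sketch below illustrates that generic reduction only; the abstract does not say that the article extends the Data Generation and Grouping algorithm this way. The function name `calibrate_one_vs_rest` and the toy calibrators are hypothetical.

```python
import numpy as np

def calibrate_one_vs_rest(scores, binary_calibrators):
    """Apply one binary calibrator per class column, then renormalise rows.

    scores: array of shape (n_samples, n_classes) with uncalibrated scores.
    binary_calibrators: one callable per class, mapping a 1-D score array
        to calibrated probabilities for "this class vs. the rest".
    Generic reduction for illustration; not the article's stated method.
    """
    scores = np.asarray(scores, dtype=float)
    columns = [
        np.asarray(calibrate(scores[:, k]), dtype=float)
        for k, calibrate in enumerate(binary_calibrators)
    ]
    calibrated = np.column_stack(columns)
    row_sums = calibrated.sum(axis=1, keepdims=True)
    n_classes = calibrated.shape[1]
    # Renormalise; rows that sum to zero fall back to a uniform distribution.
    safe_sums = np.where(row_sums > 0, row_sums, 1.0)
    return np.where(row_sums > 0, calibrated / safe_sums, 1.0 / n_classes)

# Toy calibrators (hypothetical): squaring stands in for a fitted binary map.
toy_calibrators = [lambda p: p ** 2] * 3
probs = calibrate_one_vs_rest([[0.2, 0.2, 0.6]], toy_calibrators)
```

The renormalisation step is needed because independently calibrated one-vs-rest outputs are not guaranteed to sum to one.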
