J. Huang

LimeSoDa: A Dataset Collection for Benchmarking of Machine Learning Regressors in Digital Soil Mapping

Feb 27, 2025

Abstract:Digital soil mapping (DSM) relies on a broad pool of statistical methods, yet determining the optimal method for a given context remains challenging and contentious. Benchmarking studies on multiple datasets are needed to reveal strengths and limitations of commonly used methods. Existing DSM studies usually rely on a single dataset with restricted access, leading to incomplete and potentially misleading conclusions. To address these issues, we introduce an open-access dataset collection called Precision Liming Soil Datasets (LimeSoDa). LimeSoDa consists of 31 field- and farm-scale datasets from various countries. Each dataset has three target soil properties: (1) soil organic matter or soil organic carbon, (2) clay content and (3) pH, alongside a set of features. Features are dataset-specific and were obtained by optical spectroscopy, proximal- and remote soil sensing. All datasets were aligned to a tabular format and are ready-to-use for modeling. We demonstrated the use of LimeSoDa for benchmarking by comparing the predictive performance of four learning algorithms across all datasets. This comparison included multiple linear regression (MLR), support vector regression (SVR), categorical boosting (CatBoost) and random forest (RF). The results showed that although no single algorithm was universally superior, certain algorithms performed better in specific contexts. MLR and SVR performed better on high-dimensional spectral datasets, likely due to better compatibility with principal components. In contrast, CatBoost and RF exhibited considerably better performances when applied to datasets with a moderate number (< 20) of features. These benchmarking results illustrate that the performance of a method is highly context-dependent. LimeSoDa therefore provides an important resource for improving the development and evaluation of statistical methods in DSM.
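The benchmarking idea in this abstract, comparing several regressors on every dataset with the same resampling scheme, can be sketched as follows. This is a minimal, standard-library-only illustration and not the LimeSoDa tooling or the paper's protocol: the dataset `toy_field`, the single-feature rows, and the two stand-in learners `fit_mean` and `fit_line` are all hypothetical. In practice MLR, SVR, CatBoost or RF would take the place of the stand-ins.

```python
import math
from typing import Callable, Dict, List, Tuple

Row = Tuple[float, float]                     # (feature, target); one feature for brevity
Predictor = Callable[[float], float]
Learner = Callable[[List[Row]], Predictor]    # fit on training rows, return a predictor


def fit_mean(train: List[Row]) -> Predictor:
    """Baseline stand-in: always predict the training mean."""
    mean_y = sum(y for _, y in train) / len(train)
    return lambda x: mean_y


def fit_line(train: List[Row]) -> Predictor:
    """Stand-in for a linear model: ordinary least squares on one feature."""
    n = len(train)
    mx = sum(x for x, _ in train) / n
    my = sum(y for _, y in train) / n
    sxx = sum((x - mx) ** 2 for x, _ in train)
    slope = sum((x - mx) * (y - my) for x, y in train) / sxx if sxx else 0.0
    return lambda x: my + slope * (x - mx)


def cv_rmse(learner: Learner, data: List[Row], k: int = 5) -> float:
    """k-fold cross-validated RMSE; fold i holds out every k-th row starting at i."""
    squared_errors: List[float] = []
    for fold in range(k):
        test = data[fold::k]
        train = [row for i, row in enumerate(data) if i % k != fold]
        predict = learner(train)
        squared_errors += [(predict(x) - y) ** 2 for x, y in test]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def benchmark(datasets: Dict[str, List[Row]],
              learners: Dict[str, Learner], k: int = 5) -> Dict[str, Dict[str, float]]:
    """Return RMSE per dataset and per learner, using identical folds for all learners."""
    return {name: {label: cv_rmse(fit, rows, k) for label, fit in learners.items()}
            for name, rows in datasets.items()}


# Hypothetical toy dataset: an exactly linear relationship y = 2x + 1.
datasets = {"toy_field": [(float(x), 2.0 * x + 1.0) for x in range(10)]}
learners = {"mean": fit_mean, "line": fit_line}
results = benchmark(datasets, learners)
```

Using the same folds for every learner keeps the comparison paired, so differences in RMSE reflect the learners and not the split.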

User Identity Protection in EEG-based Brain-Computer Interfaces

Dec 13, 2024

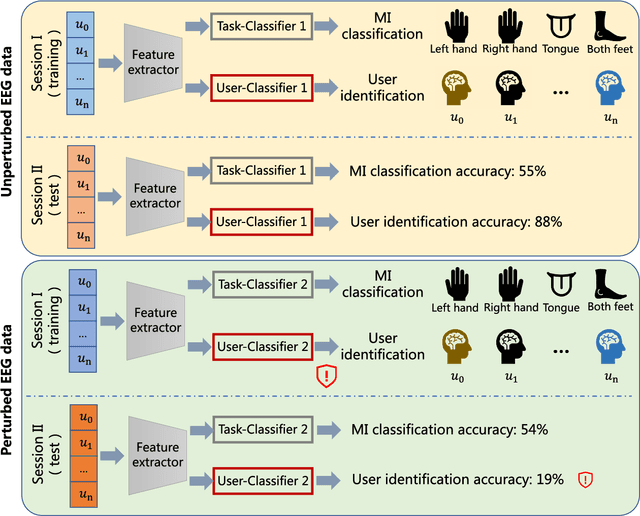

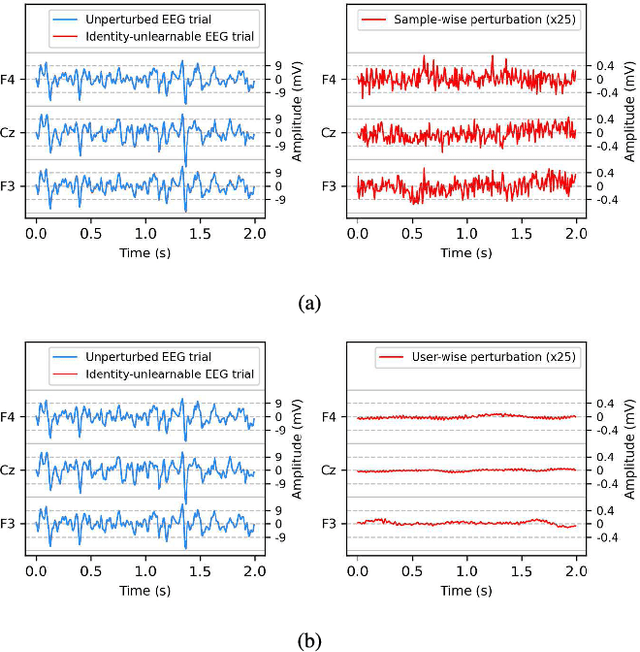

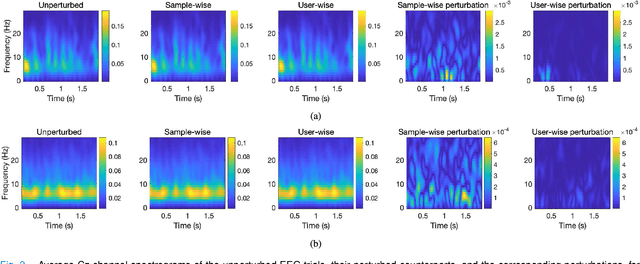

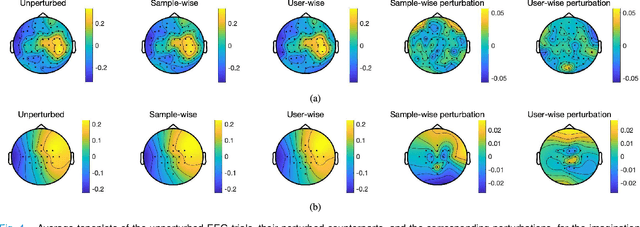

Abstract:A brain-computer interface (BCI) establishes a direct communication pathway between the brain and an external device. Electroencephalogram (EEG) is the most popular input signal in BCIs, due to its convenience and low cost. Most research on EEG-based BCIs focuses on the accurate decoding of EEG signals; however, EEG signals also contain rich private information, e.g., user identity, emotion, and so on, which should be protected. This paper first exposes a serious privacy problem in EEG-based BCIs, i.e., the user identity in EEG data can be easily learned so that different sessions of EEG data from the same user can be associated together to more reliably mine private information. To address this issue, we further propose two approaches to convert the original EEG data into identity-unlearnable EEG data, i.e., removing the user identity information while maintaining the good performance on the primary BCI task. Experiments on seven EEG datasets from five different BCI paradigms showed that on average the generated identity-unlearnable EEG data can reduce the user identification accuracy from 70.01% to at most 21.36%, greatly facilitating user privacy protection in EEG-based BCIs.

Artificial Intelligence for the Electron Ion Collider (AI4EIC)

Jul 17, 2023

Abstract:The Electron-Ion Collider (EIC), a state-of-the-art facility for studying the strong force, is expected to begin commissioning its first experiments in 2028. This is an opportune time for artificial intelligence (AI) to be included from the start at this facility and in all phases that lead up to the experiments. The second annual workshop organized by the AI4EIC working group, which recently took place, centered on exploring all current and prospective application areas of AI for the EIC. This workshop is not only beneficial for the EIC, but also provides valuable insights for the newly established ePIC collaboration at EIC. This paper summarizes the different activities and R&D projects covered across the sessions of the workshop and provides an overview of the goals, approaches and strategies regarding AI/ML in the EIC community, as well as cutting-edge techniques currently studied in other experiments.

AI-assisted Optimization of the ECCE Tracking System at the Electron Ion Collider

May 20, 2022

Abstract:The Electron-Ion Collider (EIC) is a cutting-edge accelerator facility that will study the nature of the "glue" that binds the building blocks of the visible matter in the universe. The proposed experiment will be realized at Brookhaven National Laboratory in approximately 10 years from now, with detector design and R&D currently ongoing. Notably, EIC is one of the first large-scale facilities to leverage Artificial Intelligence (AI) already starting from the design and R&D phases. The EIC Comprehensive Chromodynamics Experiment (ECCE) is a consortium that proposed a detector design based on a 1.5T solenoid. The EIC detector proposal review concluded that the ECCE design will serve as the reference design for an EIC detector. Herein we describe a comprehensive optimization of the ECCE tracker using AI. The work required a complex parametrization of the simulated detector system. Our approach dealt with an optimization problem in a multidimensional design space driven by multiple objectives that encode the detector performance, while satisfying several mechanical constraints. We describe our strategy and show results obtained for the ECCE tracking system. The AI-assisted design is agnostic to the simulation framework and can be extended to other sub-detectors or to a system of sub-detectors to further optimize the performance of the EIC detector.
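A multi-objective design problem with constraints, as described in this abstract, is commonly summarized by the set of feasible designs that no other feasible design beats on every objective (the Pareto front). The sketch below illustrates only that selection step, under stated assumptions: the candidate tuples, the identity objective function and the `is_feasible` rule are hypothetical toys, and nothing here reproduces the ECCE parametrization or the optimizer used by the authors.

```python
from typing import Callable, List, Sequence, Tuple

Design = Tuple[float, ...]
Objectives = Tuple[float, ...]


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if `a` is no worse than `b` on every objective and strictly better on one.

    All objectives are assumed to be minimized.
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(candidates: List[Design],
                 objectives: Callable[[Design], Objectives],
                 is_feasible: Callable[[Design], bool]) -> List[Design]:
    """Keep feasible designs whose objective vector is not dominated by another feasible one."""
    scored = [(d, objectives(d)) for d in candidates if is_feasible(d)]
    return [d for d, f in scored
            if not any(dominates(g, f) for _, g in scored)]


# Hypothetical toy problem: two design parameters, both objectives equal to the
# parameters themselves, and a made-up "mechanical" constraint on their sum.
candidates = [(1.0, 1.0), (1.0, 4.0), (2.0, 2.0), (4.0, 1.0), (3.0, 3.0)]
front = pareto_front(
    candidates,
    objectives=lambda d: (d[0], d[1]),
    is_feasible=lambda d: d[0] + d[1] >= 4.0,
)
```

In the toy data, `(1.0, 1.0)` would dominate every other design but violates the constraint, so it is excluded before the dominance check, and `(3.0, 3.0)` is dropped because `(2.0, 2.0)` dominates it.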

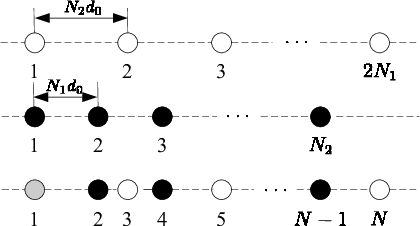

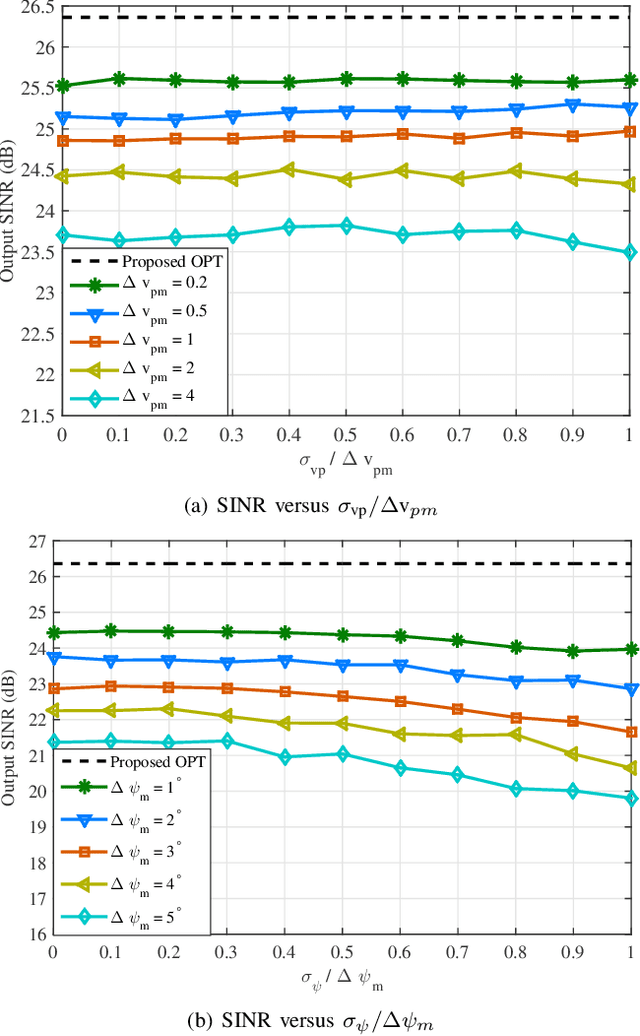

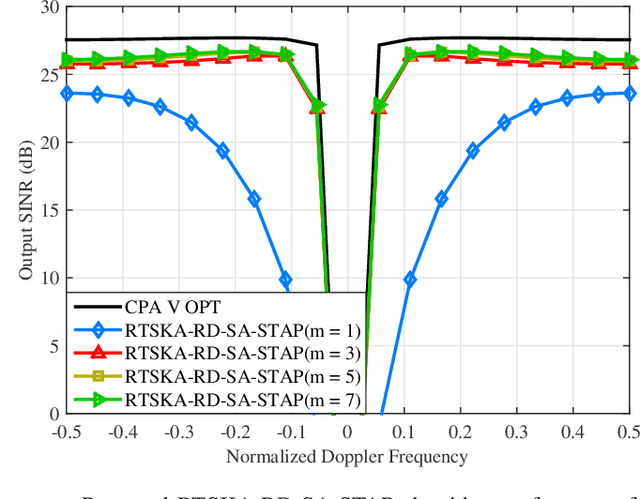

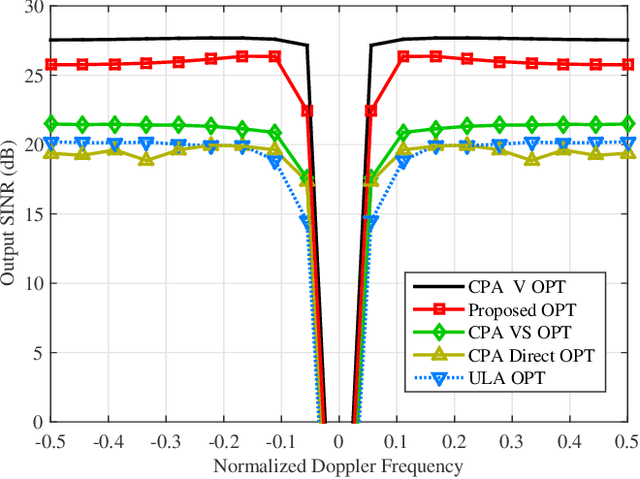

Study of Robust Two-Stage Reduced-Dimension Sparsity-Aware STAP with Coprime Arrays

Dec 23, 2019

Abstract:Space-time adaptive processing (STAP) algorithms with coprime arrays can provide good clutter suppression potential at low cost in airborne radar systems compared with their uniform linear array counterparts. However, the performance of these algorithms is limited by the training sample support in practical applications. To address this issue, a robust two-stage reduced-dimension (RD) sparsity-aware STAP algorithm is proposed in this work. In the first stage, an RD virtual snapshot is constructed using all spatial channels but only $m$ adjacent Doppler channels around the target Doppler frequency to reduce the slow-time dimension of the signal. In the second stage, an RD sparse measurement model is formulated based on the constructed RD virtual snapshot, where the sparsity of clutter and the prior knowledge of the clutter ridge are exploited to formulate an RD overcomplete dictionary. Moreover, an orthogonal matching pursuit (OMP)-like method is proposed to recover the clutter subspace. In order to set the stopping parameter of the OMP-like method, a robust clutter rank estimation approach is developed. Compared with recently developed sparsity-aware STAP algorithms, the size of the proposed sparse representation dictionary is much smaller, resulting in low complexity. Simulation results show that the proposed algorithm is robust to prior knowledge errors and can provide good clutter suppression performance under low sample support.
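For readers unfamiliar with orthogonal matching pursuit, the following is a minimal sketch of the textbook greedy procedure: pick the dictionary atom most correlated with the residual, orthogonalize it against the atoms already chosen, and remove its contribution from the residual. It is not the OMP-like method of the paper. It assumes real-valued vectors (radar snapshots are complex), a hypothetical four-atom dictionary, and a stopping parameter `k` that is simply given, whereas the paper estimates the clutter rank.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def omp_support(dictionary: List[Vector], y: Vector,
                k: int) -> Tuple[List[int], List[Vector], Vector]:
    """Greedy OMP: return selected atom indices, an orthonormal basis of
    their span, and the final residual. Stops after `k` atoms or when no
    remaining atom correlates with the residual."""
    residual = list(y)
    support: List[int] = []
    basis: List[Vector] = []
    for _ in range(k):
        best, best_score = None, 1e-12
        for j, atom in enumerate(dictionary):
            norm = math.sqrt(dot(atom, atom))
            if j in support or norm == 0.0:
                continue
            score = abs(dot(atom, residual)) / norm   # normalized correlation
            if score > best_score:
                best, best_score = j, score
        if best is None:
            break
        # Gram-Schmidt step: orthogonalize the chosen atom against the basis.
        q = list(dictionary[best])
        for b in basis:
            c = dot(b, q)
            q = [qi - c * bi for qi, bi in zip(q, b)]
        q_norm = math.sqrt(dot(q, q))
        if q_norm < 1e-12:
            break
        q = [qi / q_norm for qi in q]
        support.append(best)
        basis.append(q)
        # Project the residual onto the orthogonal complement of the span.
        c = dot(q, residual)
        residual = [ri - c * qi for ri, qi in zip(residual, q)]
    return support, basis, residual


# Hypothetical dictionary of four atoms in R^3 and a signal built from atoms 1 and 2.
atoms = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 0.0]]
signal = [0.0, 2.0, -5.0]
support, basis, residual = omp_support(atoms, signal, k=2)
```

The returned `basis` spans the subspace of the selected atoms, which is the quantity of interest when the goal is subspace recovery and not the coefficients themselves.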

The Language of Search

Oct 12, 2011

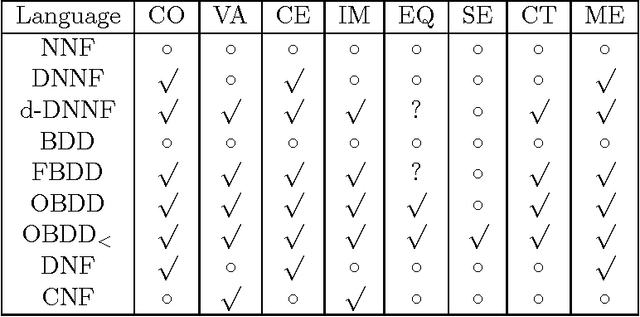

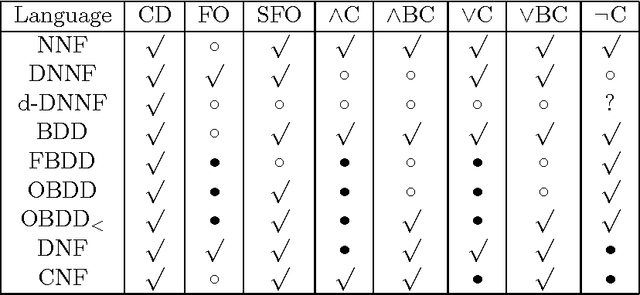

Abstract:This paper is concerned with a class of algorithms that perform exhaustive search on propositional knowledge bases. We show that each of these algorithms defines and generates a propositional language. Specifically, we show that the trace of a search can be interpreted as a combinational circuit, and a search algorithm then defines a propositional language consisting of circuits that are generated across all possible executions of the algorithm. In particular, we show that several versions of exhaustive DPLL search correspond to such well-known languages as FBDD, OBDD, and a precisely-defined subset of d-DNNF. By thus mapping search algorithms to propositional languages, we provide a uniform and practical framework in which successful search techniques can be harnessed for compilation of knowledge into various languages of interest, and a new methodology whereby the power and limitations of search algorithms can be understood by looking up the tractability and succinctness of the corresponding propositional languages.
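The central observation, that the trace of an exhaustive search can be read as a circuit, can be illustrated with a deliberately simplified sketch. It assumes a fixed variable order that covers every variable in the formula, no caching and no decomposition, so the recorded trace is an ordered decision tree and not one of the specific languages named in the abstract. Clauses are sets of signed integers (a common convention, not taken from the paper), and the node encoding `("ite", var, high, low)` is hypothetical.

```python
from typing import FrozenSet, List, Tuple, Union

Clause = FrozenSet[int]      # signed ints: 3 means x3, -3 means "not x3"
# A trace node is a leaf (True / False) or ("ite", var, high_branch, low_branch).
Node = Union[bool, Tuple[str, int, "Node", "Node"]]


def condition(cnf: List[Clause], lit: int) -> List[Clause]:
    """Simplify the CNF under the assumption that literal `lit` is true."""
    simplified = []
    for clause in cnf:
        if lit in clause:
            continue                          # clause satisfied: drop it
        simplified.append(clause - {-lit})    # remove the falsified literal
    return simplified


def exhaustive_dpll(cnf: List[Clause], order: List[int]) -> Node:
    """Explore both values of each variable in `order` and record the trace."""
    if any(len(clause) == 0 for clause in cnf):
        return False                          # empty clause: inconsistent branch
    if not cnf:
        return True                           # every clause satisfied
    var, rest = order[0], order[1:]
    high = exhaustive_dpll(condition(cnf, var), rest)
    low = exhaustive_dpll(condition(cnf, -var), rest)
    return ("ite", var, high, low)


def count_models(node: Node, n_free: int) -> int:
    """Count models from the trace; `n_free` is the number of undecided variables."""
    if node is True:
        return 2 ** n_free                    # remaining variables are unconstrained
    if node is False:
        return 0
    _, _, high, low = node
    return count_models(high, n_free - 1) + count_models(low, n_free - 1)


# (x1 or x2) and (not x1 or x3), searched in the order x1, x2, x3.
cnf = [frozenset({1, 2}), frozenset({-1, 3})]
trace = exhaustive_dpll(cnf, [1, 2, 3])
n_models = count_models(trace, 3)
```

Once the trace exists as a data structure, queries such as model counting run on the trace alone, without consulting the original clauses again.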
