J. Elisenda Grigsby

On Functional Dimension and Persistent Pseudodimension

Oct 22, 2024

Abstract: For any fixed feedforward ReLU neural network architecture, it is well-known that many different parameter settings can determine the same function. It is less well-known that the degree of this redundancy is inhomogeneous across parameter space. In this work, we discuss two locally applicable complexity measures for ReLU network classes and what we know about the relationship between them: (1) the local functional dimension [14, 18], and (2) a local version of VC dimension that we call persistent pseudodimension. The former is easy to compute on finite batches of points; the latter should give local bounds on the generalization gap, which would inform an understanding of the mechanics of the double descent phenomenon [7].

Hidden symmetries of ReLU networks

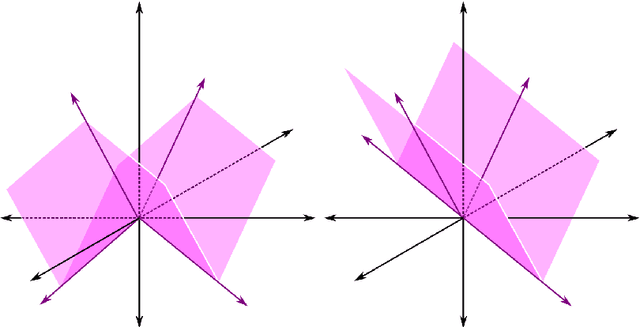

Jun 09, 2023

Abstract: The parameter space for any fixed architecture of feedforward ReLU neural networks serves as a proxy during training for the associated class of functions - but how faithful is this representation? It is known that many different parameter settings can determine the same function. Moreover, the degree of this redundancy is inhomogeneous: for some networks, the only symmetries are permutation of neurons in a layer and positive scaling of parameters at a neuron, while other networks admit additional hidden symmetries. In this work, we prove that, for any network architecture where no layer is narrower than the input, there exist parameter settings with no hidden symmetries. We also describe a number of mechanisms through which hidden symmetries can arise, and empirically approximate the functional dimension of different network architectures at initialization. These experiments indicate that the probability that a network has no hidden symmetries decreases towards 0 as depth increases, while increasing towards 1 as width and input dimension increase.
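The two symmetries named in this abstract, permutation of neurons in a layer and positive scaling at a neuron, can be checked numerically on a toy example. The sketch below is illustrative only and is not taken from the paper: it assumes a network with scalar input and one hidden layer, and the names `network`, `same_function`, and the specific parameter values are hypothetical. It also shows why the scaling must be positive: a negative factor changes the function.

```python
import math

def relu(z):
    return max(z, 0.0)

def network(x, hidden, out_bias):
    """One-hidden-layer ReLU network with scalar input.

    hidden is a list of (w, b, v) triples: incoming weight, bias,
    and outgoing weight of each hidden neuron (hypothetical layout).
    """
    return sum(v * relu(w * x + b) for w, b, v in hidden) + out_bias

def same_function(params_a, params_b, xs):
    """Compare two parameter settings on a finite set of sample points."""
    return all(
        math.isclose(network(x, *params_a), network(x, *params_b), abs_tol=1e-12)
        for x in xs
    )

xs = [-2.0, -1.0, 0.0, 0.5, 1.0, 3.0]
hidden = [(1.5, -0.5, 2.0), (-1.0, 0.25, 0.5)]
original = (hidden, 0.75)

# Positive scaling at the first neuron: multiply (w, b) by c > 0, divide v by c.
c = 4.0
w, b, v = hidden[0]
scaled = ([(c * w, c * b, v / c), hidden[1]], 0.75)

# Permutation: reorder the hidden neurons.
permuted = (list(reversed(hidden)), 0.75)

# Negative scaling is NOT a symmetry, because relu(-z) != -relu(z) in general.
negated = ([(-w, -b, -v), hidden[1]], 0.75)

scaled_ok = same_function(original, scaled, xs)
permuted_ok = same_function(original, permuted, xs)
negated_ok = same_function(original, negated, xs)

print(scaled_ok, permuted_ok, negated_ok)
```

Agreement on a finite sample is only evidence, not a proof, of functional equality; here the equalities for positive scaling and permutation also follow directly from the identity relu(c·z) = c·relu(z) for c > 0 and from commutativity of the sum.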

Functional dimension of feedforward ReLU neural networks

Sep 08, 2022

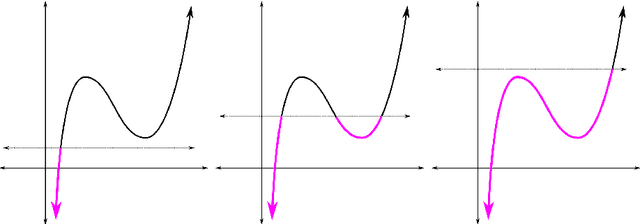

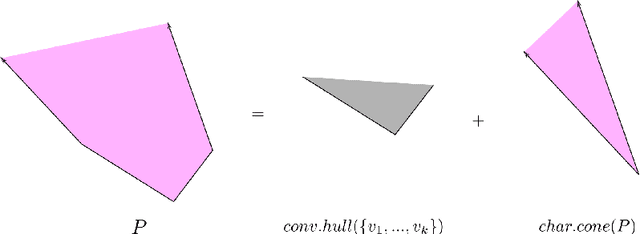

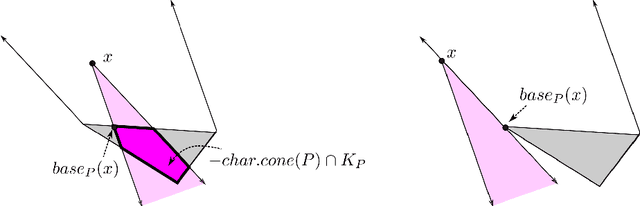

Abstract: It is well-known that the parameterized family of functions representable by fully-connected feedforward neural networks with ReLU activation function is precisely the class of piecewise linear functions with finitely many pieces. It is less well-known that for every fixed architecture of ReLU neural network, the parameter space admits positive-dimensional spaces of symmetries, and hence the local functional dimension near any given parameter is lower than the parametric dimension. In this work we carefully define the notion of functional dimension, show that it is inhomogeneous across the parameter space of ReLU neural network functions, and continue an investigation - initiated in [14] and [5] - into when the functional dimension achieves its theoretical maximum. We also study the quotient space and fibers of the realization map from parameter space to function space, supplying examples of fibers that are disconnected, fibers upon which functional dimension is non-constant, and fibers upon which the symmetry group acts non-transitively.
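As a rough illustration of the gap between functional and parametric dimension, the sketch below computes a batch version of functional dimension for a tiny network. It rests on assumptions not stated in the abstract: it takes the working definition to be the rank of the Jacobian of the network outputs on a finite batch with respect to the parameters, and it uses a hypothetical network f(x) = v·relu(w·x + b) + c with scalar input and one hidden neuron, so the parametric dimension is 4. The helper names `jacobian_row`, `rank`, and `batch_functional_dimension` are made up for this example.

```python
from fractions import Fraction

def jacobian_row(params, x):
    """Partial derivatives of f(x) = v*relu(w*x + b) + c w.r.t. (w, b, v, c)."""
    w, b, v, c = params
    pre = w * x + b
    active = 1 if pre > 0 else 0
    return [v * active * x, v * active, pre * active, 1]

def rank(rows):
    """Exact matrix rank via Gaussian elimination over the rationals."""
    m = [[Fraction(e) for e in row] for row in rows]
    r = 0
    ncols = len(m[0]) if m else 0
    for col in range(ncols):
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [a - factor * p for a, p in zip(m[i], m[r])]
        r += 1
    return r

def batch_functional_dimension(params, batch):
    return rank([jacobian_row(params, x) for x in batch])

batch = [-1, 1, 2, 3]

# Neuron active on part of the batch: rank 3, one less than the 4 parameters,
# reflecting the positive-scaling symmetry (the w and v columns coincide).
rank_generic = batch_functional_dimension((1, 0, 1, 0), batch)

# Neuron inactive on the whole batch: only the output bias c matters.
rank_dead = batch_functional_dimension((1, -10, 1, 0), batch)

print(rank_generic, rank_dead)  # prints "3 1"
```

The two parameter settings share an architecture yet give different ranks on the same batch, which is a small instance of the inhomogeneity the abstract describes. Exact rational arithmetic is used here to avoid the tolerance choices that a floating-point rank computation would need.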

Local and global topological complexity measures of ReLU neural network functions

Apr 12, 2022

Abstract: We apply a generalized piecewise-linear (PL) version of Morse theory due to Grunert-Kühnel-Rote to define and study new local and global notions of topological complexity for fully-connected feedforward ReLU neural network functions, F: R^n -> R. Along the way, we show how to construct, for each such F, a canonical polytopal complex K(F) and a deformation retract of the domain onto K(F), yielding a convenient compact model for performing calculations. We also give a combinatorial description of local complexity for depth 2 networks, and a construction showing that local complexity can be arbitrarily high.

On transversality of bent hyperplane arrangements and the topological expressiveness of ReLU neural networks

Aug 20, 2020

Abstract: Let F: R^n -> R be a feedforward ReLU neural network. It is well-known that for any choice of parameters, F is continuous and piecewise (affine) linear. We lay some foundations for a systematic investigation of how the architecture of F impacts the geometry and topology of its possible decision regions for binary classification tasks. Following the classical progression for smooth functions in differential topology, we first define the notion of a generic, transversal ReLU neural network and show that almost all ReLU networks are generic and transversal. We then define a partially-oriented linear 1-complex in the domain of F and identify properties of this complex that yield an obstruction to the existence of bounded connected components of a decision region. We use this obstruction to prove that a decision region of a generic, transversal ReLU network F: R^n -> R with a single hidden layer of dimension (n + 1) can have no more than one bounded connected component.
