Jérémy Lebreton

High performance Lunar landing simulations

Sep 17, 2024

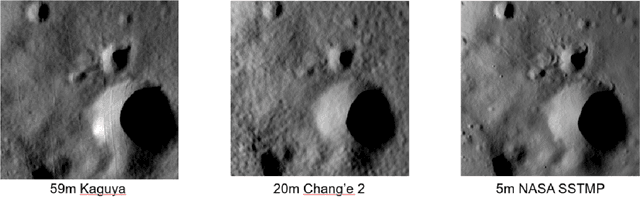

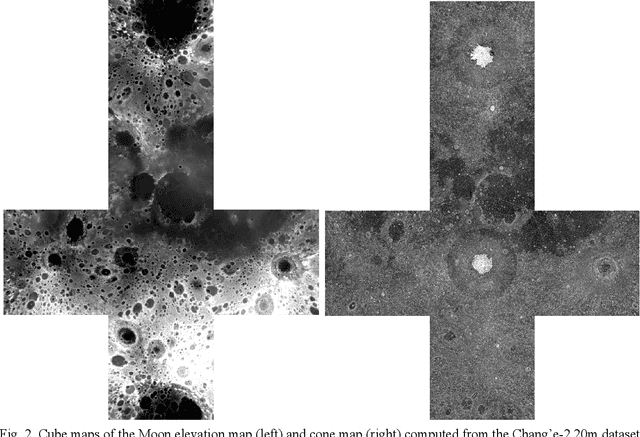

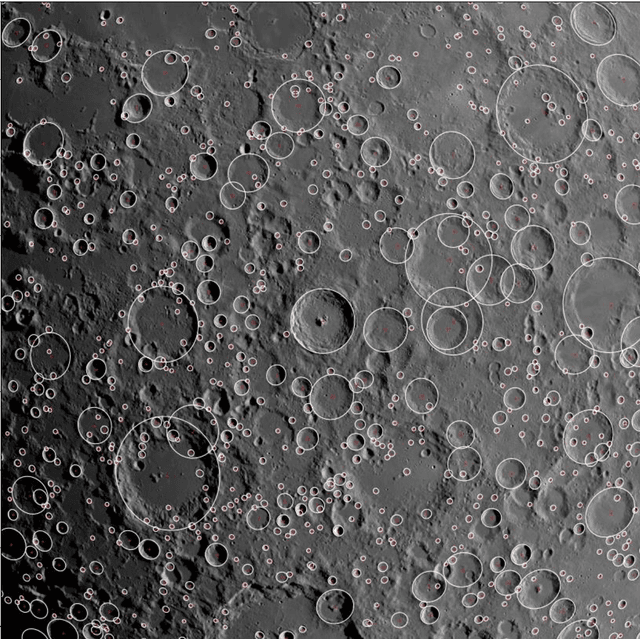

Abstract: Autonomous precision navigation for landing on the Moon relies on vision sensors. Computer vision algorithms are designed, trained and tested using synthetic simulations. High-quality terrain models have been produced by Moon orbiters developed by several nations, with resolutions ranging from tens or hundreds of meters globally down to a few meters locally. The SurRender software is a powerful simulator able to exploit the full potential of these datasets in raytracing. New interfaces include tools for fusing multi-resolution DEMs and for procedural texture generation. A global model of the Moon at 20 m resolution was integrated, representing several terabytes of data, which SurRender can render continuously and in real time. This simulator will be a valuable asset for the development of future missions.

Training Datasets Generation for Machine Learning: Application to Vision Based Navigation

Sep 17, 2024

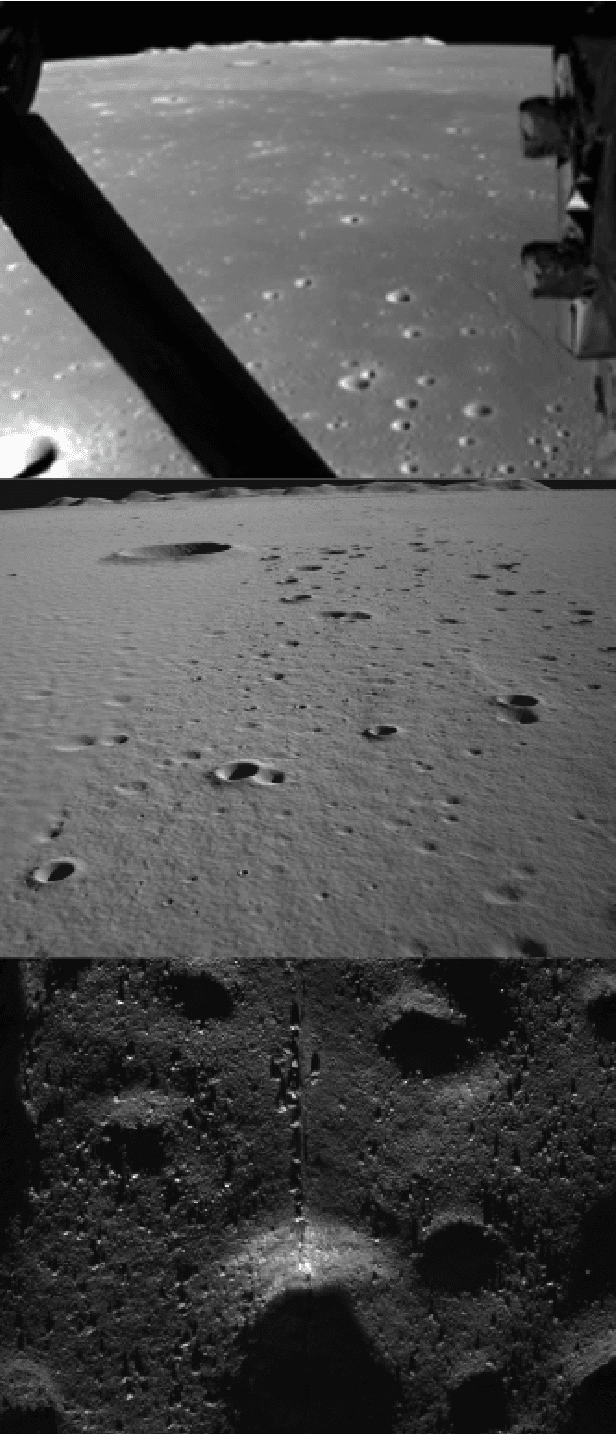

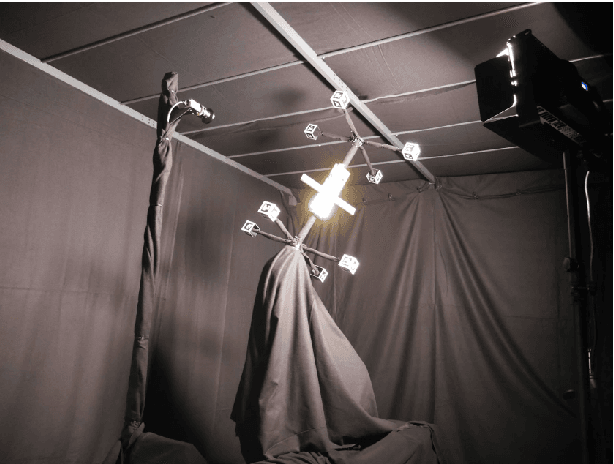

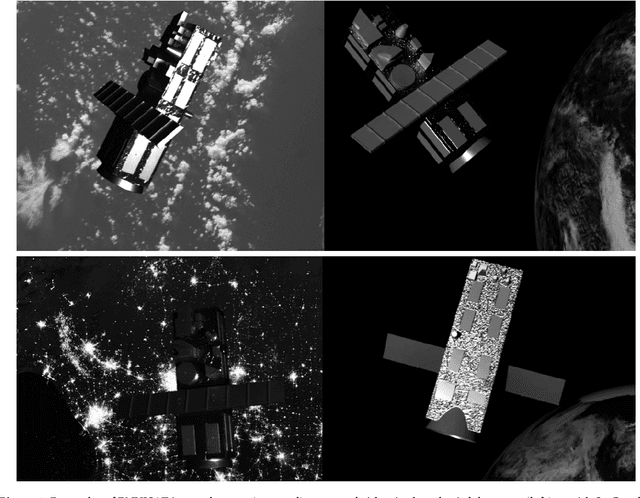

Abstract: Vision Based Navigation consists in utilizing cameras as precision sensors for GNC after extracting information from images. One of the obstacles to the adoption of machine learning for space applications is demonstrating that the available training datasets are adequate to validate the algorithms. The objective of the study is to generate datasets of images and metadata suitable for training machine learning algorithms. Two use cases were selected and a robust methodology was developed to validate the datasets, including the ground truth. The first use case is in-orbit rendezvous with a man-made object: a mockup of the ENVISAT satellite. The second use case is a Lunar landing scenario. Datasets were produced from archival datasets (Chang'e 3), from the laboratory at the DLR TRON facility and at the Airbus Robotic laboratory, from the SurRender high-fidelity image simulator using Model Capture, and from Generative Adversarial Networks. The use case definition included the selection of benchmark algorithms: an AI-based pose estimation algorithm and a dense optical flow algorithm were selected. Finally, it is demonstrated that datasets produced with SurRender and selected laboratory facilities are adequate to train machine learning algorithms.

Vision-Based Estimation of Small Body Rotational State during the Approach Phase

Feb 22, 2023

Abstract: The heterogeneity of the small body population complicates the prediction of the small body properties before the spacecraft's arrival. In the context of autonomous small body exploration, it is crucial to develop algorithms that estimate the small body characteristics before orbit insertion and close proximity operations. This paper develops a vision-based estimation of the small-body rotational state (i.e., the center of rotation and rotation axis direction) during the approach phase. In this mission phase, the spacecraft observes the celestial body rotating and tracks features in images. As feature tracks are the projection of landmarks' circular movement, the possible rotation axes are computed. Then, the rotation axis solution is chosen among the possible candidates by exploiting feature motion and a heuristic approach. Finally, the center of rotation is estimated from the center of brightness. The algorithm is tested on more than 800 test cases with two different asteroids (i.e., Bennu and Itokawa), three different lighting conditions, and more than 100 different rotation axis orientations. Results show that the rotation axis can be determined with limited error in most cases, implying that the proposed algorithm is a valuable method for autonomous small body characterization.
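The abstract does not say how the center of brightness is computed. A common definition is the intensity-weighted centroid of the image, and the minimal Python sketch below assumes that definition. The function name `center_of_brightness` and the synthetic frame are illustrative only and are not taken from the paper.

```python
import numpy as np


def center_of_brightness(image):
    """Return the intensity-weighted centroid (row, col) of a 2D image.

    Assumption: "center of brightness" means the first moment of the
    pixel intensities divided by their sum.
    """
    img = np.asarray(image, dtype=float)
    total = img.sum()
    if total <= 0:
        raise ValueError("image has no positive brightness")
    # Pixel coordinate grids with the same shape as the image.
    rows, cols = np.indices(img.shape)
    return (rows * img).sum() / total, (cols * img).sum() / total


# Synthetic frame: a uniformly lit 3x3 patch on a dark background.
frame = np.zeros((5, 7))
frame[1:4, 2:5] = 1.0
cob = center_of_brightness(frame)
```

For a uniformly lit, symmetric patch like this one, the centroid coincides with the geometric center of the patch. On a real small body under oblique lighting, the centroid shifts toward the illuminated side.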

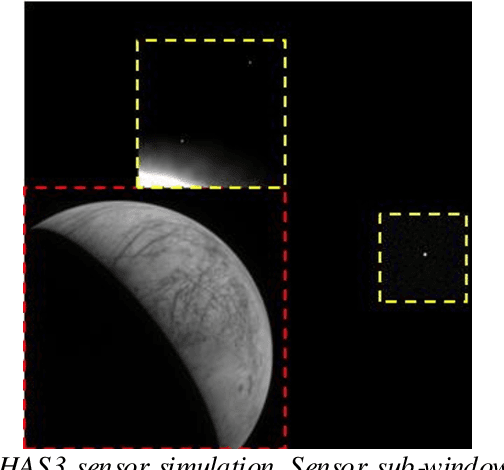

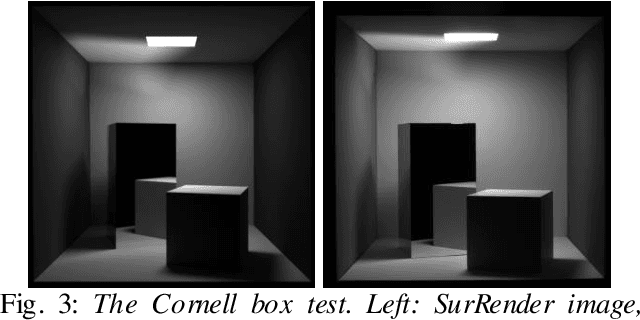

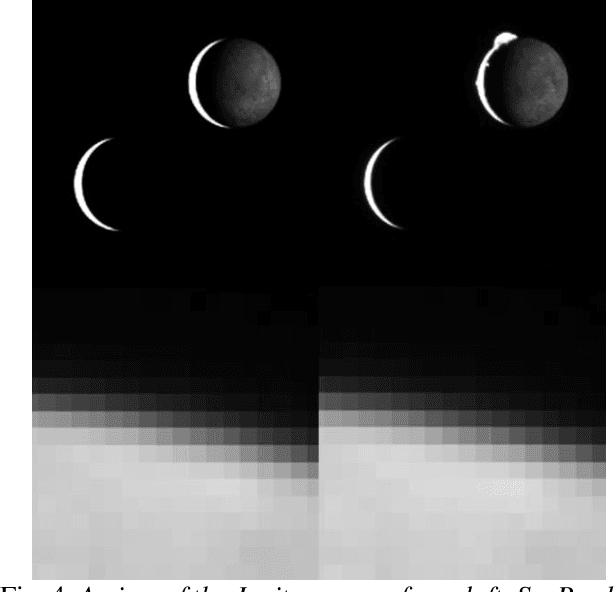

Image simulation for space applications with the SurRender software

Jun 21, 2021

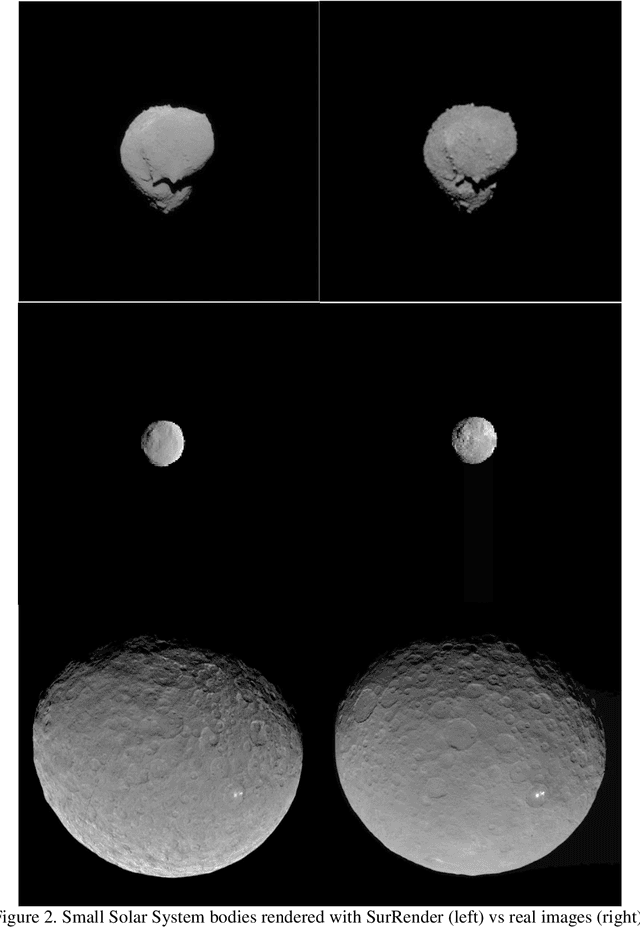

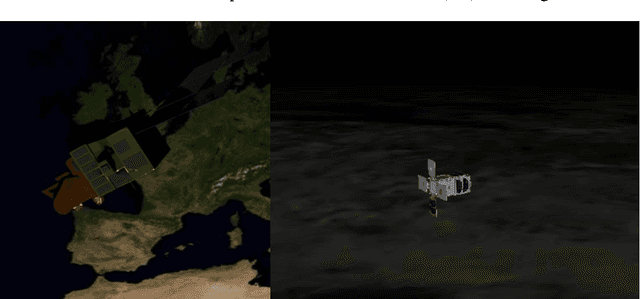

Abstract: Image Processing algorithms for vision-based navigation require reliable image simulation capacities. In this paper we explain why traditional rendering engines may present limitations that are potentially critical for space applications. We introduce Airbus SurRender software v7 and provide details on features that make it a very powerful space image simulator. We show how SurRender is at the heart of the development processes of our computer vision solutions and we provide a series of illustrations of rendered images for various use cases ranging from Moon and Solar System exploration to in-orbit rendezvous and planetary robotics.

Scientific image rendering for space scenes with the SurRender software

Oct 02, 2018

Abstract: Spacecraft autonomy can be enhanced by vision-based navigation (VBN) techniques. Applications range from manoeuvres around Solar System objects and landing on planetary surfaces to in-orbit servicing or space debris removal. The development and validation of VBN algorithms rely on the availability of physically accurate, relevant images. Yet archival data from past missions can rarely serve this purpose and acquiring new data is often costly. The SurRender software is an image simulator that addresses the challenges of realistic image rendering, with high representativeness for space scenes. Images are rendered by raytracing, which implements the physical principles of geometrical light propagation, in physical units. A macroscopic instrument model and scene object reflectance functions are used. SurRender is specially optimized for space scenes, with huge distances between objects and scenes up to Solar System size. Raytracing conveniently tackles some important effects for VBN algorithms: image quality, eclipses, secondary illumination, subpixel limb imaging, etc. A simulation is easily set up (in MATLAB, Python, and more) by specifying the position of the bodies (camera, Sun, planets, satellites) over time, 3D shapes and material surface properties. SurRender comes with its own modelling tool, enabling users to go beyond existing models for shapes, materials and sensors (projection, temporal sampling, electronics, etc.). It is natively designed to simulate different kinds of sensors (visible, LIDAR, etc.). Tools are available for manipulating huge datasets to store albedo maps and digital elevation models, or for procedural (fractal) texturing that generates high-quality images for a large range of observing distances (from millions of km to touchdown). We illustrate SurRender's performance with a selection of case studies, placing particular emphasis on a 900-km Moon flyby simulation.
