
Ivana Roenzweig

Goldilocks Neural Networks

Feb 26, 2020

Figures and Tables:

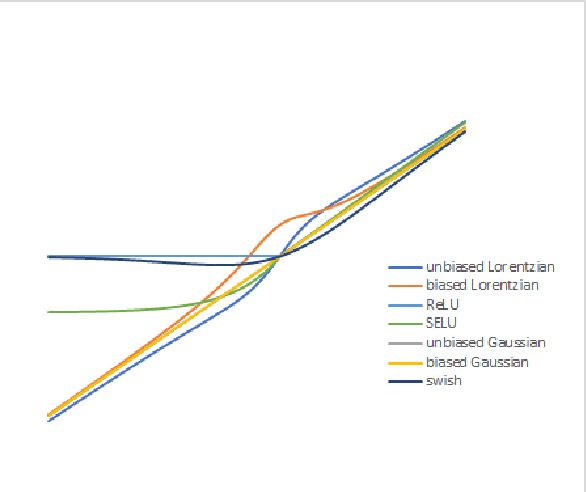

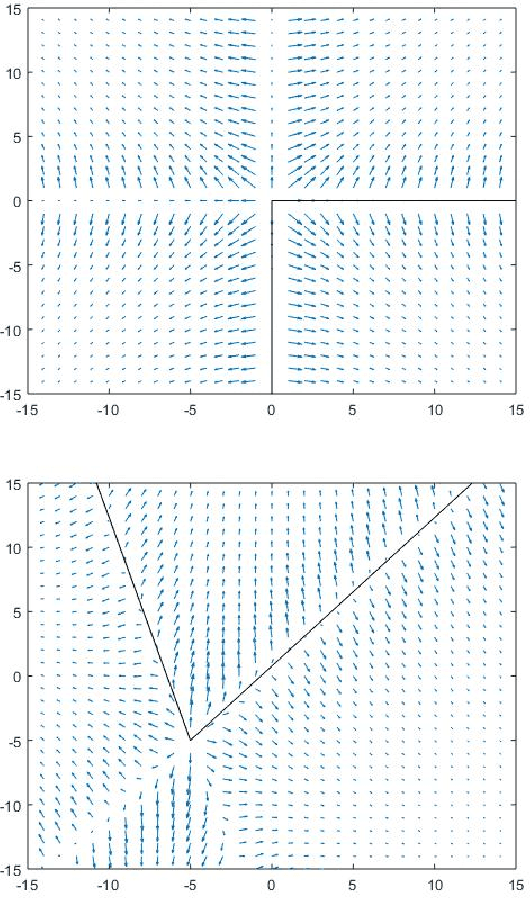

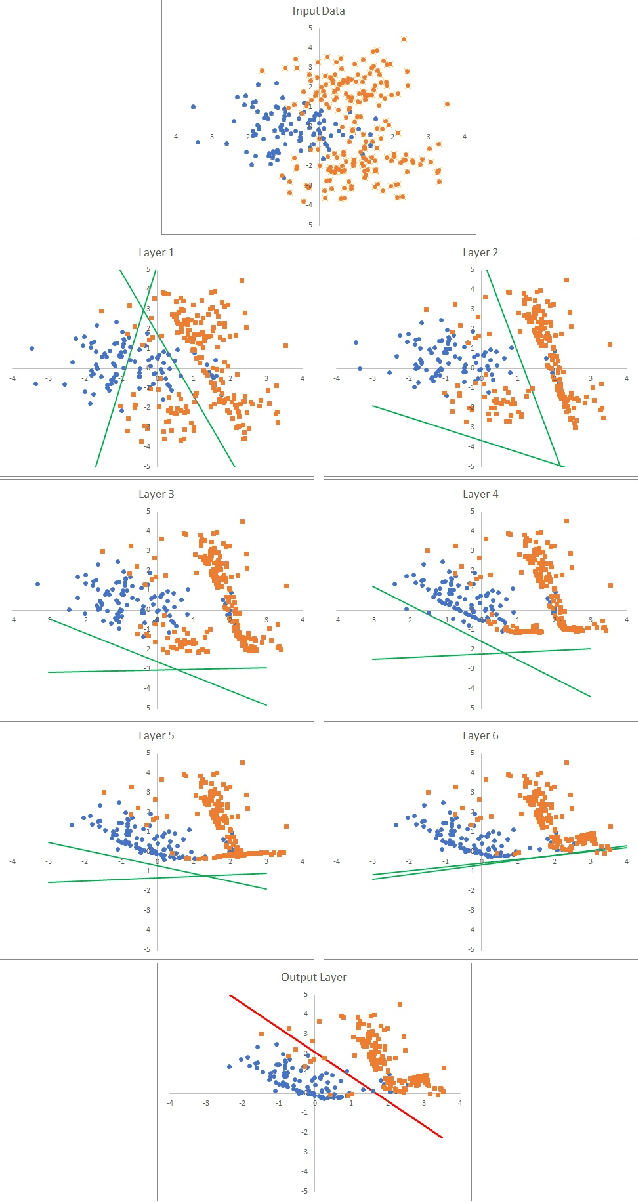

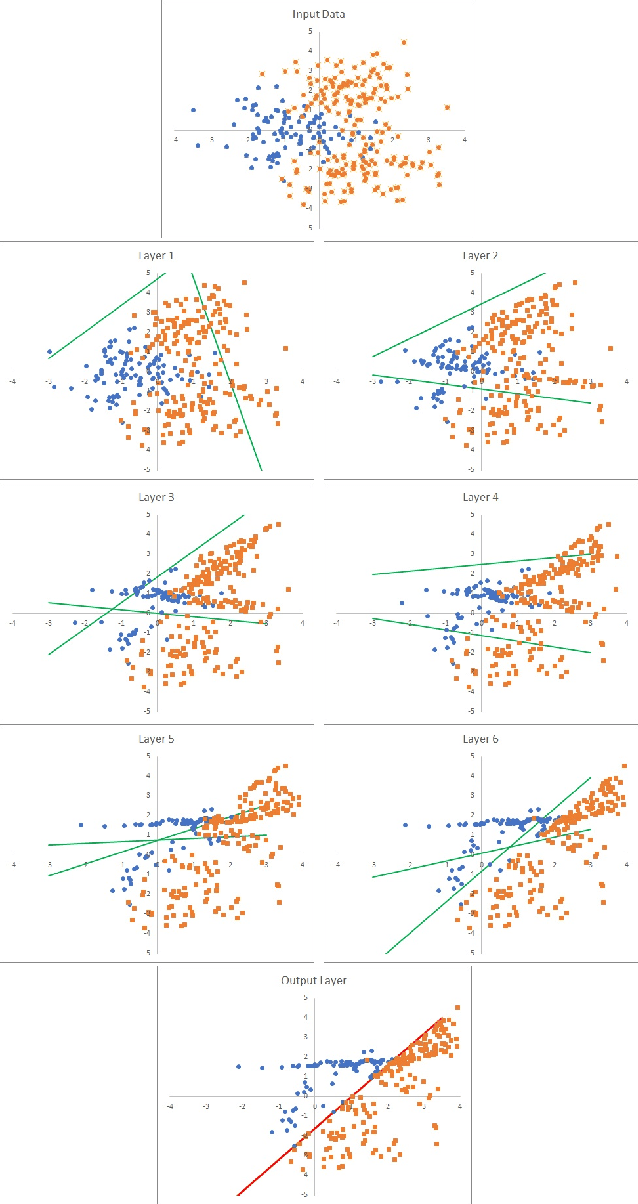

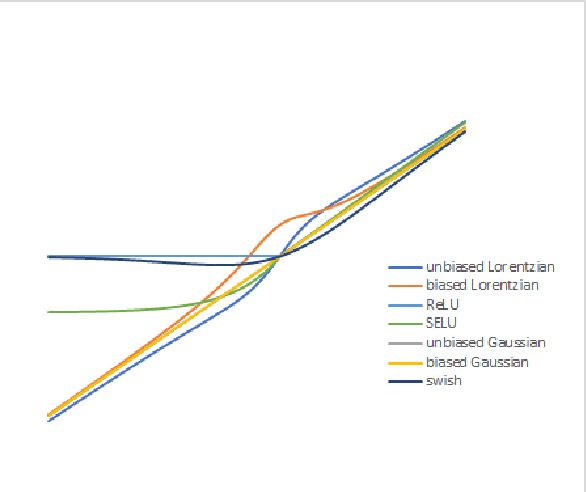

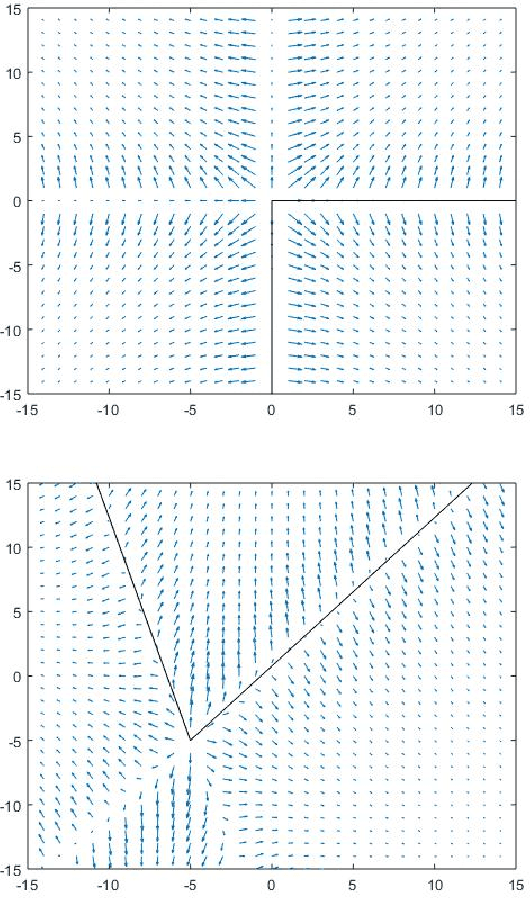

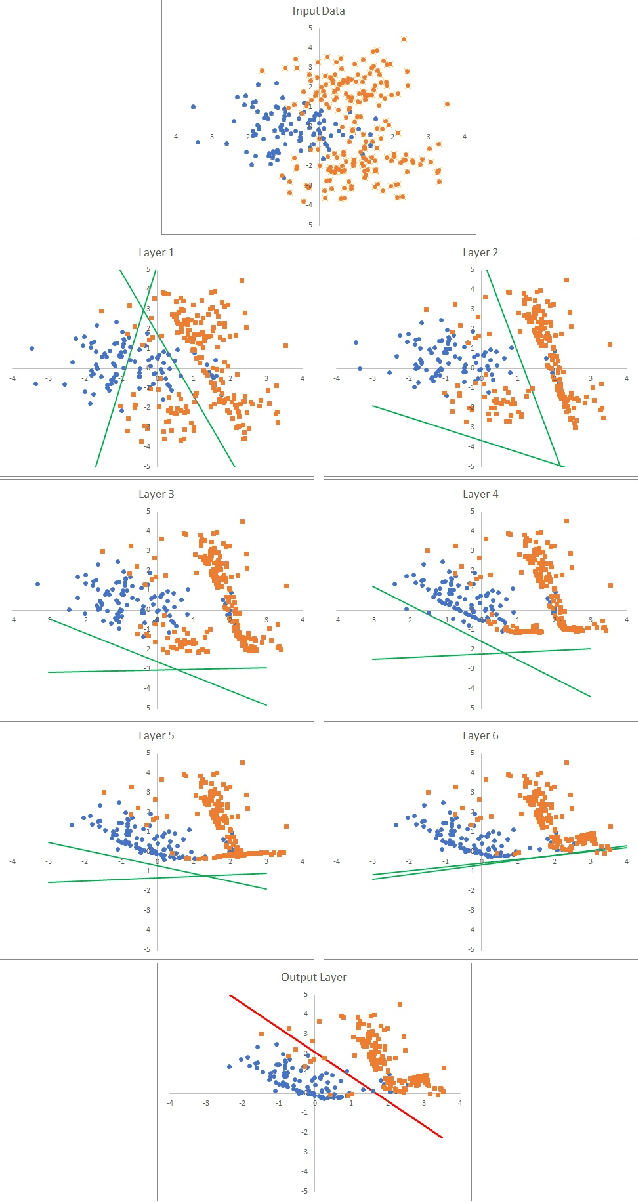

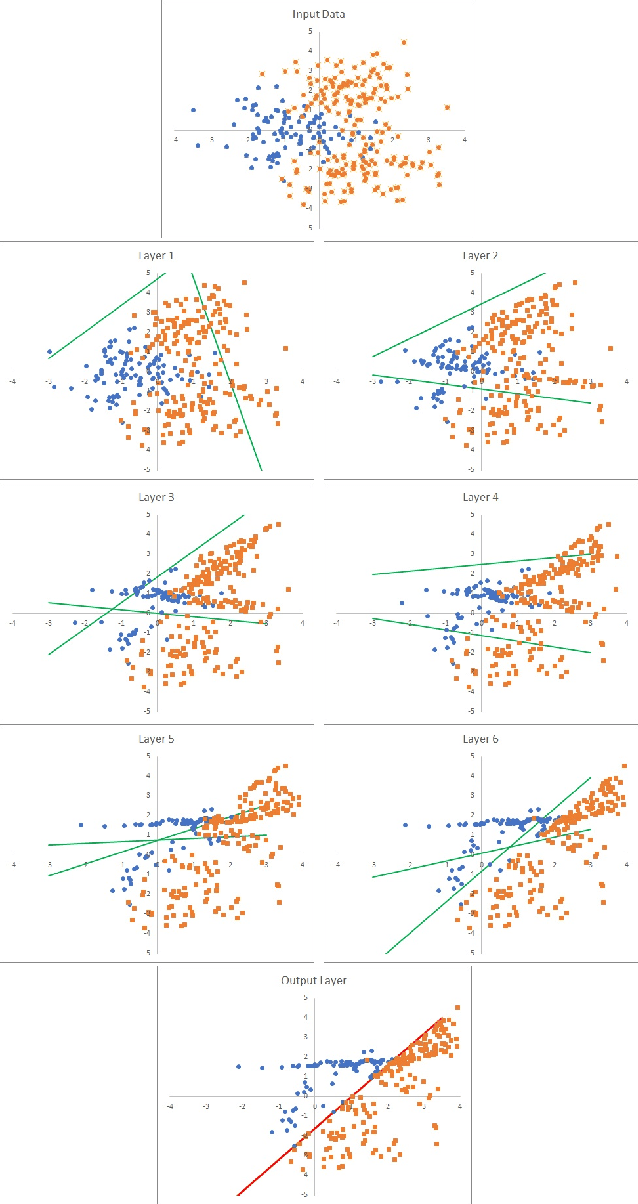

Abstract: We introduce the new "Goldilocks" class of activation functions, which non-linearly deform the input signal only locally, when the input signal is in the appropriate range. Because the deformation is small and local, it is easier to understand how and why the signal is transformed through the layers. Numerical results on the CIFAR-10 and CIFAR-100 data sets show that Goldilocks networks perform better than, or comparably to, SELU and ReLU networks, while making the deformation of data through the layers tractable.
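The abstract does not give the functional form of the Goldilocks activations, so the following is only an illustrative sketch of the general idea of a "local" deformation: an activation that is close to the identity everywhere except in a bounded range of inputs. The function name `local_bump_activation`, the Gaussian bump, and the parameters `center`, `width`, and `strength` are all assumptions made for illustration; they are not taken from the paper.

```python
import math


def local_bump_activation(x, center=0.0, width=1.0, strength=0.5):
    """Identity plus a Gaussian bump (hypothetical form, NOT the paper's definition).

    Inputs near `center` are shifted by up to `strength`; inputs far from
    `center` pass through almost unchanged, so the deformation is local.
    """
    z = (x - center) / width
    return x + strength * math.exp(-z * z)


if __name__ == "__main__":
    # Compare the activation with the identity over a range of inputs.
    for x in (-10.0, -1.0, 0.0, 1.0, 10.0):
        y = local_bump_activation(x)
        print(f"x={x:6.1f}  f(x)={y:10.6f}  deformation={y - x:.6f}")
```

In this sketch the deformation `f(x) - x` peaks at `center` and decays rapidly with distance, in contrast to ReLU, which alters every negative input regardless of magnitude.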
