Irshad A. Meer

Explainable AI for UAV Mobility Management: A Deep Q-Network Approach for Handover Minimization

Apr 25, 2025

Abstract: The integration of unmanned aerial vehicles (UAVs) into cellular networks presents significant mobility management challenges, primarily due to frequent handovers caused by probabilistic line-of-sight conditions with multiple ground base stations (BSs). To tackle these challenges, reinforcement learning (RL)-based methods, particularly deep Q-networks (DQN), have been employed to optimize handover decisions dynamically. However, a major drawback of these learning-based approaches is their black-box nature, which limits interpretability in the decision-making process. This paper introduces an explainable AI (XAI) framework that incorporates Shapley Additive Explanations (SHAP) to provide deeper insights into how various state parameters influence handover decisions in a DQN-based mobility management system. By quantifying the impact of key features such as reference signal received power (RSRP), reference signal received quality (RSRQ), buffer status, and UAV position, our approach enhances the interpretability and reliability of RL-based handover solutions. To validate and compare our framework, we utilize real-world network performance data collected from UAV flight trials. Simulation results show that our method provides intuitive explanations for policy decisions, effectively bridging the gap between AI-driven models and human decision-makers.
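To make the attribution idea in this abstract concrete, here is a minimal sketch of exact Shapley values computed for a single Q-value. Everything in it is an assumption for illustration: `toy_q_value` is a hypothetical stand-in for one output of a trained DQN, the coefficients are invented, and the all-zero baseline is an arbitrary reference state. The paper itself uses SHAP, which approximates these quantities rather than enumerating every feature subset as done here.

```python
from itertools import combinations
from math import factorial

# Feature names taken from the abstract; the values used below are made up.
FEATURES = ["rsrp", "rsrq", "buffer", "position"]


def toy_q_value(state):
    """Hypothetical stand-in for one DQN output (not the paper's network)."""
    return (
        -0.5 * state["rsrp"]
        - 0.3 * state["rsrq"]
        + 0.2 * state["buffer"]
        + 0.1 * state["rsrp"] * state["position"]  # one interaction term
    )


def shapley_values(f, state, baseline):
    """Exact Shapley attribution of f(state) - f(baseline) to each feature.

    Features outside the coalition are replaced by their baseline value.
    The cost is exponential in the number of features, which is fine for four.
    """
    n = len(FEATURES)
    phi = {}
    for i in FEATURES:
        others = [j for j in FEATURES if j != i]
        total = 0.0
        for k in range(n):
            # Weight of a coalition of size k in the Shapley formula.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                with_i = {
                    j: state[j] if (j in subset or j == i) else baseline[j]
                    for j in FEATURES
                }
                without_i = {
                    j: state[j] if j in subset else baseline[j]
                    for j in FEATURES
                }
                total += weight * (f(with_i) - f(without_i))
        phi[i] = total
    return phi


state = {"rsrp": 1.0, "rsrq": 2.0, "buffer": 3.0, "position": 4.0}
baseline = {name: 0.0 for name in FEATURES}
phi = shapley_values(toy_q_value, state, baseline)
```

The attributions sum to the difference between the Q-value at `state` and at `baseline`, and the interaction term is split evenly between `rsrp` and `position`. This additivity is what lets a per-feature breakdown be read as an explanation of a single handover decision.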

Learning Based Dynamic Cluster Reconfiguration for UAV Mobility Management with 3D Beamforming

Jan 31, 2024

Abstract: In modern cell-less wireless networks, mobility management is undergoing a significant transformation, transitioning from single-link handover management to a more adaptable multi-connectivity cluster reconfiguration approach, including often conflicting objectives like energy-efficient power allocation and satisfying varying reliability requirements. In this work, we address the challenge of dynamic clustering and power allocation for unmanned aerial vehicle (UAV) communication in wireless interference networks. Our objective encompasses meeting varying reliability demands, minimizing power consumption, and reducing the frequency of cluster reconfiguration. To achieve these objectives, we introduce a novel approach based on reinforcement learning using a masked soft actor-critic algorithm, specifically tailored for dynamic clustering and power allocation.
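The abstract does not say how the mask enters the soft actor-critic algorithm. One common way to mask a discrete policy, shown here purely as an illustrative sketch, is to set the logits of disallowed actions to negative infinity before the softmax so that they receive zero probability. The function name `masked_softmax` and the example logits are hypothetical and are not taken from the paper.

```python
import math


def masked_softmax(logits, mask):
    """Softmax over allowed actions only; masked-out actions get probability 0.

    `mask[i]` is True when action i is allowed (an assumed convention).
    """
    masked = [l if allowed else -math.inf for l, allowed in zip(logits, mask)]
    peak = max(masked)
    if peak == -math.inf:
        raise ValueError("at least one action must be allowed")
    # Subtract the peak for numerical stability; exp(-inf) evaluates to 0.0.
    exps = [math.exp(l - peak) for l in masked]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical example: three candidate actions, the second one disallowed.
probs = masked_softmax([1.0, 2.0, 3.0], [True, False, True])
```

Because the masked action has exactly zero probability, it is never sampled, and the remaining probabilities still sum to one.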

Reinforcement Learning Based Dynamic Power Control for UAV Mobility Management

Dec 07, 2023

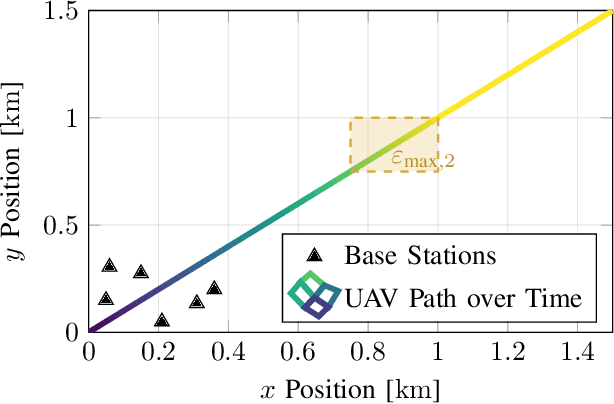

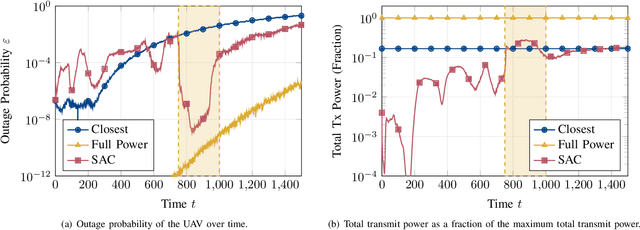

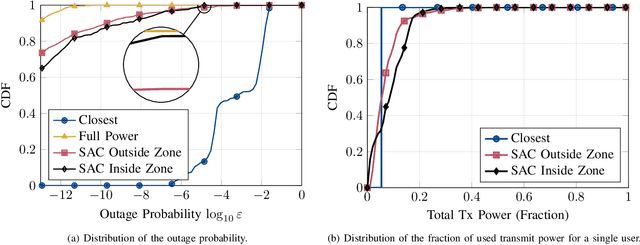

Abstract: Modern communication systems need to fulfill multiple and often conflicting objectives at the same time. In particular, new applications require high reliability while operating at low transmit powers. Moreover, reliability constraints may vary over time depending on the current state of the system. One solution to address this problem is to use joint transmissions from a number of base stations (BSs) to meet the reliability requirements. However, this approach is inefficient when considering the overall total transmit power. In this work, we propose a reinforcement learning-based power allocation scheme for an unmanned aerial vehicle (UAV) communication system with varying communication reliability requirements. In particular, the proposed scheme aims to minimize the total transmit power of all BSs while achieving an outage probability that is less than a tolerated threshold. This threshold varies over time, e.g., when the UAV enters a critical zone with high-reliability requirements. Our results show that the proposed learning scheme uses dynamic power allocation to meet varying reliability requirements, thus effectively conserving energy.
