Ildebrando De Courten

Probabilistic Tracking with Deep Factors

Dec 02, 2021

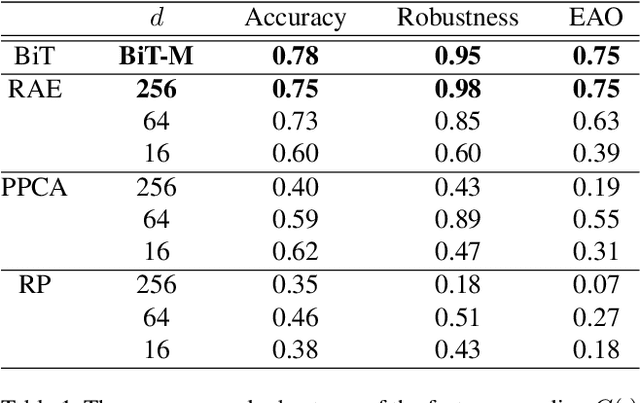

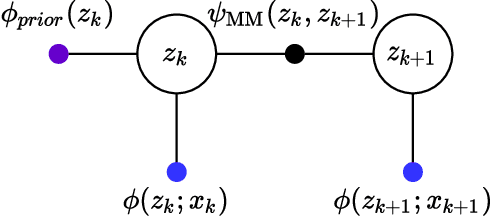

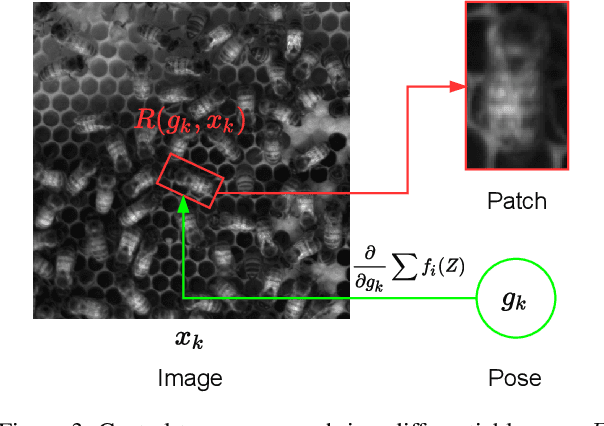

Abstract: In many computer vision applications it is important to accurately estimate the trajectory of an object over time by fusing data from a number of sources, of which 2D and 3D imagery is only one. In this paper, we show how to use a deep feature encoding in conjunction with generative densities over the features in a factor-graph-based probabilistic tracking framework. We present a likelihood model that combines a learned feature encoder with generative densities over the encoded features, both trained in a supervised manner. We also experiment with directly inferring probability through image classification models that feed into the likelihood formulation. These models are used to implement deep factors that are added to the factor graph to complement other factors representing domain-specific knowledge, such as motion models and/or other prior information. All factors are then optimized jointly in a non-linear least-squares tracking framework that takes the form of an Extended Kalman Smoother with a Gaussian prior. A key feature of our likelihood model is that it leverages the Lie group properties of the tracked target's pose to apply the feature encoding to an image patch, extracted through a differentiable warp function inspired by spatial transformer networks. To illustrate the proposed approach, we evaluate it on a challenging social insect behavior dataset and show that using deep features outperforms the earlier linear appearance models used in this setting.
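As a rough illustration of the pose-dependent patch extraction mentioned in the abstract, the sketch below samples an image patch at a planar pose using bilinear interpolation. It is not the authors' implementation. The function name `extract_patch`, the choice of a planar SE(2) pose `(x, y, theta)`, the patch size, and the border clamping are all assumptions made for the example. Bilinear sampling is the mechanism that spatial transformer networks use to keep a warp differentiable with respect to its parameters. This NumPy version shows only the forward pass, so an autodiff framework would be needed to obtain gradients.

```python
import numpy as np

def extract_patch(image, x, y, theta, size):
    """Sample a size x size patch centred at (x, y) and rotated by theta.

    Hypothetical helper: the pose is assumed to be an SE(2) element
    (translation x, y in pixels and rotation theta in radians).
    """
    half = (size - 1) / 2.0
    # Patch-frame coordinates centred on the origin (u: columns, v: rows).
    u, v = np.meshgrid(np.arange(size) - half, np.arange(size) - half)
    c, s = np.cos(theta), np.sin(theta)
    # Apply the pose: rotate the grid, then translate into image coordinates.
    px = c * u - s * v + x
    py = s * u + c * v + y
    # Clamp sample locations to the image border (an assumed boundary policy).
    h, w = image.shape
    px = np.clip(px, 0, w - 1)
    py = np.clip(py, 0, h - 1)
    # Bilinear interpolation between the four neighbouring pixels.
    x0 = np.floor(px).astype(int)
    y0 = np.floor(py).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    ax = px - x0
    ay = py - y0
    top = (1 - ax) * image[y0, x0] + ax * image[y0, x1]
    bottom = (1 - ax) * image[y1, x0] + ax * image[y1, x1]
    return (1 - ay) * top + ay * bottom

# Usage: with zero rotation the patch is a plain crop around (x, y).
image = np.arange(100, dtype=float).reshape(10, 10)
patch = extract_patch(image, x=4.0, y=5.0, theta=0.0, size=3)
rotated = extract_patch(image, x=4.0, y=5.0, theta=np.pi / 2, size=3)
```

In a tracking setting, a patch like this would be passed to the feature encoder, so that the likelihood becomes a function of the pose through the sampling grid.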
