Hyungki Kim

Method for the generation of depth images for view-based shape retrieval of 3D CAD model from partial point cloud

Jun 30, 2020

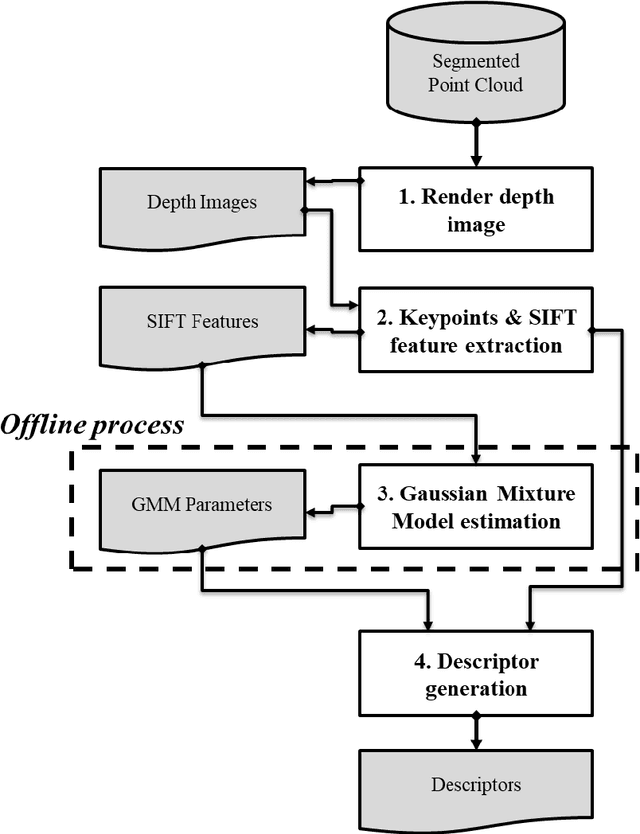

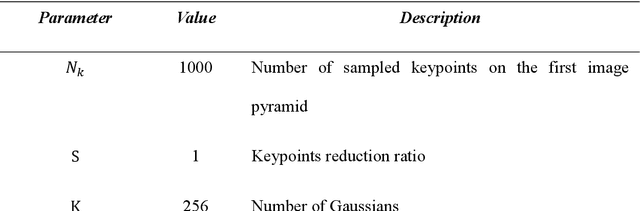

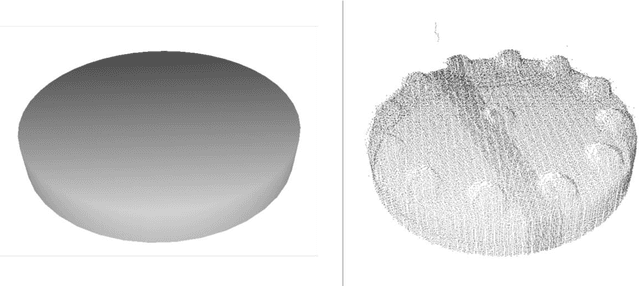

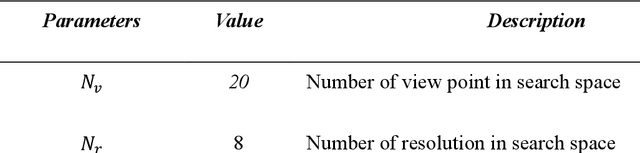

Abstract: A laser scanner can easily acquire the geometric data of a physical environment in the form of a point cloud. Industrial 3D reconstruction often requires recognizing objects in the point cloud, because the reconstructed model should include semantic information as well as geometry. However, the recognition process is often a bottleneck in 3D reconstruction because it requires domain expertise and intensive labor. To address this problem, various methods have been developed that recognize objects by retrieving the corresponding model from a database given an input geometry query. In recent years, converting geometric data into images and applying view-based 3D shape retrieval has demonstrated high accuracy. Depth images, which encode depth values as pixel intensities, are frequently used for view-based 3D shape retrieval. However, geometric data collected from objects is often incomplete because of occlusions and line-of-sight limits. Images generated from occluded point clouds lower the performance of view-based 3D object retrieval because information is lost. In this paper, we propose a viewpoint and image resolution estimation method for view-based 3D shape retrieval from a point cloud query. The method automatically selects the viewpoint and image resolution by calculating the data acquisition rate and density over sampled viewpoints and image resolutions. The retrieval performance of images generated by the proposed method is evaluated and compared on various datasets. Additionally, the view-based 3D shape retrieval performance of a deep convolutional neural network is evaluated with the proposed method.
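To make the idea of a depth image concrete, the following is a minimal sketch, not the paper's implementation. It assumes an orthographic camera looking along the z axis and a square image. The function names `depth_image` and `fill_ratio` are hypothetical, and the fill ratio (fraction of occupied pixels) is only a simple stand-in for the kind of density measure the abstract mentions.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_image(points: List[Point], resolution: int) -> List[List[int]]:
    """Render an orthographic depth image of a point cloud.

    Assumption: the camera looks along the z axis, and points with larger z
    are nearer to it. Empty pixels are 0; occupied pixels are 1..255, with
    nearer points brighter.
    """
    if not points or resolution < 1:
        raise ValueError("need at least one point and a positive resolution")

    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    zs = [p[2] for p in points]
    x_min, y_min, z_min = min(xs), min(ys), min(zs)

    # One shared scale for x and y keeps the aspect ratio of the object.
    extent = max(max(xs) - x_min, max(ys) - y_min) or 1.0
    z_range = (max(zs) - z_min) or 1.0

    image = [[0] * resolution for _ in range(resolution)]
    for x, y, z in points:
        col = min(int((x - x_min) / extent * resolution), resolution - 1)
        row = min(int((y - y_min) / extent * resolution), resolution - 1)
        intensity = 1 + round((z - z_min) / z_range * 254)
        # Z-buffer: keep only the point nearest to the camera in each pixel.
        image[row][col] = max(image[row][col], intensity)
    return image


def fill_ratio(image: List[List[int]]) -> float:
    """Fraction of pixels hit by at least one point (hypothetical measure)."""
    occupied = sum(1 for row in image for value in row if value > 0)
    return occupied / (len(image) * len(image[0]))
```

Rendering the same cloud at several resolutions and comparing `fill_ratio` values shows the trade-off the abstract alludes to: a resolution that is too high for a sparse cloud leaves most pixels empty, while one that is too low discards shape detail.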

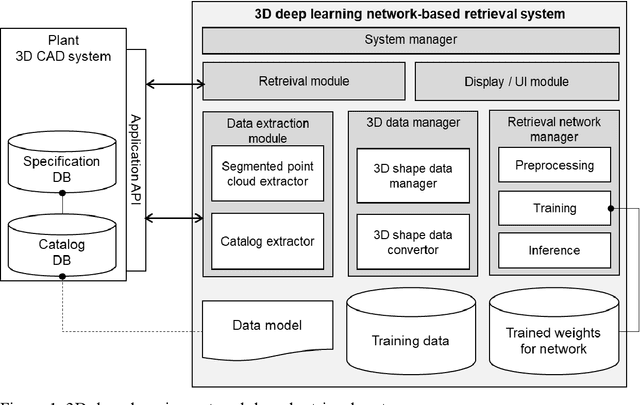

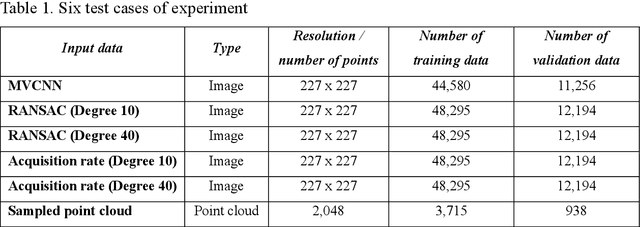

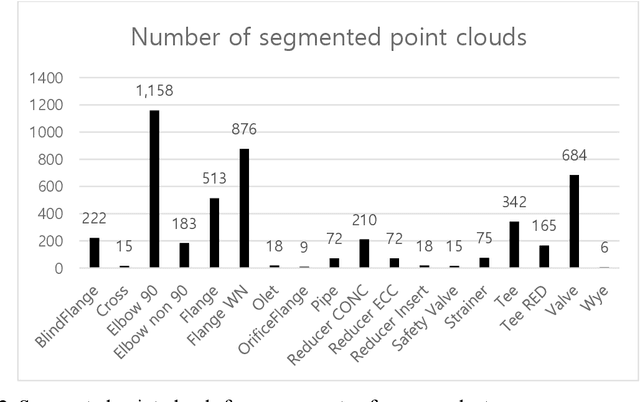

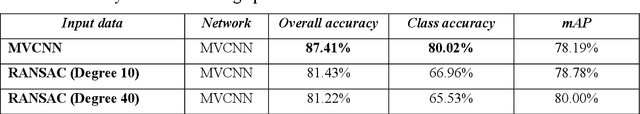

Deep-learning-based classification and retrieval of components of a process plant from segmented point clouds

Dec 13, 2019

Abstract: Reconstructing an as-built model of a process plant from laser scans requires technology that recognizes the type of component represented by a point cloud. The reconstruction process is divided into point cloud registration, point cloud segmentation, and component type recognition and placement. Point cloud data collected from large-scale facilities generally suffers from loss of shape data or imbalanced point density. In this study, we tested the feasibility of applying object recognition based on 3D deep learning networks, which have recently shown high performance, and analyzed the results. For training data, we used a repository of segmented point clouds of components, which we constructed by scanning a process plant. For networks, we selected the multi-view convolutional neural network (MVCNN), a view-based method, and PointNet, which is designed to take point cloud data directly as input. For the MVCNN, we also experimented with generating two types of multi-view images that can compensate for shape occlusion in the segmented point cloud. In this experiment, the MVCNN showed the highest retrieval accuracy, approximately 87%, whereas PointNet showed the highest retrieval mean average precision, approximately 84%. Furthermore, both networks showed high recognition performance on the segmented point clouds of plant components when sufficient training data was available.
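For readers unfamiliar with the retrieval metric reported above, the following is a minimal sketch of the standard definition of mean average precision. It is not necessarily the exact evaluation protocol of the study. It assumes each query yields a ranked list that covers every relevant item in the database, and the function names are hypothetical.

```python
from typing import List


def average_precision(relevance: List[bool]) -> float:
    """Average precision for one query.

    `relevance[i]` is True when the item at rank i + 1 belongs to the same
    class as the query. Precision is sampled at each relevant rank.
    """
    hits = 0
    precision_sum = 0.0
    for rank, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def mean_average_precision(queries: List[List[bool]]) -> float:
    """Mean of the per-query average precision values."""
    if not queries:
        return 0.0
    return sum(average_precision(q) for q in queries) / len(queries)
```

Unlike top-1 retrieval accuracy, this metric rewards ranking all relevant models early, which is one reason two networks can each lead on a different measure.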
