Hubert Etienne

Listen to what they say: Better understand and detect online misinformation with user feedback

Oct 31, 2022

Abstract: Social media users who report content are key allies in the management of online misinformation; however, no research has yet been conducted to understand their role and the different trends underlying their reporting activity. We suggest an original approach to studying misinformation: examining it from the reporting users' perspective at the content level and comparatively across regions and platforms. We propose the first classification of reported content pieces, resulting from a review of c. 9,000 items reported on Facebook and Instagram in France, the UK, and the US in June 2020. This allows us to observe meaningful distinctions in reported content between countries and platforms, as it varies significantly in volume, type, topic, and manipulation technique. Examining six of these techniques, we identify a novel one that is specific to Instagram US and significantly more sophisticated than the others, potentially presenting a concrete challenge for algorithmic detection and human moderation. We also identify four reporting behaviours, from which we derive four types of noise capable of explaining half of the inaccuracy found in content reported as misinformation. Finally, we show that breaking down the user reporting signal into a plurality of behaviours makes it possible to train a simple yet competitive classifier on a small dataset, using a combination of basic user reports, to classify the different types of reported content pieces.

Confucius, Cyberpunk and Mr. Science: Comparing AI ethics between China and the EU

Nov 15, 2021

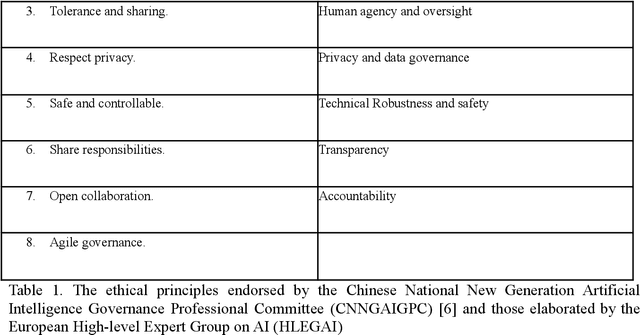

Abstract: The exponential development and application of artificial intelligence has triggered unprecedented global concern about potential social and ethical issues. Stakeholders from different industries, international foundations, governmental organisations and standards institutions quickly improvised and created various codes of ethics attempting to regulate AI. A major concern is the large homogeneity and presumed consensualism around these principles. While it is true that some ethical doctrines, such as the famous Kantian deontology, aspire to universalism, they are not universal in practice. In fact, ethical pluralism is more about differences in which questions are considered relevant to ask than about different answers to a common question. When people abide by different moral doctrines, they tend to disagree on the very approach to an issue. Even when people from different cultures happen to agree on a set of common principles, it does not necessarily mean that they share the same understanding of these concepts and what they entail. In order to better understand the philosophical roots and cultural context underlying ethical principles in AI, we propose to analyse and compare the ethical principles endorsed by the Chinese National New Generation Artificial Intelligence Governance Professional Committee (CNNGAIGPC) and those elaborated by the European High-level Expert Group on AI (HLEGAI). China and the EU have very different political systems and diverge in their cultural heritages. In our analysis, we wish to highlight that principles that seem similar a priori may actually have different meanings, derive from different approaches, and reflect distinct goals.
