Huapeng Su

3dDepthNet: Point Cloud Guided Depth Completion Network for Sparse Depth and Single Color Image

Mar 20, 2020

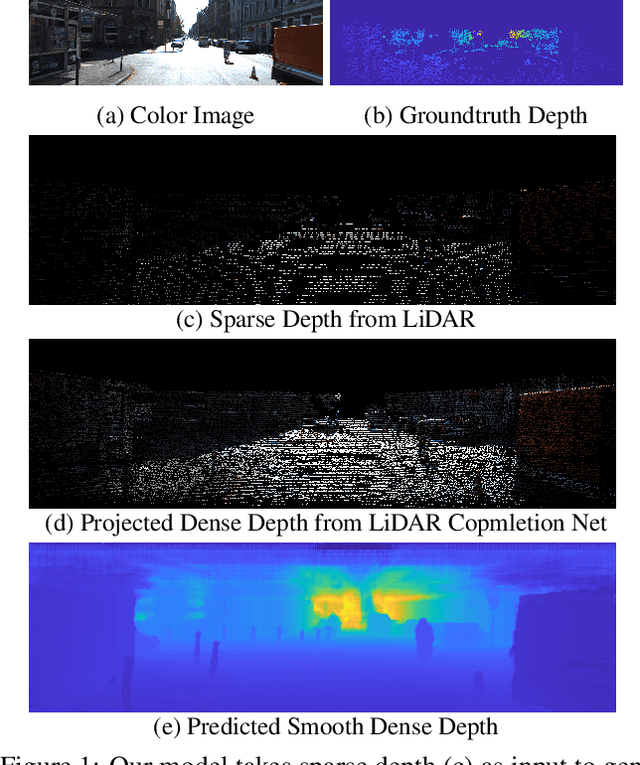

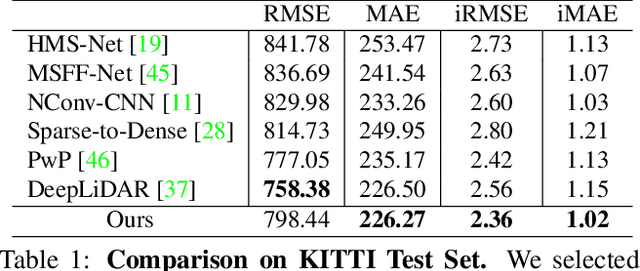

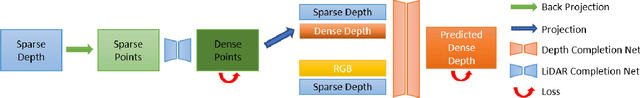

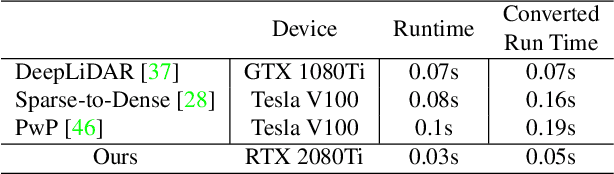

Abstract: In this paper, we propose an end-to-end deep learning network named 3dDepthNet, which produces an accurate dense depth image from a single pair of sparse LiDAR depth and color image for robotics and autonomous driving tasks. Based on the dimensional nature of depth images, our network offers a novel 3D-to-2D coarse-to-fine dual densification design that is both accurate and lightweight. Depth densification is first performed in 3D space via point cloud completion, followed by a specially designed encoder-decoder structure that uses the projected dense depth from 3D completion and the original RGB-D images to perform 2D image completion. Experiments on the KITTI dataset show that our network achieves state-of-the-art accuracy while being more efficient. Ablation and generalization tests show that each module in our network contributes positively to the final results, and furthermore, that our network is resilient to even sparser depth input.
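The 3D-to-2D design relies on moving between a depth image and a point cloud. The following is a minimal sketch of that geometric conversion only, assuming a simple pinhole camera model; it is not the network's learned completion. The function names `backproject` and `project` and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical and chosen for illustration.

```python
def backproject(depth, fx, fy, cx, cy):
    """Lift valid (non-zero) pixels of a sparse depth image to 3D points.

    Assumes a pinhole camera; `depth` is a list of rows, 0 marks a missing value.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0:
                x = (u - cx) * z / fx
                y = (v - cy) * z / fy
                points.append((x, y, z))
    return points


def project(points, height, width, fx, fy, cx, cy):
    """Project 3D points back to a depth image, keeping the nearest point per pixel."""
    depth = [[0.0] * width for _ in range(height)]
    for x, y, z in points:
        if z <= 0:
            continue  # points behind the camera cannot be projected
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            if depth[v][u] == 0.0 or z < depth[v][u]:
                depth[v][u] = z
    return depth
```

In a pipeline of the kind the abstract describes, a point cloud completion step would sit between these two calls, so that the projected depth image is denser than the input before it is passed to the 2D stage.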
