Huanran Xue

Towards Communication-efficient Vertical Federated Learning Training via Cache-enabled Local Updates

Jul 29, 2022

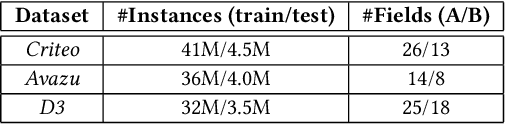

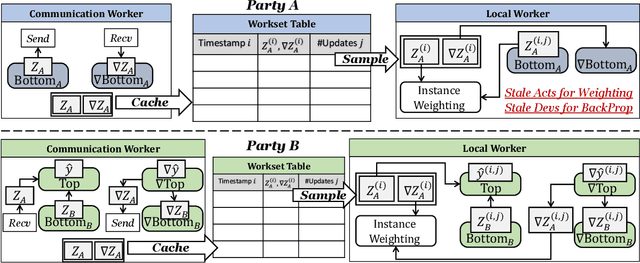

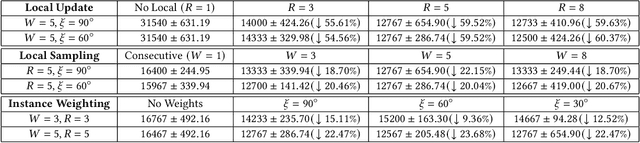

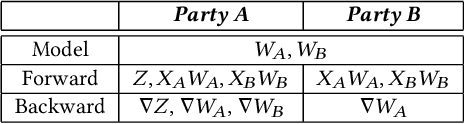

Abstract: Vertical federated learning (VFL) is an emerging paradigm that allows different parties (e.g., organizations or enterprises) to collaboratively build machine learning models with privacy protection. In the training phase, VFL exchanges only the intermediate statistics, i.e., forward activations and backward derivatives, across parties to compute model gradients. Nevertheless, due to its geo-distributed nature, VFL training usually suffers from low WAN bandwidth. In this paper, we introduce CELU-VFL, a novel and efficient VFL training framework that exploits the local update technique to reduce the number of cross-party communication rounds. CELU-VFL caches the stale statistics and reuses them to estimate model gradients without exchanging the ad hoc statistics. We propose two techniques to improve convergence. First, to handle the stochastic variance problem, we propose a uniform sampling strategy to fairly choose the stale statistics for local updates. Second, to harness the errors brought by the staleness, we devise an instance weighting mechanism that measures the reliability of the estimated gradients. Theoretical analysis proves that CELU-VFL achieves a sub-linear convergence rate similar to that of vanilla VFL training but requires far fewer communication rounds. Empirical results on both public and real-world workloads validate that CELU-VFL can be up to six times faster than existing works.
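As a rough illustration of the two ideas named in the abstract (uniform sampling from a cache of stale statistics, and per-instance weights that discount unreliable estimates), here is a minimal sketch. Everything in it is hypothetical: the `StaleStatCache` class, the `instance_weights` function, and in particular the weighting rule (cosine similarity between a cached statistic and its recomputed counterpart, zeroed below a threshold) are assumptions chosen for illustration and are not taken from the abstract.

```python
import math
import random
from collections import deque


class StaleStatCache:
    """Hypothetical fixed-size cache of statistics received from the other party."""

    def __init__(self, capacity):
        # A bounded deque evicts the oldest entry once capacity is reached.
        self.entries = deque(maxlen=capacity)

    def put(self, batch_id, stats):
        self.entries.append((batch_id, stats))

    def sample(self, rng):
        # Uniform sampling: every cached batch is equally likely to be reused.
        return rng.choice(list(self.entries))


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def instance_weights(stale_rows, fresh_rows, threshold=0.5):
    """Assumed rule: weight = cosine similarity, set to 0 below the threshold."""
    weights = []
    for stale, fresh in zip(stale_rows, fresh_rows):
        sim = cosine(stale, fresh)
        weights.append(sim if sim >= threshold else 0.0)
    return weights
```

In a local update step under these assumptions, a party would call `sample` to pick a cached batch, recompute its own statistics with the current local model, and scale each instance's gradient contribution by the weight returned from `instance_weights`.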

BlindFL: Vertical Federated Machine Learning without Peeking into Your Data

Jun 16, 2022

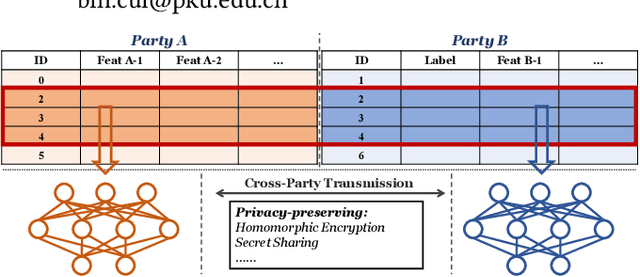

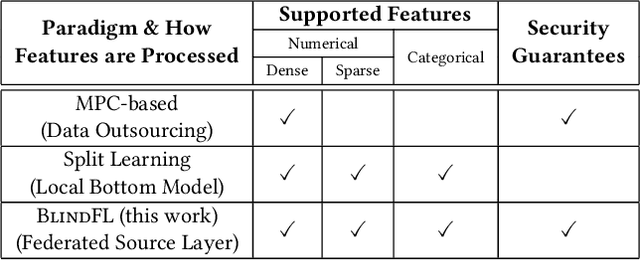

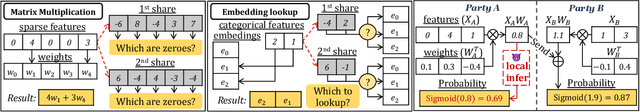

Abstract: Due to rising concerns about privacy protection, how to build machine learning (ML) models over different data sources with security guarantees is gaining popularity. Vertical federated learning (VFL) describes such a case, where ML models are built upon the private data of different participating parties that own disjoint features for the same set of instances, which fits many real-world collaborative tasks. Nevertheless, we find that existing solutions for VFL either support limited kinds of input features or suffer from potential data leakage during the federated execution. To this end, this paper investigates both the functionality and security of ML models in the VFL scenario. Specifically, we introduce BlindFL, a novel framework for VFL training and inference. First, to address the functionality of VFL models, we propose the federated source layers to unite the data from different parties. Various kinds of features can be supported efficiently by the federated source layers, including dense, sparse, numerical, and categorical features. Second, we carefully analyze the security during the federated execution and formalize the privacy requirements. Based on the analysis, we devise secure and accurate algorithm protocols, and further prove the security guarantees under the ideal-real simulation paradigm. Extensive experiments show that BlindFL supports diverse datasets and models efficiently while achieving robust privacy guarantees.
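To make the vertical setting concrete (parties holding disjoint feature columns for the same instances), the sketch below shows that a linear layer over the joined features equals the sum of each party's partial product. The toy data, the variable names, and the `matvec_rows` helper are invented for illustration. This is plain arithmetic only: it is not BlindFL's protocol, and it exchanges partial results in the clear, which the secure protocols described in the abstract are designed to avoid.

```python
def matvec_rows(X, w):
    """Dot product of each row of X with the weight vector w."""
    return [sum(x * wi for x, wi in zip(row, w)) for row in X]


# Party A holds two feature columns, party B holds one, for the same three instances.
X_a = [[1.0, 2.0], [0.0, 1.0], [3.0, 0.0]]
X_b = [[4.0], [5.0], [6.0]]
w_a = [0.5, -1.0]  # weights for A's features
w_b = [2.0]        # weights for B's feature

# Each party computes a partial result on its own columns only.
partial_a = matvec_rows(X_a, w_a)
partial_b = matvec_rows(X_b, w_b)
joint_logits = [a + b for a, b in zip(partial_a, partial_b)]

# Reference: the same layer computed on the (never actually shared) joined table.
X_full = [row_a + row_b for row_a, row_b in zip(X_a, X_b)]
centralized = matvec_rows(X_full, w_a + w_b)
```

Here `joint_logits` and `centralized` hold the same values, which is why a model over vertically partitioned data can be evaluated without either party handing over its raw feature columns.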
