Huanneng Qiu

Crossing the Reality Gap with Evolved Plastic Neurocontrollers

Feb 23, 2020

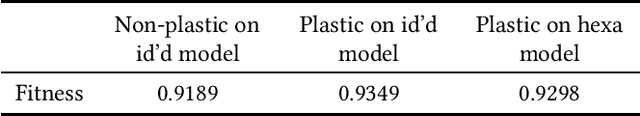

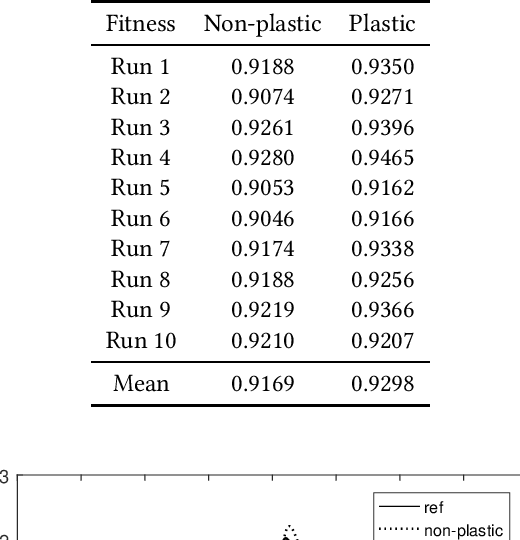

Abstract: A critical issue in evolutionary robotics is the transfer of controllers learned in simulation to reality. This is especially true for small Unmanned Aerial Vehicles (UAVs), as the platforms are highly dynamic and susceptible to breakage. Previous approaches often require highly accurate simulation models; otherwise, significant errors may arise when the well-designed controller is deployed onto the target platform. Here we approach the transfer problem from a different perspective, by designing a spiking neurocontroller that uses synaptic plasticity to cross the reality gap via online adaptation. Through a set of experiments we show that the evolved plastic spiking controller can maintain its functionality by self-adapting to model changes that take place after evolutionary training, and consequently exhibits better performance than its non-plastic counterpart.

Evolving Spiking Neural Networks for Nonlinear Control Problems

Mar 04, 2019

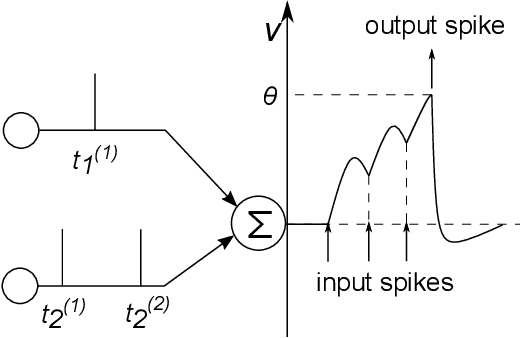

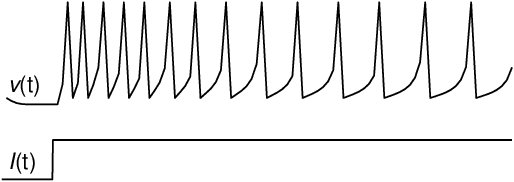

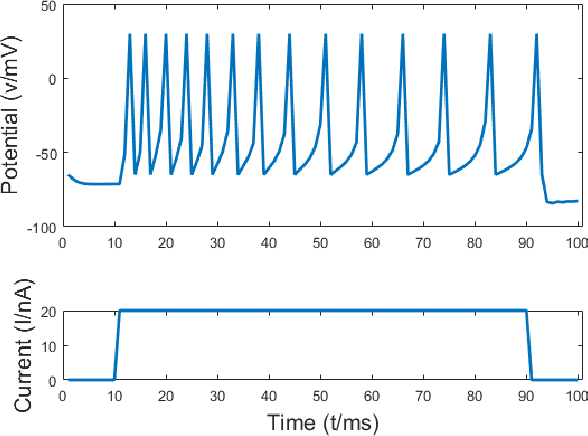

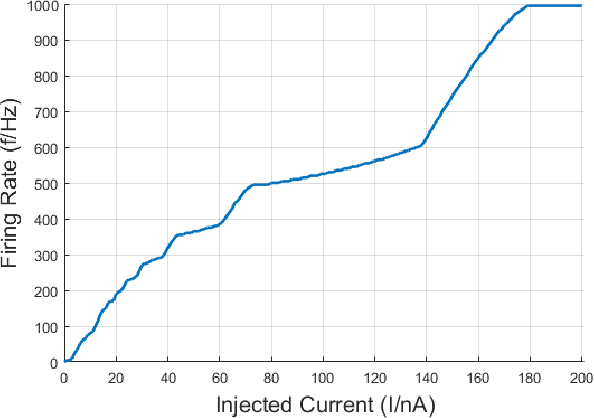

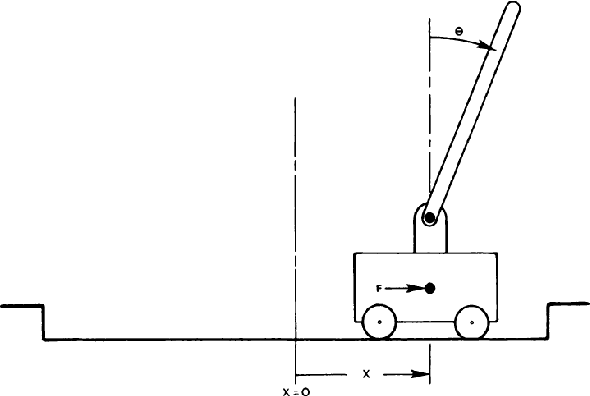

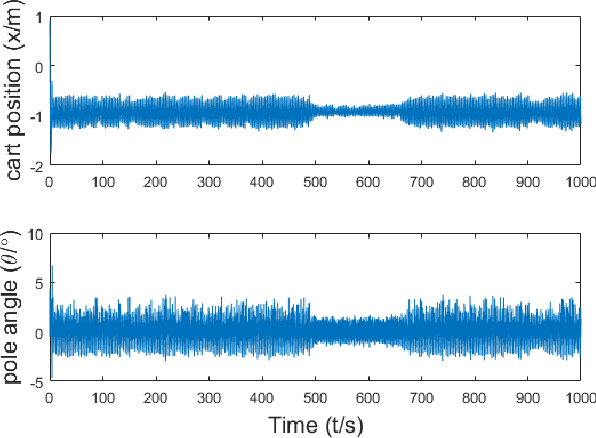

Abstract: Spiking Neural Networks are powerful computational modelling tools that have attracted much interest because of their bioinspired modelling of synaptic interactions between neurons. Most of the research employing spiking neurons has been non-behavioural and discontinuous. In contrast, this paper presents a recurrent spiking controller that is capable of solving nonlinear control problems in continuous domains using a popular topology evolution algorithm as the learning mechanism. We propose two mechanisms necessary for decoding continuous signals from discrete spike transmission: (i) a background current component to maintain frequency sufficiency for spike rate decoding, and (ii) a general network structure that derives strength from topology evolution. We demonstrate that the proposed spiking controller discovers functional solutions significantly faster than sigmoidal neural networks when solving a classic nonlinear control problem.
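To illustrate mechanism (i), the following is a minimal sketch of how a constant background current can keep a spiking neuron firing often enough for a rate code to carry a continuous value. The abstract does not state the neuron model or its parameters, so everything here is an assumption made for illustration: the leaky integrate-and-fire dynamics, the function names `simulate_lif` and `decode_rate`, and all numeric constants.

```python
def simulate_lif(input_current, background_current=0.0, steps=1000, dt=1.0,
                 tau=20.0, v_thresh=1.0, v_reset=0.0):
    """Count spikes of a leaky integrate-and-fire neuron (assumed model).

    Membrane dynamics: dv/dt = (-v + I_input + I_background) / tau,
    integrated with a forward Euler step of size dt.
    """
    v = v_reset
    spikes = 0
    total_current = input_current + background_current
    for _ in range(steps):
        v += dt * (-v + total_current) / tau
        if v >= v_thresh:
            # Threshold crossed: emit a spike and reset the membrane.
            spikes += 1
            v = v_reset
    return spikes


def decode_rate(spike_count, steps, dt=1.0):
    """Decode a continuous value as the mean firing rate (spikes per time unit)."""
    return spike_count / (steps * dt)


# A weak input alone never reaches threshold, so the rate code carries nothing.
silent = simulate_lif(0.5, background_current=0.0)

# The same input with a background current fires regularly, and the decoded
# rate now grows with the input strength.
rate_low = decode_rate(simulate_lif(0.2, background_current=1.0), 1000)
rate_high = decode_rate(simulate_lif(0.8, background_current=1.0), 1000)
```

In this sketch the background current lifts the steady-state membrane potential above threshold, so sub-threshold inputs that would otherwise leave the neuron silent are mapped to distinct firing rates.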
