Huang-Ting Shieh

Retrieval-Augmented Language Model for Extreme Multi-Label Knowledge Graph Link Prediction

May 21, 2024

Abstract: Extrapolation in large language models (LLMs) for open-ended inquiry encounters two pivotal issues: (1) hallucination and (2) expensive training costs. These issues present challenges for LLMs in specialized domains and on personalized data, which require truthful responses and low fine-tuning costs. Existing works attempt to tackle the problem by augmenting the input of a smaller language model with information from a knowledge graph (KG). However, they have two limitations: (1) they fail to extract relevant information from a large one-hop neighborhood in the KG, and (2) they apply the same augmentation strategy to KGs with different characteristics, which may result in low performance. Moreover, open-ended inquiry typically yields multiple responses, further complicating extrapolation. We propose a new task, the extreme multi-label KG link prediction task, to enable a model to perform extrapolation with multiple responses using structured real-world knowledge. Our retriever identifies relevant one-hop neighbors by considering entity, relation, and textual data together. Our experiments demonstrate that (1) KGs with different characteristics require different augmenting strategies, and (2) augmenting the language model's input with textual data improves task performance significantly. By combining the retrieval-augmented framework with a KG, our framework, with a small parameter size, is able to extrapolate based on a given KG. The code can be obtained on GitHub: https://github.com/exiled1143/Retrieval-Augmented-Language-Model-for-Multi-Label-Knowledge-Graph-Link-Prediction.git

Born for Auto-Tagging: Faster and better with new objective functions

Jun 15, 2022

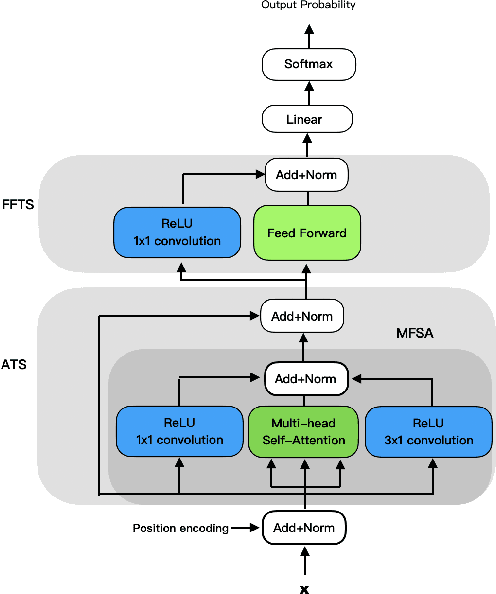

Abstract: Keyword extraction is a text-mining task. It is applied to increase search volume in SEO and ads. Implemented in auto-tagging, it enables efficient and accurate tagging of online articles and photos at massive scale. BAT was invented for auto-tagging and serves awoo's AI marketing platform (AMP). awoo AMP not only provides service as a customized recommender system but also increases the conversion rate in e-commerce. The strength of BAT is that it converges faster and better than other SOTA models: its 4-layer structure achieves the best F scores at 50 epochs. In other words, it outperforms other models that require deeper layers at 100 epochs. To generate rich and clean tags, awoo creates new objective functions that maintain ${\rm F_1}$ scores similar to those obtained with cross-entropy while simultaneously enhancing ${\rm F_2}$ scores. To further improve F scores, awoo revamps the learning rate strategy proposed by Transformer \cite{Transformer} to increase ${\rm F_1}$ and ${\rm F_2}$ scores at the same time.
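The abstract reports results in terms of ${\rm F_1}$ and ${\rm F_2}$ scores. As a reminder of how the two differ, the sketch below computes the standard ${\rm F_\beta}$ score, $(1+\beta^2)PR/(\beta^2 P + R)$, for a single set of predicted tags. It is only an illustration of the metric, not the paper's objective function or evaluation code; the helper name `f_beta` and the example tags are assumptions made here.

```python
def f_beta(predicted, gold, beta=1.0):
    """Standard F-beta score for one predicted tag set against a gold tag set.

    Hypothetical helper for illustration; not taken from the BAT paper.
    """
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    if true_positives == 0:
        # No correct tags: precision and recall are both zero.
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(gold)
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Made-up example: every gold tag is recovered, plus three extra tags.
gold = {"shoes", "running", "sale"}
predicted = {"shoes", "running", "sale", "blue", "gift", "summer"}

f1 = f_beta(predicted, gold, beta=1.0)  # precision and recall weighted equally
f2 = f_beta(predicted, gold, beta=2.0)  # recall weighted more heavily
print(f"F1 = {f1:.3f}, F2 = {f2:.3f}")
```

Because ${\rm F_2}$ weights recall more heavily than precision, a tagger that recovers all gold tags at the cost of some extra ones scores higher on ${\rm F_2}$ than on ${\rm F_1}$, which is consistent with the stated goal of generating rich tags.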
